What is a Neural Network

- Muiz As-Siddeeqi

- Sep 15, 2025

- 6 min read

The Invisible Machine That’s Rewriting the Rules of Everything

It doesn't matter whether you're a founder, marketer, startup dreamer, or a sales executive trying to make sense of all the AI noise in 2025. You’ve probably heard the term neural network tossed around like confetti — in tech conferences, LinkedIn threads, product demos, and startup pitches.

But here's the truth: most people who use the term don’t really understand what it means — and that’s not their fault. It’s because this technology, which is now deeply embedded in everything from TikTok algorithms to Tesla autopilot to Gmail spam detection to sales forecasting tools, is intentionally abstracted, brutally complex, and historically mysterious.

So let’s break it wide open. Let’s demystify it. Using only the truth. Only real examples. Only cited data. No fantasy. No metaphors. No “the brain of the machine” analogies. No made-up illustrations.

Let’s dive straight into it.

The Roots: Where Neural Networks Actually Began (And Why That History Was Ignored for Decades)

The idea behind neural networks is not new at all. It goes all the way back to 1943, when neurophysiologist Warren McCulloch and logician Walter Pitts published a paper titled “A Logical Calculus of the Ideas Immanent in Nervous Activity.” In it, they described a model of artificial neurons — simple decision-making units that could mimic basic logic gates.
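To make the logic-gate claim concrete, here is a minimal Python sketch of a threshold unit in the spirit of the McCulloch-Pitts model. The function names are illustrative, and modeling inhibition with a negative weight is a simplifying assumption: the original paper treated an active inhibitory input as an absolute veto.

```python
def threshold_unit(inputs, weights, threshold):
    """Return 1 when the weighted sum of binary inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0

def and_gate(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return threshold_unit([a, b], [1, 1], threshold=2)

def or_gate(a, b):
    # A single active input is enough to reach a threshold of 1.
    return threshold_unit([a, b], [1, 1], threshold=1)

def not_gate(a):
    # Assumption: inhibition is modeled as a negative weight.
    return threshold_unit([a], [-1], threshold=0)

pairs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_table = [and_gate(a, b) for a, b in pairs]
or_table = [or_gate(a, b) for a, b in pairs]
```

Nothing is learned here. The weights and thresholds are chosen by hand, which is exactly the gap that later work on training had to close.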

Then in 1958, Frank Rosenblatt created the Perceptron, an early type of neural network, funded by the U.S. Navy for image recognition. It was celebrated as a technological marvel — The New York Times even called it “the embryo of an electronic computer that the Navy expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence.”

That prediction turned out to be... premature.

Because in 1969, Marvin Minsky and Seymour Papert published the book Perceptrons, which showed that single-layer perceptrons cannot learn functions that are not linearly separable, such as XOR. Funding dried up and the field went quiet for years. The slowdown is often counted as part of the first AI winter.
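One limitation that Minsky and Papert analyzed is easy to reproduce: a single-layer perceptron can learn AND, but no setting of its weights gets XOR fully right, because XOR is not linearly separable. The sketch below uses the classic perceptron learning rule. The function names, learning rate, and epoch count are illustrative assumptions.

```python
def predict(weights, bias, x):
    """Single-layer perceptron: fire when the weighted sum is positive."""
    return 1 if weights[0] * x[0] + weights[1] * x[1] + bias > 0 else 0

def train_perceptron(samples, epochs=25, lr=1.0):
    """Perceptron learning rule on two binary inputs."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            weights[0] += lr * error * x[0]
            weights[1] += lr * error * x[1]
            bias += lr * error
    return weights, bias

def accuracy(samples, weights, bias):
    hits = sum(predict(weights, bias, x) == target for x, target in samples)
    return hits / len(samples)

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_accuracy = accuracy(AND, *train_perceptron(AND))  # reaches 1.0
xor_accuracy = accuracy(XOR, *train_perceptron(XOR))  # always below 1.0
```

More epochs do not help with XOR. A single line cannot separate its two classes, so at least one of the four points is always misclassified.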

The Resurrection: How Neural Networks Came Back from the Dead

The 1980s changed everything. In 1986, David Rumelhart, Geoffrey Hinton (known today as the “Godfather of Deep Learning”), and Ronald Williams published “Learning representations by back-propagating errors,” the paper that popularized the backpropagation algorithm.

That changed the game.

Backpropagation made it possible to train multi-layered neural networks — meaning instead of just one layer of decision-making, networks could learn abstract features across layers. This is what allowed neural networks to go from toy models to powerful tools.
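As a rough illustration of what training a multi-layer network with backpropagation involves, here is a small pure-Python sketch: one hidden layer, sigmoid activations, and squared-error loss on the XOR problem. The network size, learning rate, seed, and epoch count are arbitrary assumptions, and this is a teaching sketch rather than the 1986 paper's exact setup.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_xor(hidden=3, epochs=2000, lr=0.5, seed=0):
    """Train a 2-input, one-hidden-layer network on XOR; return the loss per epoch."""
    rng = random.Random(seed)
    data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            # Forward pass: input -> hidden layer -> output.
            h = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j])
                 for j in range(hidden)]
            out = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
            total += 0.5 * (out - y) ** 2
            # Backward pass: push the error signal from the output to the hidden layer.
            d_out = (out - y) * out * (1 - out)
            d_h = [d_out * w2[j] * h[j] * (1 - h[j]) for j in range(hidden)]
            # Gradient step on every weight and bias.
            for j in range(hidden):
                w2[j] -= lr * d_out * h[j]
                w1[j][0] -= lr * d_h[j] * x[0]
                w1[j][1] -= lr * d_h[j] * x[1]
                b1[j] -= lr * d_h[j]
            b2 -= lr * d_out
        losses.append(total)
    return losses

losses = train_xor()
```

The line that computes `d_h` is the core of the idea. The output error is passed backward through the second-layer weights, so the hidden layer gets its own training signal.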

But once again, computing power was a problem. Training deep networks was too slow. Datasets were too small. Researchers were underfunded.

And yet, a few kept pushing.

The Real Breakthrough: 2012 and the ImageNet Moment

Now here comes the turning point that changed the entire future of artificial intelligence.

In 2012, at the ImageNet competition (a global image recognition contest involving over 1.2 million labeled images), a neural network model called AlexNet, built by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton (yes, again), crushed the competition.

Its top-5 error rate was about 15%, against about 26% for the runner-up. That was massive.
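For readers unfamiliar with the metric: a prediction counts as a top-5 error when the correct label is not among the model's five highest-scoring classes. A minimal sketch follows. The function names and the toy scores in the usage lines are made up for illustration.

```python
def top_k_error(scores_per_image, true_labels, k=5):
    """Fraction of images whose true label is not among the k highest-scoring classes."""
    misses = 0
    for scores, label in zip(scores_per_image, true_labels):
        ranked = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(true_labels)

def relative_reduction(old_error, new_error):
    """How much of the old error rate was eliminated."""
    return (old_error - new_error) / old_error

# Toy example: 2 images, 3 classes, k=2 instead of 5.
toy_scores = [[0.1, 0.5, 0.4], [0.7, 0.2, 0.1]]
toy_error = top_k_error(toy_scores, true_labels=[2, 2], k=2)

# Going from roughly 26% to roughly 15% removes about 42% of the errors.
drop = relative_reduction(0.26, 0.15)
```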

How did it do that?

- It used deep convolutional neural networks (CNNs)

- It ran on NVIDIA GPUs, tapping into parallel processing

- It relied on ReLU activation, dropout, and data augmentation for faster and better learning

Source: Krizhevsky, A., Sutskever, I., & Hinton, G. (2012). ImageNet Classification with Deep Convolutional Neural Networks — NeurIPS 2012
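Three of the ingredients named above are simple enough to sketch in a few lines of Python. This is an illustration under assumptions, not AlexNet's code. The dropout shown is the "inverted" variant common in current frameworks, which rescales during training, whereas the original paper instead scaled outputs at test time. The horizontal flip stands in for the paper's larger augmentation pipeline.

```python
import random

def relu(values):
    """ReLU: negative activations become zero, positive ones pass through."""
    return [max(0.0, v) for v in values]

def dropout(values, rate, rng, training=True):
    """Inverted dropout: zero each activation with probability `rate`, rescale the rest."""
    if not training or rate == 0.0:
        return list(values)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]

def horizontal_flip(image):
    """Data augmentation: mirror an image stored as a list of pixel rows."""
    return [row[::-1] for row in image]

rng = random.Random(0)
activations = relu([-1.5, 0.0, 2.0, 3.0])
dropped = dropout(activations, rate=0.5, rng=rng)
flipped = horizontal_flip([[1, 2, 3], [4, 5, 6]])
```

Dropout is only active during training. With `training=False` the activations pass through unchanged.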

From this moment on, neural networks were no longer a fringe curiosity. They became the engine of the AI revolution.

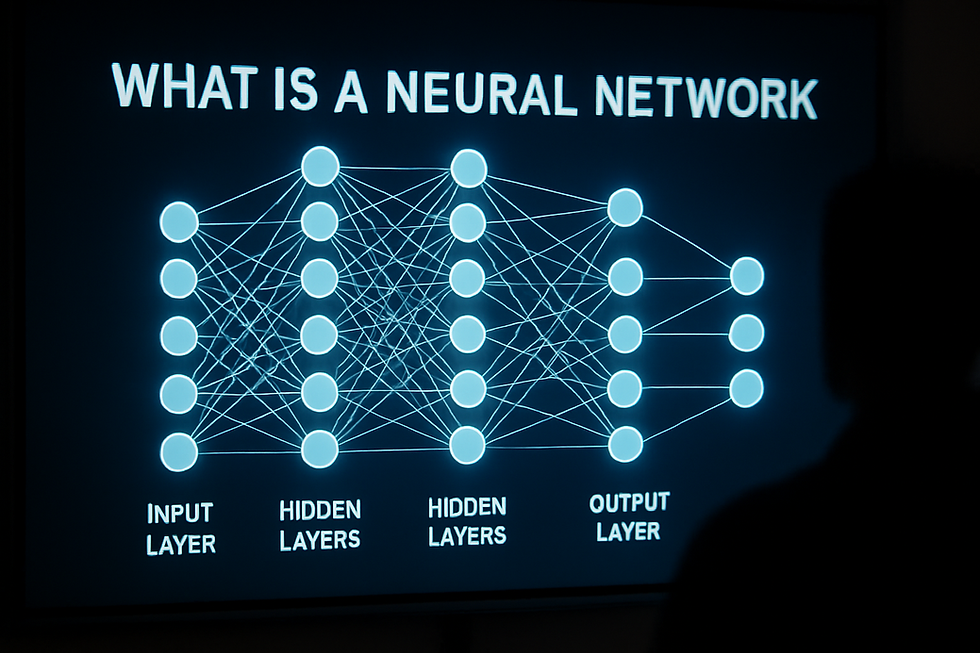

So... What Is a Neural Network Really?

Let’s get brutally honest.

A neural network is a mathematical function approximator. That’s it. It’s a system of layers — where each layer performs weighted calculations on its inputs and passes the results through nonlinear functions (like ReLU, Sigmoid, or Tanh). These outputs then become inputs to the next layer.

Think of it as:

- Input layer → Receives the raw data (numbers, pixels, word embeddings, etc.)

- Hidden layers → Transform the data through weights, biases, and activations

- Output layer → Produces the final prediction or classification
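The three-stage picture above maps almost line for line onto code. Here is a minimal forward pass in plain Python. The layer sizes, weights, and input are arbitrary numbers chosen for illustration, not values from any real model.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One layer: weighted sum plus bias for each unit, then a nonlinearity."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def forward(x, layers):
    """Feed the output of each layer into the next one."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x

layers = [
    # Hidden layer: 2 inputs -> 3 units, ReLU activation.
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1], relu),
    # Output layer: 3 inputs -> 1 unit, sigmoid squashes the result into (0, 1).
    ([[1.0, -1.0, 0.5]], [0.2], sigmoid),
]

prediction = forward([1.0, 2.0], layers)
```

With untrained weights like these, the prediction is meaningless. Training is what turns this fixed arithmetic into something useful.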

The "learning" happens during training. A loss function such as cross-entropy or mean squared error measures how far the predictions are from the correct answers, backpropagation computes how that loss changes with each weight and bias, and gradient descent nudges those parameters in the direction that lowers it.
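Gradient descent is easiest to see on the smallest possible model. The sketch below fits a straight line y = w·x + b by repeatedly stepping against the gradient of the mean squared error. The data, learning rate, and step count are assumptions chosen so the example converges; a real network applies the same update rule to many more parameters.

```python
def mse(preds, targets):
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)

def fit_line(xs, ys, lr=0.05, steps=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        preds = [w * x + b for x in xs]
        # Partial derivatives of the loss with respect to w and b.
        grad_w = (2.0 / n) * sum((p - y) * x for p, y, x in zip(preds, ys, xs))
        grad_b = (2.0 / n) * sum(p - y for p, y in zip(preds, ys))
        # Step against the gradient to reduce the loss.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1

w, b = fit_line(xs, ys)
final_loss = mse([w * x + b for x in xs], ys)
```

Starting from w = 0 and b = 0, the parameters settle very close to 2 and 1, the values that generated the data.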

There is no magic. Just brutal math and massive computation.

Types of Neural Networks: And Who’s Using What in Real Business

1. Feedforward Neural Networks (FNN)

These are the simplest types. No memory. Data flows one way. Used in basic tasks like:

- Email spam detection (used by Google Gmail)

- Sales lead classification in tools like Zoho CRM AI

2. Convolutional Neural Networks (CNNs)

Primarily used in image, video, and visual tasks. Real-world use cases:

- Amazon Rekognition for image tagging and moderation

- Salesforce Einstein Vision for visual product recognition in retail sales workflows
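The operation that gives CNNs their name is a small filter slid across an image. Here is a minimal sketch in plain Python. The tiny image and the hand-written edge filter are made up for illustration, and the operation shown is cross-correlation without padding, which is what deep learning libraries usually mean by "convolution". In a trained CNN the filter values are learned rather than written by hand.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A 3x4 image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# A filter that responds where brightness increases from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, vertical_edge)  # [[0, 2, 0], [0, 2, 0]]
```

The output is nonzero only in the middle column, which is where the dark-to-bright edge sits.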

3. Recurrent Neural Networks (RNNs) and LSTMs

Handle sequence data. Great for time-series, speech, and text. Used in:

- Grammarly’s tone and grammar correction

- Gong.io’s AI sales call analysis (detecting intent, objection, interest, etc.)
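What makes a network "recurrent" is a hidden state that is carried from one step of the sequence to the next. The sketch below shows a plain (non-LSTM) recurrent cell with scalar inputs. The weights and sequences are arbitrary illustrative values, and nothing here reflects how any of the products above are implemented.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b):
    """h_t = tanh(w_x * x_t + W_h @ h_prev + b) for a scalar input x_t."""
    size = len(h_prev)
    return [
        math.tanh(w_x[i] * x_t
                  + sum(w_h[i][j] * h_prev[j] for j in range(size))
                  + b[i])
        for i in range(size)
    ]

def run_rnn(sequence, w_x, w_h, b):
    """Process a sequence one element at a time, carrying the hidden state forward."""
    h = [0.0] * len(b)
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
    return h

w_x = [0.5, -0.3]
w_h = [[0.1, 0.2], [0.3, -0.1]]
b = [0.0, 0.0]

early_signal = run_rnn([1.0, 0.0, 0.0], w_x, w_h, b)
late_signal = run_rnn([0.0, 0.0, 1.0], w_x, w_h, b)
```

The two sequences contain the same values in a different order, yet they produce different final states. That sensitivity to order is the "memory" a feedforward network lacks.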

4. Transformer-based Networks

These power ChatGPT, Google Gemini (formerly Bard), Claude, Microsoft Copilot, and more.

- Based on the 2017 paper Attention Is All You Need by Vaswani et al.

- The largest of these models have billions of parameters and are trained on datasets of up to trillions of tokens

- Used in HubSpot AI Assistant, Notion AI, Jasper.ai, and more

Source: Vaswani, A., et al. (2017). Attention Is All You Need — NeurIPS
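The central operation in that paper is scaled dot-product attention: each query scores every key, the scores are turned into weights with a softmax, and the output is a weighted average of the values. A minimal single-head sketch in plain Python follows. The toy vectors are made up, and real Transformers add learned projections, multiple heads, and masking.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention for lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        outputs.append([
            sum(w * v[c] for w, v in zip(weights, values))
            for c in range(len(values[0]))
        ])
    return outputs

keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]

# This query points along the first key, so the first value dominates the output.
focused = attention([[4.0, 0.0]], keys, values)[0]
# This query matches both keys equally, so the output is the plain average.
balanced = attention([[1.0, 1.0]], keys, values)[0]
```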

Neural Networks in Sales: Real Revenue, Real Use, Real Impact

Let’s ground this in sales technology. Neural networks are not just for big tech. They're inside many of the tools your sales teams are already using:

Gong uses deep neural networks to analyze 100% of sales calls, extracting emotional cues, objection patterns, follow-up triggers, and deal risks.

Gong claims its users win 20% more deals and shorten sales cycles by 18%.

Source: Gong Revenue Intelligence Study 2023

Leverages neural models to identify coachable moments, competitive mentions, and buyer intent signals in meetings.

Cited by Forrester in 2021 as helping sales teams improve rep ramp-up time by 25%

Salesforce Einstein is built with deep learning models for predicting lead scores, suggesting follow-ups, forecasting revenue, and optimizing email send times.

Salesforce reported that companies using Einstein saw an average 43% increase in sales productivity.

Source: Salesforce Customer Success Metrics 2022

The Rise of Neural Network Hardware: Why GPUs & TPUs Became Billion-Dollar Assets

The explosion of deep learning led to one of the biggest technology investment shifts of the decade: hardware.

NVIDIA’s data center revenue grew from about $3 billion in fiscal 2020 to $47.5 billion in fiscal 2024, largely driven by demand for AI training chips like the A100 and H100.

Source: NVIDIA FY2024 Annual Report

Google launched TPUs (Tensor Processing Units), custom silicon designed for neural network workloads and first used with TensorFlow

Amazon AWS offers Inferentia chips optimized for neural inference at scale — used by companies like Snap and Airbnb

Neural Network Failures: The Truth We Shouldn't Ignore

Neural networks are not infallible. In fact, they’re infamous for being black boxes, vulnerable to bias, and requiring massive energy and data.

In 2018, Reuters reported that Amazon had scrapped an experimental machine-learning recruiting tool after it learned to penalize resumes that included the word "women's", a result of bias in the historical hiring data it was trained on.

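In July 2023, OpenAI temporarily disabled ChatGPT’s Browse with Bing feature after finding that it could display content in ways the company did not intend, such as the full text of paywalled pages. It is a reminder that deployed models can behave in ways their builders did not plan for.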

Source: OpenAI Safety Announcements, 2023

Final Word: Why Understanding Neural Networks Isn’t Optional Anymore

If your business uses sales software, eCommerce platforms, CRM systems, email tools, or analytics dashboards, then you’re already using neural networks — whether you know it or not.

They’re deciding who gets your emails.

They’re analyzing your sales reps’ performance.

They’re ranking leads in your CRM.

They’re predicting which customer is about to churn.

They’re even determining ad bid strategies on Google and Facebook.

Neural networks aren’t coming. They’re here. And they’re driving the revenue engines of the most powerful companies on Earth.
