What is Voice Analytics: The Complete Guide to Understanding Customer Conversations

- Muiz As-Siddeeqi

- Aug 28, 2025

- 6 min read

We’ve all been there.

That one sales call where everything seemed to go perfectly. The prospect laughed, agreed, nodded… yet never converted. Another call—full of hesitation, objections, long pauses—somehow turned into a closed deal. What exactly went right? Or wrong? We think we know. But we don’t. Not really. Not without data. Not without voice analytics.

Welcome to the world where machines don’t just listen—they understand. Where every “uhm”, every silence, every pitch change, every “yes, but…” is dissected, decoded, and transformed into deeply actionable insight.

This is not science fiction. This is the real, rapidly exploding, billion-dollar industry called voice analytics in customer conversations. And it's transforming sales, customer service, and enterprise communication at a speed that no business can afford to ignore anymore.

We’re not here to give you fluff. We're not here to bring you recycled theories, imagined use cases, or hypothetical examples. We’re about to take you into the real, the raw, and the researched world of voice analytics—backed by facts, backed by data, backed by real case studies, real brands, and real outcomes.

Let’s begin.

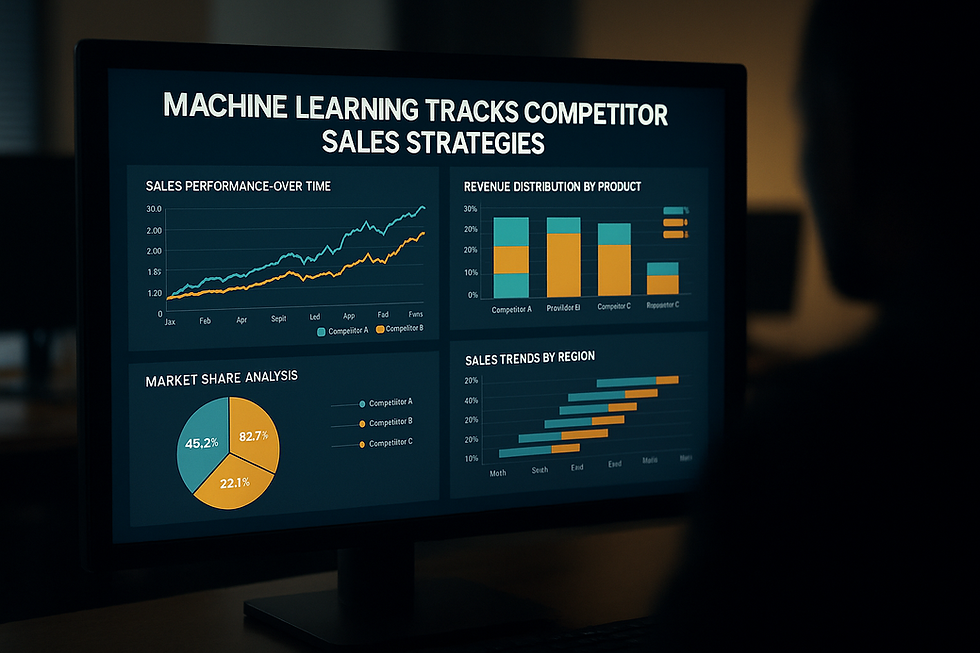

The DNA of Voice Analytics: What It Really Is (No Guesswork)

Voice analytics, in its simplest real-world definition, is the use of artificial intelligence and machine learning algorithms to analyze the audio of conversations. Not just the words (that’s transcription)—but how those words were spoken, when they were spoken, who said what, in what tone, with what emotion, how often they paused, if they sounded confident, nervous, deceptive, angry, satisfied, and beyond.

The two core branches:

Acoustic Analysis: Focused on tone, pitch, speech rate, intonation, stress, silence patterns.

Linguistic Analysis: Focused on sentiment, intent, keywords, interruptions, repetition, escalation signals, etc.
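To make the acoustic branch concrete, here is a minimal sketch of one of the simplest acoustic signals: how much of a call is silence. It is an illustration only, not how any vendor named in this guide works. The function names, the 50 ms frame size, and the energy threshold are all assumptions chosen for the example, and the "call" is a synthetic tone rather than real speech.

```python
import math

def frame_rms(samples, frame_size):
    """Root-mean-square energy of each non-overlapping frame."""
    frames = [samples[i:i + frame_size]
              for i in range(0, len(samples) - frame_size + 1, frame_size)]
    return [math.sqrt(sum(s * s for s in f) / frame_size) for f in frames]

def silence_ratio(samples, frame_size, threshold):
    """Fraction of frames whose RMS energy falls below the threshold."""
    energies = frame_rms(samples, frame_size)
    silent = sum(1 for e in energies if e < threshold)
    return silent / len(energies)

# Synthetic 3-second "call" at 8 kHz (assumed values, for illustration):
# 1 s of a 200 Hz tone, 1 s of silence, 1 s of tone.
SAMPLE_RATE = 8000
tone = [0.5 * math.sin(2 * math.pi * 200 * n / SAMPLE_RATE)
        for n in range(SAMPLE_RATE)]
silence = [0.0] * SAMPLE_RATE
signal = tone + silence + tone

# 400 samples at 8 kHz = 50 ms frames; threshold picked by hand for this signal.
ratio = silence_ratio(signal, frame_size=400, threshold=0.01)
print(f"Silent share of the call: {ratio:.0%}")
```

Real systems layer pitch tracking, speech rate, and learned models on top of this kind of frame-level energy, and a fixed threshold like the one above would need tuning for noisy lines.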

This isn’t new tech. Intelligence agencies have used variants of this for decades. What’s new is that cloud computing, cheaper AI models, and business APIs have finally made it available to every enterprise.

From Surveillance to Sales Floor: The True Origin Story

Let’s trace the real-world origins of voice analytics.

2001, post-9/11 era: Voice stress analysis (VSA) and Layered Voice Analysis (LVA) tools were used in national security to detect deception in surveillance audio.

2011: Call centers began experimenting with sentiment analysis layered over voice using companies like NICE and Verint.

2016-2020: With the rise of deep learning, companies like Cogito, CallMiner, and Observe.AI brought AI-powered voice insights into real-time sales calls.

And then COVID-19 happened. Every sales rep went remote. Every customer interaction became a Zoom or phone call. Suddenly, understanding voice—not just words—became the key to performance.

Why the Global Market is Exploding

The global voice analytics market was valued at USD 1.45 billion in 2023 and is projected to reach USD 5.8 billion by 2030, growing at a CAGR of over 21.3%, according to Grand View Research’s 2024 report.
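As a quick sanity check on those figures, the sketch below computes the compound annual growth rate implied by the two endpoints, and the 2030 value implied by a 21.3% rate. It assumes simple annual compounding over the seven years from 2023 to 2030. The report's own base year and method may differ, so treat this as arithmetic on the quoted numbers, not a reproduction of the forecast.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1

def project(start_value, rate, years):
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years

YEARS = 2030 - 2023  # assumed compounding window: 7 years

cagr = implied_cagr(1.45, 5.8, YEARS)
projected = project(1.45, 0.213, YEARS)

print(f"CAGR implied by USD 1.45B -> 5.8B: {cagr:.1%}")
print(f"2030 value at exactly 21.3%: USD {projected:.2f}B")
```

Under these assumptions the endpoints imply roughly 21.9% a year, and compounding at exactly 21.3% lands near USD 5.6 billion, which is consistent with the "over 21.3%" wording.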

What’s causing this explosion?

Remote Sales: 78% of B2B buyers now prefer remote or digital sales interactions (McKinsey, 2023).

Agent Burnout: Voice analytics is being used to detect emotional fatigue and burnout in support agents—resulting in retention improvements of up to 15% (IBM Watson report, 2022).

Compliance Requirements: Voice-based compliance detection (like checking if T&Cs were explained) has become critical for regulated industries—especially finance, telecom, and insurance.

This isn’t about vanity dashboards anymore. Voice analytics is now a compliance tool, a coaching engine, a sales accelerator, and a customer happiness radar—all in one.

Real Use Cases That Are Live Right Now

We are not going to use “John” or “Jane” from “Company X”. We are bringing you real companies, real tools, and real numbers.

1. CallMiner and Humana Health Insurance

Humana, one of the largest US health insurance companies, uses CallMiner Eureka to analyze over 100,000 call hours per month.

Result? They reduced their compliance risk by 48% and improved call center efficiency by 15%.

[Source: CallMiner Case Study, 2021]

2. Cogito and MetLife

MetLife integrated Cogito AI, which delivers real-time voice guidance to agents based on vocal cues.

Cogito’s AI listens to calls and nudges the rep with prompts like “show empathy” or “let customer speak”.

Result? Customer satisfaction increased by 13%, while agent satisfaction rose by 28%.

[Source: Forbes Interview with MetLife CTO, 2019]

3. Observe.AI and Accolade

Accolade, a healthcare advocacy platform, leveraged Observe.AI for real-time coaching.

With voice analytics and sentiment mapping, they improved sales conversion rates by 21% within 6 months.

[Source: Observe.AI Case Study, 2022]

These are not “innovations”. These are live business systems already delivering ROI.

Voice ≠ Text: Why Transcription Alone Fails

Let’s bust a massive myth.

Transcription ≠ understanding the conversation.

Imagine this line:

“I’m happy with the service.”

Said warmly? Great feedback.

Said sarcastically, under breath? Potential churn risk.

Said after a long pause and sigh? Mixed feelings.

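Text alone captures only a fraction of the message. The figure most often cited comes from Albert Mehrabian’s 1967 studies, which looked at how people judge feelings and attitudes when words and tone conflict. In that narrow setting, the weight given to each channel was roughly: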

7% verbal (words)

38% vocal (tone)

55% visual (body language)

In voice-only interactions like phone calls, tone carries most of the emotional signal. That is the vocal channel voice analytics captures, and the one a transcript misses entirely.

The Technologies Powering Voice Analytics

Here’s the real tech stack behind modern voice analytics:

ASR (Automatic Speech Recognition): Turns speech into text. Tools: Google Speech API, Amazon Transcribe, Microsoft Azure Speech.

NLP (Natural Language Processing): Analyzes the words for sentiment, entities, etc.

Speech Emotion Recognition (SER): Analyzes tone, pitch, stress.

Acoustic AI: Identifies patterns like silence gaps, cross-talk, escalation triggers.

And the machine learning backbones?

CNNs for spectral analysis

RNNs and LSTMs for sequential speech modeling

BERT and its variants for contextual NLP

Audio transformers (like Wav2Vec 2.0) for pre-trained voice embeddings

Voice analytics is not “one AI tool”. It’s a carefully orchestrated symphony of acoustic, linguistic, and behavioral intelligence.
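The way these layers fit together can be sketched as a small pipeline: ASR turns audio into text, NLP works on that text, and emotion recognition and acoustic analysis work on the audio itself. Everything in this sketch is hypothetical. `CallInsight`, `analyze_call`, and the placeholder lambdas are invented for illustration and stand in for real models or vendor services. Nothing here reflects any specific product's API.

```python
from dataclasses import dataclass
from typing import Any

@dataclass
class CallInsight:
    transcript: str  # produced by the ASR stage
    language: Any    # NLP output, computed from the transcript
    emotion: Any     # SER output, computed from the audio
    acoustic: Any    # acoustic pattern output, computed from the audio

def analyze_call(audio, asr, nlp, ser, acoustic):
    """Run the four stages and merge their outputs.

    Each stage is passed in as a callable so real models can be
    swapped in without changing the orchestration.
    """
    transcript = asr(audio)  # text depends on audio
    return CallInsight(
        transcript=transcript,
        language=nlp(transcript),  # linguistic branch reads the text
        emotion=ser(audio),        # acoustic branches read the raw audio
        acoustic=acoustic(audio),
    )

# Placeholder stages (hypothetical): a real system would call actual models here.
insight = analyze_call(
    audio=b"\x00\x01",
    asr=lambda a: "i'm happy with the service",
    nlp=lambda text: {"sentiment": "positive" if "happy" in text else "neutral"},
    ser=lambda a: {"emotion": "unknown"},
    acoustic=lambda a: {"audio_bytes": len(a)},
)
print(insight)
```

The design point is the data flow: only the NLP stage depends on the transcript, so the audio-side stages can run independently of ASR quality.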

Challenges You Won’t Hear in Sales Pitches

Let’s get brutally honest.

Voice analytics—despite all the buzz—comes with real challenges you must know before adoption:

Accents & Noise Interference: Accuracy drops significantly with regional accents or low-quality calls.

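GDPR & Data Privacy: Call recordings are personal data under GDPR, and voice data processed to uniquely identify a person counts as biometric data, a special category with stricter rules. Tools must offer anonymization, data encryption, and audit trails. Companies like Speechmatics and Deepgram are leading in GDPR-safe voice processing.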

Bias in Models: Some models may misread emotional cues across different genders or ethnic groups. Real problem. Real lawsuits have happened.

Real-Time is Costly: Running real-time voice AI costs far more than batch analysis. And not every use case needs real-time.

Enter informed. Not starry-eyed.

Who’s Dominating the Market (And What They Offer)

Here’s a breakdown of major real vendors and their strengths:

| Company | Known For | Clients (Verified) |
| --- | --- | --- |
| CallMiner | Post-call analytics, compliance | Humana, Synchrony |
| Observe.AI | Real-time coaching, QA automation | Accolade, Concentrix |
| Cogito | Real-time emotional intelligence | MetLife, Blue Cross |
| Verint | Contact center compliance & insights | Vodafone, Telus |
| NICE Nexidia | Deep analytics, large-scale data | BT, T-Mobile |

All of these integrate with CRMs like Salesforce and Zendesk, and with contact center software like Five9 and Genesys.

Actual Sales Conversation Metrics Tracked (With Definitions)

Most people have no clue what voice analytics actually measures. So let’s break it down with documented, real features:

Talk-to-Listen Ratio: Are reps talking too much? Ideal is around 43:57.

Longest Monologue: The longest stretch a rep talks without the customer speaking. Long monologues usually mean a one-sided call.

Silence Duration: Long silences signal hesitation or confusion.

Positive vs. Negative Sentiment Ratio: The share of positively toned moments in a call compared with negatively toned ones.

Interruptions Count: Overlapping voices = tension.

Agent Empathy Score: Calculated from tone modulations, language choice, pacing.
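Several of the metrics above can be computed from nothing more than a speaker-labelled timeline of the call. The sketch below assumes diarization has already happened and that each segment carries a speaker label plus start and end times in seconds. The `Segment` type, the `"rep"` and `"customer"` labels, and the rule that any cross-speaker overlap counts as an interruption are assumptions made for this example, not definitions taken from Observe.AI or CallMiner.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    speaker: str  # assumed labels: "rep" or "customer"
    start: float  # seconds from the start of the call
    end: float

def conversation_metrics(segments):
    """Talk share, longest rep monologue, longest silence, interruption count."""
    segs = sorted(segments, key=lambda s: s.start)

    talk = {"rep": 0.0, "customer": 0.0}
    for s in segs:
        talk[s.speaker] += s.end - s.start
    total = talk["rep"] + talk["customer"]

    # Treats each diarized segment as one uninterrupted turn.
    longest_monologue = max(
        (s.end - s.start for s in segs if s.speaker == "rep"), default=0.0
    )

    longest_silence = 0.0
    interruptions = 0
    for prev, cur in zip(segs, segs[1:]):
        gap = cur.start - prev.end
        if gap > 0:
            longest_silence = max(longest_silence, gap)
        elif gap < 0 and cur.speaker != prev.speaker:
            interruptions += 1  # next speaker started before the previous one finished

    return {
        "rep_talk_share": talk["rep"] / total if total else 0.0,
        "longest_rep_monologue_s": longest_monologue,
        "longest_silence_s": longest_silence,
        "interruptions": interruptions,
    }

call = [
    Segment("rep", 0.0, 10.0),
    Segment("customer", 9.0, 20.0),   # starts 1 s before the rep finishes
    Segment("rep", 23.0, 30.0),       # follows a 3 s silence
    Segment("customer", 30.0, 42.0),
]
metrics = conversation_metrics(call)
print(metrics)
```

In this toy call the rep holds 42.5% of the talk time, close to the 43:57 ratio mentioned above. Sentiment ratio and empathy score are left out on purpose, since they need trained models rather than timestamps.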

These are not imaginary KPIs. These are what real tools like Observe.AI and CallMiner extract every day.

The Future Is Not Far. It’s Now.

Gartner’s 2024 Emerging Technologies Report highlights “emotion AI”—of which voice analytics is a core part—as one of the top 10 transformative technologies of the next 5 years.

By 2026, Gartner predicts:

“75% of customer service interactions will be analyzed using AI voice and sentiment analytics.”

[Source: Gartner Emerging Tech Report, May 2024]

That’s not a trend. That’s a new standard.

Final Word: Why You Can’t Afford to Ignore This

Every single call your sales team makes carries signals. Most of them are never seen, heard, or acted on. That’s not just data loss—that’s revenue loss. That’s opportunity loss. That’s the loss of customer trust and satisfaction that could have been saved… if only you’d heard the unsaid.

Voice analytics isn’t the future. It’s the forgotten dimension of today’s customer understanding.

And now that you know what’s possible, the only question left is:

Will your business keep guessing—or start truly listening?
