What Is Machine Learning (ML)? The Complete 2026 Guide to AI's Foundational Technology

- Muiz As-Siddeeqi

- Jan 8

- 30 min read

Every time Netflix suggests your next show, Gmail filters spam, or your phone recognizes your face, machine learning is working behind the scenes. This technology has exploded from a $35.32 billion market in 2024 to a projected $309.68 billion by 2032 (Fortune Business Insights, 2024). But what exactly is machine learning? How does it differ from traditional programming? And why are companies worldwide racing to implement it? This guide cuts through the hype to reveal the real story of machine learning—from its 1950s origins with Alan Turing and Arthur Samuel to today's deep learning revolution transforming industries from healthcare to finance.

Don’t Just Read About AI — Own It. Right Here

TL;DR

Machine learning enables computers to learn from data without explicit programming, using patterns to make predictions

Three main types exist: supervised learning (learns from labeled data), unsupervised learning (finds patterns in unlabeled data), and reinforcement learning (learns through trial and error)

The global ML market reached $35.32 billion in 2024 and is growing at 30.5% annually toward $309.68 billion by 2032

Real applications include Netflix recommendations, Amazon's dynamic pricing, Tesla's self-driving systems, and healthcare diagnostics

Deep learning breakthrough happened in 2012 when AlexNet won ImageNet with 15.3% error rate, beating competitors by 10.9 percentage points

Key challenges include data quality, computational costs, model interpretability, and potential bias in algorithms

What Is Machine Learning?

Machine learning is a type of artificial intelligence that enables computer systems to automatically learn and improve from experience without being explicitly programmed. Instead of following rigid instructions, ML algorithms analyze data, identify patterns, and make decisions or predictions based on those patterns. The system becomes more accurate over time as it processes more data.

Table of Contents

What Is Machine Learning?

Machine learning is a subset of artificial intelligence that gives computers the ability to learn from data and improve their performance over time without being explicitly programmed for every task. Unlike traditional software that follows predetermined rules, ML systems discover patterns in data and use those patterns to make predictions or decisions.

Think of it this way: traditional programming is like following a recipe step by step. Machine learning is like learning to cook by tasting hundreds of dishes, figuring out what ingredients work together, and creating your own recipes based on that experience.

The field has exploded in recent years. According to Grand View Research (2024), the global machine learning market was valued at $55.80 billion in 2024 and is projected to reach $282.13 billion by 2030, growing at a compound annual growth rate of 30.4%. North America dominated with a 29% market share in 2024, but Asia Pacific is experiencing the fastest growth.

Machine learning powers countless technologies you use daily. When Spotify recommends songs, when banks detect fraud, when medical imaging systems identify diseases, or when search engines understand your queries—that's machine learning at work.

How Machine Learning Differs from Traditional Programming

Traditional programming and machine learning approach problems from opposite directions.

In traditional programming, a human developer writes explicit rules. If you wanted to identify spam email, you'd program specific conditions: "If the email contains certain keywords AND comes from an unknown sender AND has suspicious links, THEN mark as spam." Every scenario requires a new rule.

Machine learning flips this model. You feed the system thousands of examples of spam and legitimate emails. The algorithm finds patterns on its own—maybe spam emails use certain word combinations at specific frequencies, or originate from particular server types. The system creates its own internal rules, often discovering patterns humans never noticed.

This difference matters enormously. Traditional programs handle well-defined, rule-based problems efficiently. Machine learning excels at complex, pattern-based challenges where rules are unclear or too numerous to code manually.

Consider facial recognition. Writing rules to identify a specific person's face across different angles, lighting conditions, and expressions would require millions of lines of code—and still fail regularly. A machine learning system simply studies thousands of photos and learns to recognize distinctive features automatically.

A Brief History: From Turing to Today

The Foundations (1940s-1950s)

Machine learning's roots trace back to 1943 when Warren McCulloch and Walter Pitts developed the first computational model of a neural network, inspired by how the brain processes information (Sanfoundry, 2025).

In 1950, Alan Turing published "Computing Machinery and Intelligence" in Mind magazine, asking the provocative question: "Can machines think?" He introduced the Turing Test, a benchmark still referenced today for evaluating machine intelligence (Britannica, 2024).

The breakthrough came in 1952. Arthur Samuel, an IBM computer scientist, created a checkers-playing program for the IBM 701 that learned and improved through experience. Samuel coined the term "machine learning" in 1959 to describe this new approach to computing (Linode, 2022). His program eventually beat the Connecticut state checkers champion in 1961—the first time a machine defeated a human in state-level competition.

The Neural Network Era (1950s-1960s)

Marvin Minsky and Dean Edmonds built SNARC in 1951, the first artificial neural network using 3,000 vacuum tubes to simulate 40 neurons (TechTarget, 2024). Frank Rosenblatt developed the Perceptron in 1957, an early neural network that could learn from data and became the foundation for modern neural networks.

The term "artificial intelligence" was officially coined by John McCarthy in 1956 during the Dartmouth Workshop, widely recognized as the founding event of AI as a field (Pigro AI, 2024).

The AI Winter (1970s-1980s)

Progress stalled in the 1970s. Marvin Minsky and Seymour Papert published "Perceptrons" in 1969, describing limitations of simple neural networks. This led to reduced funding and what became known as the "AI Winter" (TechTarget, 2024).

Despite the chill, important work continued. Arthur Bryson and Yu-Chi Ho described backpropagation learning algorithms in the 1970s, enabling multilayer neural networks. Kunihiko Fukushima released work on neocognitron in the 1980s, a hierarchical neural network for pattern recognition.

The Revival (1990s-2000s)

The 1990s marked a turning point. Statistical methods like Support Vector Machines, decision trees, and Bayesian models enabled more accurate predictions (Sanfoundry, 2025). The growth of the internet provided vast datasets, while hardware improvements made training complex algorithms practical.

Deep learning research advanced steadily. Companies like Google, Amazon, and Facebook generated massive datasets that accelerated ML research. Researchers like Geoffrey Hinton developed deep neural networks that outperformed traditional ML in image and speech recognition.

The Deep Learning Revolution (2012-Present)

The modern era began on September 30, 2012, when AlexNet won the ImageNet Large Scale Visual Recognition Challenge. The convolutional neural network achieved a 15.3% error rate, crushing the competition by 10.9 percentage points (Wikipedia, 2025). This wasn't just a victory—it was proof that deep learning worked at scale.

AlexNet's success sparked an explosion of AI research and investment. According to market research (SkyQuestTechnology, 2025), global corporate investments in AI reached $252.3 billion in 2024, with private investment rising 44.5% year-over-year.

The Three Core Types of Machine Learning

Machine learning divides into three primary categories, each with distinct learning methods and applications.

Supervised learning trains models on labeled data—examples where you know the correct answer. The algorithm studies input-output pairs and learns to map new inputs to outputs.

How it works: You feed the system thousands of images labeled "cat" or "dog." The algorithm identifies distinguishing features—whisker patterns, ear shapes, body proportions. When shown a new image, it predicts whether it's a cat or dog based on learned patterns.

Two main tasks:

Classification: Categorizing data into predefined groups (spam vs. legitimate email, disease present or absent)

Regression: Predicting continuous numerical values (house prices, stock prices, temperature forecasts)

Common algorithms: Linear regression, logistic regression, decision trees, random forests, support vector machines (SVM), neural networks

Real applications: Email spam filtering, medical diagnosis, credit scoring, facial recognition, sentiment analysis, fraud detection

Unsupervised learning finds hidden patterns in unlabeled data. The algorithm receives no correct answers—it must discover structure independently.

How it works: You give the system customer purchase data without labels. The algorithm identifies groups of customers with similar buying behaviors, revealing natural segments you didn't know existed.

Main tasks:

Clustering: Grouping similar data points (customer segmentation, gene sequence analysis)

Dimensionality reduction: Simplifying complex data while preserving important patterns

Anomaly detection: Identifying unusual patterns that don't fit normal behavior

Common algorithms: K-means clustering, hierarchical clustering, principal component analysis (PCA), autoencoders

Real applications: Customer segmentation, market basket analysis, anomaly detection, recommendation systems, compression

Reinforcement learning involves an agent learning through interaction with an environment. The agent takes actions, receives rewards or penalties, and learns to maximize long-term success through trial and error.

How it works: Imagine teaching a robot to walk. It tries different leg movements. If it stays upright, it gets a reward. If it falls, it gets a penalty. Over thousands of attempts, it learns which actions lead to stable walking.

Key concepts:

Agent: The learning system making decisions

Environment: The world the agent interacts with

Actions: Choices the agent can make

Rewards: Feedback signal indicating action quality

Common algorithms: Q-learning, SARSA, Deep Q-Networks (DQN), policy gradient methods

Real applications: Game playing (AlphaGo, chess engines), robotics, autonomous vehicles, resource optimization, dynamic pricing

Hybrid Approaches

Modern systems often combine multiple learning types. Semi-supervised learning uses small amounts of labeled data with large amounts of unlabeled data—useful when labeling is expensive. Self-supervised learning, a newer approach, trains systems to solve pretext tasks that generate their own labels.

According to MIT Sloan research (June 2025), 64% of senior data leaders believe generative AI—which often combines supervised and unsupervised techniques—has potential to be the most transformative technology in a generation.

How Machine Learning Actually Works: Step-by-Step

Machine learning follows a consistent workflow regardless of the specific algorithm or application.

Step 1: Problem Definition

Define exactly what you're trying to predict or decide. Are you classifying, predicting numbers, grouping, or optimizing? Clear problem definition determines everything that follows.

Example: "Predict whether a loan applicant will default within 12 months."

Step 2: Data Collection

Gather relevant data. Quality matters more than quantity, though you often need both. Data sources might include databases, sensors, web scraping, APIs, or manual collection.

Example: Collect 50,000 past loan applications with details on income, credit history, employment, and whether they defaulted.

Step 3: Data Preparation

Raw data is messy. This step typically consumes 60-80% of project time. Tasks include:

Cleaning: Remove duplicates, fix errors, handle missing values

Transformation: Convert data into usable formats

Feature engineering: Create new variables that might improve predictions

Splitting: Divide data into training set (to teach the model) and test set (to evaluate it)

Step 4: Model Selection

Choose appropriate algorithm(s) based on your problem type, data characteristics, and performance requirements. You often test multiple approaches.

For supervised learning problems:

Classification: Logistic regression, decision trees, random forests, neural networks

Regression: Linear regression, polynomial regression, neural networks

For unsupervised problems:

Clustering: K-means, DBSCAN, hierarchical clustering

Dimensionality reduction: PCA, t-SNE, autoencoders

Step 5: Training

Feed training data into the algorithm. The model adjusts internal parameters to minimize prediction errors. Training continues through multiple iterations (epochs) until performance stabilizes.

During training, the model:

Makes predictions on training data

Compares predictions to actual outcomes

Calculates error (loss)

Adjusts parameters to reduce error

Repeats thousands or millions of times

Step 6: Evaluation

Test the trained model on data it has never seen. Compare predictions to actual outcomes using metrics like:

Classification: Accuracy, precision, recall, F1-score

Regression: Mean absolute error, root mean squared error, R-squared

Clustering: Silhouette score, Davies-Bouldin index

If performance is unsatisfactory, return to earlier steps—adjust features, try different algorithms, collect more data.

Step 7: Hyperparameter Tuning

Fine-tune model settings (hyperparameters) that control learning behavior. Use techniques like grid search or random search to find optimal configurations.

Step 8: Deployment

Integrate the model into production systems where it makes real-time predictions on new data. Set up monitoring to track performance and detect issues.

Step 9: Monitoring and Maintenance

Models degrade over time as the world changes. Continuously monitor performance. Retrain periodically with new data. Update or replace models when performance drops.

According to industry research (Itransition, 2024), 42% of enterprise-scale companies actively use AI in their business, with an additional 40% exploring AI implementation. Proper implementation of this workflow is critical to joining these numbers successfully.

Real-World Case Studies

Case Study 1: Siemens Predictive Maintenance

Problem: Siemens needed to reduce unscheduled downtime and maintenance costs for industrial equipment across manufacturing facilities.

Solution: Siemens deployed predictive maintenance systems using machine learning to analyze real-time sensor data from equipment. Algorithms process signals and patterns indicating wear, tear, or deviations from normal operating conditions (DigitalDefynd, 2024).

Implementation: The system integrates historical data with real-time sensor input to continuously update predictive models. Maintenance teams receive actionable insights before equipment fails.

Results: Siemens reported a 30% decrease in maintenance costs across implemented sites. The system extended machinery operational life and significantly reduced unscheduled downtime (DigitalDefynd, 2024).

Technology used: Industrial IoT platform, time-series analysis, anomaly detection algorithms, supervised learning for failure prediction.

Case Study 2: Amazon Dynamic Pricing

Problem: Amazon needed to optimize prices across millions of products in real-time based on demand, competition, and inventory levels while maximizing profitability.

Solution: Amazon employs sophisticated machine learning algorithms for dynamic pricing that automatically adjust prices based on multiple variables. The system processes competitor pricing, customer behavior, inventory levels, and historical sales data (InterviewQuery, 2025).

Implementation: The system uses regression models and ensemble methods like random forests or gradient boosting machines. Big data platforms process vast amounts of real-time information. The pricing engine updates continuously throughout the day.

Technology used: Apache Hadoop for big data processing, TensorFlow or PyTorch for machine learning, real-time data streaming with Apache Kafka.

Impact: Amazon can maintain competitive pricing while optimizing profit margins across its entire inventory without manual intervention. The system processes billions of data points daily.

Case Study 3: Starbucks Personalization Engine

Problem: Starbucks wanted to enhance customer engagement and loyalty by delivering personalized offers that genuinely resonated with individual customers.

Solution: Starbucks developed a recommendation engine that analyzes customer data to uncover patterns and preferences for tailoring marketing efforts (InterviewQuery, 2025).

Implementation: The system clusters customers based on similar behaviors, identifies offer types most likely to appeal to each group, and generates personalized recommendations. Machine learning models continuously learn from customer responses.

Results: Increased customer retention and sales through highly targeted marketing. The personalization engine processes purchase history, app usage, location data, and time patterns to create individualized experiences.

Technology used: SQL databases for structured data storage, Python with scikit-learn for clustering and recommendation algorithms, collaborative filtering techniques.

Case Study 4: GE Aviation Predictive Maintenance

Problem: General Electric needed to reduce unplanned aircraft downtime and maintenance costs while ensuring safety across global airline operations.

Solution: GE's predictive maintenance system, part of their Industrial Internet of Things (IIoT) platform, collects and analyzes data from connected equipment worldwide (DigitalDefynd, 2024). The system integrates historical data with real-time sensor input to update predictive models continuously.

Results: Significant cost savings through reduced unplanned downtime and extended equipment life. The technology has been particularly impactful in aviation, helping airlines save on maintenance costs while improving aircraft availability.

Technology used: IIoT platform, sensor data fusion, machine learning for failure prediction, real-time analytics.

Case Study 5: Toyota Manufacturing Efficiency

Problem: Toyota needed to improve factory efficiency and enable workers to develop and deploy machine learning models without extensive data science expertise.

Solution: Toyota implemented an AI platform using Google Cloud's AI infrastructure that enables factory workers to develop and deploy machine learning models directly (Google Cloud Blog, 2024).

Results: Reduction of over 10,000 man-hours per year, increased efficiency and productivity across manufacturing operations. Workers can now solve problems using ML without waiting for data scientists.

Technology used: Google Cloud AI Platform, AutoML capabilities, user-friendly model development tools, production deployment infrastructure.

Machine Learning Across Industries

Healthcare

Machine learning is transforming medicine from diagnosis to drug discovery. The healthcare segment dominated the ML market with the largest share in 2024 and is anticipated to be the most attractive segment through the forecast period (Precedence Research, 2025).

Applications:

Medical imaging analysis for disease detection

Drug discovery and development

Personalized treatment recommendations

Patient risk stratification

Predictive analytics for hospital readmissions

Google DeepMind developed algorithms that identify retinal diseases including diabetic retinopathy from eye images. Machine learning analyzes medical data to predict outcomes, offer risk scores, and optimize resource allocation.

Finance and Banking

The banking, financial services, and insurance (BFSI) segment led the AI market with a 19.6% share in 2024 (Precedence Research, 2025).

Applications:

Fraud detection and prevention

Credit scoring and loan approval

Algorithmic trading

Risk management

Customer service chatbots

Anti-money laundering detection

Amazon's fraud detection system uses machine learning to identify and prevent fraudulent transactions in real-time. The system analyzes transaction patterns, user behavior, and numerous variables to flag suspicious activity without creating excessive false positives.

Retail and E-Commerce

The retail segment is expected to show significant market share growth during the forecast period. Retailers using AI and machine learning saw annual profit growth of approximately 8% in both 2023 and 2024, outpacing competitors (Itransition, 2024).

Applications:

Personalized product recommendations

Inventory optimization

Price optimization

Customer segmentation

Demand forecasting

Visual search

Netflix's recommendation engine analyzes viewing habits to suggest content users are likely to enjoy. This personalization is critical for user satisfaction and subscription retention. Approximately 80% of watched content on Netflix comes from recommendations.

Manufacturing

The manufacturing industry holds the largest share of the ML market at 18.88% (DemandSage, 2024).

Applications:

Predictive maintenance

Quality control and defect detection

Supply chain optimization

Production planning

Robotics and automation

Energy consumption optimization

Automotive and Transportation

Applications:

Autonomous vehicles

Driver assistance systems

Route optimization

Predictive maintenance

Traffic prediction

Insurance risk assessment

Tesla's self-driving system uses machine learning to handle complex driving tasks and adapt to diverse conditions. The system continuously learns from fleet data, improving with each mile driven.

Telecommunications

The IT and telecommunications segment is expected to lead the market during the forecast period (Fortune Business Insights, 2024).

Applications:

Network optimization

Predictive maintenance

Customer churn prediction

Fraud detection

Virtual assistants

Service personalization

Agriculture

Applications:

Crop yield prediction

Pest and disease detection

Soil analysis

Precision farming

Autonomous farming equipment

Weather forecasting

Deep Learning: The Game Changer

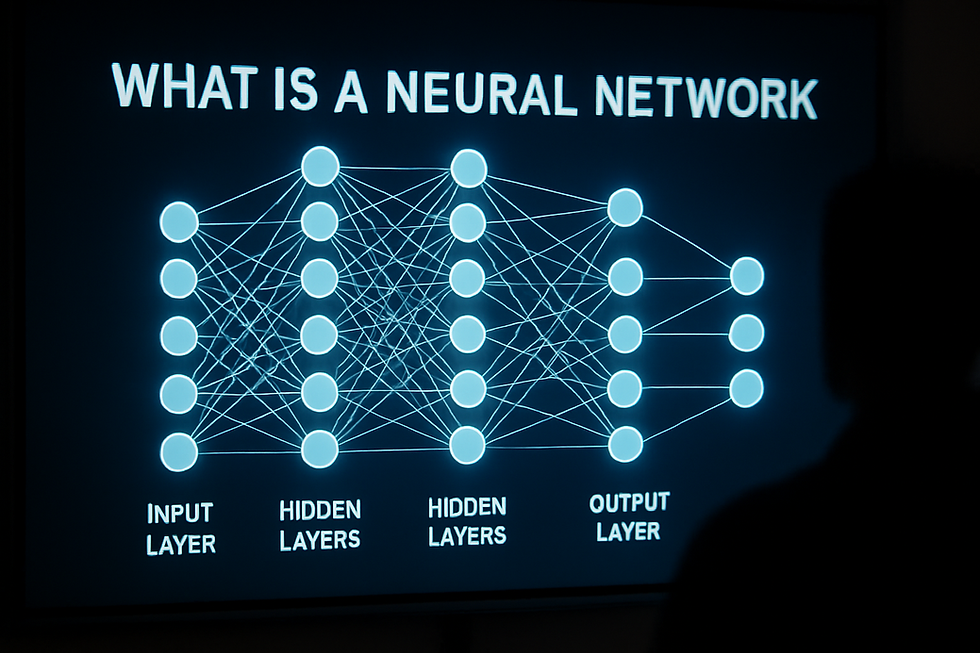

Deep learning is a specialized subset of machine learning that uses artificial neural networks with multiple layers—hence "deep." While traditional machine learning requires humans to engineer features, deep learning automatically learns hierarchical representations from raw data.

The AlexNet Revolution

The deep learning revolution began on September 30, 2012, when AlexNet shocked the computer vision world. Created by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton at the University of Toronto, AlexNet won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) with a top-5 error rate of 15.3%—more than 10.8 percentage points ahead of the second-place finisher (Wikipedia, 2025).

Why it mattered:

AlexNet proved that deep neural networks could outperform traditional machine learning methods on complex, large-scale problems. The victory wasn't just about winning—it validated years of research and sparked the modern AI boom.

Key innovations:

GPU Training: AlexNet trained on 2 Nvidia GTX 580 GPUs, demonstrating that graphics processors could accelerate neural network training dramatically (IEEE Spectrum, 2025)

ReLU Activation: Instead of sigmoid or tanh functions that slow learning, AlexNet used Rectified Linear Unit (ReLU) activation, speeding up training without sacrificing accuracy (Medium, 2024)

Dropout Regularization: A technique to prevent overfitting by randomly "dropping" neurons during training, ensuring the network generalizes well to new data

Data Augmentation: Creating variations of training images through flipping, rotating, and cropping to artificially expand the dataset

Architecture: AlexNet contained eight layers—five convolutional layers and three fully connected layers—with 60 million parameters and 650,000 neurons. Though small by today's standards (modern language models have billions of parameters), it was massive in 2012.

What Made It Possible

Three developments converged to enable AlexNet's success:

Large-Scale Labeled Datasets: Fei-Fei Li's ImageNet, completed in 2009, provided millions of labeled images across thousands of categories—the fuel deep learning needs (IEEE Spectrum, 2025)

GPU Computing: Nvidia's CUDA framework, released in 2007, enabled software access to highly parallel GPU processing, making training practical

Improved Training Methods: Advances in optimization algorithms, activation functions, and regularization techniques made training deep networks feasible

Impact and Legacy

AlexNet's victory triggered an explosion of deep learning research. Within years, virtually all ImageNet competitors used convolutional neural networks. Subsequent architectures like VGGNet (2014), GoogLeNet (2014), ResNet (2015), and EfficientNet (2019) built on AlexNet's foundation.

Yann LeCun described AlexNet as "an unequivocal turning point in the history of computer vision" at the 2012 European Conference on Computer Vision (Wikipedia, 2025).

Geoffrey Hinton, Ilya Sutskever, and Alex Krizhevsky formed DNNResearch shortly after and sold the company and AlexNet source code to Google. Hinton later commented: "Ilya thought we should do it, Alex made it work, and I got the Nobel Prize" (Wikipedia, 2025).

Deep Learning Today

Deep learning now powers:

Computer vision (facial recognition, medical imaging, autonomous vehicles)

Natural language processing (translation, chatbots, content generation)

Speech recognition (virtual assistants, transcription services)

Game playing (AlphaGo, chess engines, video game AI)

Drug discovery (protein folding, molecule generation)

Recommendation systems (content, products, connections)

According to Precedence Research (2025), machine learning (which includes deep learning) held a 36.7% share of the overall AI market in 2024, making it the largest technology segment.

Advantages and Limitations

Advantages

1. Handles Complexity

Machine learning excels at problems too complex for traditional programming. Recognizing speech, understanding images, or predicting market trends involve millions of variables and subtle patterns humans cannot articulate as rules.

2. Improves with Data

Unlike static programs, ML systems get better over time. More data leads to improved accuracy. This self-improving characteristic makes ML ideal for dynamic environments.

3. Discovers Hidden Patterns

ML algorithms identify relationships humans miss. They process thousands of variables simultaneously, uncovering insights buried in data.

4. Automates Decision-Making

Once trained, models make decisions in milliseconds. This speed enables real-time applications like fraud detection, autonomous driving, and high-frequency trading.

5. Reduces Human Error

Consistent, objective decision-making eliminates fatigue, bias (when properly designed), and simple mistakes that affect human performance.

6. Scales Efficiently

A trained model handles millions of decisions without proportional cost increases. One spam filter serves billions of emails at minimal incremental cost.

Limitations

1. Requires Substantial Data

ML algorithms need large datasets to learn effectively. Insufficient data leads to poor performance. Gathering, cleaning, and labeling data is expensive and time-consuming.

2. Computational Demands

Training complex models requires significant computing power. Deep learning models may need weeks of training on expensive GPU clusters. Even inference (making predictions) can be resource-intensive.

3. Black Box Nature

Many ML models, especially deep neural networks, are difficult to interpret. Understanding why a model made a specific decision can be challenging or impossible. This opacity raises concerns in critical applications like medical diagnosis or loan approval.

4. Vulnerable to Poor Data Quality

Garbage in, garbage out. Biased, incomplete, or incorrect training data produces flawed models. Models perpetuate and amplify biases present in training data.

5. Overfitting Risk

Models can become too specialized to training data, performing well on examples they've seen but poorly on new data. Careful validation and regularization techniques are essential.

6. Limited Generalization

ML models excel at their specific trained task but struggle with situations outside their training scope. A model trained to recognize cats in photos won't identify cats in drawings without additional training.

7. Security Vulnerabilities

ML systems can be fooled by adversarial examples—inputs designed to cause misclassification. Small, imperceptible changes to images or text can trick sophisticated models.

8. Maintenance Requirements

The world changes. Customer preferences shift, fraud tactics evolve, market conditions fluctuate. Models require continuous monitoring and retraining to maintain performance.

9. Implementation Challenges

Successfully deploying ML requires specialized expertise. Data scientists, ML engineers, and domain experts must collaborate effectively. Many projects fail not from technical issues but from organizational challenges.

10. Ethical Concerns

ML systems can perpetuate discrimination, invade privacy, or make consequential decisions without human oversight. Responsible AI development requires careful attention to ethical implications.

Common Myths and Facts

Myth 1: AI and Machine Learning Are the Same Thing

Fact: AI is the broad concept of machines performing tasks that typically require human intelligence. Machine learning is a subset of AI focused on learning from data. Not all AI uses machine learning (rule-based systems, for example), and ML is one approach among several to achieving AI.

Myth 2: Machine Learning Will Replace All Human Jobs

Fact: ML automates specific tasks, not entire jobs. Most roles involve diverse responsibilities, many requiring human judgment, creativity, or empathy that ML cannot replicate. ML typically augments human capabilities rather than replacing humans entirely. According to research, AI adoption increases in areas like customer service (38% of AI's business value per BCG), but humans remain central to operations.

Myth 3: More Data Always Means Better Models

Fact: Data quality matters more than quantity. Biased, noisy, or irrelevant data degrades performance regardless of volume. A small, high-quality dataset often outperforms a large, messy one.

Myth 4: Machine Learning Models Are Objective and Unbiased

Fact: ML models reflect biases in training data and design choices. If historical hiring data shows bias against certain groups, an ML hiring system trained on that data will perpetuate that bias. Creating fair, unbiased ML systems requires deliberate effort.

Myth 5: You Need a PhD to Use Machine Learning

Fact: While advanced ML research requires deep expertise, many practical applications use established techniques and user-friendly tools. Libraries like scikit-learn, cloud ML services, and AutoML platforms make ML accessible to developers without specialized degrees.

Myth 6: Machine Learning Can Solve Any Problem

Fact: ML works best for specific problem types: pattern recognition, prediction, optimization. It struggles with problems requiring common sense reasoning, causal understanding, or operating in environments vastly different from training conditions.

Myth 7: Once Trained, Models Work Forever

Fact: Models degrade over time as the world changes. Customer behavior shifts, fraud tactics evolve, languages develop. Continuous monitoring and periodic retraining are essential for maintaining performance.

Myth 8: Machine Learning Is Too Expensive for Small Businesses

Fact: While large-scale implementations can be costly, cloud services and open-source tools have democratized ML. Small businesses can start with modest investments using pre-trained models, cloud APIs, and incremental implementation.

Implementation Challenges and How to Address Them

Challenge 1: Data Availability and Quality

Problem: ML requires substantial amounts of clean, relevant, representative data. Many organizations lack sufficient data or have data quality issues.

Solutions:

Start with data audit: assess what you have and what you need

Implement data collection systems early

Use data augmentation techniques to expand limited datasets

Consider transfer learning—using models pre-trained on large datasets

Partner with data providers when internal data is insufficient

Challenge 2: Lack of ML Expertise

Problem: Data scientists and ML engineers are scarce and expensive. According to industry surveys, 89.6% of Fortune 1000 CIOs reported increasing investment in generative AI (Itransition, 2024), intensifying competition for talent.

Solutions:

Use managed ML services (AWS SageMaker, Google Cloud AI, Azure ML)

Leverage AutoML platforms that automate model selection and tuning

Partner with ML consultants or development firms for initial projects

Invest in training existing technical staff

Start with simple problems using established techniques

Challenge 3: Computational Costs

Problem: Training large models requires expensive hardware and can take weeks or months.

Solutions:

Use cloud computing for flexibility—pay only for resources you use

Start with smaller models and scale up if needed

Use pre-trained models and fine-tune for your specific needs

Consider model compression and optimization techniques

Evaluate if simpler traditional ML methods might suffice

Challenge 4: Model Interpretability

Problem: Complex models act as "black boxes," making decisions without clear explanations. This is problematic in regulated industries or high-stakes applications.

Solutions:

Use inherently interpretable models (decision trees, linear models) when explainability is critical

Apply model interpretation techniques (SHAP values, LIME)

Create model documentation explaining training data, features, and limitations

Implement human oversight for critical decisions

Consider hybrid approaches combining interpretable and complex models

Challenge 5: Integration with Existing Systems

Problem: ML models must work within existing IT infrastructure, databases, and workflows.

Solutions:

Start with pilot projects to identify integration challenges

Use standard APIs and microservices architecture

Ensure monitoring and version control from the start

Plan for model updates without system downtime

Involve IT and operations teams early in planning

Challenge 6: Managing Expectations

Problem: Stakeholders often have unrealistic expectations about ML capabilities, timeline, and costs.

Solutions:

Educate stakeholders about ML possibilities and limitations

Start with well-defined, achievable projects

Set clear metrics for success upfront

Communicate progress and challenges transparently

Demonstrate value quickly with proof-of-concept projects

Challenge 7: Ethical and Regulatory Concerns

Problem: ML systems can perpetuate bias, violate privacy, or make consequential decisions without proper oversight. Regulations like GDPR impose requirements on automated decision-making.

Solutions:

Conduct bias audits on training data and model outputs

Implement fairness metrics and constraints

Ensure transparency in how models make decisions

Get legal review for applications in regulated domains

Create governance frameworks for ML development and deployment

Allow human review and appeals for important decisions

The Future of Machine Learning

Market Growth Trajectory

Machine learning will continue explosive growth. Multiple research firms project the market reaching between $282 billion (Grand View Research) and $1.4 trillion (Precedence Research) by the early 2030s, with compound annual growth rates of 30-38%.

This growth reflects increasing adoption across industries. According to McKinsey research cited by DemandSage (2024), 50% of surveyed respondents had adopted artificial intelligence and machine learning in at least one business function by 2024.

Key Trends Shaping the Future

1. Generative AI Integration

Generative AI, which creates new content like text, images, and code, is expected to grow at a 22.9% CAGR from 2025 to 2034 (Precedence Research, 2025). The combination of traditional ML prediction with generative AI creation enables entirely new applications.

According to MIT Sloan research (June 2025), generative AI is taking over some tasks traditionally performed by machine learning, particularly when dealing with everyday language or common images. However, traditional ML remains superior for privacy-sensitive tasks, highly specific domain knowledge, and existing well-functioning systems.

2. Edge Computing and ML

Machine learning is moving from cloud servers to edge devices—smartphones, IoT sensors, autonomous vehicles. This shift enables:

Real-time processing without network latency

Enhanced privacy by keeping data local

Reduced bandwidth costs

Operation in environments with limited connectivity

3. AutoML and Democratization

Automated machine learning platforms are making ML accessible to non-experts. These systems automatically handle:

Feature engineering

Algorithm selection

Hyperparameter tuning

Model deployment

This democratization means more businesses can leverage ML without extensive data science teams.

4. Explainable AI (XAI)

As ML systems make increasingly important decisions, understanding their reasoning becomes critical. Research focuses on developing models that provide clear explanations for their predictions—essential for regulated industries and building user trust.

5. Federated Learning

This approach trains models across decentralized devices while keeping data local. Benefits include:

Enhanced privacy protection

Reduced data transfer costs

Leveraging data that cannot be centralized for legal or practical reasons

6. Quantum Machine Learning

Quantum computers promise to revolutionize ML by solving certain optimization problems exponentially faster. While practical quantum advantage remains years away, research progresses steadily.

7. Few-Shot and Zero-Shot Learning

New techniques enable models to learn from very few examples or even generalize to completely new tasks without specific training. This addresses the data hunger that currently limits ML applications.

Industry-Specific Predictions

Healthcare: ML will become standard in diagnosis, treatment planning, and drug discovery. Personalized medicine based on genetic and lifestyle data will expand. Expect continued growth with the healthcare segment projected to grow at 19.1% CAGR (Precedence Research, 2025).

Finance: Algorithmic trading, fraud detection, and risk assessment will become more sophisticated. Real-time credit decisions and fully automated financial advising will expand.

Retail: The potential impact of generative AI on retail may range between $400 billion and $660 billion annually through streamlined customer service, marketing, sales, and supply chain management (Itransition, 2024).

Manufacturing: Predictive maintenance will become ubiquitous. Autonomous factories with minimal human intervention will grow. Digital twins—virtual replicas of physical systems—will enable optimization through ML.

Transportation: Autonomous vehicles will expand beyond passenger cars to trucks, buses, delivery robots, and drones. ML-powered logistics optimization will transform supply chains.

Challenges Ahead

Data Privacy and Security: Increasing regulation and public concern about data use will shape ML development. Privacy-preserving techniques like federated learning and differential privacy will become essential.

Energy Consumption: Training large models consumes significant energy. Recent investments reflect this—in June 2025, AWS announced a $5.3 billion investment in North Carolina to expand AI-driven cloud infrastructure (SkyQuestTechnology, 2025). Developing more efficient algorithms and hardware is crucial for sustainability.

Bias and Fairness: Addressing algorithmic bias will remain a central challenge. Expect increasing regulatory requirements for fairness audits and transparency.

Skills Gap: Demand for ML expertise will outpace supply. Organizations must invest in training and develop tools that make ML accessible to broader teams.

Regulatory Landscape: Governments worldwide are developing AI regulations. The EU's AI Act, for example, classifies AI systems by risk and imposes requirements accordingly. Navigating evolving regulations will be crucial for ML deployment.

Long-Term Outlook

Machine learning is transitioning from experimental technology to essential infrastructure. Within a decade, ML capabilities will be embedded throughout business operations, often invisibly. The question won't be whether to use ML but how to use it effectively and responsibly.

The technology will continue advancing, but the rate of progress may moderate as we approach fundamental limits of current architectures. The next major breakthrough—possibly through new computing paradigms like quantum computing or novel neural architectures—could trigger another revolution.

One thing is certain: machine learning will be central to technological and economic progress for the foreseeable future. Organizations that master ML capabilities will have significant competitive advantages.

Frequently Asked Questions

Q1: What is the difference between AI, machine learning, and deep learning?

Artificial intelligence is the broad concept of machines performing tasks requiring human intelligence. Machine learning is a subset of AI focused on systems that learn from data. Deep learning is a subset of machine learning using multi-layered neural networks. Think of them as nested categories: AI contains ML, which contains deep learning.

Q2: How much data do I need to start with machine learning?

This depends on problem complexity and algorithm choice. Simple problems might need hundreds of examples. Complex image recognition could require thousands or millions. Start with whatever data you have—you can always collect more. Transfer learning lets you leverage pre-trained models, reducing data requirements significantly.

Q3: Can machine learning work with small datasets?

Yes, with the right approach. Techniques include transfer learning (starting with a pre-trained model), data augmentation (creating variations of existing data), and using simpler algorithms that work well with limited data. However, deep learning typically requires substantial data to perform well.

Q4: Is machine learning only for large companies?

No. Cloud platforms, open-source libraries, and pre-trained models have democratized ML. Small businesses can start with modest investments. Many cloud providers offer pay-as-you-go ML services, making experimentation affordable. Start small, prove value, then scale.

Q5: How long does it take to implement a machine learning project?

Timeline varies dramatically. A simple proof-of-concept might take weeks. Production-ready systems typically take months. Complex projects can take a year or more. Factors include data availability, problem complexity, team expertise, and integration requirements. Start with pilot projects to build capabilities and prove value.

Q6: What programming languages are used for machine learning?

Python dominates ML development due to extensive libraries (scikit-learn, TensorFlow, PyTorch, pandas). R is popular for statistical analysis. Java and C++ are used for production systems requiring high performance. JavaScript enables ML in web browsers. Most practitioners start with Python.

Q7: Do I need expensive hardware to train machine learning models?

Not necessarily. Cloud services let you rent powerful hardware only when needed. Many problems work fine on standard computers. Deep learning benefits from GPUs, but cloud providers offer GPU instances by the hour. Start with CPU training; upgrade to GPUs if training time becomes problematic.

Q8: How do I know if my problem is suitable for machine learning?

ML works well when: you have data available, patterns exist in that data, you can define clear objectives, and traditional programming is impractical. ML struggles when: data is scarce, the problem requires common sense reasoning, or rules are simple enough to code directly.

Q9: Will machine learning replace data analysts and programmers?

ML is a tool that augments human capabilities. Analysts still need to understand business context, ask the right questions, and interpret results. Programmers still design systems, integrate components, and maintain infrastructure. ML automates specific tasks but creates demand for people who can work effectively with these technologies.

Q10: How accurate are machine learning predictions?

Accuracy varies by application and data quality. Some systems achieve human-level or superhuman performance (image classification, game playing). Others provide modest improvements over traditional methods. Accuracy alone isn't the only metric—consider false positive rates, false negative rates, and consequences of different error types.

Q11: What is the difference between training data and test data?

Training data teaches the model—the algorithm learns patterns from these examples. Test data evaluates performance on unseen examples. This split prevents overfitting: memorizing training data rather than learning generalizable patterns. Typical splits are 70-80% training, 20-30% testing, sometimes with a separate validation set.

Q12: Can machine learning models explain their predictions?

Some can, some cannot. Simple models like decision trees and linear regression are inherently interpretable. Complex models like deep neural networks are often "black boxes." Techniques like SHAP values and LIME can provide insights into any model's predictions, though explanations may be approximate.

Q13: How often should I retrain my machine learning model?

This depends on how quickly your domain changes. High-frequency trading models might retrain daily. Fraud detection systems might retrain weekly or monthly. Customer segmentation might retrain quarterly. Monitor performance metrics—when accuracy drops below acceptable levels, retrain. Some systems retrain automatically on a schedule.

Q14: What are the ethical concerns with machine learning?

Key concerns include: algorithmic bias and discrimination, privacy violations through data collection and analysis, lack of transparency in decision-making, job displacement, autonomous weapons, deepfakes and misinformation, and concentration of power among organizations with most data and computing resources. Responsible AI development requires addressing these issues proactively.

Q15: Is machine learning just statistics?

ML and statistics overlap significantly but have different emphases. Statistics focuses on inference—understanding relationships and testing hypotheses. ML focuses on prediction—making accurate forecasts. ML uses many statistical techniques but also draws from computer science, particularly for scaling to large datasets and automating learning processes.

Q16: What industries benefit most from machine learning?

All industries benefit, but leaders include healthcare (diagnosis, drug discovery), finance (fraud detection, trading), retail (recommendations, inventory), manufacturing (predictive maintenance, quality control), and technology (search, translation, content recommendation). According to market data, manufacturing holds 18.88% of the ML market, followed by finance at 15.42%.

Q17: How do I start learning machine learning?

Begin with fundamentals: basic statistics, linear algebra, and programming (Python recommended). Take online courses (Coursera, edX, fast.ai). Practice on real datasets (Kaggle competitions). Start with simple algorithms and gradually progress to complex ones. Build projects to solidify understanding. Join ML communities for support and learning.

Q18: What is overfitting and how do I prevent it?

Overfitting occurs when a model learns training data too well, including noise and outliers, performing poorly on new data. Prevention techniques include: using more training data, simplifying the model, applying regularization (adding penalties for complexity), using dropout in neural networks, and validating on separate test data.

Q19: What's the difference between supervised and unsupervised learning?

Supervised learning trains on labeled data—you provide correct answers. The model learns to map inputs to outputs. Unsupervised learning works with unlabeled data, finding hidden patterns without being told what to look for. Use supervised learning when you know what you want to predict. Use unsupervised learning for exploration and discovery.

Q20: How much does it cost to implement machine learning?

Costs vary enormously. A simple cloud-based implementation might cost thousands of dollars. Enterprise-wide deployments can cost millions. Major factors include: data infrastructure, computing resources, personnel (data scientists, engineers), ongoing maintenance, and integration work. Start with pilot projects to estimate costs before full-scale deployment.

Key Takeaways

Machine learning enables computers to learn from data and improve performance without explicit programming for every scenario

The field originated in the 1950s with pioneers like Alan Turing and Arthur Samuel, but achieved mainstream success with AlexNet's 2012 ImageNet victory

Three primary types exist: supervised learning (labeled data), unsupervised learning (pattern discovery), and reinforcement learning (trial and error)

The global ML market reached $35.32 billion in 2024 and is projected to hit $309.68 billion by 2032, growing at 30.5% annually

Real-world applications span healthcare diagnostics, fraud detection, recommendation systems, autonomous vehicles, and predictive maintenance

Deep learning using neural networks revolutionized the field, with AlexNet achieving 15.3% error rate in 2012, beating competitors by 10.9 percentage points

Implementation requires quality data, appropriate algorithms, sufficient computing resources, and expertise in data science and domain knowledge

Common challenges include data availability, computational costs, model interpretability, bias concerns, and integration complexity

Machine learning augments rather than replaces human capabilities, automating specific tasks while creating demand for people who understand the technology

Future trends include generative AI integration, edge computing, AutoML democratization, explainable AI, and federated learning for privacy

Your Next Steps

Assess Your Current State

Identify problems in your organization where ML might add value. Look for tasks involving prediction, pattern recognition, or optimization with available data.

Start with Education

If you're new to ML, take an introductory online course. Options include Andrew Ng's Machine Learning course on Coursera, fast.ai's Practical Deep Learning, or Google's Machine Learning Crash Course.

Inventory Your Data

Evaluate what data you have, its quality, and what additional data you might need. Quality matters more than quantity.

Begin with a Pilot Project

Choose a well-defined, achievable problem. Success builds organizational support for larger initiatives. Consider using cloud ML services for quick wins.

Build or Acquire Expertise

Decide whether to train existing staff, hire specialists, or partner with consultants. Many organizations use a hybrid approach.

Explore Pre-Built Solutions

Many common ML applications (image recognition, text analysis, translation) have pre-trained models available. Start here before building custom solutions.

Join ML Communities

Participate in forums like Kaggle, attend meetups, follow ML researchers on social media. Learning from practitioners accelerates your progress.

Stay Informed

ML evolves rapidly. Follow developments through blogs (Towards Data Science, Machine Learning Mastery), research conferences (NeurIPS, ICML), and industry publications.

Consider Ethical Implications

From the start, think about bias, privacy, transparency, and fairness. Building responsible AI is easier than retrofitting it.

Measure and Iterate

Define success metrics before starting. Track progress, learn from failures, and continuously improve your approach.

Glossary

Algorithm: A set of rules or instructions that a computer follows to solve a problem or complete a task.

Artificial Intelligence (AI): The broad field of computer science focused on creating systems that can perform tasks normally requiring human intelligence.

Backpropagation: An algorithm for training neural networks that calculates gradients by working backwards from output to input layers.

Bias (Statistical): Systematic error in predictions. Can also refer to unfair discrimination encoded in algorithms.

Classification: A supervised learning task where the goal is to assign inputs to predefined categories.

Clustering: An unsupervised learning technique that groups similar data points together.

Convolutional Neural Network (CNN): A type of deep learning model particularly effective for image analysis.

Data Augmentation: Creating variations of training data to artificially expand dataset size and improve model generalization.

Deep Learning: A subset of machine learning using neural networks with multiple layers.

Dropout: A regularization technique that randomly ignores neurons during training to prevent overfitting.

Feature: An individual measurable property or characteristic of data used by ML algorithms.

Feature Engineering: The process of creating or selecting relevant features from raw data.

GPU (Graphics Processing Unit): Hardware originally designed for graphics but now widely used to accelerate machine learning computations.

Hyperparameter: A configuration setting for a learning algorithm that must be set before training begins.

Inference: Using a trained model to make predictions on new data.

Label: The correct output or answer for a training example in supervised learning.

Loss Function: A measure of how wrong a model's predictions are; algorithms minimize this during training.

Neural Network: A machine learning model inspired by the structure of biological brains, consisting of interconnected nodes (neurons) organized in layers.

Overfitting: When a model learns training data too well, including noise, and performs poorly on new data.

Precision: The proportion of positive predictions that are actually correct.

Recall: The proportion of actual positive cases that the model correctly identified.

Regression: A supervised learning task where the goal is to predict continuous numerical values.

Reinforcement Learning: A learning approach where an agent learns by interacting with an environment and receiving rewards or penalties.

ReLU (Rectified Linear Unit): An activation function commonly used in neural networks that outputs zero for negative inputs and the input value for positive inputs.

Supervised Learning: Machine learning using labeled training data where correct outputs are provided.

Test Data: Data held back from training, used to evaluate model performance on unseen examples.

Training: The process of teaching a machine learning model by showing it examples and adjusting parameters to minimize errors.

Transfer Learning: Using a model trained on one task as a starting point for a different but related task.

Underfitting: When a model is too simple to capture patterns in data, performing poorly even on training data.

Unsupervised Learning: Machine learning with unlabeled data where the algorithm must discover patterns independently.

Validation Data: Data used during training to tune hyperparameters and prevent overfitting, separate from both training and test sets.

Sources & References

Fortune Business Insights. (2024). Machine Learning Market Size, Share, Growth | Trends [2032]. Retrieved from https://www.fortunebusinessinsights.com/machine-learning-market-102226

Research Nester. (2025). Machine Learning Market Size, Share & Forecast Insights to 2035. Retrieved from https://www.researchnester.com/reports/machine-learning-market/5169

SkyQuest Technology. (2025). Machine Learning Market Size, Share, and Growth Analysis. Retrieved from https://www.skyquestt.com/report/machine-learning-market

Grand View Research. (2024). Machine Learning Market Size & Share | Industry Report 2030. Retrieved from https://www.grandviewresearch.com/industry-analysis/machine-learning-market

Precedence Research. (2025). Machine Learning Market Size and Forecast 2025 to 2034. Retrieved from https://www.precedenceresearch.com/machine-learning-market

Statista. (2025). Machine Learning - Worldwide | Statista Market Forecast. Retrieved from https://www.statista.com/outlook/tmo/artificial-intelligence/machine-learning/worldwide

Itransition. (2024). Machine Learning Statistics for 2026: The Ultimate List. Retrieved from https://www.itransition.com/machine-learning/statistics

DemandSage. (2025). 70+ Machine Learning Statistics 2026: Industry Market Size. Retrieved from https://www.demandsage.com/machine-learning-statistics/

Market.us. (2025). Machine Learning Market Size, Share | CAGR of 38.3%. Retrieved from https://market.us/report/global-machine-learning-market/

Google Cloud Blog. (2024). Real-world gen AI use cases from the world's leading organizations. Retrieved from https://cloud.google.com/transform/101-real-world-generative-ai-use-cases-from-industry-leaders

InterviewQuery. (2025). Top 17 Machine Learning Case Studies to Look Into Right Now. Retrieved from https://www.interviewquery.com/p/machine-learning-case-studies

DigitalDefynd. (2024). Top 30 Machine Learning Case Studies [2025]. Retrieved from https://digitaldefynd.com/IQ/machine-learning-case-studies/

MIT Sloan. (2025). Machine learning and generative AI: What are they good for in 2025? Retrieved from https://mitsloan.mit.edu/ideas-made-to-matter/machine-learning-and-generative-ai-what-are-they-good-for

GeeksforGeeks. (2025). Supervised vs Unsupervised vs Reinforcement Learning. Retrieved from https://www.geeksforgeeks.org/machine-learning/supervised-vs-reinforcement-vs-unsupervised/

TechTarget. (2024). 4 Types of Learning in Machine Learning Explained. Retrieved from https://www.techtarget.com/searchenterpriseai/tip/Types-of-learning-in-machine-learning-explained

DigitalOcean. (2024). Types of Machine Learning: Supervised, Unsupervised and More. Retrieved from https://www.digitalocean.com/resources/articles/types-of-machine-learning

Google for Developers. (2024). What is Machine Learning? Retrieved from https://developers.google.com/machine-learning/intro-to-ml/what-is-ml

Data Science Dojo. (2025). Machine learning 101: The types of ML explained. Retrieved from https://datasciencedojo.com/blog/machine-learning-101/

Britannica. (2024). History of artificial intelligence | Dates, Advances, Alan Turing. Retrieved from https://www.britannica.com/science/history-of-artificial-intelligence

Pigro AI. (2024). History of Artificial Intelligence: the 1950s. Retrieved from https://www.pigro.ai/post/history-of-artificial-intelligence-the-1950s-from-alan-turing-to-john-mccarthy

TechTarget. (2024). History and Evolution of Machine Learning: A Timeline. Retrieved from https://www.techtarget.com/whatis/feature/History-and-evolution-of-machine-learning-A-timeline

Sanfoundry. (2025). A Brief History of Machine Learning and Key Milestones. Retrieved from https://www.sanfoundry.com/brief-history-of-machine-learning-key-milestones/

Linode. (2022). Machine Learning and Artificial Intelligence Background. Retrieved from https://www.linode.com/docs/guides/history-of-machine-learning/

StarTechUP. (2024). History of Machine Learning: The Complete Timeline. Retrieved from https://www.startechup.com/blog/machine-learning-history/

Wikipedia. (2025). AlexNet. Retrieved from https://en.wikipedia.org/wiki/AlexNet

Pinecone. (2024). AlexNet and ImageNet: The Birth of Deep Learning. Retrieved from https://www.pinecone.io/learn/series/image-search/imagenet/

Viso.ai. (2025). AlexNet: Revolutionizing Deep Learning in Image Classification. Retrieved from https://viso.ai/deep-learning/alexnet/

IEEE Spectrum. (2025). How AlexNet Transformed AI and Computer Vision Forever. Retrieved from https://spectrum.ieee.org/alexnet-source-code

Medium. (2024). The Story of AlexNet: A Historical Milestone in Deep Learning. By James Fahey. Retrieved from https://medium.com/@fahey_james/the-story-of-alexnet-a-historical-milestone-in-deep-learning-79878a707dd5

$50

Product Title

Product Details goes here with the simple product description and more information can be seen by clicking the see more button. Product Details goes here with the simple product description and more information can be seen by clicking the see more button

$50

Product Title

Product Details goes here with the simple product description and more information can be seen by clicking the see more button. Product Details goes here with the simple product description and more information can be seen by clicking the see more button.

$50

Product Title

Product Details goes here with the simple product description and more information can be seen by clicking the see more button. Product Details goes here with the simple product description and more information can be seen by clicking the see more button.

Comments