What is a Deep Neural Network (DNN): The Complete Guide to AI's Most Powerful Architecture

- Muiz As-Siddeeqi

- Oct 19, 2025

- 26 min read

Every time you unlock your phone with your face, ask ChatGPT a question, or watch a car navigate city streets without a driver, you're witnessing deep neural networks at work. These digital brains—stacks of interconnected artificial neurons that learn from experience—have quietly revolutionized our world. From predicting protein structures that win Nobel Prizes to translating languages in real-time, DNNs now power the AI tools reshaping medicine, transportation, communication, and scientific discovery. The deep learning market exploded from $96.8 billion in 2024 to a projected $526.7 billion by 2030 (Grand View Research, 2024). This isn't hype. It's a fundamental shift in how machines learn, and understanding DNNs means understanding the technology driving our future.

TL;DR

Deep neural networks (DNNs) are AI systems with multiple hidden layers that learn complex patterns from data

The global deep learning market reached $96.8 billion in 2024 and will hit $526.7 billion by 2030

DNNs power ChatGPT (800 million weekly users), Tesla's self-driving system (4+ million vehicles), and AlphaFold (200+ million protein predictions)

AlexNet's 2012 breakthrough reduced image recognition errors from 26.2% to 15.3%, sparking the modern deep learning revolution

92% of Fortune 500 companies now use DNN-based AI tools

Key challenges: massive computational costs, data requirements, and the "black box" interpretability problem

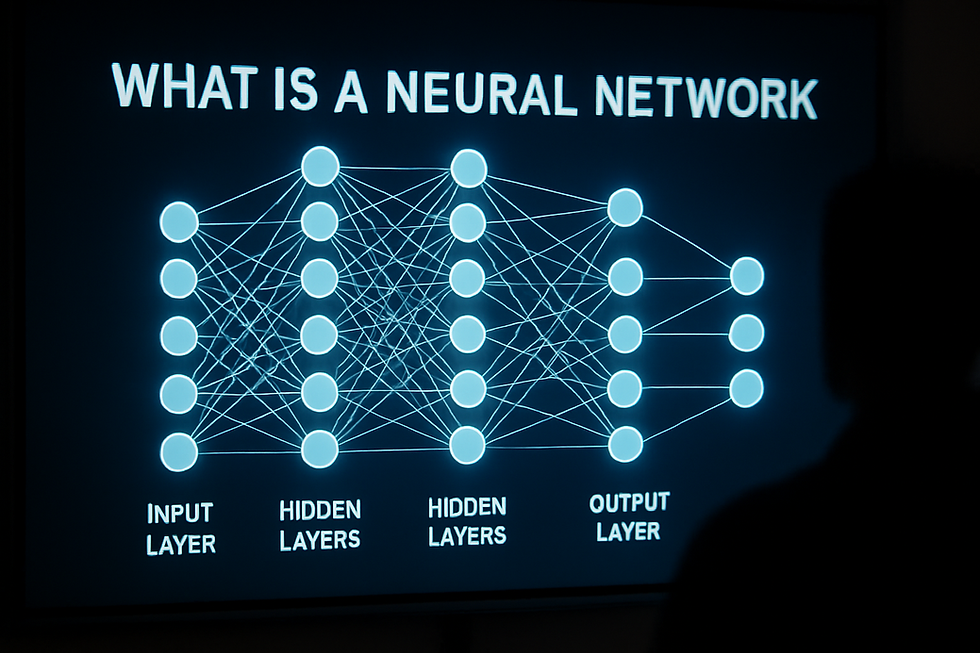

A deep neural network (DNN) is an artificial intelligence system with multiple hidden layers of interconnected nodes that process information hierarchically. Unlike shallow neural networks with 1-2 layers, DNNs contain 3 to 100+ layers, enabling them to learn complex patterns from raw data. Each layer extracts increasingly abstract features—from edges to shapes to complete objects—making DNNs extraordinarily powerful for image recognition, language understanding, and prediction tasks across industries.

Table of Contents

Understanding Deep Neural Networks: Core Concepts

A deep neural network is fundamentally a computational system inspired by biological brains. It consists of layers of artificial neurons that transform input data through mathematical operations to produce useful outputs.

The "deep" in DNN refers to depth—specifically, having multiple hidden layers between input and output. While a shallow neural network might have 1-2 hidden layers, modern DNNs routinely contain 10, 50, or even 100+ layers. This depth enables hierarchical feature learning that shallow networks simply cannot achieve.

Consider how you recognize a friend's face. Your brain doesn't process the entire image at once. First, neurons detect edges and contrasts. Other neurons combine these into features like curves. Higher-level neurons identify eyes, noses, mouths. Finally, the whole pattern matches your friend. DNNs work similarly—each layer builds on the previous one's discoveries.

The neural network market reached $34.52 billion in 2024 and will grow to $537.81 billion by 2034, expanding at 31.60% annually (Precedence Research, October 2025). This explosive growth reflects DNNs' proven ability to solve problems that stumped computers for decades.

The Three Core Components

Input Layer: Receives raw data (images as pixels, text as numbers, audio as waveforms). Each neuron in this layer represents one data feature.

Hidden Layers: The "deep" part. Multiple layers where actual learning happens. Early layers detect simple patterns; deeper layers combine these into abstract concepts. A 50-layer network might have billions of parameters to optimize.

Output Layer: Produces the final answer—a classification (cat or dog), a prediction (stock price), or generated content (text, images).

How Deep Neural Networks Work: Architecture and Mechanics

The Forward Pass: Information Flows Through

Each neuron performs a simple calculation. It receives inputs from the previous layer, multiplies each by a learned weight, sums these products, adds a bias term, then applies an activation function. This transforms the input into an output sent to the next layer.

Output = Activation_Function(Sum(Input × Weight) + Bias)Weights determine how much influence each input has.

Biases allow neurons to shift their activation threshold.

Activation functions (like ReLU, sigmoid, or tanh) introduce non-linearity, enabling the network to learn complex patterns beyond straight lines.

The network processes data from input to output in a "forward pass." For image classification, pixels enter the input layer, transform through hidden layers extracting increasingly sophisticated features, and emerge as class probabilities at the output.

The Backward Pass: Learning from Mistakes

After each forward pass, the network compares its prediction to the correct answer. The difference—the loss or error—measures how wrong the network was.

Learning happens through backpropagation, invented in the 1980s but only made practical for deep networks in the 2000s. The algorithm calculates how much each weight contributed to the error, then adjusts weights to reduce that error. This process repeats millions of times across thousands of training examples.

Think of it like learning to throw darts. Each throw (forward pass) shows where you hit. The distance from the bullseye (loss) tells you what to adjust. Over thousands of throws (training iterations), your aim improves. DNNs do this with mathematical precision across billions of parameters.

Matrix Operations: The Computational Engine

Mathematically, DNNs perform massive matrix multiplications. A single layer might multiply a 1000×1000 matrix by another matrix millions of times during training. This is why graphics processing units (GPUs) revolutionized deep learning—they excel at parallel matrix operations that would take CPUs weeks or months.

Tesla's Full Self-Driving system uses 48 distinct neural networks processing data from 8 cameras simultaneously, requiring 70,000 GPU hours per complete training cycle (FredPope, June 2025).

The Evolution: From Perceptrons to Modern DNNs

The Early Years (1943-1980s)

The journey began in 1943 when Warren McCulloch and Walter Pitts proposed the first mathematical model of artificial neurons. Their work showed that networks of simple units could perform logical operations.

Frank Rosenblatt's Perceptron (1958) was the first implemented neural network, capable of learning simple pattern classification. However, Marvin Minsky and Seymour Papert's 1969 book Perceptrons proved these single-layer networks couldn't solve many problems, triggering the first "AI winter"—a period of reduced funding and interest.

The Backpropagation Revolution (1980s)

Geoffrey Hinton, David Rumelhart, and Ronald Williams popularized backpropagation in 1986, enabling efficient training of networks with hidden layers. Yann LeCun applied this to create LeNet-5 in 1989, successfully reading handwritten zip codes for the U.S. Postal Service—an early commercial success.

Yet deep networks remained impractical. Training was painfully slow on 1990s hardware, and networks with many layers suffered from vanishing gradients where error signals became too weak to update early layers effectively.

The AlexNet Breakthrough (2012)

Everything changed on September 30, 2012. Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton submitted AlexNet to the ImageNet Large Scale Visual Recognition Challenge. Their deep convolutional neural network achieved a 15.3% top-5 error rate—dramatically better than the second-place 26.2% (Krizhevsky et al., Nature 2012).

AlexNet proved three critical elements had converged:

Large datasets (ImageNet's 1.2 million labeled images)

GPU computing power (trained on 2 Nvidia GTX 580 GPUs in Krizhevsky's bedroom)

Improved techniques (ReLU activations, dropout regularization)

Yann LeCun called it "an unequivocal turning point in the history of computer vision" (IEEE Spectrum, March 2025). AlexNet sparked the modern deep learning revolution. Investment in AI startups exploded. Research papers on deep learning increased tenfold within two years.

The Modern Era (2012-Present)

Post-AlexNet innovations include:

ResNet (2015): Introduced skip connections enabling networks with 100+ layers

Transformer architecture (2017): Revolutionized natural language processing, leading to GPT models

AlphaFold (2020): Deep learning solved the 50-year protein folding problem

GPT-4 (2023): Large language models with hundreds of billions of parameters

Diffusion models (2022-2024): Generated photorealistic images and video

Geoffrey Hinton and John Jumper won the 2024 Nobel Prize in Chemistry for AlphaFold's breakthrough in protein structure prediction using deep neural networks (Nature, October 2024).

Types of Deep Neural Network Architectures

Best for: Images, video, spatial data

CNNs use convolutional layers that slide filters across input data, detecting local patterns like edges, textures, and shapes. Pooling layers progressively reduce spatial dimensions while preserving important features. This architecture dramatically reduces parameters compared to fully connected networks.

Real-world use: Image recognition held 43.38% of the deep learning market in 2024 (Grand View Research, 2024). Tesla's autonomous driving system relies on CNNs to process camera feeds and identify vehicles, pedestrians, lanes, and traffic signals.

Best for: Sequential data like text, speech, time series

RNNs maintain internal memory, allowing them to process sequences where order matters. Long Short-Term Memory (LSTM) networks, introduced in 1997, solve RNNs' vanishing gradient problem by using gating mechanisms to control information flow.

Real-world use: Speech recognition, machine translation, financial forecasting. Google Translate uses LSTMs to capture context across entire sentences.

Transformer Networks

Best for: Natural language processing, large-scale pattern recognition

Introduced in 2017's landmark paper "Attention Is All You Need," transformers use self-attention mechanisms to process entire sequences in parallel. They've largely replaced RNNs for language tasks.

Real-world use: ChatGPT, Google's BERT, and all modern large language models. ChatGPT reached 800 million weekly active users by September 2025, up from 400 million in February (NerdyNav, 2025). The model processes 2.5 billion daily prompts (AllAboutAI, October 2025).

Generative Adversarial Networks (GANs)

Best for: Creating new data that resembles training data

GANs pit two networks against each other. A generator creates fake data; a discriminator tries to detect fakes. Through this adversarial process, generators learn to create remarkably realistic images, videos, and audio.

Real-world use: Image synthesis, data augmentation, deepfake creation (and detection), drug discovery molecule generation.

Diffusion Models

Best for: High-quality image and video generation

Diffusion models learn to gradually denoise random noise into coherent images. They've recently surpassed GANs for image quality and controllability.

Real-world use: DALL-E 3, Midjourney, Stable Diffusion. After OpenAI launched ChatGPT's image generation in April 2025, users created 700 million images in seven days (Digital Silk, May 2025).

Architecture | Primary Use | Key Strength | Example Application |

CNN | Images/video | Spatial pattern recognition | Medical image diagnosis |

RNN/LSTM | Sequential data | Temporal dependencies | Speech recognition |

Transformer | Language | Parallel processing, long-range dependencies | ChatGPT, translation |

GAN | Content generation | Realistic synthesis | Image creation |

Diffusion | High-quality generation | Controllable output | DALL-E, Midjourney |

Training Deep Neural Networks: The Learning Process

Data Collection and Preparation

Quality data is everything. The saying "garbage in, garbage out" applies absolutely to DNNs. Successful training requires:

Large datasets: Modern DNNs need thousands to millions of examples

Labeled data: For supervised learning, humans must provide correct answers

Balanced classes: Equal representation prevents bias

Data augmentation: Creating variations (rotations, crops, color shifts) expands effective dataset size

AlexNet trained on 1.2 million ImageNet images. GPT-3 trained on 570 GB of text data from books, websites, and articles (BBC Science Focus, 2024). Data quality often matters more than algorithm sophistication.

The Training Loop

Initialize weights: Start with random small values

Forward pass: Process a batch of training examples

Calculate loss: Measure prediction error using a loss function

Backpropagation: Compute gradients showing how to adjust weights

Update weights: Apply gradients using an optimizer (Adam, SGD, etc.)

Repeat: Iterate millions of times until performance plateaus

Training a state-of-the-art model can take weeks on dozens of GPUs. Tesla's Full Self-Driving system requires 70,000 GPU hours per training cycle, processing 1.5 petabytes of driving data from over 4 million vehicles (FredPope, June 2025).

Hyperparameter Tuning

Hyperparameters—settings like learning rate, batch size, and network depth—dramatically affect performance. Finding optimal values requires extensive experimentation. Common approaches include:

Grid search: Try all combinations of predefined values

Random search: Sample random configurations

Bayesian optimization: Use probabilistic models to guide search

Neural architecture search: Use AI to design AI architectures

Preventing Overfitting

Overfitting occurs when a network memorizes training data instead of learning general patterns. It performs perfectly on training data but fails on new examples. Prevention strategies:

Regularization: Add penalties for complex weights (L1, L2 regularization)

Dropout: Randomly deactivate neurons during training, forcing redundancy

Early stopping: Stop training when validation performance plateaus

Data augmentation: Artificially expand the training set

Cross-validation: Test on multiple data splits to ensure generalization

AlexNet used dropout and aggressive data augmentation, increasing its effective training set by a factor of 2048 (Krizhevsky et al., 2012).

Real-World Case Studies: DNNs in Action

Case Study 1: AlphaFold Solves the Protein Folding Problem

Challenge: Proteins are life's molecular machines. Their 3D structure determines their function. Determining one structure experimentally took months to years and cost hundreds of thousands of dollars. With billions of proteins to map, this was impossibly slow.

Solution: Google DeepMind developed AlphaFold, a deep neural network that predicts protein structures from amino acid sequences. AlphaFold 2 (2020) achieved a median Global Distance Test score of 92.4, comparable to experimental accuracy.

Results:

Predicted 200+ million protein structures—nearly all cataloged proteins known to science

2+ million users in 190 countries accessed the AlphaFold Protein Structure Database

Potentially saved millions of dollars and hundreds of millions of years in research time (DeepMind, 2024)

Won the 2024 Nobel Prize in Chemistry for Demis Hassabis and John Jumper

Impact: AlphaFold 3 (May 2024) extended predictions to protein complexes with DNA, RNA, and ligands, showing 50%+ accuracy improvements for protein-molecule interactions. This accelerates drug discovery, vaccine development, and understanding of disease mechanisms. Isomorphic Labs now collaborates with pharmaceutical companies to design new therapies using AlphaFold predictions (Google DeepMind, November 2024).

Source: Abramson et al., Nature 630, 493-500 (May 2024); DeepMind official announcements

Case Study 2: Tesla's Neural Network Revolution in Self-Driving

Challenge: Building a self-driving system that works everywhere without expensive LiDAR sensors or pre-mapped roads. Traditional rule-based approaches required programming every possible driving scenario—an impossible task.

Solution: Tesla's Full Self-Driving (FSD) system uses 48 distinct neural networks trained end-to-end on real driving data. Version 12 (January 2024) replaced over 300,000 lines of explicit C++ code with neural networks trained on millions of video clips.

Architecture:

8 cameras providing 360-degree coverage

Neural networks transform 2D images into 3D spatial understanding using Bird's Eye View transformations

Training uses 70,000 GPU hours per cycle on 1.5 petabytes of data from 4+ million Tesla vehicles

Networks learn from human driving behavior rather than following programmed rules

Results:

Miles between critical interventions improved 100× with version 12.5 (Q3 2024 earnings call)

FSD v13 expects 1,000× improvement

System develops emergent behaviors never explicitly programmed—understanding social driving cues, navigating construction zones, making judgment calls

Powers 4+ million Tesla vehicles globally

Impact: Tesla's approach proves that vision-only neural networks can achieve autonomous driving without LiDAR or HD maps. The same architecture will power Optimus humanoid robots, demonstrating DNNs as general-purpose learning engines (FredPope, June 2025).

Source: Tesla FSD release notes; Autoweek (January 2024); Applying AI (July 2025)

Case Study 3: ChatGPT Transforms Human-AI Interaction

Challenge: Previous AI chatbots were rigid, context-unaware, and frustrating. Most people found them useless beyond simple queries. Bridging natural language understanding with useful, coherent responses required breakthrough architectures.

Solution: OpenAI's GPT models use transformer-based deep neural networks trained on vast text corpora. GPT-4 (2023) contains hundreds of billions of parameters, learning language patterns, world knowledge, and reasoning abilities from internet text, books, and articles.

Results:

ChatGPT reached 100 million users in 2 months—fastest-growing app in history at the time

800 million weekly active users as of September 2025 (up from 300 million in December 2024)

2.5 billion daily prompts processed by July 2025

10 million paying subscribers to ChatGPT Plus

92% of Fortune 500 companies use OpenAI products

Business Impact:

OpenAI revenue: $3.7 billion in 2024, projected $12.7 billion in 2025

$10 billion annual recurring revenue reached June 2025

Company valued at $157 billion as of October 2025

Teams using GPT-4 show 12% productivity gains and 25% faster task completion (Intelliarts, September 2025)

Adoption:

26% of U.S. teens use ChatGPT for schoolwork (up from 13% in 2023)

20% of U.S. adults use ChatGPT for work-related tasks

53% of U.S. adults who use AI tools choose ChatGPT—the most popular option

Source: Backlinko (August 2025); NerdyNav (October 2025); AllAboutAI (October 2025)

Industry Applications and Market Growth

Market Size and Projections

The deep learning revolution is reshaping global economies. Multiple research firms project explosive growth:

Source | 2024 Market Size | 2030-2034 Projection | CAGR |

Grand View Research | $96.8B | $526.7B (2030) | 31.8% |

Precedence Research | $93.72B | $1,420.29B (2034) | 31.24% |

Fortune Business Insights | $24.53B | $279.60B (2032) | 35.0% |

Market Research Future | $42.60B (DNNs specifically) | $692.13B (2034) | 32.15% |

North America dominated with 38-40% market share in 2024, driven by established IT infrastructure, venture capital, and dense AI talent (Grand View Research, 2024; Precedence Research, 2025).

Industry-Specific Applications

Healthcare (Fastest-growing segment):

Medical image analysis for cancer detection

Drug discovery and molecule design

Patient outcome prediction

Genomics and personalized medicine

Surgical robot guidance

The European Union allocated €7.5 billion (approximately $7.9 billion) for 2021-2027 to encourage AI and deep learning in healthcare, autonomous vehicles, and smart manufacturing (IMARC Group, 2025).

Automotive:

Autonomous driving systems

Predictive maintenance

Quality control in manufacturing

Supply chain optimization

Finance:

Fraud detection and prevention

Algorithmic trading

Credit risk assessment

Customer service chatbots

Retail (Significant 2024 segment):

Demand forecasting

Personalized recommendations

Visual search

Inventory optimization

Customer behavior analysis

Deep learning assists retailers by analyzing customer preferences from previous activities and offering personalized results (Precedence Research, January 2025).

Technology & IT (33% market share in 2024):

Natural language processing

Computer vision systems

Recommendation engines

Cybersecurity threat detection

Manufacturing (34.6% projected CAGR):

Industry 4.0 initiatives

Condition monitoring delivering measurable yield gains

IoT sensor data processing

Quality inspection

Predictive equipment maintenance (Mordor Intelligence, June 2025)

Advantages and Limitations of DNNs

Advantages

Automatic Feature Learning DNNs discover relevant patterns automatically. Traditional machine learning required expert feature engineering—manually designing what the algorithm should look for. DNNs learn optimal features from raw data, often finding patterns humans never considered.

Scalability with Data More data generally improves DNN performance. As datasets grow, DNNs continue learning and refining, while traditional algorithms plateau. This makes DNNs ideal for big data applications.

Versatility Across Domains The same fundamental architecture adapts to images, text, audio, and tabular data. Transfer learning lets networks trained on one task jump-start learning on related tasks.

Superhuman Performance DNNs now exceed human accuracy on many specialized tasks—medical image analysis, game playing (chess, Go), protein structure prediction, and pattern recognition in massive datasets.

Continuous Improvement Networks improve through retraining on new data. Tesla's FSD system updates over-the-air, continuously refining from fleet-wide driving experiences.

Limitations

Data Hunger DNNs need large labeled datasets—often thousands to millions of examples. Acquiring and labeling this data is expensive and time-consuming. Small-data domains remain challenging.

Computational Cost Training large DNNs requires powerful GPUs costing thousands to hundreds of thousands of dollars. ChatGPT costs approximately $700,000 daily to operate, with each query costing around $0.36 (WiserNotify, March 2025). OpenAI expects $5 billion in operational expenses for 2024 (New York Times, 2024).

Black Box Problem DNNs make decisions through billions of mathematical operations. Understanding why a network made a specific prediction is extremely difficult. This opacity raises concerns in high-stakes applications like medical diagnosis or loan approvals.

Overfitting Risk Without proper regularization, DNNs memorize training data rather than learning generalizable patterns. This creates models that fail spectacularly on new examples despite perfect training performance.

Adversarial Vulnerability Small, carefully crafted input perturbations—invisible to humans—can fool DNNs into wildly incorrect predictions. Adding imperceptible noise to a stop sign image might make a self-driving car see it as a speed limit sign.

Energy Consumption Large model training and inference consume massive electricity. ChatGPT's monthly electricity consumption equals nearly 175,000 individuals in a mid-sized city (Science Direct, 2024). Environmental concerns are growing.

Bias Amplification DNNs learn from data that may contain societal biases. Without careful attention, networks can perpetuate or amplify discrimination in hiring, lending, criminal justice, and other sensitive areas.

Common Myths vs Facts About DNNs

Myth 1: DNNs Think Like Human Brains

Fact: Despite biological inspiration, DNNs bear little resemblance to real brains. Biological neurons are far more complex, use different signaling mechanisms, and exhibit plasticity that artificial neurons lack. DNNs perform specific narrow tasks through statistical pattern matching, not general intelligence.

Myth 2: More Layers Always Mean Better Performance

Fact: Deeper isn't automatically better. Very deep networks face vanishing gradients, require exponentially more data and compute, and may overfit. The optimal depth depends on the problem, dataset size, and available resources. Sometimes a well-designed shallow network outperforms a poorly designed deep one.

Myth 3: DNNs Need Minimal Data

Fact: While transfer learning and few-shot learning improve data efficiency, most DNNs still require substantial training data. AlexNet used 1.2 million images. GPT-3 trained on hundreds of billions of words. Small datasets remain challenging unless you can leverage pre-trained models.

Myth 4: DNNs Are Infallible Once Trained

Fact: DNNs fail in numerous ways. They're vulnerable to adversarial attacks, struggle with distribution shift (data different from training), make overconfident wrong predictions, and lack common sense reasoning. Tesla's FSD requires constant driver supervision precisely because DNNs aren't reliable enough for full autonomy.

Myth 5: DNNs Will Soon Achieve General Intelligence

Fact: Current DNNs are narrow AI—expert at specific tasks but unable to generalize beyond training. ChatGPT can't drive cars. AlphaFold can't write poetry. True artificial general intelligence (AGI) remains speculative, with experts disagreeing whether current approaches can ever achieve it.

Myth 6: DNNs Understand What They Learn

Fact: DNNs optimize mathematical functions. They have no understanding, consciousness, or awareness. When ChatGPT describes a sunset, it's generating statistically likely word sequences based on patterns in training data, not experiencing or comprehending beauty.

Building Your First DNN: A Practical Framework

Prerequisites Checklist

Basic Python programming

Understanding of linear algebra (matrices, vectors)

Calculus fundamentals (derivatives, gradients)

Statistics basics (probability, distributions)

Access to Python environment with libraries (PyTorch or TensorFlow, NumPy, Pandas)

Step 1: Define the Problem Clearly

Questions to answer:

What are you predicting? (Classification, regression, generation)

What input data do you have?

How much labeled data is available?

What accuracy is sufficient?

What are computational constraints?

Warning: Many problems don't need DNNs. If simpler methods (logistic regression, random forests) work adequately, use them. DNNs shine when relationships are complex, non-linear, and traditional methods plateau.

Step 2: Gather and Prepare Data

Data collection:

Aim for 1,000+ examples minimum; 10,000+ preferred

Ensure data represents real-world conditions

Check for class imbalance

Verify label quality—mislabeled data destroys performance

Preprocessing:

Normalize/standardize numerical features (zero mean, unit variance)

Handle missing values (imputation or removal)

Encode categorical variables

Split into training (70-80%), validation (10-15%), and test (10-15%) sets

Step 3: Design Architecture

For beginners:

Start simple—2-3 hidden layers with 100-500 neurons each

Use ReLU activation for hidden layers

Use softmax for multi-class classification, sigmoid for binary, linear for regression

Add dropout (0.2-0.5) between layers to prevent overfitting

Common architectures:

Image tasks: Start with pre-trained CNN (ResNet, EfficientNet) via transfer learning

Text tasks: Use transformer models (BERT, GPT) as feature extractors

Tabular data: Fully connected networks with 2-5 hidden layers

Step 4: Configure Training

Loss functions:

Cross-entropy for classification

Mean squared error for regression

Custom losses for specialized tasks

Optimizers:

Adam (adaptive learning rate) works well as default

SGD with momentum for fine-tuning

Learning rate: Start with 0.001, adjust based on validation loss

Batch size:

32-256 examples per batch (smaller for limited memory, larger for stability)

Affects training speed and generalization

Step 5: Train and Monitor

Training loop:

Iterate for multiple epochs (complete passes through data)

Monitor both training and validation loss

Save checkpoints periodically

Implement early stopping when validation loss stops improving

Red flags:

Training loss decreases but validation increases = overfitting

Both losses stuck at high values = underfitting or learning rate issues

Loss becomes NaN = learning rate too high or numerical instability

Step 6: Evaluate and Iterate

Testing:

Evaluate on held-out test set (never seen during training/validation)

Use appropriate metrics: accuracy, precision, recall, F1 score, AUC

Analyze failure cases to understand weaknesses

Improvement strategies:

Add more data (most effective if possible)

Try data augmentation

Adjust architecture (add/remove layers, change neurons)

Tune hyperparameters (learning rate, batch size, regularization)

Use transfer learning if training from scratch underperforms

Step 7: Deploy Responsibly

Production considerations:

Inference speed and latency

Model size and memory requirements

Monitoring for distribution drift

A/B testing against baseline

Regular retraining schedule

Ethical requirements:

Document data sources and limitations

Test for bias across demographic groups

Provide uncertainty estimates where appropriate

Enable human oversight for high-stakes decisions

Future of Deep Neural Networks

Near-Term Trends (2025-2027)

Efficiency Improvements Research focuses on achieving better performance with fewer parameters and less compute. Techniques like model compression, pruning, and quantization enable deployment on edge devices—smartphones, IoT sensors, autonomous vehicles.

Multimodal Models Future systems will seamlessly integrate text, images, audio, and video. GPT-4V already combines vision and language. Gemini handles text, images, audio, and video in a single model. This convergence enables richer, more natural AI interactions.

Edge AI Moving computation from cloud to device protects privacy, reduces latency, and lowers costs. Specialized chips (Apple's Neural Engine, Google's TPU) enable sophisticated DNNs on smartphones and embedded systems.

Mid-Term Developments (2027-2030)

Scientific Discovery Acceleration Following AlphaFold's success, DNNs will tackle other grand challenges: protein design, drug discovery, materials science, fusion energy optimization, climate modeling. The 2024 Nobel Prize for AlphaFold signals scientific community acceptance of AI-driven discovery.

Autonomous Systems Maturity Self-driving vehicles, delivery robots, and warehouse automation will achieve Level 4 autonomy (no human required in specific conditions). Tesla aims for unsupervised FSD and robotaxi services by 2025-2026. Regulatory approvals will lag technology capabilities.

Personalized AI Models will adapt to individual users—learning preferences, communication styles, and domains of expertise. This personalization raises privacy concerns but enables more helpful assistants.

Neural Architecture Search AI will design better AI. Automated architecture search optimizes network structures, discovering novel designs humans wouldn't imagine. This could accelerate progress exponentially.

Long-Term Possibilities (2030+)

Brain-Computer Interfaces Companies like Neuralink work toward direct neural interfaces. Combined with DNNs, this could enable thought-controlled devices, enhanced cognition, and memory augmentation. Ethical implications are profound.

Artificial General Intelligence? Whether current DNN approaches can achieve human-level general intelligence remains hotly debated. Skeptics argue fundamental limitations require entirely new paradigms. Optimists see scaling and architecture improvements continuing toward AGI. Prediction timelines vary from 5 to 50+ years or "never."

Energy-Efficient Computing Neuromorphic chips mimicking biological neurons could reduce DNN energy consumption by orders of magnitude. Companies like Intel (Loihi) and IBM (TrueNorth) prototype spike-based processors potentially enabling vastly larger models with reasonable power budgets.

Challenges Ahead

Alignment and Safety As DNNs grow more capable, ensuring they behave as intended becomes critical. Misaligned powerful AI could cause catastrophic harm, whether through accidents or malicious use. The field of AI safety research addresses these concerns.

Regulation and Governance Governments worldwide develop AI regulations. The EU's AI Act, China's algorithm regulations, and U.S. executive orders establish frameworks. Balancing innovation, safety, fairness, and national competitiveness challenges policymakers.

Environmental Impact Training large models consumes megawatt-hours of electricity. As AI proliferates, carbon footprint concerns grow. Sustainable AI practices—efficient algorithms, renewable energy, carbon accounting—will become essential.

Economic Disruption DNNs will automate knowledge work at unprecedented scale. This threatens millions of jobs while creating new opportunities. Societies must address workforce transitions, education system reforms, and potential inequality increases.

Frequently Asked Questions

1. What's the difference between deep learning and machine learning?

Machine learning is the broad field of algorithms that learn from data without explicit programming. Deep learning is a subset using neural networks with multiple layers. All deep learning is machine learning, but not all machine learning is deep learning. Traditional ML includes decision trees, support vector machines, and random forests alongside neural networks.

2. How many layers make a network "deep"?

No universal threshold exists, but generally 3+ hidden layers qualify as deep. Modern DNNs often have 50-200 layers. Depth enables hierarchical feature learning—early layers detect simple patterns, deeper layers combine these into abstract concepts.

3. Can DNNs run on regular computers or do they need special hardware?

Small DNNs run on laptops or desktop CPUs. Serious training requires GPUs (graphics cards) which parallelize matrix operations. Cloud services (AWS, Google Cloud, Azure) rent GPU instances hourly. State-of-the-art models need clusters of specialized chips (Google's TPUs, Nvidia's A100/H100 GPUs) costing millions.

4. How much data do I need to train a DNN?

It depends on problem complexity and transfer learning availability. From scratch: image classification needs 10,000+ examples; language models need billions of words. With transfer learning (starting from pre-trained models): sometimes hundreds of examples suffice. Quality matters more than quantity—1,000 clean, labeled examples beat 100,000 noisy ones.

5. Why are DNNs called "black boxes"?

DNNs make decisions through billions of mathematical operations across millions of parameters. Tracing why specific inputs produced specific outputs is extremely difficult. Researchers develop interpretability techniques (attention visualization, feature importance, LIME, SHAP) but DNNs remain far less transparent than rule-based systems or decision trees.

6. Can DNNs forget what they learned?

DNNs don't forget like humans. However, "catastrophic forgetting" occurs when training on new tasks overwrites knowledge from previous tasks. Continual learning research addresses this, using techniques like elastic weight consolidation or rehearsal of old examples alongside new ones.

7. What's the difference between supervised, unsupervised, and reinforcement learning?

Supervised: Network learns from labeled examples (input-output pairs). Used for classification and regression. Requires expensive human labeling.

Unsupervised: Network finds patterns in unlabeled data. Used for clustering, dimensionality reduction, and anomaly detection.

Reinforcement: Network learns by trial-and-error, receiving rewards/penalties. Used for game playing, robotics, and decision-making under uncertainty.

8. How long does training a DNN take?

Minutes to months depending on model size, dataset, and hardware. A simple network on a laptop: hours. GPT-3 training: weeks on thousands of GPUs. Tesla's FSD: 70,000 GPU hours per cycle. Ongoing research reduces training time through better algorithms and hardware.

9. Can I use pre-trained models instead of training from scratch?

Absolutely—this is called transfer learning and it's best practice. Models pre-trained on massive datasets (ImageNet for vision, internet text for language) already learned useful features. You fine-tune them on your specific task with far less data and compute. Hugging Face hosts thousands of pre-trained models ready for download.

10. Are DNNs vulnerable to hacking or manipulation?

Yes. Adversarial attacks add imperceptible input perturbations that fool DNNs. Data poisoning corrupts training data. Model stealing replicates networks through queries. Defense research develops robust training, input sanitization, and anomaly detection, but adversarial robustness remains an open problem.

11. How do I know if my DNN is overfitting?

Monitor training and validation loss during training. If training loss decreases while validation loss increases or plateaus, the model is overfitting—memorizing training data rather than learning generalizable patterns. Solutions: more data, data augmentation, regularization (dropout, weight decay), or simpler architecture.

12. What programming language should I use for DNNs?

Python dominates (95%+ of AI research and industry). Key libraries: PyTorch (research favorite, flexible), TensorFlow/Keras (production deployment, Google-backed), JAX (high-performance research). Other options: Julia, C++ (for production optimization). Start with Python and PyTorch or TensorFlow.

13. How much does it cost to train a large DNN?

Varies enormously. Simple models: free (laptop GPU). GPT-3 training: $4-12 million estimated. GPT-4 training likely cost $100+ million. DeepSeek recently trained a 671-billion-parameter model for only $5.6 million using efficient methods (Mordor Intelligence, January 2025). Cloud GPU rental: $1-10+ per hour depending on hardware.

14. Can DNNs be biased? How do I prevent this?

DNNs learn biases present in training data. If data contains societal prejudices, the model will too—sometimes amplifying them. Prevention: diverse representative training data, fairness metrics, bias audits, debiasing techniques, and diverse development teams. Eliminating bias completely remains challenging.

15. What careers involve working with DNNs?

Machine learning engineer, deep learning researcher, data scientist, AI product manager, research scientist, computer vision engineer, NLP engineer, robotics engineer, AI ethicist, and ML operations (MLOps) specialist. Demand for these roles grows rapidly—92% of Fortune 500 companies now use AI tools (OpenAI, 2025).

16. Will DNNs replace human jobs?

DNNs will automate many tasks, displacing some jobs while creating new ones. Historically, technology shifts eliminate old roles but generate different opportunities. Uniquely human skills—creativity, emotional intelligence, complex problem-solving, ethical judgment—remain valuable. Adaptability and continuous learning become essential.

17. How can I stay current with DNN research?

Follow conferences (NeurIPS, ICML, CVPR, ACL), read arXiv preprint server, subscribe to newsletters (The Batch, Import AI, Papers with Code), take online courses (Coursera, fast.ai), and implement papers in code. Join communities (r/MachineLearning, Twitter AI community, local meetups).

18. What's the environmental impact of training DNNs?

Large model training consumes megawatt-hours of electricity, generating significant carbon emissions. GPT-3 training emitted ~552 metric tons CO2 equivalent. Mitigation: efficient algorithms, model compression, renewable energy, and carbon offsets. Smaller targeted models often suffice instead of ever-larger ones.

19. Can DNNs be creative or are they just mimicking patterns?

Philosophical question without consensus. DNNs generate novel combinations of learned patterns—writing poetry, composing music, creating art. Whether this constitutes "creativity" or sophisticated pattern recombination depends on your definition of creativity. They certainly produce outputs humans call creative, but lack consciousness or intentionality.

20. What's next after deep learning?

Candidates include neuromorphic computing (brain-inspired hardware), quantum machine learning, symbolic AI integration (combining logic with learning), and entirely new paradigms we haven't imagined. History suggests breakthroughs come unexpectedly. Current deep learning may be incremental stepping stone or fundamental framework for decades. Time will tell.

Key Takeaways

Deep neural networks are multi-layered AI systems that automatically learn hierarchical features from raw data, eliminating manual feature engineering.

The deep learning market exploded from $96.8 billion (2024) to a projected $526.7 billion by 2030, with North America holding 38-40% market share.

AlexNet's 2012 ImageNet victory (15.3% error vs 26.2% runner-up) triggered the modern deep learning revolution by proving GPUs, big data, and deep architectures could achieve superhuman visual recognition.

Three breakthrough case studies demonstrate DNN impact: AlphaFold predicted 200+ million protein structures (winning 2024 Nobel Prize), Tesla's FSD replaced 300,000 lines of code with neural networks across 4+ million vehicles, and ChatGPT grew to 800 million weekly users (92% Fortune 500 adoption).

Key DNN architectures serve different domains: CNNs for images/video, RNNs/LSTMs for sequences, transformers for language (powering ChatGPT/GPT), GANs and diffusion models for content generation.

Training requires massive data (thousands to millions of examples), substantial compute (GPUs/TPUs), careful hyperparameter tuning, and regularization to prevent overfitting.

Major advantages include automatic feature learning, scalability with data, versatility across domains, and superhuman specialized performance.

Critical limitations involve data hunger, computational costs ($700,000 daily for ChatGPT), black box opacity, adversarial vulnerability, and bias amplification from training data.

Transfer learning enables practical applications by fine-tuning pre-trained models (ImageNet for vision, GPT for language) with far less data and compute than training from scratch.

Future developments focus on efficiency improvements, multimodal integration, edge deployment, scientific discovery acceleration, and addressing challenges in alignment, regulation, sustainability, and economic disruption.

Actionable Next Steps

Learn the fundamentals: Complete Andrew Ng's "Deep Learning Specialization" on Coursera or fast.ai's "Practical Deep Learning for Coders" course. Both are free or low-cost and provide hands-on experience.

Set up your environment: Install Python, PyTorch or TensorFlow, and Jupyter Notebooks on your computer. Alternatively, use free cloud platforms like Google Colab or Kaggle Notebooks that provide GPU access.

Start with transfer learning: Rather than training from scratch, use Hugging Face Transformers library to fine-tune pre-trained models on your specific task. This achieves strong results with minimal data and compute.

Join the community: Participate in Kaggle competitions to practice on real datasets with evaluation metrics. Engage in r/MachineLearning, follow AI researchers on Twitter/X, and attend local meetups or online conferences.

Build a portfolio project: Solve a problem you care about using DNNs. Document your process—data collection, model design, training, evaluation—on GitHub. This demonstrates practical skills to employers.

Stay current: Read 2-3 papers weekly from arXiv.org (filter by cs.AI, cs.LG, cs.CV). Implement interesting papers in code—"learning by building" solidifies understanding far better than passive reading.

Understand the ethics: Study AI fairness, transparency, and safety. Take courses on responsible AI. Consider societal implications of your work. Technical skills without ethical awareness cause harm.

Experiment systematically: Track experiments carefully (use tools like Weights & Biases or MLflow). Document what works and what fails. Understanding why matters more than getting lucky once.

Contribute to open source: Submit improvements to popular libraries, report bugs, write documentation. This builds reputation and understanding of production-quality code.

Specialize strategically: After mastering basics, focus on a domain matching your interests—computer vision, NLP, reinforcement learning, healthcare AI, etc. Depth differentiates you from generalists.

Glossary

Activation Function: Mathematical function applied to neuron output introducing non-linearity. Common types: ReLU, sigmoid, tanh, softmax.

Backpropagation: Algorithm for calculating gradients of loss function with respect to network weights, enabling learning through gradient descent.

Batch: Subset of training examples processed together before updating weights. Batch size affects training speed and stability.

Bias: Constant term added to weighted sum in each neuron, allowing network to learn patterns offset from zero.

Convolutional Neural Network (CNN): Architecture using convolutional layers to detect spatial patterns, primarily for image/video data.

Dropout: Regularization technique randomly deactivating neurons during training to prevent overfitting and encourage redundancy.

Epoch: One complete pass through the entire training dataset. Models train for multiple epochs.

Feature: Measurable property of input data. DNNs automatically learn features rather than requiring manual engineering.

Forward Pass: Process of computing network output from input by passing data through layers sequentially.

Gradient: Mathematical derivative showing how to adjust weights to reduce loss. Computed via backpropagation.

Hyperparameter: Configuration setting chosen before training (learning rate, batch size, layer count) rather than learned from data.

Loss Function: Measures difference between network predictions and correct answers. Training minimizes this loss.

Neural Network: Computational model with interconnected artificial neurons organized in layers, learning from data through weight adjustments.

Neuron: Basic processing unit receiving inputs, applying weights and activation function, producing output.

Overfitting: When network memorizes training data rather than learning generalizable patterns, performing poorly on new examples.

Parameter: Learned value (weights and biases) adjusted during training to minimize loss. Modern DNNs have millions to billions of parameters.

Regularization: Techniques preventing overfitting by constraining model complexity. Examples: L1/L2 penalties, dropout, early stopping.

ReLU (Rectified Linear Unit): Popular activation function: f(x) = max(0, x). Faster training than sigmoid/tanh.

Transfer Learning: Using network pre-trained on large dataset as starting point for related task, requiring less data and compute.

Transformer: Architecture using self-attention mechanisms to process sequences in parallel. Powers modern language models like GPT.

Validation Set: Data subset used to evaluate model during training without being part of training itself, detecting overfitting.

Weight: Learned parameter determining connection strength between neurons. Training adjusts weights to minimize loss.

Sources and References

Market Research and Statistics

Grand View Research. (2024). Deep Learning Market Size And Share | Industry Report 2030. Retrieved from https://www.grandviewresearch.com/industry-analysis/deep-learning-market

Precedence Research. (January 31, 2025). Deep Learning Market Size To Hit USD 1420.29 Bn By 2034. Retrieved from https://www.precedenceresearch.com/deep-learning-market

Precedence Research. (October 6, 2025). Neural Network Market Size, Share and Trends 2025 to 2034. Retrieved from https://www.precedenceresearch.com/neural-network-market

Fortune Business Insights. (2024). Deep Learning Market Growth, Share | Forecast [2032]. Retrieved from https://www.fortunebusinessinsights.com/deep-learning-market-107801

Market Research Future. (2024). Deep Learning Neural Networks DNN Market Growth 2034. Retrieved from https://www.marketresearchfuture.com/reports/deep-learning-neural-networks-market-35651

Mordor Intelligence. (June 22, 2025). Neural Network Software Market Size, Forecast, Growth Drivers 2030. Retrieved from https://www.mordorintelligence.com/industry-reports/neural-network-software-market

IMARC Group. (2025). Deep Learning Market Size, Share, Trends, Report 2033. Retrieved from https://www.imarcgroup.com/deep-learning-market

AlphaFold and Protein Structure Prediction

Abramson, J., et al. (May 2024). "Accurate structure prediction of biomolecular interactions with AlphaFold 3." Nature 630, 493-500. DOI: 10.1038/s41586-024-07487-w

Jumper, J., et al. (July 2021). "Highly accurate protein structure prediction with AlphaFold." Nature 596, 583-589.

Google DeepMind. (November 11, 2024). AlphaFold 3 predicts the structure and interactions of all of life's molecules. Retrieved from https://blog.google/technology/ai/google-deepmind-isomorphic-alphafold-3-ai-model/

Google DeepMind. (2024). AlphaFold. Retrieved from https://deepmind.google/science/alphafold/

Nature. (October 9, 2024). Chemistry Nobel goes to developers of AlphaFold AI that predicts protein structures. Retrieved from https://www.nature.com/articles/d41586-024-03214-7

Tesla Full Self-Driving and Autonomous Vehicles

FredPope.com. (June 24, 2025). Tesla's Neural Network Revolution: How Full Self-Driving Replaced 300,000 Lines of Code with AI. Retrieved from https://www.fredpope.com/blog/machine-learning/tesla-fsd-12

Autoweek. (January 26, 2024). Check Out How Tesla Is Betting on AI in Latest FSD Update. Retrieved from https://www.autoweek.com/news/a46535912/tesla-fsd-ai-neural-networks-update/

Applying AI. (July 8, 2025). Decoding Tesla's Core AI and Hardware Architecture: A CEO's Perspective. Retrieved from https://applyingai.com/2025/07/decoding-teslas-core-ai-and-hardware-architecture-a-ceos-perspective/

OpenTools AI. (April 19, 2025). Tesla's Revamped FSD v12: Shifting from C++ to Python and Neural Networks. Retrieved from https://opentools.ai/news/teslas-revamped-fsd-v12-shifting-from-c-to-python-and-neural-networks

Electrek. (October 2025). Tesla releases FSD v14, first major update in a year. Retrieved from https://electrek.co/2025/10/07/tesla-fsd-v14-release-notes/

ChatGPT and Large Language Models

OpenAI. (2025). How people are using ChatGPT. Retrieved from https://openai.com/index/how-people-are-using-chatgpt/

Backlinko. (August 27, 2025). ChatGPT Statistics 2025: How Many People Use ChatGPT? Retrieved from https://backlinko.com/chatgpt-stats

NerdyNav. (October 2025). Latest ChatGPT Statistics: 800M+ Users, Revenue. Retrieved from https://nerdynav.com/chatgpt-statistics/

AllAboutAI. (October 2025). How Many People Use ChatGPT Daily? (2025 Statistics). Retrieved from https://www.allaboutai.com/resources/how-many-people-use-chatgpt-daily/

DemandSage. (October 2025). Latest ChatGPT Users Stats 2025 (Growth & Usage Report). Retrieved from https://www.demandsage.com/chatgpt-statistics/

Digital Silk. (May 30, 2025). Number Of ChatGPT Users In 2025: Stats, Usage & Impact. Retrieved from https://www.digitalsilk.com/digital-trends/number-of-chatgpt-users/

Business of Apps. (October 2025). ChatGPT Revenue and Usage Statistics (2025). Retrieved from https://www.businessofapps.com/data/chatgpt-statistics/

Intelliarts. (September 12, 2025). 100 ChatGPT Statistics to Know in 2025 & Its Future Trends. Retrieved from https://intelliarts.com/blog/chatgpt-statistics/

AlexNet and Deep Learning History

Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). "ImageNet Classification with Deep Convolutional Neural Networks." Advances in Neural Information Processing Systems 25 (NIPS 2012).

IEEE Spectrum. (March 25, 2025). How AlexNet Transformed AI and Computer Vision Forever. Retrieved from https://spectrum.ieee.org/alexnet-source-code

Pinecone. (2024). AlexNet and ImageNet: The Birth of Deep Learning. Retrieved from https://www.pinecone.io/learn/series/image-search/imagenet/

The Turing Post. (April 14, 2025). How ImageNet, AlexNet and GPUs Changed AI Forever. Retrieved from https://www.turingpost.com/p/cvhistory6

Computer History Museum. (2025). AlexNet Source Code Release. GitHub repository. Retrieved from CHM's GitHub.

Technical Resources and Documentation

ScienceDirect. (February 18, 2025). "Advancements in protein structure prediction: A comparative overview of AlphaFold and its derivatives." Retrieved from https://www.sciencedirect.com/science/article/abs/pii/S0010482525001921

ScienceDaily. (November 4, 2024). AI tool AlphaFold can now predict very large proteins. Linköping University. Retrieved from https://www.sciencedaily.com/releases/2024/11/241104112352.htm

Dive into Deep Learning. (2024). 8.1. Deep Convolutional Neural Networks (AlexNet). Retrieved from http://d2l.ai/chapter_convolutional-modern/alexnet.html

Additional Industry Sources

WiserNotify. (March 13, 2025). The Latest ChatGPT Statistics and User Trends (2022-2025). Retrieved from https://wisernotify.com/blog/chatgpt-users/

First Page Sage. (October 2025). ChatGPT Usage Statistics: October 2025. Retrieved from https://firstpagesage.com/seo-blog/chatgpt-usage-statistics/

SkyQuest Technology. (2024). Deep Learning Market Growth Drivers & Forecasts 2024-2031. Retrieved from https://www.skyquestt.com/report/deep-learning-market

$50

Product Title

Product Details goes here with the simple product description and more information can be seen by clicking the see more button. Product Details goes here with the simple product description and more information can be seen by clicking the see more button

$50

Product Title

Product Details goes here with the simple product description and more information can be seen by clicking the see more button. Product Details goes here with the simple product description and more information can be seen by clicking the see more button.

$50

Product Title

Product Details goes here with the simple product description and more information can be seen by clicking the see more button. Product Details goes here with the simple product description and more information can be seen by clicking the see more button.

Comments