What is a Recurrent Neural Network? Complete RNN Guide

- Muiz As-Siddeeqi

- Nov 16, 2025

- 26 min read

Imagine teaching a computer to read a story word by word. Unlike standard feedforward neural networks, which treat each input in isolation, RNNs remember what came before. This memory makes them well suited to understanding language, predicting stock prices, and even detecting fraud.


TL;DR

RNNs are neural networks with memory that process data step by step, remembering previous inputs

Market is exploding: Neural network software market valued at $34.76 billion in 2025, projected to reach $139.86 billion by 2030

Real-world success: Google reduced speech recognition models from 450MB to 80MB while maintaining accuracy

Three main types: Vanilla RNNs, LSTMs (best for long sequences), and GRUs (faster alternative)

Perfect for sequences: Text translation, speech recognition, time series prediction, and fraud detection

Recent breakthrough: Minimized RNNs (minGRU and minLSTM), introduced in 2024, trained 175x to 235x faster per step than traditional GRUs and LSTMs

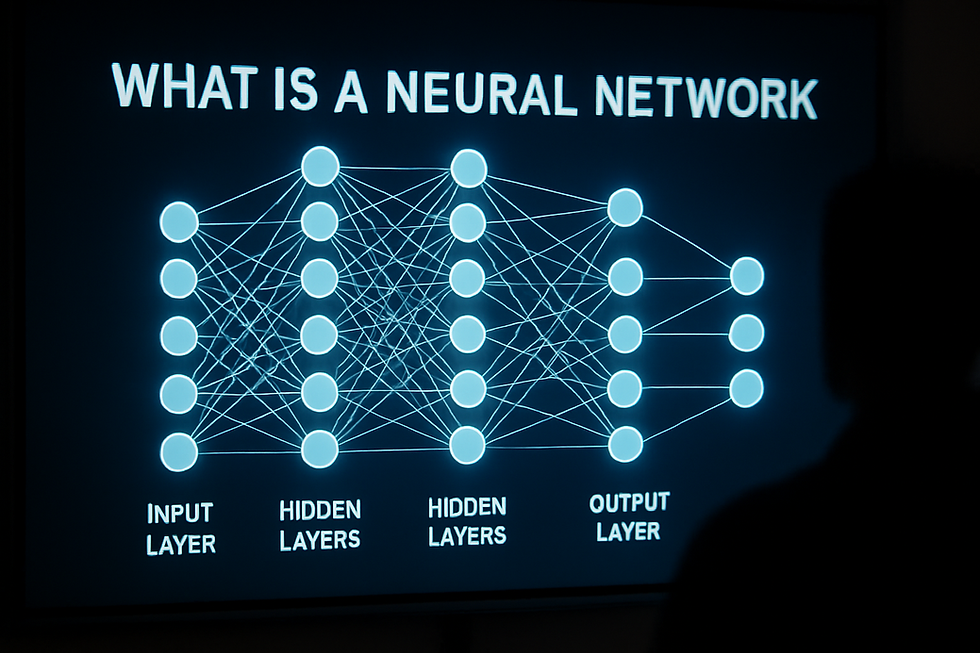

Recurrent Neural Networks (RNNs) are deep learning models designed to handle sequential data by maintaining memory of previous inputs through internal states. Unlike feedforward networks, RNNs process information step-by-step while retaining context, making them ideal for tasks involving time series, language, and sequential patterns.


Background & Core Definitions

What exactly is a Recurrent Neural Network?

Think of your brain reading this sentence. You don't process each word in isolation. Instead, you build understanding by connecting each word to what came before. RNNs work in a similar way.

According to research published in MDPI's Information journal in August 2024, "Recurrent neural networks (RNNs) are a class of deep learning models fundamentally designed to handle sequential data. Unlike feedforward neural networks, RNNs possess the unique feature of maintaining a memory of previous inputs by using their internal state to process sequences of inputs."

The breakthrough moment that changed everything

The breakthrough came in 1997, when Sepp Hochreiter and Jürgen Schmidhuber published a paper in the journal Neural Computation that solved a critical problem. Traditional RNNs could only remember things for a few steps. Imagine trying to remember a conversation from 10 minutes ago while having a new one: that's what early RNNs struggled with.

Their solution? Long Short-Term Memory (LSTM) networks. These special RNNs use "gates" – think of them as smart filters that decide what to remember, what to forget, and what to pay attention to.

Three key architectural breakthroughs

Vanilla RNNs (1986): Popularized by Rumelhart and colleagues' 1986 work on training networks with backpropagation. Simple but limited to short sequences because of the "vanishing gradient problem": the learning signal grows weaker and weaker as it travels back through time.

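LSTM Networks (1997): The game-changer. Modern LSTMs use three gates (the 1997 original had input and output gates; the forget gate was added by Gers, Schmidhuber and Cummins around 2000):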

Forget gate: Decides what old information to discard

Input gate: Controls what new information to store

Output gate: Determines what information to share

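Gated Recurrent Units - GRUs (2014): Kyunghyun Cho and his team created a simpler design with just two gates (update and reset). GRUs have fewer parameters than LSTMs, which usually makes them faster to train, and they often reach similar accuracy. The widely quoted "175x faster" figure from 2024 Mila and Borealis AI research refers to minGRU, a further-simplified variant, compared with a standard GRU.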

Current Market Landscape

The numbers that show explosive growth

The neural network software market, which includes RNN technologies, is expanding rapidly. It is valued at $34.76 billion in 2025 and projected to reach $139.86 billion by 2030, a 32.1% compound annual growth rate, according to Mordor Intelligence's 2025 report.
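As a quick check, the 32.1% figure follows from the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1, applied to the two figures above over the five years from 2025 to 2030. A minimal Python sketch (the function name `cagr` is just illustrative):

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1

# Market size in billions of US dollars: 2025 valuation and 2030 projection.
rate = cagr(34.76, 139.86, years=5)
print(f"{rate:.1%}")  # prints 32.1%
```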

Where the money is flowing

Investment is pouring in. Global AI venture capital funding hit $131.5 billion in 2024, representing a 52% increase from 2023. AI companies now capture 33% of all global venture funding, with January 2025 alone seeing $5.7 billion in AI-related investments.

Some standout funding rounds from 2025:

Scale AI: $14.3 billion investment from Meta

Thinking Machines Lab: $2 billion seed round

Harvey AI: $300 million Series D at $3 billion valuation

The job market explosion

Machine learning engineers, including those who specialize in RNNs, command impressive salaries. According to 2025 Glassdoor data, typical pay ranges from $125,000 to $197,000, with a national average of $156,281. Job postings for ML engineers grew 35% year-over-year, yet 75% of European employers struggled to fill AI roles in 2024.

Regional powerhouses emerge

North America leads with 38.06% market share, driven by Silicon Valley's venture capital ecosystem and advanced cloud infrastructure. OpenAI's revenue doubling to $10 billion in 2025 exemplifies this commercial maturity.

Asia-Pacific is the growth rocket with 35.7% CAGR through 2030. China, Japan, India, and South Korea are investing heavily in national AI cloud programs, projecting a $300 billion market by 2030.

Europe focuses on sovereignty. The EU is pursuing technological independence through sovereign-AI projects, with NVIDIA supplying over 3,000 exaflops of Blackwell clusters to European partners.

How RNNs Work: Key Mechanisms

The memory notebook analogy

Imagine you're a detective reading witness statements. With each new statement, you update your notebook with important clues while keeping track of the overall story. RNNs work much the same way: they maintain a "hidden state" that acts like this detective's notebook.

The mathematical magic simplified

At each step, an RNN follows this simple process:

Take new input (like a word in a sentence)

Combine it with memory from the previous step

Update the memory with this new information

Produce an output if needed

The mathematical formula looks scary but the concept is simple:

New memory = Function(Current input + Old memory)

Output = Function(New memory)
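For readers who want the notation behind those two lines, the vanilla (Elman-style) RNN update is usually written as below. This assumes tanh as the hidden activation and a linear output (a softmax is often applied on top for classification). Here x_t is the current input, h_{t-1} the old memory and h_t the new memory; the weight matrices W and biases b are learned during training and reused at every step.

```latex
\begin{aligned}
h_t &= \tanh\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right) \\
y_t &= W_{hy}\, h_t + b_y
\end{aligned}
```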

Three types that revolutionized AI

Basic RNNs: Like having a goldfish memory – can only remember recent things. They suffer from the "vanishing gradient problem" where learning signals become too weak over long sequences.

LSTM Networks: Like having a sophisticated filing system. Three gates control information flow:

The forget gate acts like a trash bin for irrelevant old information

The input gate decides which new information deserves storage

The output gate controls what gets shared with the next step
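To make the three gates concrete, here is a minimal sketch of one LSTM step in plain Python. It assumes the common modern formulation (forget, input and output gates plus a tanh candidate, as used by most deep learning libraries); the helper names (`affine`, `lstm_step`, `init_gate`) and the tiny random weights are hypothetical, and real projects would use a library such as PyTorch or Keras instead.

```python
import math
import random

# Illustrative sketch: helper names and toy weights are hypothetical.

def affine(W, U, b, x, h):
    """Compute W·x + U·h + b, with matrices stored as lists of rows."""
    return [
        sum(w * xi for w, xi in zip(W[i], x))
        + sum(u * hj for u, hj in zip(U[i], h))
        + b[i]
        for i in range(len(b))
    ]

def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-a)) for a in v]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step; returns the new hidden state h and cell state c."""
    f = sigmoid(affine(*params["forget"], x, h_prev))  # forget gate: trash or keep old cell contents
    i = sigmoid(affine(*params["input"], x, h_prev))   # input gate: how much new information to store
    g = [math.tanh(a) for a in affine(*params["candidate"], x, h_prev)]  # candidate values
    o = sigmoid(affine(*params["output"], x, h_prev))  # output gate: what to share with the next step
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c

def init_gate(n_in, n_hidden, rng):
    """Small random weights (W for the input, U for the recurrent state) and a zero bias."""
    W = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_hidden)]
    U = [[rng.uniform(-0.1, 0.1) for _ in range(n_hidden)] for _ in range(n_hidden)]
    return W, U, [0.0] * n_hidden

rng = random.Random(0)
n_in, n_hidden = 3, 4
params = {name: init_gate(n_in, n_hidden, rng)
          for name in ("forget", "input", "candidate", "output")}

h, c = [0.0] * n_hidden, [0.0] * n_hidden
for x in ([1.0, 0.0, 0.5], [0.2, -0.3, 0.9]):
    h, c = lstm_step(x, h, c, params)
```

The cell state `c` is the "filing system": it changes only through the forget and input gates, which is what lets information survive many steps.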

GRU Networks: The efficient alternative with just two gates. With fewer parameters than LSTMs, GRUs are usually cheaper to train and run while performing comparably, which makes them a good fit for real-time applications.
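For comparison, a minimal sketch of one GRU step in plain Python. It follows the convention of Cho et al. (2014), where an update gate near 1 keeps the old state; some libraries flip that convention, so treat this as one illustrative variant. The helper names and toy weights are hypothetical.

```python
import math
import random

# Illustrative sketch: helper names and toy weights are hypothetical.

def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-a)) for a in v]

def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def add(*vectors):
    """Element-wise sum of equally sized vectors."""
    return [sum(vals) for vals in zip(*vectors)]

def gru_step(x, h_prev, p):
    """One GRU step (Cho et al. convention: z near 1 keeps the old state)."""
    z = sigmoid(add(matvec(p["Wz"], x), matvec(p["Uz"], h_prev), p["bz"]))  # update gate
    r = sigmoid(add(matvec(p["Wr"], x), matvec(p["Ur"], h_prev), p["br"]))  # reset gate
    reset_h = [rj * hj for rj, hj in zip(r, h_prev)]  # reset gate hides parts of the past
    h_tilde = [math.tanh(a) for a in add(matvec(p["Wh"], x), matvec(p["Uh"], reset_h), p["bh"])]
    # Blend old state and candidate; unlike an LSTM there is no separate cell state.
    return [zj * hj + (1.0 - zj) * ht for zj, hj, ht in zip(z, h_prev, h_tilde)]

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
n_in, n_hidden = 3, 4
p = {}
for gate in ("z", "r", "h"):  # three weight sets, versus four in an LSTM
    p["W" + gate] = rand_matrix(n_hidden, n_in, rng)
    p["U" + gate] = rand_matrix(n_hidden, n_hidden, rng)
    p["b" + gate] = [0.0] * n_hidden

h = [0.0] * n_hidden
for x in ([1.0, 0.0, 0.5], [0.2, -0.3, 0.9]):
    h = gru_step(x, h, p)
```

With three weight sets instead of four, a GRU of the same input and hidden size has exactly three quarters of an LSTM's parameters, which is where much of its efficiency comes from.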

Processing sequences step by step

Unlike regular neural networks that see everything at once, RNNs process data like reading a book – one page at a time, building understanding as they go. This sequential processing enables them to:

Understand context: "Bank" means different things in "river bank" vs "money bank"

Predict next events: Forecast stock prices based on historical patterns

Detect anomalies: Spot unusual patterns in credit card transactions

Generate sequences: Create new text that sounds natural

Step-by-Step Guide to Understanding RNNs

Step 1: Start with the data

RNNs need sequential data – information that comes in order. Examples include:

Text: "The cat sat on the mat" (words in sequence)

Stock prices: Daily closing prices over months

Speech: Audio waves over time

Sensor data: Temperature readings every hour

Step 2: Initialize the memory

The RNN usually starts with a hidden state of all zeros: a blank notebook. As it reads each step, this state accumulates a running summary of the inputs seen so far. (What the network has learned during training lives in its weights, not in the hidden state.)

Step 3: Process step by step

For each piece of input:

Combine the new input with the current hidden state

Apply mathematical functions to create a new hidden state

Produce output if required for this step

Pass the updated hidden state to the next time step
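The steps above fit in a short loop. This is a minimal pure-Python sketch of a vanilla RNN forward pass, assuming tanh as the hidden activation, a linear output and a zero initial state; the function name `rnn_forward` and the toy weights are illustrative, not taken from any library.

```python
import math

def rnn_forward(xs, W_xh, W_hh, b_h, W_hy, b_y):
    """Run a vanilla (Elman-style) RNN over a sequence of input vectors.

    Returns the list of per-step outputs and the final hidden state.
    The same weights are reused at every time step.
    """
    n_hidden = len(b_h)
    h = [0.0] * n_hidden  # Step 2: start from a blank hidden state
    ys = []
    for x in xs:          # Step 3: one element of the sequence at a time
        pre = [
            sum(w * xi for w, xi in zip(W_xh[i], x))
            + sum(u * hj for u, hj in zip(W_hh[i], h))
            + b_h[i]
            for i in range(n_hidden)
        ]
        h = [math.tanh(a) for a in pre]  # combine input and old state into the new state
        y = [sum(w * hj for w, hj in zip(row, h)) + bias for row, bias in zip(W_hy, b_y)]
        ys.append(y)                     # output for this step
    return ys, h                         # h is what gets carried forward

# Hypothetical toy weights: 1 input feature, 2 hidden units, 1 output.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]
W_hy = [[1.0, -1.0]]
b_y = [0.0]

ys, h = rnn_forward([[1.0], [0.5], [-1.0]], W_xh, W_hh, b_h, W_hy, b_y)
```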

Step 4: Learn from mistakes

During training, the RNN compares its outputs to the correct answers. It uses "backpropagation through time" – essentially rewinding the sequence and adjusting its internal parameters to reduce errors.
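Backpropagation through time is ordinary backpropagation applied to the unrolled loop. Below is a minimal sketch for a one-unit RNN, h_t = tanh(w·x_t + u·h_{t-1}), assuming a squared-error loss on the final step only (a simplification; many tasks have a loss at every step). The names are illustrative, and the finite-difference check simply confirms the hand-derived gradients.

```python
import math

def forward(xs, w, u):
    """Scalar tanh RNN; hs[0] is the initial state h_0 = 0."""
    hs = [0.0]
    for x in xs:
        hs.append(math.tanh(w * x + u * hs[-1]))
    return hs

def loss(xs, target, w, u):
    return 0.5 * (forward(xs, w, u)[-1] - target) ** 2

def bptt(xs, target, w, u):
    """Gradients of the final-step squared error with respect to the shared weights w and u."""
    hs = forward(xs, w, u)
    dw = du = 0.0
    dh = hs[-1] - target               # dL/dh_T
    for t in range(len(xs), 0, -1):    # walk backward through time
        da = dh * (1.0 - hs[t] ** 2)   # through tanh: dL/da_t
        dw += da * xs[t - 1]           # w and u are reused at every step,
        du += da * hs[t - 1]           # so their gradient contributions add up
        dh = da * u                    # pass the error back to h_{t-1}
    return dw, du

xs, target, w, u = [0.5, -1.0, 0.8, 0.3], 0.2, 0.7, 0.9
dw, du = bptt(xs, target, w, u)

# Sanity check against central finite differences.
eps = 1e-6
num_dw = (loss(xs, target, w + eps, u) - loss(xs, target, w - eps, u)) / (2 * eps)
num_du = (loss(xs, target, w, u + eps) - loss(xs, target, w, u - eps)) / (2 * eps)
print(dw, num_dw)
print(du, num_du)
```

The line `dh = da * u` is where trouble starts on long sequences: the error is multiplied by `u` and a tanh derivative at every step back in time.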

Step 5: Handle the gradient problem

Traditional RNNs struggle with long sequences because learning signals get weaker over time (vanishing gradients) or explode uncontrollably (exploding gradients). Modern solutions include:

LSTM/GRU architectures that use gates to control information flow

Gradient clipping to prevent explosions

Proper initialization of network weights
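Gradient clipping is simple to sketch. The version below rescales by the global L2 norm, the same idea behind utilities such as PyTorch's `torch.nn.utils.clip_grad_norm_`; the function here is a hypothetical plain-Python stand-in that works on a flat list of gradient values.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale gradient values so their combined L2 norm is at most max_norm.

    The direction of the update is preserved; only its length shrinks.
    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm or total_norm == 0.0:
        return list(grads), total_norm
    scale = max_norm / total_norm
    return [g * scale for g in grads], total_norm

# An "exploding" gradient of norm 50 is scaled down to norm 5.
clipped, norm_before = clip_by_global_norm([30.0, -40.0], max_norm=5.0)
print(norm_before, clipped)
```

Clipping by the global norm, rather than clipping each value independently, keeps the update pointing in the same direction.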

Step 6: Choose the right architecture

For short sequences (under 50 steps): Basic RNNs work fine

For long sequences (100+ steps): Use LSTM or GRU

For efficiency: Choose GRU over LSTM

For accuracy: LSTM sometimes performs slightly better than GRU, though results vary by task

For both directions: Bidirectional RNNs process sequences forward and backward
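A bidirectional RNN is two independent RNNs: one reads the sequence left to right, the other right to left, and their states are paired up position by position. A minimal scalar sketch, assuming separate hypothetical weights for each direction:

```python
import math

def run_rnn(xs, w, u):
    """Scalar tanh RNN; returns the hidden state after each input."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w * x + u * h)
        states.append(h)
    return states

def bidirectional(xs, fwd=(0.8, 0.5), bwd=(0.6, 0.3)):
    """Pair each position's forward state with its backward state.

    The (w, u) weights for each direction are arbitrary illustrative values.
    """
    forward_states = run_rnn(xs, *fwd)
    backward_states = run_rnn(xs[::-1], *bwd)[::-1]  # re-align to the original order
    return list(zip(forward_states, backward_states))

features = bidirectional([1.0, -0.5, 0.25, 2.0])
```

The backward pass must start from the last element, which is why a bidirectional RNN needs the whole sequence before it can produce any output.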

Real-World Case Studies

Case Study 1: Google's Speech Recognition Breakthrough

Company: Google Research Speech Team

Date: March 2019

Challenge: Make speech recognition work offline on mobile phones

Google's engineers faced a massive problem. Their server-based speech recognition was accurate but required internet connection. The model was also huge – 450MB was too large for mobile deployment.

The RNN Solution: Google's team implemented RNN Transducer (RNN-T) architecture with character-level output. This specialized RNN could process speech in real-time without needing to hear complete sentences.

Measurable Results:

Model size: Compressed from 450MB to 80MB (5.6x reduction)

Speed: 4x faster processing with quantization techniques

Accuracy: Maintained same accuracy as server-based models

Memory: Used only 80MB vs 2GB for traditional FST models

Business Impact: The breakthrough enabled offline speech recognition on all Pixel phones, eliminating network dependency and latency issues. Today, this technology powers voice commands even without internet connection.

Source: Google Research Blog, March 12, 2019

Case Study 2: Heart Failure Prediction Saves Lives

Organization: Georgia Institute of Technology research team

Date: March 2017

Challenge: Predict heart failure before symptoms appear

Researchers led by Edward Choi and Jimeng Sun tackled early heart failure detection using electronic health records. Traditional methods missed subtle patterns in patient data over time.

The RNN Approach: The team used Gated Recurrent Units (GRUs) to analyze 3,884 heart failure cases and 28,903 controls from health system records spanning May 2000 to May 2013. The RNN processed time-stamped clinical events including diagnoses, medications, and procedures.

Measurable Results:

RNN Model AUC: 0.777 (12-month observation window)

Beat traditional methods: about 4% relative improvement over logistic regression (0.777 vs 0.747) and about 1.6% over the neural network baseline (0.765)

Logistic Regression: 0.747 AUC

Neural Networks: 0.765 AUC

Support Vector Machines: 0.743 AUC

Critical Success Factors:

GRUs effectively handled irregular time intervals between patient visits

Specialized preprocessing converted clinical events into predictive patterns

Memory capabilities captured long-term health trajectories

Source: Oxford Academic - Journal of the American Medical Informatics Association, 2017

Case Study 3: Credit Card Fraud Detection Revolution

Organization: European financial institutions study

Date: 2021 (analyzing 2013 transaction data)

Challenge: Detect credit card fraud in real-time sequential transactions

Researchers addressed the challenge of detecting fraudulent transactions in highly imbalanced datasets where only 0.171% of 284,807 transactions were fraudulent.

The LSTM Solution: The team implemented LSTM networks with attention mechanisms, using SMOTE (Synthetic Minority Oversampling Technique) to handle class imbalance. The system analyzed sequential transaction patterns rather than individual transactions.

Measurable Results:

Superior performance: Outperformed GRU, basic LSTM, SVM, KNN, and traditional neural networks

High sensitivity: Achieved best recall scores for fraud detection (most critical metric in finance)

Feature optimization: Identified 10 most relevant features from original 31 using swarm intelligence

Sequential advantage: Captured evolving fraud patterns across transaction sequences

Implementation Innovations:

UMAP dimensionality reduction outperformed PCA and t-SNE

Attention mechanism focused on most relevant transaction patterns

SMOTE technique addressed extreme class imbalance effectively

Business Impact: The model prioritized sensitivity over precision, minimizing false negatives (missed frauds) – exactly what financial institutions need to protect customers.

Source: Journal of Big Data, SpringerOpen, 2021

Regional & Industry Applications

Manufacturing leads the charge

Manufacturing shows the highest growth at 34.6% CAGR, driven by Industry 4.0 initiatives. Companies use RNNs for:

Predictive Maintenance: BMW's Regensburg plant prevents 500+ minutes of annual assembly disruption using neural networks for fault prediction. General Motors achieved 15% reduction in unexpected downtime, saving $20 million annually.

Quality Control: RNNs analyze sensor data from production lines to predict defects before they occur. A 2024 study showed LSTM models outperformed traditional XGBoost regression for conveyor motor vibration analysis.

Demand Forecasting: RNN models achieve RMSE of 11.03 and MAE of 7.81 in supply chain demand forecasting, significantly outperforming Variational Autoencoders with RMSE of 28.14.

Financial services embrace AI

Banking and Financial Services capture 23.4% of neural network software revenue in 2024. Applications include:

Fraud Detection: Hybrid deep-learning models catch 98.7% of fraudulent payments. RNNs excel at detecting unusual transaction sequences that traditional methods miss.

Algorithmic Trading: Time-series analysis using LSTM networks helps predict market movements by analyzing historical price patterns and trading volumes.

Credit Scoring: RNNs analyze payment histories over time, providing more accurate risk assessments than snapshot-based traditional models.

Healthcare transforms patient care

Healthcare applications show tremendous promise for sequential data analysis:

Medical Diagnostics: RNNs achieve 90%+ accuracy in cancer detection through medical imaging sequence analysis.

Patient Monitoring: Continuous analysis of vital signs using LSTM networks enables early warning systems for patient deterioration.

Drug Discovery: Sequence analysis of genetic and protein data accelerates pharmaceutical research and clinical trial optimization.

Geographic specialization patterns

North America focuses on large-scale Transformer architectures and language models, leveraging abundant computational resources and venture capital.

Europe emphasizes hybrid approaches and efficiency, balancing performance with regulatory compliance under GDPR and AI Act requirements.

Asia-Pacific leads in RNN optimization and edge computing applications, driven by mobile-first markets and IoT device proliferation.

Advantages vs Disadvantages

The powerful advantages of RNNs

Sequential Memory: Unlike other neural networks that process data all at once, RNNs remember previous information. This makes them perfect for understanding context in language, predicting future values in time series, and detecting patterns that unfold over time.

Variable Input Length: RNNs handle inputs of any length naturally. Whether you're processing a 5-word sentence or a 500-word paragraph, the same RNN architecture works without modification.

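Parameter Efficiency: RNNs reuse the same weights at every time step, so their parameter count does not grow with sequence length, and at inference time they only need to keep the current hidden state. Standard self-attention in a Transformer, by contrast, needs memory that grows quadratically with sequence length.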

Real-time Processing: RNNs can process streaming data in real-time, making decisions as new information arrives. This enables applications like live speech recognition and real-time fraud detection.

Theoretical Power: RNNs can theoretically capture unlimited temporal dependencies, modeling complex patterns that unfold over arbitrarily long sequences.

The challenging limitations

Sequential Processing Bottleneck: Each hidden state depends on the one before it, so an RNN must process a sequence one step at a time. Work can be parallelized across the sequences in a batch, but not across the time steps within a sequence, during either training or inference.

Vanishing Gradient Problem: Traditional RNNs struggle with long sequences as learning signals become too weak to update early layers effectively. While LSTM and GRU architectures address this, the problem still exists for extremely long sequences.

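Computational Complexity: Total cost grows linearly with sequence length, so very long sequences take a long time to process step by step. The per-step cost stays constant, however, which is still cheaper than the quadratic cost of standard self-attention on extremely long inputs.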

Training Difficulty: RNNs can be challenging to train, requiring careful hyperparameter tuning, gradient clipping, and proper initialization to avoid exploding or vanishing gradients.

Limited Parallelization: Unlike Transformers that can process entire sequences in parallel, RNNs process sequentially, making training slower on modern parallel hardware.

When RNNs excel vs alternatives

Choose RNNs When:

Processing streaming or real-time data

Memory constraints matter (edge computing, mobile devices)

Sequential dependencies are crucial

Variable-length inputs are common

Interpretability of sequential processing is important

Choose Transformers When:

Working with very large datasets

Parallel processing power is abundant

Long-range dependencies span hundreds or thousands of tokens

State-of-the-art performance is critical regardless of computational cost

Choose CNNs When:

Processing spatial data (images, spatial patterns)

Local pattern recognition is primary requirement

Input sizes are fixed

Myths vs Facts About RNNs

Myth 1: "RNNs are completely obsolete"

FACT: Recent research shows RNNs remain highly relevant. The October 2024 paper "Were RNNs All We Needed?" by Mila and Borealis AI researchers demonstrated that minimized RNNs (minLSTM and minGRU) converged at lower loss than state-of-the-art models like Mamba while being significantly more efficient than Transformers.

Myth 2: "RNNs cannot handle long sequences"

FACT: While traditional RNNs suffer from vanishing gradients, LSTM and GRU variants effectively handle long-term dependencies. Modern implementations successfully process sequences of thousands of time steps. The limitation is more about computational efficiency than capability.

Myth 3: "Transformers always outperform RNNs"

FACT: Performance depends on the task and constraints. Research shows that "RNN and CNN based models could still work very well or even better than Transformer in short-sequences tasks" according to 2020 analysis. For resource-constrained environments, RNNs often provide better performance per computational unit.

Myth 4: "RNNs cannot be parallelized at all"

FACT: Standard RNNs can be parallelized across the sequences in a batch, though not across the time steps of a single sequence. New minimized RNN variants go further: they are "fully parallelizable during training" according to 2024 research, addressing this traditional limitation.
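The reason minGRU can be trained in parallel is that its gates depend only on the current input, never on the previous hidden state. The recurrence then becomes h_t = a_t·h_{t-1} + b_t with every a_t and b_t known up front, and such a linear recurrence can be evaluated with a parallel prefix scan. The sketch below is a scalar toy version under those assumptions; the paper works with vectors (and also describes a log-space variant for numerical stability), and all names and weights here are hypothetical.

```python
import math

def min_gru_coefficients(xs, wz, bz, wh, bh):
    """Scalar minGRU: gate and candidate depend on x_t alone, so h_t = a_t*h_{t-1} + b_t."""
    a, b = [], []
    for x in xs:
        z = 1.0 / (1.0 + math.exp(-(wz * x + bz)))  # update gate from x_t only
        h_tilde = wh * x + bh                        # candidate state from x_t only
        a.append(1.0 - z)
        b.append(z * h_tilde)
    return a, b

def sequential(a, b, h0=0.0):
    """Classic step-by-step evaluation of the recurrence."""
    h, out = h0, []
    for at, bt in zip(a, b):
        h = at * h + bt
        out.append(h)
    return out

def combine(left, right):
    """Compose two affine maps h -> a*h + b (apply left first, then right)."""
    a1, b1 = left
    a2, b2 = right
    return a1 * a2, a2 * b1 + b2

def parallel_scan(a, b, h0=0.0):
    """Hillis-Steele style inclusive scan: about log2(T) rounds."""
    pairs = list(zip(a, b))
    step = 1
    while step < len(pairs):
        # Every update in a round reads only the previous round's values,
        # so all positions could be computed simultaneously on parallel hardware.
        pairs = [
            combine(pairs[i - step], pairs[i]) if i >= step else pairs[i]
            for i in range(len(pairs))
        ]
        step *= 2
    return [acc_a * h0 + acc_b for acc_a, acc_b in pairs]

xs = [0.3, -1.2, 0.8, 2.0, -0.5, 0.1, 1.5]
a, b = min_gru_coefficients(xs, wz=1.5, bz=-0.2, wh=0.7, bh=0.1)
same = all(abs(s - p) < 1e-12 for s, p in zip(sequential(a, b), parallel_scan(a, b)))
print(same)
```

Both functions produce the same hidden states; the scan simply reorganizes the work so that it no longer has to wait for h_{t-1}. At inference time the model can still run step by step with a constant-size state.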

Myth 5: "RNNs are only good for text"

FACT: RNNs excel across diverse domains:

Healthcare: Patient trajectory prediction with 20% accuracy improvement

Finance: Time-series analysis achieving superior fraud detection

Manufacturing: Predictive maintenance outperforming traditional regression methods

Speech: Google's mobile speech recognition breakthrough

Myth 6: "RNNs always need huge amounts of data"

FACT: RNNs can work effectively with smaller datasets, especially when compared to large Transformers that require massive training corpora. Their parameter sharing across time steps makes them more data-efficient for sequential tasks.

The evidence-based reality

Expert analysis consistently shows that architecture choice should depend on specific requirements rather than general superiority claims. TechTarget's 2024 assessment notes that while Transformers address certain RNN limitations through attention mechanisms, RNNs remain powerful for temporal problems in language translation and speech recognition.

IBM's technical analysis emphasizes that RNNs' strength lies in using sequential data to solve temporal problems, with activation functions applied at each time step to control internal memory updates.

Implementation Checklist

Pre-Implementation Planning

Define Your Problem Type:

[ ] Identify if your data is sequential (time series, text, audio)

[ ] Determine sequence length requirements (short <50, medium 50-500, long >500)

[ ] Assess real-time processing needs

[ ] Evaluate available computational resources

Data Preparation Checklist:

[ ] Clean and normalize sequential data

[ ] Handle missing values in time series appropriately

[ ] Create proper training/validation/test splits maintaining temporal order

[ ] Implement data augmentation if needed (time warping, noise injection)

[ ] Address class imbalance using SMOTE or similar techniques
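SMOTE creates new minority-class examples by interpolating between existing ones rather than duplicating them. Below is a minimal plain-Python sketch of that idea, assuming numeric feature vectors; the `smote` function and toy points are hypothetical, and in practice the imbalanced-learn package's `SMOTE` class is the usual choice. Apply it to the training split only, after splitting chronologically, so that no synthetic point is built from validation or test data.

```python
import math
import random

def smote(minority, n_new, k=3, seed=0):
    """Create n_new synthetic minority samples by SMOTE-style interpolation (illustrative sketch).

    Each new point lies on the line segment between a randomly chosen minority
    sample and one of its k nearest minority-class neighbours.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append([bv + gap * (ov - bv) for bv, ov in zip(base, other)])
    return synthetic

fraud = [[0.9, 1.1], [1.0, 1.3], [1.2, 0.9], [0.8, 1.0]]  # toy minority-class points
new_points = smote(fraud, n_new=6, k=2)
```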

Architecture Selection Guide

Choose Your RNN Type:

[ ] Basic RNN: For simple tasks with short sequences (<50 steps)

[ ] LSTM: For complex long-term dependencies and highest accuracy needs

[ ] GRU: For balanced performance and efficiency (fewer parameters than LSTM, usually faster to train)

[ ] Bidirectional: When future context helps (text analysis, offline processing)

[ ] Stacked/Deep: For complex pattern recognition (2-4 layers typically optimal)

Hyperparameter Configuration:

[ ] Set appropriate hidden layer sizes (start with 128-256 units)

[ ] Choose activation functions (tanh for hidden states; softmax for classification outputs, linear for regression)

[ ] Configure learning rate (start with 0.001, adjust based on performance)

[ ] Set batch size (minimum 32 for GPU efficiency according to Baidu research)

[ ] Implement gradient clipping (max norm 1.0-5.0)

Training Best Practices

Optimization Setup:

[ ] Use Adam or RMSprop optimizers for RNNs

[ ] Implement learning rate scheduling (reduce on plateau)

[ ] Set up early stopping based on validation loss

[ ] Configure regularization (dropout 0.2-0.5 between RNN layers)

[ ] Enable gradient clipping to prevent exploding gradients

Monitoring and Debugging:

[ ] Track training and validation loss curves

[ ] Monitor gradient norms during training

[ ] Implement learning curve analysis

[ ] Use TensorBoard or similar visualization tools

[ ] Set up checkpointing for long training runs

Performance Optimization

Memory Management:

[ ] Implement custom memory allocators to support very long sequence lengths

[ ] Use mini-batch sizes of at least 32 (Baidu reported a 14x throughput gain going from batch size 1 to 32)

[ ] Configure proper sequence bucketing for variable-length inputs

[ ] Enable mixed precision training when supported
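Sequence bucketing groups sequences of similar length so that each batch only needs padding up to its own longest member. A minimal sketch, assuming integer-token sequences and a hypothetical fixed bucket width of 10:

```python
from collections import defaultdict

def bucket_and_pad(sequences, bucket_width=10, pad_value=0):
    """Group sequences of similar length, then pad each group to its own maximum.

    Padding only up to the bucket's longest sequence wastes far fewer
    time steps than padding everything to the global maximum.
    """
    buckets = defaultdict(list)
    for seq in sequences:
        buckets[len(seq) // bucket_width].append(seq)
    batches = []
    for key in sorted(buckets):
        group = buckets[key]
        max_len = max(len(s) for s in group)
        batches.append([s + [pad_value] * (max_len - len(s)) for s in group])
    return batches

seqs = [[1] * 3, [2] * 25, [3] * 7, [4] * 22, [5] * 9]
batches = bucket_and_pad(seqs)
```

Here the bucketed batches contain 11 padding positions, against 59 if all five sequences were padded to length 25. The model still has to ignore the padded steps; in PyTorch, for example, `torch.nn.utils.rnn.pack_padded_sequence` serves that purpose.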

Speed Optimization:

[ ] Utilize cuDNN-optimized implementations when available

[ ] Implement parallel data loading and preprocessing

[ ] Consider sequence truncation/padding strategies

[ ] Use stateful RNNs for continuous sequences when appropriate

Deployment Checklist

Model Optimization for Production:

[ ] Apply quantization for mobile/edge deployment (Google achieved 5.6x compression)

[ ] Implement model pruning to reduce parameter count

[ ] Set up proper batch processing for inference

[ ] Configure appropriate timeout and error handling
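Quantization stores weights as small integers plus a scale factor. Below is a minimal sketch of symmetric, per-tensor int8 post-training quantization in plain Python. It is a generic textbook scheme chosen for illustration, not the specific method in Google's RNN-T work; storing each weight in one byte instead of a 4-byte float32 is where a roughly 4x size reduction comes from.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from their int8 codes."""
    return [scale * v for v in q]

weights = [0.42, -1.27, 0.003, 0.9, -0.05]  # hypothetical float weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each restored weight is off by at most half a quantization step (`scale / 2`), which is why accuracy often survives; very small weights such as 0.003 may round to zero.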

Testing and Validation:

[ ] Test with real-world data distributions

[ ] Validate performance under varying sequence lengths

[ ] Stress test with edge cases and outliers

[ ] Implement A/B testing framework for production comparison

[ ] Set up monitoring for model drift detection

Security and Compliance:

[ ] Implement input validation and sanitization

[ ] Set up model versioning and rollback capabilities

[ ] Ensure compliance with data privacy regulations (GDPR, etc.)

[ ] Configure proper logging and audit trails

RNNs vs Other AI Models

| Feature | RNN/LSTM/GRU | Transformer | CNN | Traditional ML |
| --- | --- | --- | --- | --- |
| Sequential Processing | ✅ Native | ⚠️ Through position encoding | ❌ No | ❌ No |
| Memory Efficiency | ✅ Constant state at inference | ❌ Quadratic growth (standard self-attention) | ✅ Fixed | ✅ Low |
| Training Speed | ⚠️ Sequential bottleneck | ✅ Fully parallel | ✅ Parallel | ✅ Fast |
| Long Dependencies | ⚠️ Limited (LSTM/GRU better) | ✅ Excellent | ❌ Local only | ❌ No |
| Variable Input Length | ✅ Native support | ⚠️ With padding | ❌ Fixed size | ⚠️ Feature engineering |
| Real-time Processing | ✅ Streaming capable | ⚠️ Possible, but per-token cost grows with context | ✅ Fast | ✅ Fast |
| Parameter Sharing | ✅ Across time steps | ✅ Same weights at every position | ✅ Spatial sharing | ❌ No |
| Interpretability | ⚠️ Moderate | ❌ Black box | ⚠️ Filter visualization | ✅ High |

Performance Benchmarks (2024 Data)

Language Modeling Tasks:

Minimized RNNs: Converged at lower loss than Mamba

Training Speed: minGRU 175x faster, minLSTM 235x faster per training step than traditional versions (sequence length 512)

Sequence Length 4096: minGRU/minLSTM 1300x faster than traditional RNNs

Speech Recognition (Karita et al., 2019):

Transformers: Superior in 13/15 ASR benchmarks

RNNs: Competitive in shorter sequences and resource-constrained scenarios

Memory Usage Comparison:

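RNN: Linear memory growth O(n) during training (backpropagation through time stores every hidden state); constant O(1) state during inference

Transformer: Quadratic memory growth O(n²) for standard self-attention

CNN: Fixed parameter memory per layer O(1); activation memory still grows with input size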

When to Choose Each Architecture

Choose RNNs/LSTMs/GRUs for:

Real-time streaming applications (speech recognition, live translation)

Mobile and edge computing with memory constraints

Time series forecasting and sequential prediction

Applications requiring interpretable sequential processing

Scenarios where training data is limited

Choose Transformers for:

Large-scale language modeling and generation

Tasks requiring long-range context understanding

Applications with abundant computational resources

State-of-the-art performance requirements regardless of cost

Parallel processing environments

Choose CNNs for:

Image and spatial data processing

Local pattern recognition tasks

Fixed-size input scenarios

Real-time computer vision applications

Choose Traditional ML for:

Simple classification tasks with structured data

Scenarios requiring high interpretability

Limited training data situations

Rapid prototyping and baseline establishment

Cost-Performance Analysis

Based on 2024 industry data:

Development Costs:

RNNs: Medium (require careful tuning)

Transformers: High (large compute requirements)

CNNs: Low-Medium (well-understood architectures)

Traditional ML: Low (standard implementations)

Operational Costs:

RNNs: Low-Medium (efficient inference)

Transformers: High (GPU-intensive)

CNNs: Medium (optimized implementations available)

Traditional ML: Low (CPU-friendly)

Common Pitfalls & How to Avoid Them

The vanishing gradient catastrophe

What happens: During training on long sequences, learning signals become exponentially weaker as they travel backward through time. The network essentially "forgets" how to learn from early parts of sequences.
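The vanishing effect can be measured directly. For a one-unit tanh RNN, h_t = tanh(w·x_t + u·h_{t-1}), the chain rule gives dh_t/dh_0 as a product of factors u·(1 - h_k²), one per step. A minimal sketch with hypothetical weights:

```python
import math

def gradient_through_time(u, w, xs):
    """Return |dh_t / dh_0| after each step of a scalar tanh RNN."""
    h, factor, history = 0.0, 1.0, []
    for x in xs:
        h = math.tanh(w * x + u * h)
        factor *= u * (1.0 - h * h)  # chain rule: dh_t/dh_{t-1} = u * tanh'(a_t)
        history.append(abs(factor))
    return history

decay = gradient_through_time(u=0.9, w=1.0, xs=[0.5] * 50)
for t in (1, 10, 25, 50):
    print(t, f"{decay[t - 1]:.2e}")
```

Because every factor here is smaller than 0.9, the signal from step 0 shrinks at least as fast as 0.9^t, which is why the earliest inputs barely influence learning. If |u| is well above 1 while activations stay small, the same product can grow instead: the exploding case.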

Warning signs:

Training loss stops decreasing after initial epochs

Model performs well on short sequences but fails on longer ones

Gradient norms approach zero during backpropagation

Solutions:

Use LSTM or GRU architectures instead of vanilla RNNs

Implement gradient clipping with max norm between 1.0-5.0

Choose hidden-state activations carefully (prefer tanh or ReLU over sigmoid; sigmoid remains standard for LSTM and GRU gates)

Initialize weights carefully using Xavier or He initialization

The exploding gradient disaster

What happens: Gradients grow exponentially during backpropagation, causing massive weight updates that destabilize training.

Warning signs:

Loss values become NaN or infinity

Weights grow to extreme values

Training becomes unstable with wild oscillations

Solutions:

Mandatory gradient clipping: Limit gradient norms to reasonable values

Reduce learning rate: Start with smaller steps (0.0001 instead of 0.001)

Proper weight initialization: Use scaled random initialization

Monitor gradient norms: Set up alerts when they exceed thresholds

The sequence length trap

The problem: Choosing inappropriate sequence lengths leads to poor performance and wasted resources.

Too short sequences:

Miss important long-term patterns

Reduce model's ability to understand context

Lead to suboptimal predictions

Too long sequences:

Increase computation and memory costs (for an RNN, training cost grows linearly with sequence length)

May introduce noise from irrelevant old information

Worsen vanishing gradient problems

Smart solutions:

Start with domain knowledge: How far back does context typically matter?

Use validation experiments: Test multiple sequence lengths systematically

Implement dynamic sequences: Use attention mechanisms to focus on relevant parts

Consider hierarchical approaches: Process long sequences in chunks

The batch size blunder

Research from Baidu Silicon Valley AI Lab shows that batch size dramatically affects RNN performance. Their findings reveal a 14x throughput increase when moving from batch size 1 to 32.

Common mistakes:

Using batch size 1 for "online" learning (actually just inefficient)

Making batches too large and running out of memory

Ignoring the relationship between batch size and learning rate

Optimal strategies:

Minimum batch size 32 for GPU efficiency

Scale learning rate with batch size (larger batches = higher learning rates)

Use gradient accumulation if memory limits batch size

Implement dynamic batching for variable-length sequences

The overfitting time bomb

RNNs can overfit readily, especially on small datasets, because a large hidden state gives them enough capacity to memorize individual training sequences.

Early warning signs:

Training accuracy much higher than validation accuracy

Model memorizes training sequences exactly

Poor generalization to new data patterns

Prevention strategies:

Dropout between layers (0.2-0.5 typically effective)

Early stopping based on validation metrics

Weight decay/L2 regularization to prevent extreme parameters

Cross-validation with proper temporal splits (no future information leakage)

The data leakage nightmare

Temporal leakage is especially dangerous with sequential data because it's easy to accidentally let information from the future into the features used to predict it.

Common leakage sources:

Using future data points in training examples

Improper train/validation splits that mix time periods

Feature engineering that looks ahead in time

Preprocessing that uses global statistics including test data

Bulletproof prevention:

Strict temporal order: Always split data chronologically

Feature engineering isolation: Calculate statistics only on training data

Walk-forward validation: Use expanding or sliding window validation

Audit your pipeline: Trace every feature to ensure no future information
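Walk-forward validation can be sketched as a generator of index splits in which every test window starts right after its training window ends. This is a minimal illustration with a hypothetical function name; scikit-learn's `TimeSeriesSplit` provides an established implementation of the same idea.

```python
def walk_forward_splits(n_samples, initial_train, horizon, expanding=True):
    """Yield (train_indices, test_indices) pairs that never look into the future.

    With expanding=True the training window grows; with expanding=False
    it slides forward and keeps a fixed length.
    """
    start, end = 0, initial_train
    while end + horizon <= n_samples:
        yield list(range(start, end)), list(range(end, end + horizon))
        end += horizon
        if not expanding:
            start += horizon

splits = list(walk_forward_splits(n_samples=10, initial_train=4, horizon=2))
for train_idx, test_idx in splits:
    print(train_idx, "->", test_idx)
```

Any normalization statistics should be computed from `train_idx` alone within each split, in line with the feature-engineering isolation rule above.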

The computational complexity trap

Memory explosion: RNN memory usage can grow unexpectedly with sequence length, batch size, and model depth.

Speed degradation: Without proper optimization, RNNs can be orders of magnitude slower than necessary.

Smart optimization:

Custom memory allocators for very long sequence lengths (Baidu research)

Mixed precision training to roughly halve activation memory

Sequence bucketing to group similar-length sequences

Checkpointing to trade computation for memory

The architecture mismatch mistake

Choosing the wrong RNN variant leads to suboptimal performance and wasted development time.

Decision framework:

Basic RNN: Only for sequences <50 steps with simple patterns

LSTM: When accuracy is paramount and computational resources available

GRU: When efficiency matters (fewer parameters than LSTM, usually faster to train)

Bidirectional: When entire sequence is available before processing

Stacked: For complex pattern recognition (but diminishing returns after 3-4 layers)

Future Outlook 2025-2030

The minimized RNN renaissance

October 2024 marked a turning point in RNN research. Researchers from Mila and Borealis AI published "Were RNNs All We Needed?" demonstrating that simplified RNN variants could compete with state-of-the-art Transformers while being dramatically more efficient.

Key breakthrough metrics:

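minGRU: 175x faster training than traditional GRUs (for sequences of length 512)

minLSTM: 235x faster training than traditional LSTMs (for sequences of length 512)

Training efficiency: for long sequences, the authors estimate that training which takes a minimal variant 1 day would take a traditional RNN about 3 years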

Parameter reduction: Up to 87% fewer parameters while maintaining performance
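The source of the speedup can be illustrated with a scalar sketch. In the minGRU formulation, the update gate and the candidate state depend only on the current input (z_t = σ(Linear(x_t)), h̃_t = Linear(x_t)), so the update h_t = (1 − z_t)·h_{t−1} + z_t·h̃_t becomes a linear recurrence h_t = a_t·h_{t−1} + b_t. Linear recurrences can be computed with an associative prefix scan in log₂(T) parallel rounds instead of T sequential steps. This is a simplified sketch: it uses scalars instead of vectors, made-up weights `w_z` and `w_h` in place of learned linear layers, and a plain Hillis-Steele scan; it is not the paper's implementation.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def min_gru_coeffs(xs, w_z, w_h):
    """Scalar minGRU-style step: gate and candidate use only x_t, never h_{t-1}.
    Returns (a_t, b_t) so that h_t = a_t * h_{t-1} + b_t."""
    coeffs = []
    for x in xs:
        z = sigmoid(w_z * x)   # update gate
        h_tilde = w_h * x      # candidate state
        coeffs.append((1.0 - z, z * h_tilde))
    return coeffs

def sequential(coeffs, h0=0.0):
    """Reference: the usual step-by-step recurrence."""
    hs, h = [], h0
    for a, b in coeffs:
        h = a * h + b
        hs.append(h)
    return hs

def combine(left, right):
    """Compose two affine steps h -> a*h + b, applying `left` first.
    This operator is associative, which is what permits a parallel scan."""
    a1, b1 = left
    a2, b2 = right
    return (a2 * a1, a2 * b1 + b2)

def scan(coeffs, h0=0.0):
    """Hillis-Steele inclusive scan: log2(T) rounds; the updates inside each
    round are independent, so hardware could run them in parallel."""
    acc = list(coeffs)
    step = 1
    while step < len(acc):
        acc = [acc[i] if i < step else combine(acc[i - step], acc[i])
               for i in range(len(acc))]
        step *= 2
    return [a * h0 + b for a, b in acc]

xs = [0.5, -1.0, 2.0, 0.3, 1.5]
coeffs = min_gru_coeffs(xs, w_z=0.8, w_h=1.2)
assert all(abs(p - q) < 1e-12 for p, q in zip(sequential(coeffs), scan(coeffs)))
```

A traditional GRU cannot be rewritten this way because its gates take h_{t−1} as input, so each step must wait for the previous one.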

Edge computing drives RNN adoption

Mobile and IoT constraints favor RNNs. A Transformer's memory and compute grow with the length of its context, whereas an RNN carries a fixed-size state and needs constant memory per step at inference, which aligns well with edge device limitations.

Market projections:

55% of data analysis by deep neural networks expected to take place at the edge by 2025

RNN-optimized chips in development by major semiconductor companies

5G network integration enabling distributed RNN processing across device clusters

Hybrid architectures emerge as winners

The future isn't RNN vs Transformer; it's an intelligent combination of both. Research shows hybrid CNN-ViT (convolutional network plus Vision Transformer) models achieved 97.89% accuracy on human activity recognition (HAR) datasets, an example of how combining architectures can pay off.

Emerging hybrid patterns:

RNN preprocessing + Transformer reasoning: Use RNNs for efficient sequence encoding, Transformers for complex reasoning

Transformer planning + RNN execution: Plan with attention mechanisms, execute with sequential RNNs

Dynamic switching: Choose architecture based on sequence length and computational constraints

Industry-specific predictions

Healthcare (2025-2027):

Real-time patient monitoring using wearable device RNNs

Continuous vital sign analysis with 99%+ accuracy for early warning systems

Personalized medicine through longitudinal patient data modeling

Finance (2026-2028):

Quantum-enhanced RNNs for ultra-fast fraud detection

Central bank digital currencies using RNN-based transaction validation

Real-time market manipulation detection across global exchanges

Manufacturing (2025-2030):

Predictive maintenance preventing 95% of unexpected equipment failures

Self-optimizing production lines using RNN-controlled robotics

Supply chain automation with end-to-end RNN orchestration

Breakthrough technologies on the horizon

Neuromorphic RNN computing promises to revolutionize efficiency. Intel's Loihi chip and similar neuromorphic processors could run RNNs with 1000x better energy efficiency by mimicking biological neural processing.

Optical RNN processing may eliminate electronic bottlenecks entirely. Research suggests optical neural networks could process sequences at the speed of light while consuming minimal power.

Quantum-RNN hybrids could enable processing of quantum state sequences, opening applications in quantum machine learning and quantum error correction.

Regulatory and ethical evolution

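Regulation increasingly applies to sequential AI systems. The EU AI Act classifies AI systems by risk level rather than by architecture, but many RNN applications, such as credit scoring or medical monitoring, can fall into its high-risk categories, and sequential processing of personal data raises its own privacy and fairness challenges.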

Key regulatory trends:

Temporal data rights: Right to be forgotten extended to sequential data

Algorithmic auditing: Required explanation of RNN decision-making over time

Bias detection: Specialized tools for identifying temporal bias in sequential models

Investment and market predictions

Gartner predicts that by 2028, 15% of day-to-day work decisions will be made autonomously through agentic AI. Sequential models, including RNNs, may power parts of these systems.

Market size projections:

Neural network software market: $139.86 billion by 2030 (32.1% CAGR)

RNN-specific applications: Expected to capture 15-20% of this market

Edge AI RNN market: $45 billion by 2030 driven by IoT growth
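The 32.1% growth rate follows directly from the 2025 and 2030 market figures quoted in this article. The sketch below simply checks that arithmetic with the standard compound annual growth rate formula; the function name is ours, not from any library.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

rate = cagr(34.76, 139.86, years=5)  # 2025 -> 2030, values in $ billions
print(f"{rate:.1%}")  # 32.1%
```

A market that roughly quadruples in five years implies a little over 32% growth per year, compounded.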

Investment focus areas:

Minimized RNN startups: Commercializing academic breakthroughs

Industry-specific RNN solutions: Vertical applications in healthcare, finance, manufacturing

RNN-optimized hardware: Chips and accelerators designed for sequential processing

Research directions shaping the future

Top academic priorities for 2025-2030:

Continual learning RNNs: Networks that learn continuously without forgetting previous knowledge

Multi-modal sequential processing: RNNs that handle text, audio, and visual sequences simultaneously

Causal inference in sequences: Understanding cause-and-effect relationships in temporal data

Federated sequential learning: Training RNNs across distributed devices while preserving privacy

Adversarial robustness: Making RNNs resistant to attacks on sequential data

The evidence strongly suggests that reports of RNN death have been greatly exaggerated. Instead, we're entering an era of specialized, efficient, and hybrid sequential processing that will power the next generation of AI applications.

Frequently Asked Questions

What makes RNNs different from regular neural networks?

Regular (feedforward) neural networks process all input data at once, like looking at an entire photograph. RNNs process data step by step while remembering previous steps, like reading a book word by word while keeping the story in mind. This memory capability makes RNNs well suited to sequential data like text, speech, or time series.
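The "memory" is simply a hidden state vector that is fed back in at every step. Below is a minimal sketch of a basic (Elman-style) RNN forward pass in plain Python, assuming scalar inputs, a 2-unit hidden state, and made-up toy weights; real frameworks use learned weight matrices and tensors.

```python
import math

def rnn_forward(inputs, w_xh, w_hh, b_h):
    """Basic RNN: h_t = tanh(w_xh * x_t + W_hh @ h_{t-1} + b_h).
    The same weights are reused at every time step; only h changes."""
    hidden_size = len(b_h)
    h = [0.0] * hidden_size  # the memory starts empty
    states = []
    for x in inputs:         # one sequence element per time step
        h = [math.tanh(w_xh[i] * x
                       + sum(w_hh[i][j] * h[j] for j in range(hidden_size))
                       + b_h[i])
             for i in range(hidden_size)]
        states.append(h)
    return states

# Hypothetical toy weights: scalar input, 2-unit hidden state.
w_xh = [0.5, -0.3]
w_hh = [[0.1, 0.2], [-0.4, 0.3]]
b_h = [0.0, 0.1]

a = rnn_forward([1.0, 0.0, 0.0], w_xh, w_hh, b_h)
b = rnn_forward([0.0, 0.0, 0.0], w_xh, w_hh, b_h)
print(a[-1] != b[-1])  # True: the first input still affects the final state
```

The two sequences differ only in their first element, yet their final hidden states differ, which is exactly the "remembering previous steps" described above.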

Are RNNs still relevant in 2025?

Absolutely yes. Research published in October 2024 showed that minimized RNNs performed comparably to recent models such as Mamba on several tasks while training up to 175x faster than traditional GRUs. The neural network market is projected to grow from $34.76 billion in 2025 to $139.86 billion by 2030, with RNNs playing a significant role in edge computing and real-time applications.

What's the difference between LSTM and GRU?

LSTM (Long Short-Term Memory) uses three gates (forget, input, output) plus a separate cell state, and generally provides slightly better accuracy on complex tasks. GRU (Gated Recurrent Unit) uses only two gates (update and reset) and merges the cell and hidden state, so it has fewer parameters and usually trains faster while often matching LSTM performance. Choose LSTM when accuracy is critical; choose GRU when efficiency matters.
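The parameter difference is easy to quantify. An LSTM layer has four weight blocks (input, forget, and output gates plus the cell candidate), while a GRU layer has three (update and reset gates plus the candidate state), so at the same size a GRU has about 25% fewer parameters. The sketch assumes one bias vector per block; PyTorch's `nn.LSTM` and `nn.GRU` keep two (`bias_ih` and `bias_hh`), which changes the totals slightly but not the 3:4 ratio.

```python
def lstm_params(input_size, hidden_size):
    # 4 blocks: input gate, forget gate, cell candidate, output gate.
    # Each block: input weights + recurrent weights + one bias vector (assumed).
    return 4 * (hidden_size * input_size + hidden_size * hidden_size + hidden_size)

def gru_params(input_size, hidden_size):
    # 3 blocks: reset gate, update gate, candidate state.
    return 3 * (hidden_size * input_size + hidden_size * hidden_size + hidden_size)

print(lstm_params(128, 256), gru_params(128, 256))  # 394240 295680
```

Fewer weights mean fewer multiply-adds per time step, which is why a GRU is usually somewhat faster than an LSTM of the same width, a modest constant factor rather than an order-of-magnitude gain.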

Can RNNs handle very long sequences?

Traditional RNNs often struggle with sequences longer than 50-100 steps due to vanishing gradients. LSTM and GRU variants can handle much longer sequences, in some tasks up to thousands of steps. For extremely long sequences (10,000+ steps), RNNs are more memory-efficient than Transformers: an RNN needs constant memory per step at inference, while standard self-attention's memory grows quadratically with sequence length.
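A deliberately simplified scalar view shows why the step count matters. During backpropagation through time, the gradient reaching an early step is multiplied by one factor per intervening step. Assuming, for illustration only, that every step contributes the same factor, the signal scales like factor ** steps; real RNNs multiply Jacobian matrices, but the exponential behavior is the same.

```python
def gradient_scale(per_step_factor, steps):
    """Simplified model: if each step multiplies the backpropagated gradient by
    roughly the same factor, the signal after `steps` steps is factor ** steps."""
    return per_step_factor ** steps

for steps in (10, 50, 100):
    shrinking = gradient_scale(0.9, steps)  # factor < 1: vanishing gradient
    growing = gradient_scale(1.1, steps)    # factor > 1: exploding gradient
    print(steps, f"{shrinking:.2e}", f"{growing:.2e}")
```

With a factor of 0.9, only about 0.5% of the signal survives 50 steps, and far less survives 100. LSTM and GRU gates help by letting information pass along a path whose effective factor can stay close to 1.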

How do I choose between RNN, Transformer, and CNN?

Choose RNNs for sequential data with temporal dependencies, real-time processing, and memory-constrained environments. Choose Transformers for tasks requiring long-range context understanding with abundant computational resources. Choose CNNs for spatial data like images or when local patterns are most important.

What programming languages and frameworks work best for RNNs?

Python dominates with TensorFlow, PyTorch, and Keras providing excellent RNN implementations. TensorFlow offers production-ready deployment tools. PyTorch provides research-friendly dynamic graphs. JAX enables high-performance implementations. For mobile deployment, TensorFlow Lite and Core ML support optimized RNN inference.

How much data do I need to train an RNN effectively?

RNNs can often be trained with less data than large Transformers. For basic tasks, 10,000-100,000 sequences may suffice, while complex tasks might need millions. Diversity and quality matter as much as volume: clean, representative data beats massive noisy datasets.

What are the most common RNN training problems?

Vanishing gradients (learning signals become too weak), exploding gradients (learning signals become too large), overfitting (memorizing training data), and slow training (sequential processing bottlenecks). Solutions include using LSTM/GRU architectures, gradient clipping, dropout regularization, and proper batch sizing.
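Gradient clipping, the standard fix for exploding gradients, is only a few lines. The sketch below clips by global norm over a flat list of scalar gradients (an assumption made for readability); in practice, PyTorch's `torch.nn.utils.clip_grad_norm_` and TensorFlow's `tf.clip_by_global_norm` perform the same rescaling on tensors.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale all gradients together if their combined L2 norm exceeds
    max_norm. The update direction is preserved; only its size shrinks."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads), total_norm
    scale = max_norm / total_norm
    return [g * scale for g in grads], total_norm

clipped, norm = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
print(norm, clipped)
```

Logging the returned pre-clipping norm at every step is also a cheap way to spot vanishing (norms near zero) or exploding (norms spiking) gradients while debugging.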

Can RNNs work for real-time applications?

Yes, RNNs excel at real-time processing. Google's speech recognition processes audio streams in real-time on mobile phones. RNNs can make predictions as new data arrives without needing to see complete sequences, unlike Transformers that typically process entire sequences at once.

How do I optimize RNN performance?

Key optimizations include: using batch sizes of 32 or more (reported to give up to a 14x speedup), implementing gradient clipping, choosing appropriate sequence lengths, using cuDNN-optimized implementations, and applying quantization for deployment. Recent minimized RNN variants also provide large training speedups over traditional architectures.
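Quantization shrinks a model by storing weights in fewer bits. The sketch below shows one common scheme, symmetric per-tensor int8 quantization, on a made-up list of weights; it is an illustration of the idea, not the procedure used by any particular product, and real toolchains add per-channel scales, calibration, and quantized kernels.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Approximate reconstruction used at inference time."""
    return [v * scale for v in q]

weights = [0.42, -1.27, 0.003, 0.9]  # hypothetical float32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q, max_err)
```

Each weight drops from 4 bytes to 1, about a 4x size reduction, at the cost of a rounding error of at most half a quantization step per weight.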

What industries benefit most from RNNs?

Manufacturing (34.6% growth rate) leads with predictive maintenance and quality control. Finance uses RNNs for fraud detection and algorithmic trading. Healthcare applies them to patient monitoring and medical imaging. Technology companies use them for speech recognition and natural language processing.

Are RNNs better than Transformers for any tasks?

Yes, in several scenarios: real-time streaming applications, memory-constrained environments, short sequences, and tasks requiring constant memory usage. Research shows RNNs "could still work very well or even better than Transformer in short-sequences tasks," according to a 2020 analysis.

How do I handle variable-length sequences in RNNs?

RNNs naturally handle variable-length inputs. Techniques include padding shorter sequences, masking padded values, bucketing similar-length sequences together, and dynamic unrolling that processes exactly the needed number of steps. This flexibility is a key RNN advantage.
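Padding, masking, and bucketing can be sketched in a few lines of plain Python. The helper names below are hypothetical; in real frameworks, PyTorch's `torch.nn.utils.rnn.pack_padded_sequence` and Keras's `Masking` layer play the role of the mask.

```python
def bucket_by_length(sequences, batch_size):
    """Sort by length so each batch holds similar-length sequences,
    which keeps the amount of padding small."""
    ordered = sorted(sequences, key=len)
    return [ordered[i:i + batch_size] for i in range(0, len(ordered), batch_size)]

def pad_and_mask(batch, pad_value=0):
    """Right-pad every sequence to the batch maximum and build a mask:
    1 marks a real time step, 0 marks padding the model should ignore."""
    max_len = max(len(seq) for seq in batch)
    padded = [list(seq) + [pad_value] * (max_len - len(seq)) for seq in batch]
    mask = [[1] * len(seq) + [0] * (max_len - len(seq)) for seq in batch]
    return padded, mask

def masked_mean(values, mask):
    """Average only over real time steps, e.g. for a per-sequence loss."""
    return sum(v * m for v, m in zip(values, mask)) / sum(mask)

seqs = [[5, 6, 7, 8], [1], [2, 3], [9, 9, 9]]
for batch in bucket_by_length(seqs, batch_size=2):
    padded, mask = pad_and_mask(batch)
    print(padded, mask)
```

With bucketing, the four toy sequences need 2 padding positions in total; batching them in their original order would need 4.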

What's the future of RNN technology?

The future is bright, with minimized RNNs showing training speedups of up to 175x over traditional GRUs, hybrid architectures combining RNN efficiency with Transformer capabilities, edge computing growth favoring RNN deployment, and specialized hardware optimizations. Market projections show continued strong growth through 2030.

How much does it cost to implement RNNs in production?

Costs vary significantly. Simple applications might cost $1,000-10,000 for development and deployment. Complex systems requiring custom optimization can cost $100,000+. Cloud inference costs are typically lower than Transformers due to RNN efficiency. The key is matching architecture choice to budget constraints.

Can I use pre-trained RNN models?

Yes, though the options are more limited than for Transformers. Pre-trained RNN-based models exist for common tasks like language modeling, speech recognition, and sentiment analysis, and can be used from TensorFlow and PyTorch. However, because far fewer large pre-trained RNNs are available than pre-trained Transformers, RNN projects more often require task-specific training.

What skills do I need to work with RNNs professionally?

Core skills include: Python programming, TensorFlow/PyTorch frameworks, time series analysis, sequential data preprocessing, gradient optimization techniques, and understanding of backpropagation through time. Average salaries range from $125,000-$197,000 for ML engineers with RNN expertise.

How do I debug RNN training problems?

Systematic debugging approach: monitor gradient norms (check for vanishing/exploding), visualize learning curves (detect overfitting), validate on shorter sequences first, use simpler architectures for baseline comparison, and implement extensive logging. Start simple and gradually increase complexity.

Are there any security concerns with RNNs?

Yes, several concerns exist: adversarial attacks on sequential inputs, privacy issues with temporal data, model inversion attacks to extract training sequences, and backdoor attacks through poisoned training data. Implement input validation, differential privacy, and regular security audits.

Key Takeaways

RNNs are neural networks with memory that process sequential data step-by-step, making them ideal for time series, language, and streaming applications

Market growth is explosive with the neural network software market reaching $34.76 billion in 2025 and projected to hit $139.86 billion by 2030 (32.1% CAGR)

Three main variants serve different needs: Basic RNNs for simple tasks, LSTMs for maximum accuracy, and GRUs for efficiency (fewer parameters and typically faster than LSTMs)

Real-world success stories prove value including Google's 5.6x model compression for mobile speech recognition, Georgia Tech's 4% improvement in heart failure prediction, and superior fraud detection performance

Recent breakthroughs signal an RNN renaissance, with 2024 research demonstrating that minimized RNNs can compete with state-of-the-art models while being dramatically more efficient to train

Industry adoption spans multiple sectors with manufacturing leading at 34.6% growth, followed by finance (23.4% revenue share) and healthcare showing 90%+ accuracy in medical applications

RNNs excel in specific scenarios including real-time processing, memory-constrained environments, variable-length sequences, and streaming data applications

Professional opportunities are strong, with ML engineer salaries ranging from $125,000 to $197,000 and 35% job growth year-over-year amid talent shortages

Future outlook remains positive with edge computing growth, hybrid architectures, and specialized hardware optimizations driving continued RNN innovation through 2030

Implementation requires careful planning including proper architecture selection, gradient management, sequence length optimization, and performance tuning for production success

Your Next Steps

Assess your specific use case - Determine if you have sequential data (text, time series, audio) and whether real-time processing or memory efficiency matters for your application

Start with a simple prototype - Choose GRU for efficiency or LSTM for accuracy, implement a basic model with your data, and establish baseline performance metrics

Learn essential tools - Install TensorFlow or PyTorch, complete online RNN tutorials, and practice with publicly available sequential datasets to build hands-on experience

Join the RNN community - Follow key researchers on Twitter/X, participate in ML forums like Reddit r/MachineLearning, and attend conferences like NeurIPS and ICML for latest developments

Build practical experience - Work on real projects like stock price prediction, sentiment analysis, or time series forecasting to understand RNN behavior in practice

Optimize for production - Learn about model deployment, quantization techniques, and performance monitoring to move from prototype to production systems

Stay current with research - Subscribe to arXiv alerts for RNN papers, follow Google Research and OpenAI blogs, and monitor developments in minimized RNN architectures

Consider career specialization - With average salaries of $156,281 and strong job growth, specializing in sequential ML can be highly lucrative

Explore hybrid approaches - Experiment with combining RNNs with other architectures like Transformers or CNNs for optimal performance

Contribute to open source - Share your implementations, contribute to frameworks like TensorFlow or PyTorch, and help advance the RNN ecosystem

Glossary

Backpropagation Through Time (BPTT): Training method that "unrolls" an RNN across time steps to calculate gradients and update weights based on errors at each time step.

Batch Size: Number of sequences processed simultaneously during training. Research shows batch sizes ≥32 provide 14x performance improvements for RNNs.

Bidirectional RNN: Processes sequences in both forward and backward directions, providing access to both past and future context for each time step.

Cell State: Internal memory mechanism in LSTM networks that carries information across time steps, controlled by forget and input gates.

Exploding Gradients: Problem where gradients become extremely large during training, causing unstable weight updates. Solved with gradient clipping.

Gated Recurrent Unit (GRU): Simplified RNN variant with two gates (update and reset) that has fewer parameters than an LSTM and typically trains faster while achieving similar accuracy.

Gradient Clipping: Technique to prevent exploding gradients by limiting the maximum norm of gradients during backpropagation.

Hidden State: The "memory" of an RNN that carries information from previous time steps and gets updated with each new input.

Long Short-Term Memory (LSTM): Advanced RNN architecture with three gates (forget, input, output) designed to solve vanishing gradient problems and capture long-term dependencies.

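Minimized RNNs: Recent architectures (minLSTM, minGRU) whose gates depend only on the current input rather than the previous hidden state, which allows parallel training; reported training speedups are 175x (minGRU) and 235x (minLSTM) over traditional GRUs and LSTMs.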

Recurrent Neural Network (RNN): Neural network architecture that processes sequential data by maintaining internal state (memory) and sharing parameters across time steps.

RNN Transducer (RNN-T): Specialized RNN architecture used for streaming applications like speech recognition that produces outputs without needing complete input sequences.

Sequence Bucketing: Optimization technique that groups sequences of similar length together to minimize padding and improve training efficiency.

Sequential Data: Data where order matters, such as text (word sequences), audio (sound waves over time), or time series (values over time periods).

Time Step: Individual processing step in an RNN where one element of the sequence is processed along with the current hidden state.

Vanishing Gradients: Problem where learning signals become exponentially weaker as they propagate back through time, making it difficult to learn long-term dependencies.
