What is an Artificial Neural Network (ANN): The Complete Guide to AI's Brain-Like Technology

- Muiz As-Siddeeqi

- Nov 18, 2025

- 26 min read

The AI Revolution is Here - And It Thinks Like You

Imagine a computer that learns like a child discovering the world - making connections, recognizing patterns, and getting smarter with every experience. This isn't science fiction anymore. Artificial neural networks are powering the AI revolution happening right now, from the voice assistant in your phone to the car that can drive itself. These brain-inspired computer systems are behind every major AI breakthrough of the past decade, and they're about to transform every industry on Earth.

TL;DR: What You Need to Know About Artificial Neural Networks

- Neural networks are computer systems inspired by human brains that learn from data to make predictions and decisions
- Market exploding: Neural network software market reached $34.76 billion in 2025, projected to hit $139.86 billion by 2030
- Real applications today: Diagnosing diseases, powering self-driving cars, recommending Netflix shows, detecting fraud
- Simple concept: Artificial neurons receive inputs, process them, and pass signals to other neurons - just like brain cells
- Major breakthrough: Can learn complex patterns that traditional programming cannot handle
- Future potential: Small efficient models, reasoning capabilities, and hardware-based networks coming by 2025-2026

What is an Artificial Neural Network?

An artificial neural network is a computer system inspired by the human brain that learns to recognize patterns and make decisions from data. It consists of interconnected artificial neurons that process information in layers, adjusting their connections through training to solve complex problems like image recognition, language translation, and prediction.

Background & Definitions

The story of artificial neural networks begins long before computers existed. In 1676, mathematician Gottfried Wilhelm Leibniz published the chain rule of calculus - unknowingly creating a foundation for how modern neural networks learn. But the real breakthrough came in 1943 when neurophysiologist Warren McCulloch and logician Walter Pitts created the first mathematical model of a neural network.

Their idea was revolutionary: what if we could build machines that think like brains? Instead of following rigid programming rules, these machines would learn from experience, just like humans do.

What Makes Neural Networks Special

Traditional computers follow instructions step by step. If you want a computer to recognize a cat in a photo, you'd have to program every possible rule: "cats have pointed ears," "cats have whiskers," "cats have four legs." But what about cats sitting down? Cats with hidden ears? This approach quickly becomes impossible.

Neural networks flip this approach entirely. Instead of programming rules, you show the network thousands of cat photos labeled "cat" and thousands of non-cat photos labeled "not cat." The network figures out the patterns on its own. This is machine learning in action.

Core Components Explained Simply

Artificial Neurons: Think of these as tiny decision-makers. Each neuron receives multiple signals (inputs), weighs their importance, adds them up, and decides whether to send a signal forward. Just like brain cells, but made of math instead of biology.

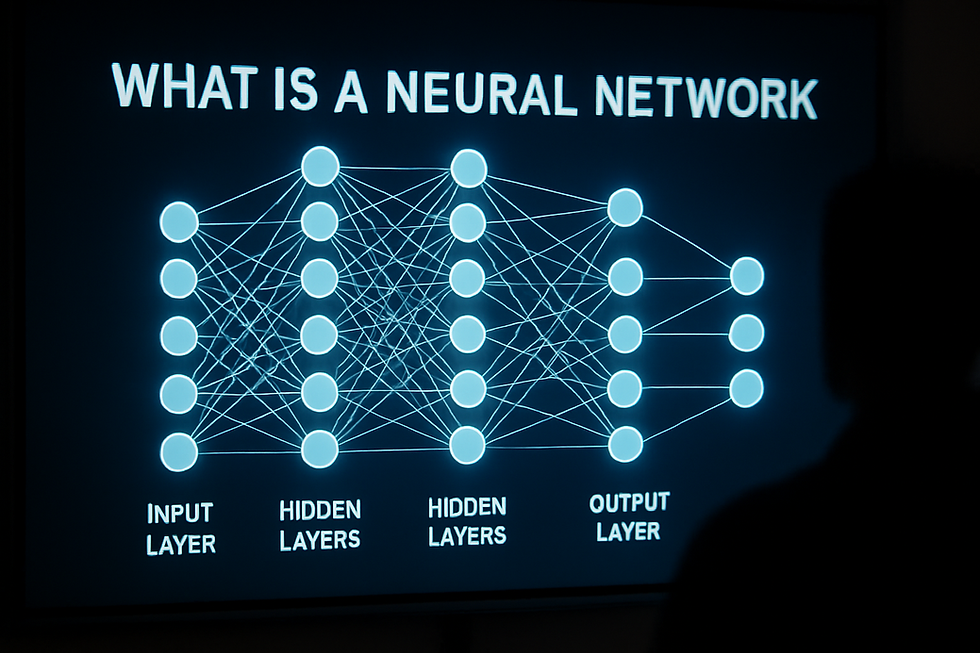

Layers: Neurons are organized in layers. The first layer receives raw data (like pixels in an image). Hidden layers in the middle process and combine information. The final layer gives you the answer (like "this is a cat").

Weights and Connections: These determine how much influence each input has. During learning, the network adjusts these weights to improve its performance. It's like strengthening useful connections in your brain while weakening unhelpful ones.
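To make the neuron, layer, and weight ideas above concrete, here is a minimal Python sketch. The function names (`neuron`, `layer`), the sigmoid activation, and every number in it are assumptions chosen for illustration; real networks learn their weights rather than having them typed in.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Weigh each input, add everything up, and apply an activation."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(weighted_sum)

def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical values: three input signals feeding a layer of two neurons.
inputs = [0.5, 0.8, 0.2]
hidden = layer(inputs, [[0.4, -0.6, 0.9], [-0.3, 0.7, 0.1]], [0.1, -0.2])
print(hidden)
```

A larger weight makes its input count for more in the sum, which is all "strengthening a connection" means here.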

Current Landscape

The artificial neural network industry is experiencing explosive growth that even experts struggle to keep up with. The numbers tell an incredible story of rapid adoption across every sector of the global economy.

Market explosion drives unprecedented growth

The neural network software market reached $34.76 billion in 2025 and is projected to grow at a staggering 32.10% CAGR to reach $139.86 billion by 2030, according to Mordor Intelligence. To put this in perspective, this market is doubling approximately every 2.5 years.
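The growth arithmetic above can be checked in a few lines of Python. This sketch uses only the figures quoted in the paragraph; the variable names are illustrative.

```python
import math

start_value = 34.76   # USD billions in 2025, as quoted above
cagr = 0.3210         # 32.10% compound annual growth rate
years = 5             # 2025 to 2030

# Compound growth: value after n years = start * (1 + rate) ** n
projected = start_value * (1 + cagr) ** years

# Doubling time: solve (1 + rate) ** t = 2 for t
doubling_time = math.log(2) / math.log(1 + cagr)

print(f"Projected 2030 market: ${projected:.2f}B")
print(f"Doubling time: {doubling_time:.2f} years")
```

The projection lands within rounding of the quoted 2030 figure, and the doubling time comes out at roughly 2.5 years.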

The broader deep learning market, which includes neural networks, hit $93.72 billion in 2024 with projections reaching $1.42 trillion by 2034 - a 31.24% CAGR that represents one of the fastest-growing technology sectors in history.

Leading companies reshape entire industries

Google/Alphabet leads with TensorFlow (its open-source neural network framework), DeepMind's breakthrough protein research, and Gemini AI models. Its neural networks power search results for billions of people daily, and DeepMind's Demis Hassabis and John Jumper shared the 2024 Nobel Prize in Chemistry for AlphaFold2's protein structure predictions.

Microsoft invested $31 billion in data centers and acquired 485,000 NVIDIA chips specifically for AI projects in 2024. Their Azure AI platform provides neural network capabilities to enterprises worldwide, while their partnership with OpenAI brings neural network technology to millions through ChatGPT and Copilot.

NVIDIA dominates the hardware side, capturing 43% of global AI chip spending in 2024. Their GPUs train most of the world's neural networks, making them essential infrastructure for the AI revolution. Their recent GB200 Superchips and neural shading technology show they're pushing into new applications beyond traditional computing.

Investment reaches unprecedented levels

AI investment in the US alone hit $109.1 billion in 2024, with neural networks capturing a significant portion. Notable funding rounds include:

- Databricks: $10 billion Series J (December 2024)
- OpenAI: $6.6 billion Series B (October 2024)
- Anthropic: $4 billion corporate round (November 2024)

Healthcare AI startups specifically raised $5.6 billion in 2024, growing nearly 3x year-over-year, with most using neural network technologies for medical imaging, drug discovery, and diagnostic support.

Regional adoption patterns reveal global race

North America maintains its lead with 38.06% of global neural network software revenue in 2024, driven by established tech giants and venture capital ecosystem. The US AI market alone reached $50.16 billion in 2024.

Asia-Pacific shows the fastest growth at 35.7% CAGR through 2030, led by China's 83% corporate AI adoption rate and India's 60% adoption rate. China leads global AI adoption, while India benefits from strong government support through NASSCOM initiatives.

Europe holds 24.97% of global AI market share with Germany leading at $25.7 billion AI market value. European companies focus heavily on regulatory compliance and responsible AI development, driven by the EU AI Act requirements.

How Neural Networks Work

Understanding how neural networks function doesn't require a PhD in mathematics. The basic concept mirrors how your own brain processes information, but uses computer calculations instead of biological neurons.

The biological inspiration behind artificial networks

Your brain contains roughly 86 billion neurons connected by 100 trillion synapses. When you see a dog, specific neurons fire, sending electrical signals through your neural pathways. Your brain recognizes "dog" not through a programmed checklist, but because millions of neurons work together, each contributing a small piece to the recognition process.

Artificial neural networks copy this approach using mathematical neurons instead of biological ones. Each artificial neuron receives inputs (like dendrites), processes them (like a cell body), and sends outputs (like axons) to other neurons.

Step-by-step process: from input to decision

Input Layer: This receives raw data. For image recognition, each pixel becomes an input. For text analysis, each word or character becomes an input. The input layer simply passes information forward - it doesn't make any decisions.

Hidden Layers: This is where the magic happens. Multiple hidden layers process information in increasingly sophisticated ways. The first hidden layer might detect simple edges and colors. Deeper layers combine these to recognize shapes, then objects, then complete scenes. Modern networks can have hundreds of hidden layers.

Output Layer: This gives you the final answer. For cat recognition, it might output "85% probability this is a cat." For language translation, it outputs translated text. For stock prediction, it outputs buy/sell recommendations.
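The input-to-output journey described above can be sketched in plain Python. Everything here is an assumption for illustration: a tiny 4-input, 3-hidden-neuron, 2-output network, hand-picked weights, a ReLU activation in the hidden layer, and a softmax at the end to turn scores into probabilities.

```python
import math

def relu(values):
    """Keep positive signals, zero out negative ones."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum per output neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical network: 4 inputs -> 3 hidden neurons -> 2 outputs.
pixels = [0.9, 0.1, 0.4, 0.7]                      # input layer: raw data
W1 = [[0.2, -0.5, 0.1, 0.8], [-0.3, 0.4, 0.9, -0.1], [0.6, 0.2, -0.7, 0.3]]
b1 = [0.0, 0.1, -0.1]
W2 = [[1.0, -1.2, 0.5], [-0.8, 0.9, 0.3]]
b2 = [0.05, -0.05]

hidden = relu(dense(pixels, W1, b1))               # hidden layer
probs = softmax(dense(hidden, W2, b2))             # output layer

for label, p in zip(["cat", "not cat"], probs):
    print(f"{label}: {p:.1%}")
```

With untrained, made-up weights the printed probabilities mean nothing yet; training is what makes them useful.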

Learning happens through repetition and adjustment

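Neural networks learn through a process called backpropagation. Its mathematical core, reverse-mode automatic differentiation, was published by Seppo Linnainmaa in 1970, and the method was popularized for neural networks by David Rumelhart, Geoffrey Hinton, and Ronald Williams in 1986.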

Here's how it works in simple terms:

1. Forward Pass: Data flows through the network, layer by layer, producing a prediction
2. Error Calculation: Compare the network's guess with the correct answer
3. Backward Pass: Calculate how much each connection contributed to the error
4. Weight Adjustment: Modify connections to reduce future errors
5. Repeat: Process thousands or millions of examples until the network learns
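The five steps above can be sketched as a small training loop in plain Python. This is a minimal illustration under stated assumptions, not a production recipe: the toy task (the logical AND of two inputs), the network size, the sigmoid activations, the squared-error loss, and the learning rate are all choices made for this example.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy task (an assumption for illustration): learn the logical AND of two inputs.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]

def train(epochs=2000, learning_rate=0.5, hidden_size=3, seed=0):
    rng = random.Random(seed)
    # Start from random weights: the network knows nothing yet.
    w_hidden = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden_size)]
    b_hidden = [0.0] * hidden_size
    w_out = [rng.uniform(-1, 1) for _ in range(hidden_size)]
    b_out = 0.0
    losses = []
    for _ in range(epochs):                          # 5. Repeat
        total = 0.0
        for x, target in DATA:
            # 1. Forward pass: input -> hidden layer -> output
            h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
                 for row, b in zip(w_hidden, b_hidden)]
            y = sigmoid(sum(w * hj for w, hj in zip(w_out, h)) + b_out)
            # 2. Error calculation: half the squared error
            error = y - target
            total += 0.5 * error ** 2
            # 3. Backward pass: the chain rule gives each connection's share
            d_out = error * y * (1 - y)
            d_hidden = [d_out * w_out[j] * h[j] * (1 - h[j])
                        for j in range(hidden_size)]
            # 4. Weight adjustment: step against the gradient
            for j in range(hidden_size):
                w_out[j] -= learning_rate * d_out * h[j]
                b_hidden[j] -= learning_rate * d_hidden[j]
                for i in range(len(x)):
                    w_hidden[j][i] -= learning_rate * d_hidden[j] * x[i]
            b_out -= learning_rate * d_out
        losses.append(total / len(DATA))
    return losses

losses = train()
print(f"average loss, first pass: {losses[0]:.4f}, last pass: {losses[-1]:.4f}")
```

The average loss on the last pass should be well below the first, which is the whole point of the loop: each round nudges the weights toward fewer mistakes.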

This process resembles how children learn. Show a child many pictures while saying "dog" or "cat," and eventually they learn to distinguish between the two. Neural networks follow the same pattern but can process millions of examples much faster than humans.

Different types solve different problems

Feedforward Networks send information in one direction only, from input to output. These work well for basic classification tasks like spam detection or simple image recognition.

Convolutional Neural Networks (CNNs) specialize in processing images and videos. Invented by Kunihiko Fukushima in 1979 and perfected by Yann LeCun at Bell Labs in 1989, CNNs use "filters" that scan across images to detect features like edges, textures, and shapes.

Recurrent Neural Networks (RNNs) have memory - they can use information from previous inputs. This makes them perfect for processing sequences like speech, text, or time series data. However, traditional RNNs struggle with long sequences.

Long Short-Term Memory (LSTM) networks, created by Sepp Hochreiter and Jürgen Schmidhuber in 1997, solved the memory problem. LSTMs can remember important information for long periods while forgetting irrelevant details. They revolutionized speech recognition, machine translation, and language modeling.

Transformer Networks, introduced in 2017 with the paper "Attention Is All You Need," process all parts of input simultaneously using "attention" mechanisms. This makes them much faster to train than RNNs and capable of handling very long sequences. Transformers power modern language models like GPT and BERT.
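The "attention" idea behind Transformers can be sketched in plain Python. This is a simplified illustration of scaled dot-product attention: real Transformers first pass each token through learned projection matrices to get queries, keys, and values, while this sketch reuses the raw token vectors for all three. The toy vectors are made up.

```python
import math

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # How relevant is every position to this query?
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to those relevance weights.
        blended = [sum(w * v[c] for w, v in zip(weights, values))
                   for c in range(len(values[0]))]
        outputs.append(blended)
    return outputs

# Hypothetical 3-token sequence with 2-dimensional embeddings.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = attention(tokens, tokens, tokens)
for row in mixed:
    print([round(v, 3) for v in row])
```

Every position looks at every other position in one step, with no dependence on a previous time step, which is why this computation parallelizes better than an RNN's sequential updates.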

Step-by-Step Learning Process

Let's follow a neural network as it learns to recognize handwritten digits - the "Hello World" of machine learning that launched practical neural network applications.

Phase 1: Network initialization and data preparation

The network starts with random weights and connections. It knows nothing about digits and would perform no better than random guessing. We feed it the MNIST dataset - 60,000 handwritten digit images each labeled with the correct number (0-9).

Each image is 28x28 pixels, creating 784 input neurons (one per pixel). The network has several hidden layers with hundreds of neurons each, and 10 output neurons (one for each digit 0-9).
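To get a feel for the size of such a network, the sketch below counts its adjustable numbers (weights and biases). The hidden layer sizes of 128 and 64 are assumptions for illustration; the article only says "hundreds of neurons each".

```python
# 784 inputs and 10 outputs come from the text; 128 and 64 are assumed hidden sizes.
layer_sizes = [784, 128, 64, 10]

def count_parameters(sizes):
    """Each layer has one weight per input-output pair plus one bias per output."""
    total = 0
    for n_in, n_out in zip(sizes, sizes[1:]):
        total += n_in * n_out + n_out
    return total

print("inputs per image:", 28 * 28)
print("trainable parameters:", count_parameters(layer_sizes))
```

Even this small, assumed architecture has over a hundred thousand values to tune, which is why training needs so many examples.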

Phase 2: First attempts and massive errors

When shown the first handwritten "7," the network's random weights produce nonsense. Maybe it outputs 40% confidence for "3," 35% for "1," and only 8% for "7." This is completely wrong, creating a large error signal.

The network calculates exactly how wrong each connection was. Connections that pushed toward the wrong answer get weakened. Connections that pointed toward the right answer (even accidentally) get strengthened.
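One common way to turn "how wrong was that guess" into a number is cross-entropy loss: the negative logarithm of the probability the network gave to the correct answer. The article does not name a loss function, so treat cross-entropy as an assumption here; the 8% figure is from the example above, and the 95% figure is a hypothetical post-training confidence.

```python
import math

def cross_entropy(prob_of_correct_class):
    """Low confidence in the right answer produces a large loss."""
    return -math.log(prob_of_correct_class)

untrained = cross_entropy(0.08)   # only 8% confidence for the true digit "7"
trained = cross_entropy(0.95)     # hypothetical confidence after training

print(f"loss before training: {untrained:.3f}")
print(f"loss after training:  {trained:.3f}")
```

The loss shrinks toward zero as the network's confidence in the correct digit approaches 100%.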

Phase 3: Pattern recognition emerges gradually

After processing thousands of examples, subtle patterns begin emerging. Neurons in early layers start detecting horizontal lines, vertical lines, and curves. Middle layers combine these to recognize shapes like loops and intersections. Final layers combine shapes to recognize complete digits.

This process mirrors human learning. Children first see individual strokes and lines, then learn to combine them into letters and numbers. The difference is neural networks can process millions of examples in hours rather than years.
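Here is a rough sketch of what "detecting a vertical line" means for an early layer. A small filter slides across the image and responds strongly wherever brightness changes from left to right. The filter below is written by hand as an assumption for illustration; a real network learns its filters during training.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    As in most deep learning libraries, the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

# Toy 5x5 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written vertical-edge filter: compares the right column with the left.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)   # each row prints [3, 3, 0]
```

The large values mark where the dark-to-bright edge sits, and the zeros mark the flat bright region where nothing changes.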

Phase 4: Fine-tuning and expertise development

After seeing all 60,000 training examples multiple times, the network becomes quite skilled. It learns that "1" usually has vertical lines, "0" has closed loops, and "8" has two stacked loops. But it also learns subtle variations - that handwritten "7" sometimes has horizontal lines across the middle, or that "9" can look similar to "4" in certain handwriting styles.

Phase 5: Real-world testing and deployment

The trained network gets tested on 10,000 new handwritten digits it has never seen before. Modern networks achieve over 99% accuracy on this task - better than many humans reading messy handwriting.

This same learning process, scaled up with more data and computing power, enables neural networks to recognize faces, translate languages, drive cars, and diagnose diseases.

Real-World Case Studies

Case Study 1: Google's diabetic retinopathy detection saves sight worldwide

Company: Google AI in collaboration with Aravind Eye Hospital and Sankara Nethralaya

Timeline: 2015-2017 development, 2018+ global deployment

Problem: India faces a critical shortage of 127,000 eye doctors, with 45% of patients suffering preventable vision loss before diagnosis.

Google trained an Inception-v3 convolutional neural network on over 128,000 retinal photographs to detect diabetic retinopathy, a leading cause of blindness. The system achieved 97.5% sensitivity and 93.4% specificity - matching or exceeding board-certified ophthalmologists.
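Sensitivity and specificity are simple ratios, and a short sketch shows how they are computed. The patient counts below are hypothetical, chosen only so that the results match the percentages quoted above; they are not the study's actual numbers.

```python
def sensitivity(true_pos, false_neg):
    """Share of people with the disease that the system correctly flags."""
    return true_pos / (true_pos + false_neg)

def specificity(true_neg, false_pos):
    """Share of healthy people that the system correctly clears."""
    return true_neg / (true_neg + false_pos)

# Hypothetical screening results: 200 patients with the disease, 1,000 without.
true_pos, false_neg = 195, 5
true_neg, false_pos = 934, 66

print(f"sensitivity: {sensitivity(true_pos, false_neg):.1%}")
print(f"specificity: {specificity(true_neg, false_pos):.1%}")
```

High sensitivity means few missed cases; high specificity means few healthy patients sent for unnecessary follow-up.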

Measurable outcomes: The system can analyze thousands of images per hour, reduced screening costs by an estimated 70% per patient, and enabled screening in remote areas previously without specialist access. Dr. Andrew Beam from Harvard Medical School called it "the brave new world in medicine."

Business impact: The system is now deployed across multiple countries including India, Thailand, and the UK, potentially preventing millions of cases of preventable blindness worldwide.

Case Study 2: Tesla's end-to-end neural network revolutionizes autonomous driving

Company: Tesla, Inc.

Timeline: 2014-2024 (ongoing evolution)

Problem: Traditional autonomous driving systems required 300,000+ lines of hand-coded rules that couldn't handle infinite real-world driving variations.

Tesla developed HydraNet and end-to-end neural networks using data from over 500,000 vehicles globally. Their system processes 8 camera feeds in real-time using modified ResNet-50 architectures and Transformer networks for temporal reasoning.

Measurable outcomes: FSD v12 reduced the system from 300,000 lines of code to approximately 3,000 lines. The neural network processes 80+ frames per second and enables the vehicle to handle previously impossible scenarios like construction zones and emergency vehicles automatically.

Business impact: Tesla's FSD capability generates $15,000-20,000 per vehicle in additional revenue and contributed significantly to Tesla's $800+ billion market valuation. The technology has moved the entire automotive industry toward learning-based rather than rule-based systems.

Case Study 3: Airbus manufacturing gets 1000x speed improvement

Company: Airbus with Accenture Labs

Timeline: 2020-2023 implementation

Problem: Aircraft final assembly inspection required time-intensive manual processes prone to human error.

Airbus implemented computer vision neural networks analyzing multiple camera feeds covering assembly stations. The system uses CNNs for object detection and motion recognition networks to automatically detect assembly completion.

Measurable outcomes: The system processes millions of images per minute compared to hundreds manually - a 1000x speed improvement. It dramatically improved reading accuracy, eliminated human error in assembly step logging, and reduced final assembly inspection time significantly.

Business impact: The system is scalable across Airbus's global manufacturing network, providing significant cost savings while maintaining enhanced safety standards and freeing workers for higher-value tasks.

Case Study 4: Netflix neural networks drive 80% of viewing decisions

Company: Netflix, Inc.

Timeline: 2006-present (continuous evolution)

Problem: With thousands of titles and diverse user preferences, Netflix needed to solve the discovery problem to prevent user churn.

Netflix developed a multi-architecture approach combining CNNs for image processing, RNNs and LSTMs for viewing pattern analysis, and Variational Autoencoders for user preference modeling. The system creates unique homepages for each of 260+ million subscribers.

Measurable outcomes: 80%+ of viewing activity comes from recommendations. The system achieves an RMSE (root-mean-square error, where lower is better) of 0.88 and generates recommendations in milliseconds. Netflix estimates the recommendation system saves $1 billion annually through reduced churn.
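RMSE measures how far predicted ratings fall from actual ratings on average, so lower is better. A minimal sketch, using made-up ratings on a 1-5 star scale:

```python
import math

def rmse(actual, predicted):
    """Root-mean-square error: square the misses, average them, take the root."""
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Hypothetical star ratings for four titles.
actual_ratings = [4.0, 3.0, 5.0, 2.0]
predicted_ratings = [3.5, 3.0, 4.0, 3.0]

score = rmse(actual_ratings, predicted_ratings)
print(f"RMSE: {score:.2f}")   # prints "RMSE: 0.75"
```

Because the errors are squared before averaging, one badly wrong prediction hurts the score more than several slightly wrong ones.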

Business impact: The recommendation quality serves as a major competitive differentiator and enables data-driven content development, including successful original programming investments.

Case Study 5: Bayesian neural networks personalize diabetes treatment

Organization: Multi-institutional healthcare research consortium

Timeline: 2022-2024

Problem: Diabetes treatment outcomes vary significantly due to individual factors, but conventional models lack uncertainty quantification for clinical decisions.

Researchers developed Bayesian Neural Networks using TensorFlow Probability to predict HbA1c level changes while providing uncertainty estimates. The system treats network weights as probability distributions rather than fixed values.
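The idea of treating weights as probability distributions can be sketched without any special library. The example below is a deliberately tiny stand-in, not the researchers' TensorFlow Probability model: a single weight drawn from a Gaussian, with hypothetical numbers, sampled many times to get both a prediction and a spread around it.

```python
import random
import statistics

def predict_with_uncertainty(x, weight_mean, weight_std, bias, samples=1000, seed=0):
    """Sample the weight many times; the spread of outputs is the uncertainty."""
    rng = random.Random(seed)
    draws = []
    for _ in range(samples):
        w = rng.gauss(weight_mean, weight_std)   # one plausible value of the weight
        draws.append(w * x + bias)
    return statistics.mean(draws), statistics.stdev(draws)

# Hypothetical numbers: predicted HbA1c (%) after a treatment "dose" of 2.0.
mean, spread = predict_with_uncertainty(x=2.0, weight_mean=-0.4, weight_std=0.1, bias=8.0)
print(f"predicted HbA1c: {mean:.2f} +/- {spread:.2f}")
```

A clinician reading the output sees not just a number but how confident the model is in it, which is the point of the Bayesian approach.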

Measurable outcomes: The system achieved 85.41% accuracy, 84.87% precision, and 88.91% F1-Score with well-calibrated uncertainty intervals. This enabled personalized treatment strategies with clinically relevant confidence measures.
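F1-Score is the harmonic mean of precision and recall, so the two quoted figures imply a recall value even though the text does not state one. The sketch below does that arithmetic; the implied recall is a derived number, not one reported by the researchers.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

def recall_from(precision, f1):
    """Rearrange F1 = 2PR / (P + R) to solve for R."""
    return f1 * precision / (2 * precision - f1)

precision, f1 = 0.8487, 0.8891   # figures quoted above
recall = recall_from(precision, f1)

print(f"implied recall: {recall:.2%}")
print(f"check, F1 from precision and implied recall: {f1_score(precision, recall):.2%}")
```

An F1 above the precision, as here, simply means recall is the higher of the two.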

Business impact: The framework enables tailored treatment strategies, improved patient outcomes, reduced unnecessary interventions, and better resource allocation. The approach is applicable to other chronic conditions beyond diabetes.

Regional & Industry Variations

Healthcare leads adoption with 27% market share

Healthcare represents the largest application segment for neural networks, driven by FDA approvals increasing from 6 AI-enabled medical devices in 2015 to 223 in 2023. AI could slash drug discovery costs by up to 70%, with companies like Isomorphic Labs preparing AI-designed drugs for human trials in 2024.

Regional patterns: North America leads healthcare AI adoption due to established healthcare IT infrastructure and regulatory clarity. Asia-Pacific shows rapid growth due to large population bases ideal for training medical AI systems. Europe focuses on privacy-compliant medical AI development under GDPR frameworks.

Financial services prioritize fraud detection and algorithmic trading

The BFSI (Banking, Financial Services, and Insurance) sector represents 23.4% of neural network software market revenue in 2024. 58% of finance companies embraced AI technologies in 2024, a 21% increase from 2023.

Google's banking clients reported 60% fewer false positives and 50% faster detection-to-action time for fraud detection. Financial institutions average 3.7x ROI for generative AI implementations, with top performers achieving 10.3x ROI.

Manufacturing experiences fastest growth at 34.6% CAGR

77% of manufacturers implemented AI in 2024, up from 70% in 2023. The manufacturing sector is projected to gain $3.8 trillion by 2035 from AI adoption through predictive maintenance, quality control, and production optimization.

Regional focus: Asia-Pacific leads manufacturing AI adoption due to high manufacturing concentration. Europe emphasizes automotive applications, while North America focuses on aerospace and high-tech manufacturing.

Automotive drives deep learning with 39.6% market share

The automotive neural network software market reached $37.5 billion in 2023 with over 32% expected CAGR from 2024-2032. Major applications include autonomous driving systems, ADAS (Advanced Driver Assistance Systems), and predictive maintenance.

Tesla, Waymo, and traditional automakers are deploying neural networks for perception systems processing camera, LiDAR, and radar data in real-time.

Pros & Cons Analysis

Advantages that drive widespread adoption

Pattern Recognition Superiority: Neural networks excel at recognizing complex patterns that traditional programming cannot handle. They can identify subtle relationships in data that humans might miss entirely.

Adaptive Learning: Unlike traditional software that requires manual updates, neural networks improve automatically as they process more data. This self-improvement capability makes them invaluable for dynamic environments.

Versatile Applications: The same basic neural network architecture can handle image recognition, language translation, speech processing, and prediction tasks with appropriate training data.

Scalability: Modern neural networks can process massive datasets and handle millions of simultaneous users, making them suitable for global applications like search engines and social media platforms.

Real-Time Processing: Once trained, neural networks can make decisions in milliseconds, enabling applications like autonomous driving and high-frequency trading that require instant responses.

Disadvantages and limitations that require consideration

Data Dependency: Neural networks require massive amounts of high-quality training data. Poor or biased data leads to poor performance, and collecting sufficient data can be expensive and time-consuming.

Black Box Problem: Understanding why a neural network made a specific decision can be extremely difficult. This lack of interpretability creates challenges in regulated industries like healthcare and finance.

Computational Requirements: Training large neural networks requires significant computing resources, often costing millions of dollars and consuming enormous amounts of electricity.

Overfitting Risk: Networks may memorize training data rather than learning generalizable patterns, leading to poor performance on new, unseen data.

Adversarial Vulnerability: Small, carefully crafted changes to inputs can cause neural networks to make drastically wrong predictions, creating security concerns.

Training Complexity: Designing effective neural network architectures requires expertise, and training can take weeks or months even with powerful hardware.

Myths vs Facts

Myth 1: "Bigger models are always better"

Fact: MIT Technology Review identifies small language models as a key 2025 breakthrough. Smaller, more efficient models can match large models on specific tasks while consuming significantly less computational resources. Google's Gemma models and other efficient architectures prove that targeted optimization often outperforms brute-force scaling.

Myth 2: "Neural networks learn exactly like human brains"

Fact: While inspired by brains, artificial neural networks operate fundamentally differently. Human brains use highly efficient, sparse electrical spikes, with only a small fraction of neurons firing at any moment, while traditional neural networks activate all components for every input. This is why researchers are developing dynamic neural networks that better mimic brain efficiency.

Myth 3: "Transformers will dominate AI indefinitely"

Fact: Multiple viable alternatives are emerging rapidly. RWKV models combine transformer training efficiency with RNN inference capabilities. State space models like Mamba offer constant memory usage during inference. Hybrid architectures like StripedHyena-Hessian-7B show competitive performance with improved efficiency.

Myth 4: "AI systems are black boxes by nature"

Fact: Significant research focuses on explainable AI and interpretability. Enhanced model interpretability was identified as a major 2024 trend, with tools being developed for understanding decision-making processes. Regulatory frameworks like the EU AI Act embed transparency requirements, driving innovation in interpretable AI systems.

Myth 5: "Neural networks work perfectly for all problems"

Fact: Neural networks have specific limitations including catastrophic forgetting, adversarial vulnerability, and struggles with systematic generalization. They're particularly poor for some regression tasks where traditional statistical methods outperform them. Success requires careful problem selection and architectural choices.

Myth 6: "AI will replace all human workers"

Fact: Current evidence shows AI augments rather than replaces human workers in most applications. Healthcare AI assists doctors but requires human oversight. Manufacturing AI optimizes processes but needs human maintenance. The most successful implementations combine human expertise with AI capabilities.

Comparison Tables

Neural Network Types Comparison

| Type | Best For | Training Speed | Memory Usage | Accuracy | Real-time Performance |
| --- | --- | --- | --- | --- | --- |
| Feedforward | Simple classification | Fast | Low | Good | Excellent |
| CNN | Images, video | Medium | Medium | Excellent | Good |
| RNN | Sequences, text | Slow | High | Good | Medium |
| LSTM | Long sequences | Slow | High | Excellent | Medium |
| Transformer | Language, translation | Medium | Very High | Excellent | Good |
| RWKV | Mixed tasks | Fast | Low | Very Good | Excellent |

Market Segments by Growth Rate

| Industry | 2024 Market Share | CAGR 2024-2030 | Key Applications | Leading Companies |
| --- | --- | --- | --- | --- |
| Healthcare | 27% | 29.1% | Medical imaging, drug discovery | Google AI, Isomorphic Labs |
| BFSI | 23.4% | 28.6% | Fraud detection, algorithmic trading | JPMorgan, Goldman Sachs |
| Manufacturing | 18% | 34.6% | Quality control, predictive maintenance | Siemens, GE |
| Automotive | 15% | 32% | Autonomous driving, ADAS | Tesla, Waymo |
| Technology | 12% | 30% | Recommendation systems, search | Google, Microsoft, Meta |

Regional Market Analysis

| Region | 2024 Market Share | CAGR | AI Adoption Rate | Investment 2024 | Key Strengths |
| --- | --- | --- | --- | --- | --- |
| North America | 38.06% | 30.5% | 65% | $109.1B | Venture capital, tech giants |
| Asia-Pacific | 32% | 35.7% | 60% | $8.8B | Manufacturing, population scale |
| Europe | 24.97% | 28% | 58% | $10.9B | Regulation, responsible AI |
| Others | 4.97% | 32% | 45% | $2.2B | Emerging markets |

Pitfalls & Risks

Technical risks that can derail projects

Data Quality Problems: Garbage in, garbage out remains the fundamental rule. Biased training data leads to biased AI systems that can perpetuate discrimination. Insufficient data results in poor generalization. Outdated data causes models to fail when conditions change.

Overfitting Dangers: Networks may achieve 99% accuracy on training data but fail completely on new data. This happens when models memorize specific examples rather than learning general patterns. Complex networks are particularly susceptible to overfitting.

Adversarial Attacks: Small, invisible changes to inputs can fool neural networks completely. A stop sign with carefully placed stickers might be classified as a speed limit sign by an autonomous vehicle's vision system, creating serious safety risks.

Catastrophic Forgetting: When neural networks learn new tasks, they often forget previously learned information completely. This makes them unsuitable for applications requiring continuous learning without data retention.
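The adversarial attack risk described above can be illustrated on the simplest possible model. This sketch uses a hand-made linear classifier and a sign-of-the-gradient nudge in the spirit of the fast gradient sign method; the weights, input, and step size are all assumptions for illustration, and attacks on real image models are subtler.

```python
def score(x, weights, bias):
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def predict(x, weights, bias):
    """Class 1 if the score is positive, otherwise class 0."""
    return 1 if score(x, weights, bias) > 0 else 0

def sign(value):
    return (value > 0) - (value < 0)

# Hypothetical linear classifier and input.
weights, bias = [2.0, -3.0, 1.0], 0.0
x = [0.5, 0.1, 0.2]

# For a linear model, the gradient of the score with respect to the input
# is just the weight vector, so stepping against its sign lowers the score fastest.
epsilon = 0.2
x_adv = [xi - epsilon * sign(w) for xi, w in zip(x, weights)]

print("original prediction:   ", predict(x, weights, bias))
print("adversarial prediction:", predict(x_adv, weights, bias))
```

No feature moved by more than 0.2, yet the prediction flips, because every small change was aimed in the most damaging direction at once.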

Business and operational risks

Massive Resource Requirements: Training state-of-the-art models can cost millions of dollars in computing resources and electricity. OpenAI reportedly spent over $100 million training GPT-4, putting advanced AI capabilities beyond the reach of most organizations.

Talent Scarcity: AI experts command salaries of $300,000-$500,000+ annually, and demand far exceeds supply. Many projects fail not due to technical issues but due to lack of qualified personnel.

Regulatory Compliance: The EU AI Act, whose obligations for general-purpose AI models apply from August 2, 2025, requires extensive documentation, risk assessment, and monitoring for AI systems. Non-compliance can result in fines of up to 7% of global annual turnover for the most serious violations.

Vendor Lock-in: Dependence on specific cloud platforms, frameworks, or hardware creates strategic risks. NVIDIA's GPU dominance means supply constraints can halt entire AI projects.

Ethical and societal risks

Bias and Discrimination: AI systems trained on historical data perpetuate existing inequalities. Hiring algorithms discriminate against women, facial recognition fails for minorities, and loan approval systems exhibit racial bias.

Privacy Violations: Neural networks trained on personal data may inadvertently memorize and reveal private information. Membership inference attacks can determine if specific individuals were included in training datasets.

Job Displacement: While AI creates new jobs, it also eliminates others. Manufacturing automation reduces factory employment, algorithmic trading displaces human traders, and AI writing tools threaten content creation jobs.

Misinformation and Deepfakes: Generative neural networks can create realistic fake images, videos, and text that are increasingly difficult to detect, threatening democratic processes and social trust.

Risk mitigation strategies

Data Governance: Implement rigorous data quality checks, bias detection systems, and diverse dataset collection processes. Regular auditing ensures training data remains representative and current.

Model Validation: Use separate validation datasets, cross-validation techniques, and adversarial testing to ensure robust performance on unseen data.

Interpretability Tools: Deploy explainable AI systems, particularly in regulated industries. Tools like LIME, SHAP, and attention visualization help understand model decisions.

Continuous Monitoring: Implement systems to detect model drift, performance degradation, and adversarial attacks in production environments.

Ethical AI Frameworks: Adopt responsible AI principles, diverse development teams, and stakeholder consultation processes throughout the development lifecycle.
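The cross-validation technique mentioned under Model Validation can be sketched in a few lines. The helper name `k_fold_indices` and the sample counts are assumptions for illustration; libraries such as scikit-learn provide production-ready versions.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (training, validation) index lists; each sample validates exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        validation = folds[i]
        training = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield training, validation

splits = list(k_fold_indices(n_samples=10, k=5))
for training, validation in splits:
    print("train on", sorted(training), "validate on", sorted(validation))
```

Training on four folds and scoring on the fifth, then rotating, gives five performance estimates on data the model never saw, which makes overfitting much harder to miss.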

Future Outlook

Small models revolutionize accessibility by 2025

MIT Technology Review identifies 2025 as the breakthrough year for small language models that can compete with large models on specific tasks while consuming significantly fewer resources. This democratizes AI capabilities for organizations without massive computing budgets.

Google's Gemma models and similar efficient architectures prove that targeted optimization often outperforms brute-force scaling. This trend will make neural networks accessible to small businesses, educational institutions, and developing countries previously excluded by computational requirements.

Reasoning capabilities become standard

Following OpenAI's o1 release in September 2024, models that "reason" step-by-step will become standard by 2025-2026. Google's Gemini 2.0 Flash Thinking and similar systems show that reasoning-enhanced models provide more reliable and explainable outputs.

This development addresses one of neural networks' biggest weaknesses - the black box problem - by making decision processes more transparent and verifiable.

Hardware integration transforms efficiency

Logic-gate networks, which map directly onto the logic gates in computer chips, reportedly consume "hundreds of thousands" of times less energy than traditional neural networks. While training currently takes hundreds of times longer, breakthrough optimizations expected by 2025-2026 could revolutionize AI efficiency.

Neuromorphic hardware that better mimics biological neural processes shows promise for approaching human brain efficiency levels, potentially enabling AI systems that operate on minimal power consumption.

Alternative architectures challenge transformer dominance

RWKV models combining transformer training efficiency with RNN inference capabilities are scaling to 14+ billion parameters. State space models like Mamba offer constant memory usage during inference, addressing one of transformers' major limitations.

Hybrid architectures combining multiple approaches show competitive performance with improved efficiency, suggesting the future lies in specialized architectures rather than one-size-fits-all solutions.
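
To make the "constant memory usage during inference" claim concrete, here is a toy scalar linear recurrence of the kind that state space models build on. It is a minimal sketch, not Mamba: the parameters a, b, and c are made-up fixed numbers, whereas Mamba uses learned, input-dependent parameters and a vector-valued state. The point is only that each step needs the previous state and the current input, not the whole history.

```python
def stream_linear_ssm(inputs, a=0.9, b=0.1, c=1.0):
    """Toy recurrence: h_t = a * h_{t-1} + b * x_t, y_t = c * h_t.

    a, b, c are illustrative constants, not values from any real model.
    """
    h = 0.0  # the only memory carried from one step to the next
    for x in inputs:
        h = a * h + b * x
        yield c * h

# The state stays a single number no matter how long the input stream is.
outputs = list(stream_linear_ssm([1.0, 0.0, 0.0]))
```

A transformer, by contrast, attends over all earlier tokens, so the information it keeps around during generation grows with sequence length.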

Regulatory frameworks drive innovation

The EU AI Act, whose first obligations began applying on February 2, 2025, is expected to create global standards, much as GDPR did for privacy. This drives innovation in:

Explainable AI systems that meet transparency requirements

Bias detection and mitigation tools for fair AI deployment

Privacy-preserving techniques like federated learning and differential privacy

Audit and monitoring systems for continuous compliance

Scientific applications accelerate discovery

Following the 2024 Nobel Prize in Chemistry, awarded in part for AlphaFold's protein structure prediction, 2025-2030 is expected to see AI-powered breakthroughs in materials science, drug development, and climate research. Meta's materials science datasets and similar initiatives will accelerate scientific discovery across disciplines.

Quantum-neural hybrid systems, while still in early research, show potential for revolutionary AI architectures beyond current paradigms, likely emerging after 2030.

Industry transformation accelerates

Healthcare will see continued expansion with AI-designed drugs entering human trials, personalized medicine becoming standard, and diagnostic AI achieving clinical deployment globally.

Manufacturing will achieve higher automation levels with 77%+ of manufacturers using AI by 2025, driven by predictive maintenance, quality control, and production optimization delivering measurable ROI.

Automotive will progress toward full autonomy with neural network improvements in perception, decision-making, and safety systems, potentially enabling much wider commercial robotaxi deployment by 2027-2028.

Market consolidation vs democratization

The next 2-3 years will determine whether AI capabilities concentrate among a few tech giants or democratize across industries. Small model trends, open-source frameworks, and cloud accessibility support democratization, while massive training costs and hardware requirements favor consolidation.

NVIDIA faces legitimate competition from Amazon, Broadcom, and AMD chips, particularly for inference tasks. US CHIPS Act spending will begin showing material domestic production results, potentially reshuffling hardware supply chains.

Frequently Asked Questions

What exactly is an artificial neural network in simple terms?

An artificial neural network is a computer system inspired by the human brain that learns to recognize patterns and make decisions from data. Instead of following pre-programmed rules, it adjusts its internal connections through training to solve problems like recognizing images, translating languages, or making predictions.

How are neural networks different from regular computer programs?

Regular programs follow specific instructions written by programmers: "If this, then that." Neural networks learn from examples: show them thousands of cat photos labeled "cat" and they figure out what makes a cat a cat. Traditional programs reach answers by applying explicit rules, while neural networks produce learned estimates, usually with a confidence score attached to each prediction. A trained network is still repeatable in the narrow sense: the same input and the same weights typically give the same scores.
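
The confidence scores mentioned above usually come from a softmax step that turns a network's raw output scores into values between 0 and 1 that sum to 1. The sketch below shows that step on its own; the class labels and raw scores are hypothetical stand-ins for what a trained network would produce.

```python
import math

def softmax(scores):
    """Convert raw scores into positive values that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

labels = ["cat", "dog", "bird"]   # hypothetical classes
raw_scores = [2.0, 1.0, 0.1]      # hypothetical network outputs
confidences = dict(zip(labels, softmax(raw_scores)))
top_label = max(confidences, key=confidences.get)
```

The highest raw score always gets the highest confidence, but a high softmax value is not a guarantee of correctness; it only reflects how the network ranks the options.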

Do neural networks really think like human brains?

Not exactly. While inspired by brains, they work differently. Human brains use efficient bio-electrical pulses with only small portions active at once. Neural networks process information through mathematical calculations, typically with most or all components active for every input. They're more like simplified mathematical models inspired by brain concepts than actual brain replicas.

How much data do neural networks need to work properly?

It depends on the problem complexity. Simple tasks might need thousands of examples, while complex problems like language understanding require millions or billions of examples. For image recognition, tens of thousands of labeled images per category typically produce good results. The quality of data matters more than quantity - clean, representative datasets outperform larger messy ones.

What makes some neural networks better than others?

Several factors determine performance: Architecture design (how neurons connect), training data quality (clean, representative examples), computational resources (processing power and time), hyperparameter tuning (adjusting learning settings), and problem suitability (matching network type to task requirements). The best networks optimize all these factors together.

Can small businesses use neural networks or are they only for big tech companies?

Small businesses can definitely use neural networks today. Cloud platforms like Google Cloud AI, AWS SageMaker, and Microsoft Azure provide pre-built neural network services without requiring massive infrastructure. Open-source frameworks like TensorFlow and PyTorch offer free development tools. Many applications can run on standard business hardware or affordable cloud computing.

What are the biggest mistakes people make with neural networks?

Common mistakes include: Using insufficient or poor-quality training data, choosing wrong network types for problems, overfitting to training data, ignoring interpretability requirements, underestimating computational needs, skipping proper validation testing, and deploying systems without monitoring for performance drift or bias.

How do I know if my problem is suitable for neural networks?

Neural networks excel at pattern recognition, prediction from complex data, classification tasks, image/video analysis, natural language processing, and sequential data analysis. They're less suitable for simple rule-based problems, exact mathematical calculations, small dataset problems, applications requiring perfect accuracy, or situations needing complete interpretability.

What programming skills do I need to work with neural networks?

Python is the most popular language, with libraries like TensorFlow, PyTorch, and Keras. Basic statistics and linear algebra help understand underlying concepts. Data manipulation skills using pandas and numpy are essential. However, many tools now offer no-code or low-code options for common applications, making neural networks accessible to non-programmers.

How long does it take to train a neural network?

Training time varies enormously: Simple networks might train in minutes on a laptop, medium complexity tasks typically require hours to days on standard hardware, while large language models can take weeks or months on specialized supercomputers costing millions of dollars. Most business applications fall in the hours-to-days category.

Are neural networks secure and can they be hacked?

Neural networks face several security challenges: Adversarial attacks use specially crafted inputs to fool models, data poisoning corrupts training datasets, model inversion can extract private information, and backdoor attacks insert malicious behaviors. However, security techniques like robust training, input validation, differential privacy, and continuous monitoring help mitigate these risks.

What happens when neural networks make mistakes?

Neural network errors can range from harmless misclassifications to serious safety issues. Mitigation strategies include: confidence thresholds (only acting on high-confidence predictions), human oversight for critical decisions, ensemble methods (combining multiple models), continuous monitoring for performance drift, and fallback systems when AI fails.
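
The first two strategies, confidence thresholds and human oversight, are often combined into a simple routing rule. The following is a minimal sketch under assumed names: the `decide` function, the 0.9 threshold, and the "auto" / "human_review" labels are all illustrative, and the right threshold depends on how costly a wrong automatic decision is.

```python
def decide(confidences, threshold=0.9):
    """Act on the top prediction only when its confidence clears the threshold.

    `confidences` maps each label to a score between 0 and 1.
    """
    label, score = max(confidences.items(), key=lambda item: item[1])
    if score >= threshold:
        return ("auto", label)
    # Low confidence: hand the case to a person instead of acting on it.
    return ("human_review", label)

# Hypothetical fraud-screening outputs.
clear_case = decide({"fraud": 0.97, "legitimate": 0.03})
unclear_case = decide({"fraud": 0.55, "legitimate": 0.45})
```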

How do neural networks handle new situations they haven't seen before?

This is called generalization - a key challenge for neural networks. Well-trained networks can handle similar but new situations reasonably well. Transfer learning adapts existing networks to new domains, and few-shot learning helps models learn from minimal new examples. Truly novel situations, however, often require retraining or human intervention.

What's the difference between AI, machine learning, and neural networks?

Artificial Intelligence is the broad goal of making machines intelligent. Machine Learning is a subset of AI focused on learning from data rather than explicit programming. Neural Networks are one type of machine learning inspired by brain structures. Other machine learning methods include decision trees, support vector machines, and statistical models.

Can neural networks explain their decisions?

Traditional neural networks are often "black boxes" - difficult to interpret. However, explainable AI techniques like attention visualization, feature importance analysis, and decision pathway tracing help understand neural network decisions. Regulatory requirements are driving development of more interpretable architectures, especially for healthcare and finance applications.

What computer hardware do I need for neural networks?

CPUs can handle small networks and prototyping. GPUs (like the NVIDIA RTX series) dramatically accelerate training for most applications, and cloud computing provides access to powerful hardware without large upfront costs. Specialized AI chips (like Google's TPUs) are optimized for specific neural network operations. Most learning and experimentation, though, can start with standard laptops and free cloud resources.

How do I validate that a neural network is working correctly?

Validation strategies include: holdout testing on data never seen during training, cross-validation for robust performance estimates, A/B testing in real-world deployments, performance metrics appropriate to your problem (accuracy, precision, recall), bias testing across different demographics or conditions, and adversarial testing to find failure modes.
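
As a small worked example of the metrics named above, the sketch below computes accuracy, precision, and recall from holdout labels and predictions. The function name and the sample labels are made up for illustration; in practice the predictions would come from running the trained network on data it never saw during training.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, and recall for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        # Of everything flagged positive, how much really was positive?
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of everything truly positive, how much did the model find?
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

# Hypothetical holdout set: 3 positives and 5 negatives.
holdout_labels = [1, 1, 1, 0, 0, 0, 0, 0]
holdout_predictions = [1, 1, 0, 1, 0, 0, 0, 0]
metrics = classification_metrics(holdout_labels, holdout_predictions)
```

Accuracy alone can mislead when one class is rare, which is why precision and recall are usually reported alongside it.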

What are the costs involved in developing neural network applications?

Costs vary widely: Development time (weeks to months of expert work), computational resources (from hundreds to millions of dollars for training), data collection and labeling (often the largest cost), infrastructure (cloud services or hardware), personnel (AI talent commands high salaries), and ongoing maintenance (monitoring, updates, retraining). Many projects start with proofs of concept using free tools and scale up based on results.

How do neural networks handle privacy and data protection?

Privacy-preserving techniques include: differential privacy (adding mathematical noise to protect individuals), federated learning (training without centralizing data), homomorphic encryption (computing on encrypted data), data anonymization and synthetic data generation. Regulatory frameworks like GDPR and emerging AI legislation require specific privacy protections for AI systems.
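
To show what "adding mathematical noise" can look like, here is a minimal sketch of the Laplace mechanism applied to a counting query, one of the basic building blocks of differential privacy. The function names, the sample ages, and the epsilon value are assumptions for illustration. Training a neural network with differential privacy is more involved (noise is typically added to gradients during training), so treat this as the underlying idea rather than a recipe.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """Return a noisy count of matching records.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)               # seeded only to make the sketch repeatable
ages = [23, 35, 41, 52, 67, 29, 73]  # hypothetical records
noisy_over_50 = private_count(ages, lambda age: age > 50, epsilon=1.0, rng=rng)
```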

What's the future job market for neural network skills?

The field shows explosive growth with roles including: Machine Learning Engineers (building and deploying models), Data Scientists (analyzing data and developing algorithms), AI Researchers (advancing the field), AI Ethics Specialists (ensuring responsible development), Product Managers (overseeing AI products), and domain specialists (applying AI in specific industries). Salaries range from $100K to $500K+ depending on experience and location.

Key Takeaways

Neural networks are pattern-learning systems inspired by brains but operating through mathematical calculations rather than biological processes

Market growth is explosive with neural network software reaching $34.76 billion in 2025, projected to hit $139.86 billion by 2030 at 32.10% CAGR

Real applications deliver measurable results including Google's 97.5% accuracy in diabetic retinopathy detection, Tesla's end-to-end driving systems, and Netflix's $1 billion annual savings from recommendations

Multiple architectures serve different purposes from CNNs for image processing to LSTMs for sequential data, with emerging alternatives like RWKV and state space models challenging transformer dominance

Small models will democratize access in 2025, enabling organizations without massive computational resources to deploy effective AI solutions

Data quality matters more than quantity - clean, representative datasets consistently outperform larger but messy training data

Interpretability becomes critical as regulatory frameworks like the EU AI Act (phasing in from 2025) require explainable AI systems for high-risk applications

Hardware evolution promises efficiency gains with logic-gate networks potentially reducing energy consumption by 100,000x while neuromorphic chips approach brain-like efficiency

Regional adoption patterns vary with North America leading investment ($109B in 2024), Asia-Pacific showing fastest growth (35.7% CAGR), and Europe focusing on responsible AI development

Business success requires a holistic approach combining technical expertise, domain knowledge, ethical considerations, regulatory compliance, and continuous monitoring for optimal outcomes
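
The market figures in the takeaways above can be sanity-checked with the standard compound annual growth rate (CAGR) formula. The short sketch below assumes a five-year span from 2025 to 2030 and uses helper names invented for this example; it shows that the quoted start value, end value, and growth rate are consistent with each other to within rounding.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by a start and end value."""
    return (end_value / start_value) ** (1 / years) - 1

def project(start_value, rate, years):
    """Value after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years

# Figures quoted in the article, in billions of dollars.
implied_rate = cagr(34.76, 139.86, 5)         # about 0.321, i.e. roughly 32.1%
projected_2030 = project(34.76, 0.3210, 5)    # about 139.8
```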

Actionable Next Steps

Start with a clear business problem - Identify specific challenges where pattern recognition or prediction could add value, focusing on areas where you have quality data available

Assess your data readiness - Inventory existing datasets, evaluate their quality and completeness, and determine whether you have sufficient examples for neural network training

Choose appropriate development tools - Begin with user-friendly platforms like Google Cloud AutoML, AWS SageMaker, or Microsoft Azure AI Studio for proof-of-concept projects

Build internal AI literacy - Invest in training for your team through online courses (Coursera, edX, fast.ai), workshops, or hiring AI-experienced personnel

Start small with pilot projects - Select low-risk applications that demonstrate value quickly, using pre-trained models or cloud services to minimize initial complexity

Establish data governance practices - Implement processes for data quality, bias detection, privacy protection, and regulatory compliance from the beginning

Plan for scalability and monitoring - Design systems that can grow with success, including performance tracking, model updating, and failure detection mechanisms

Connect with AI community resources - Join industry groups, attend conferences, and engage with open-source projects to stay current with rapid developments

Evaluate ethical and regulatory requirements - Review applicable regulations (GDPR, upcoming AI Act requirements), establish ethical guidelines, and plan for transparency needs

Consider strategic partnerships - Collaborate with AI consultancies, research institutions, or technology vendors to accelerate development and reduce risk

Glossary

Activation Function: Mathematical function applied to a neuron's weighted input sum to produce its output, introducing the non-linearity that lets networks learn complex patterns. Common types include ReLU, sigmoid, and tanh.

Artificial Intelligence (AI): Broad field focused on creating machines that can perform tasks typically requiring human intelligence.

Backpropagation: Algorithm for training neural networks by calculating how much each connection contributed to errors and adjusting weights accordingly.

Bias: In neural networks, an adjustable offset added to a neuron's weighted input sum, letting the neuron shift its output independently of its inputs. In AI ethics, unfair discrimination in AI system outputs.

Catastrophic Forgetting: Problem where neural networks lose previously learned information when learning new tasks.

Convolutional Neural Network (CNN): Type of neural network specialized for processing grid-like data such as images, using filters to detect features.

Deep Learning: Machine learning using neural networks with many layers (typically 3+ hidden layers) that can learn complex patterns.

Epoch: One complete pass through all training data during neural network training.

Feedforward Network: Neural network where information flows in one direction from input to output without loops.

Gradient Descent: Optimization algorithm that adjusts neural network weights to minimize errors by moving in the direction of steepest error reduction.

Hidden Layer: Intermediate layers in neural networks that process information between input and output layers.

Hyperparameters: Configuration settings for neural networks (like learning rate, number of layers) that are set before training begins.

Long Short-Term Memory (LSTM): Type of recurrent neural network designed to remember important information over long sequences while forgetting irrelevant details.

Machine Learning: Subset of AI focused on systems that learn and improve from data rather than explicit programming.

Neural Network: Computing system inspired by biological brains, consisting of interconnected artificial neurons that process information.

Neuron: Basic processing unit in neural networks that receives inputs, processes them, and produces outputs.

Overfitting: Problem where neural networks memorize training data rather than learning generalizable patterns, leading to poor performance on new data.

Recurrent Neural Network (RNN): Type of neural network with connections that loop back, allowing them to maintain memory of previous inputs.

Reinforcement Learning: Machine learning approach where systems learn through trial and error, receiving rewards or penalties for actions.

Supervised Learning: Machine learning using labeled training data where the correct answers are provided during training.

Transformer: Type of neural network that processes all parts of input simultaneously using attention mechanisms, commonly used for language tasks.

Unsupervised Learning: Machine learning approach that finds patterns in data without labeled examples or correct answers.

Weights: Adjustable parameters in neural networks that determine the strength of connections between neurons.
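
Several of the glossary terms above (neuron, weights, bias, activation function, gradient descent, epoch, supervised learning) can be seen working together in a single artificial neuron. The sketch below is a teaching example under stated assumptions: the function names, learning rate, epoch count, and the choice of the logical OR task are all made up for illustration, and it updates weights after each sample (stochastic gradient descent). Real networks stack many such neurons in layers and use backpropagation to pass the error signal back through them.

```python
import math

def sigmoid(z):
    """Activation function: squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, inputs):
    """One neuron: weighted sum of inputs plus bias, then activation."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

def train_neuron(samples, epochs=2000, learning_rate=0.5):
    """Fit one neuron to labeled samples with per-sample gradient descent."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):  # one epoch = one full pass over the training data
        for inputs, target in samples:
            # With a sigmoid output and cross-entropy loss, the gradient with
            # respect to the weighted sum is simply (output - target).
            error = predict(weights, bias, inputs) - target
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias

# Supervised learning: each example pairs inputs with the correct answer.
OR_GATE = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
trained_weights, trained_bias = train_neuron(OR_GATE)
```

After training, `predict(trained_weights, trained_bias, [0, 1])` gives a value close to 1 and `predict(trained_weights, trained_bias, [0, 0])` a value close to 0. A single neuron can only learn patterns that a straight line can separate, which is one reason practical networks use hidden layers.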
