Understanding Convolutional Neural Networks (CNNs): Complete Guide

- Muiz As-Siddeeqi

- Nov 17, 2025

- 29 min read

Imagine if a computer could look at a photo and instantly recognize your cat, diagnose a medical condition, or guide a self-driving car safely down the highway. This isn't science fiction—it's the reality of Convolutional Neural Networks (CNNs), the breakthrough technology that taught machines to "see" and understand images like humans do.

CNNs have quietly revolutionized everything from your smartphone camera to life-saving medical equipment. They process over 4 billion photos uploaded to social media daily, help radiologists detect cancer with 99% accuracy, and enable autonomous vehicles to navigate complex roads. Behind these remarkable achievements lies an elegant mathematical concept inspired by how our own brains process visual information.


TL;DR - Key Takeaways

CNNs are specialized neural networks that excel at recognizing patterns in images by mimicking human visual processing

They revolutionized AI in 2012 when AlexNet achieved breakthrough performance, cutting the ImageNet top-5 error rate by roughly 40% relative to the next-best entry

Real-world applications include medical diagnosis, autonomous vehicles, manufacturing quality control, and smartphone features

Market value reached $22.93 billion in 2024 and will grow to $330 billion by 2034 with 30.6% annual growth

They work by using mathematical filters to detect edges, textures, and complex patterns through multiple processing layers

Major tech companies invest billions with $100+ billion in AI venture capital funding during 2024 alone

What are Convolutional Neural Networks?

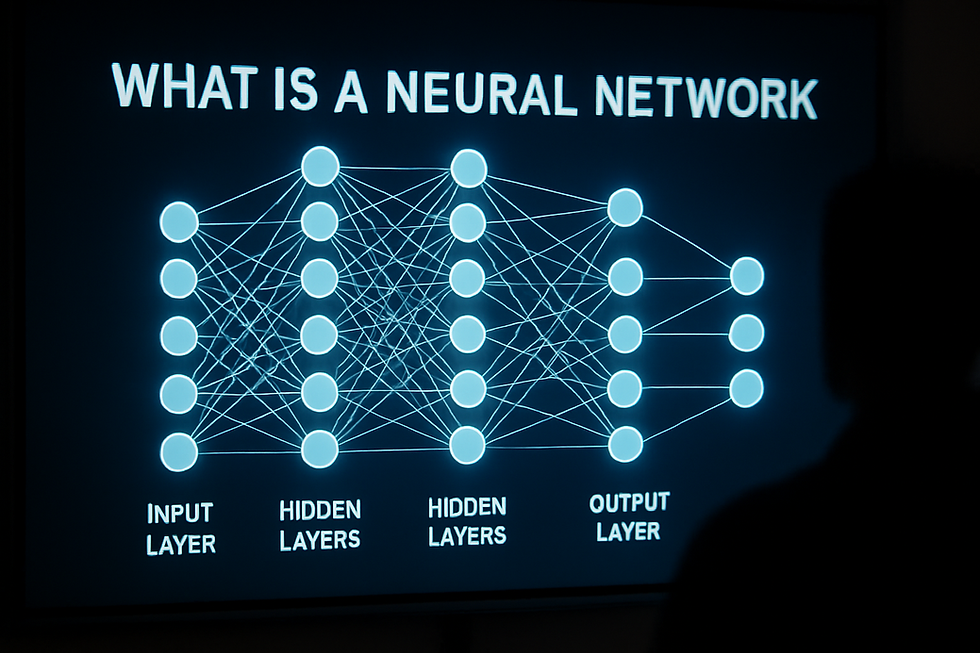

Convolutional Neural Networks (CNNs) are specialized artificial neural networks designed to process visual data like images and videos. They use mathematical operations called convolutions to automatically detect patterns, edges, and features, enabling computers to recognize objects, faces, and scenes with human-level or superior accuracy.


Background & Historical Development

The story of CNNs begins not in Silicon Valley, but in a 1950s neuroscience laboratory where researchers David Hubel and Torsten Wiesel discovered how cats' brains process visual information. Their groundbreaking work revealed that the visual cortex contains specialized cells that respond to specific patterns—simple cells detect edges and lines, while complex cells recognize more sophisticated shapes.

From biological inspiration to digital reality

Kunihiko Fukushima, a Japanese computer scientist, translated these biological insights into an artificial neural network architecture in 1980. His Neocognitron, published in Biological Cybernetics, featured the world's first convolutional and downsampling layers. However, Fukushima's creation was not trained with backpropagation, which limited its practical applications.

The breakthrough came from Yann LeCun at AT&T Bell Labs. In 1989, LeCun successfully applied backpropagation to CNNs for handwritten ZIP code recognition, processing mail for the US Postal Service. This work culminated in LeNet-5 in November 1998, the first commercially successful CNN that achieved 99.05% accuracy on handwritten digit recognition while processing millions of bank checks daily.

The deep learning revolution

For over a decade, CNNs remained a niche technology overshadowed by other machine learning approaches. Everything changed on September 30, 2012, when Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton submitted AlexNet to the ImageNet competition.

AlexNet's performance was revolutionary: it achieved a 15.3% top-5 error rate, compared with 26.2% for the next-best entry. This gap of 10.9 percentage points (a relative error reduction of roughly 40%) wasn't just incremental progress; it represented a paradigm shift from hand-crafted feature extraction to learned representations. Training on two NVIDIA GTX 580 GPUs in Krizhevsky's bedroom, AlexNet proved that deep learning could solve real-world computer vision problems at scale.

Architecture evolution timeline

The success of AlexNet sparked rapid innovation:

2014 brought VGGNet from Oxford University, demonstrating that deeper networks (16-19 layers) using uniform 3×3 filters could achieve superior performance. The same year, Google's GoogLeNet won ImageNet with only 6.67% error rate while using 15 times fewer parameters than AlexNet through innovative Inception modules.

2015 marked another breakthrough with ResNet by Microsoft Research. By introducing skip connections, ResNet solved the vanishing gradient problem and enabled training of networks with 152+ layers. For the first time, an AI system surpassed human performance on ImageNet classification.

Current Landscape with Recent Statistics

Market explosion and investment surge

The CNN and computer vision market has entered a phase of explosive growth. Market valuations for 2024 range from $17.84 billion to $22.93 billion depending on scope definitions, with all major research firms projecting compound annual growth rates exceeding 20%.

Precedence Research projects the AI-enhanced computer vision market will reach $330.42 billion by 2034, representing a 30.58% CAGR. This growth is fueled by unprecedented investment—global AI venture capital funding reached $100+ billion in 2024, up 80% from $55.6 billion in 2023.
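As a quick arithmetic check, the growth rate implied by two endpoint values can be computed directly. The sketch below assumes the $22.93 billion 2024 valuation quoted above is the baseline for the 2034 projection, which the source does not state explicitly; the function name `cagr` is illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1

# Assumption: a 2024 baseline of $22.93B growing to $330.42B by 2034 (10 years).
implied = cagr(22.93, 330.42, 10)
print(f"Implied CAGR: {implied:.2%}")
```

Under that assumption the result lands close to the quoted 30.58% figure.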

Recent architectural innovations

Despite competition from Vision Transformers, CNNs continue evolving with breakthrough architectures in 2024-2025:

RepViT (2024) represents a renaissance in mobile CNNs, achieving >80% ImageNet accuracy with 1.0ms latency on iPhone 12—the first lightweight model to reach this milestone. Large Kernel CNNs have demonstrated superior Weakly Supervised Object Localization performance, automatically resolving traditional activation mapping problems.

Multi-Stream CNNs optimized in February 2025 achieved remarkable robustness metrics: 0.931 noise resistance and 0.950 occlusion sensitivity in medical applications, while delivering 0.969 data scalability efficiency in e-commerce implementations.

Industry adoption acceleration

Enterprise adoption has skyrocketed to 78% of organizations using AI in 2024, according to Stanford's AI Index 2025. Manufacturing leads with 40-48% market share, driven by Industry 4.0 initiatives achieving up to 30% reduction in defect rates. The automotive sector shows the highest growth rate, propelled by autonomous vehicle development and advanced driver assistance systems.

Healthcare AI investment reached $5.6 billion in 2024, with CNN-based medical imaging systems often exceeding human expert accuracy in pattern recognition tasks. One notable example: COVID-19 detection systems now achieve >96.72% F1 scores with >99.33% specificity.

Key Mechanisms: How CNNs Actually Work

Understanding CNNs requires breaking down their core operations into simple, digestible concepts. Think of a CNN as a sophisticated pattern detection machine that processes images through multiple specialized layers.

Convolution: The fundamental operation

Imagine examining a photograph with a special magnifying glass that can only see small 3×3 pixel squares at a time. This magnifying glass contains nine special numbers called a filter or kernel. Here's how it works:

Place the filter on the top-left corner of your image

Multiply each pixel value under the filter by the corresponding filter number

Add all these products together to create one new number

Slide the filter one pixel right and repeat

Continue across the entire image row by row

This process creates a feature map—a new image highlighting specific patterns. Different filters detect different features: some find vertical edges, others horizontal lines, and still others identify textures or corners.
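The sliding-filter steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not an optimized implementation: it assumes a square filter, no padding, and a stride of 1, and the image and filter values are hypothetical.

```python
def conv2d(image, kernel):
    """Slide a square filter over a 2D list (no padding, stride 1).

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is not flipped before being applied.
    """
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    feature_map = []
    for row in range(out_h):              # move down the image row by row
        out_row = []
        for col in range(out_w):          # slide right one pixel at a time
            total = 0
            for i in range(k):
                for j in range(k):
                    # multiply each pixel by the matching filter number, then sum
                    total += image[row + i][col + j] * kernel[i][j]
            out_row.append(total)
        feature_map.append(out_row)
    return feature_map

# Hypothetical 5x5 image: dark on the left (0), bright on the right (9).
image = [[0, 0, 9, 9, 9] for _ in range(5)]

# Hypothetical vertical-edge filter: responds where brightness rises left to right.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

feature_map = conv2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

The output is large where the filter straddles the dark-to-bright boundary and zero over the uniformly bright region, which is what "detecting a vertical edge" means in practice.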

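Mathematical representation: For an input of width n, filter size k, padding p, and stride s, the output width is (n - k + 2p)/s + 1. A 5×5 image with a 3×3 filter, no padding, and a stride of 1 gives (5-3+2×0)/1 + 1 = 3 along each dimension, so the feature map is 3×3.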
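The output-size formula is easy to wrap in a small helper. The function name and argument names below are illustrative, and integer (floor) division is assumed when the stride does not divide evenly.

```python
def conv_output_size(input_size: int, kernel_size: int,
                     padding: int = 0, stride: int = 1) -> int:
    """Output length along one spatial dimension: floor((n - k + 2p) / s) + 1."""
    return (input_size - kernel_size + 2 * padding) // stride + 1

small = conv_output_size(5, 3)                    # 5x5 image, 3x3 filter, no padding
preserved = conv_output_size(224, 3, padding=1)   # one pixel of padding keeps the size
halved = conv_output_size(224, 2, stride=2)       # 2x2 window with stride 2
print(small, preserved, halved)
```

The same formula covers pooling layers, since a pooling window slides over the input in the same way a filter does.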

Pattern hierarchy development

CNNs build understanding through layers of increasing complexity:

First layers detect simple patterns: edges where dark meets light, corners where edges intersect, basic shapes like circles or rectangles

Middle layers combine simple patterns into more complex features: combinations of edges form wheels, windows, or facial features

Deep layers recognize complete objects: faces, cars, animals, or medical anomalies by combining complex features

This hierarchical approach mimics human visual processing—we don't consciously analyze individual edges but automatically recognize complete objects through unconscious pattern assembly.

Pooling: Efficient information compression

After detecting features through convolution, CNNs typically have too much information. Pooling layers solve this by creating smaller, more manageable representations while preserving essential information.

Max pooling is the most common approach:

Divide the feature map into non-overlapping 2×2 squares

Take the largest number from each square

Use this maximum as one pixel in the compressed image

The result: 75% size reduction (from 6×6 to 3×3) while keeping the strongest features

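This process provides a degree of translation invariance: small shifts in where a feature appears leave the pooled output largely unchanged, which helps a cat remain recognizable whether it appears in the top-left or center of an image.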
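The max pooling steps above can be sketched as follows. This is a minimal version that assumes non-overlapping windows (stride equal to the window size); the 6×6 activation values are made up for illustration.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling (stride equals window size) on a 2D list."""
    pooled = []
    for row in range(0, len(feature_map) - size + 1, size):
        pooled_row = []
        for col in range(0, len(feature_map[0]) - size + 1, size):
            # collect the values inside one size x size square
            window = [feature_map[row + i][col + j]
                      for i in range(size) for j in range(size)]
            pooled_row.append(max(window))    # keep only the strongest response
        pooled.append(pooled_row)
    return pooled

# Hypothetical 6x6 feature map with arbitrary activation values.
feature_map = [
    [1, 3, 2, 0, 5, 1],
    [4, 2, 1, 1, 0, 2],
    [0, 1, 7, 3, 2, 2],
    [2, 0, 4, 6, 1, 8],
    [3, 3, 0, 1, 9, 0],
    [1, 5, 2, 2, 4, 4],
]
pooled = max_pool2d(feature_map)
for row in pooled:
    print(row)
```

The 36 input values shrink to 9, which is the 75% reduction described above.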

Step-by-Step CNN Process

Let's trace how a CNN processes a color photograph to identify a cat:

Input preprocessing (Step 1)

Original image: 224×224 color photograph

Digital representation: Three layers (red, green, blue) with pixel values from 0-255

Normalization: Values scaled to 0-1 range for computational stability
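The normalization step amounts to dividing every channel value by 255. A minimal sketch, assuming the image is stored as nested lists of 8-bit RGB tuples (real pipelines typically use array libraries, and many also subtract a per-channel mean):

```python
def normalize_pixels(image):
    """Scale 8-bit channel values (0-255) to floats in the 0-1 range."""
    return [[[channel / 255.0 for channel in pixel] for pixel in row]
            for row in image]

# Hypothetical 1x2 RGB image: one pure red pixel, one mid-gray pixel.
tiny_image = [[(255, 0, 0), (128, 128, 128)]]
normalized = normalize_pixels(tiny_image)
print(normalized)
```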

First convolutional layer (Step 2)

Operation: 64 different 3×3 filters scan the image

Feature detection: Each filter specializes in specific patterns such as vertical edges, horizontal lines, and diagonal textures

Output: 64 feature maps, each 224×224 pixels (assuming one pixel of zero padding so the spatial size is preserved), highlighting different image aspects

Computation: ~87 million multiply-accumulate operations (224×224×3×64×9)
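Multiplying out the factors quoted for this layer gives its cost directly. The sketch below assumes the output stays 224×224 (one pixel of padding) and that each filter carries one bias term; neither detail is stated in the walkthrough.

```python
height, width = 224, 224      # output feature-map size (assumes padding keeps 224x224)
in_channels = 3               # red, green, blue
num_filters = 64
kernel_area = 3 * 3

# One multiply-accumulate per filter weight, per output position.
macs = height * width * in_channels * num_filters * kernel_area

# Learnable parameters: each filter has 3x3x3 weights plus one bias (assumed).
params = num_filters * (kernel_area * in_channels + 1)

print(f"{macs:,} multiply-accumulates, {params:,} parameters")
```

The contrast is the point: tens of millions of operations are driven by fewer than two thousand learnable numbers, because the same filters are reused at every position.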

Activation function (Step 3)

ReLU application: Apply f(x) = max(0,x) to every pixel in all feature maps

Purpose: Introduces non-linearity, allowing the network to learn complex patterns

Effect: Negative values become zero, positive values unchanged

Biological parallel: Neurons either fire or don't; there are no negative firing rates
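ReLU is simple enough to write in one line. A minimal sketch on a small, hypothetical 2×2 feature map:

```python
def relu(feature_map):
    """Apply f(x) = max(0, x) element-wise to a 2D list."""
    return [[max(0, value) for value in row] for row in feature_map]

activations = relu([[-2.0, 0.5], [3.0, -0.1]])
print(activations)
```

Negative entries are clamped to zero while positive entries pass through unchanged.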

First pooling layer (Step 4)

Max pooling operation: 2×2 windows with stride 2

Size reduction: From 224×224 to 112×112 per feature map

Information preservation: Retains strongest features while reducing computational load

Translation invariance: Object recognition becomes position-independent

Deep layer progression (Steps 5-10)

Multiple conv-ReLU-pool cycles: Each iteration detects increasingly complex patterns

Spatial size decreases: 112×112 → 56×56 → 28×28 → 14×14 → 7×7

Feature depth increases: 64 → 128 → 256 → 512 feature maps

Pattern complexity grows: Simple edges → textures → object parts → complete objects
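The shape progression can be traced with a short loop. This sketch assumes a VGG-style schedule in which convolutions preserve spatial size and each 2×2, stride-2 pooling layer halves it; the repeated 512 in the last stage is an assumption made so the final shape matches the 7×7×512 used in the flattening step.

```python
# Assumed VGG-style schedule: padding keeps the size inside each stage,
# and a 2x2, stride-2 max pool halves it at the end of the stage.
stage_depths = [64, 128, 256, 512, 512]   # the final 512 repeat is an assumption

size = 224
shapes = []
for depth in stage_depths:
    size = size // 2                      # effect of the pooling layer
    shapes.append((size, size, depth))

for shape in shapes:
    print(shape)
```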

Fully connected layers (Steps 11-12)

Flattening: Convert 7×7×512 feature maps into single vector of 25,088 numbers

Dense connections: Each neuron connects to all neurons in previous layer

Pattern integration: Combine all detected features for final decision

Dimensionality: Often reduces to 4,096 → 1,000 → number of classes
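The flattened length, and the cost of the dense layer that follows it, are plain multiplication. The sketch assumes the first dense layer has 4,096 units with one bias each, as in the dimensionality note above.

```python
height, width, depth = 7, 7, 512
flattened_length = height * width * depth

# A dense layer needs one weight per (input, output) pair plus one bias per output.
hidden_units = 4096
dense_params = flattened_length * hidden_units + hidden_units

print(f"Flattened vector length: {flattened_length:,}")
print(f"Parameters in the first dense layer: {dense_params:,}")
```

Under these assumptions, this single dense layer holds over 100 million parameters, far more than the convolutional filters that precede it.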

Output generation (Step 13)

Classification layer: Produces probability scores for each possible category

Softmax activation: Ensures all probabilities sum to 100%

Decision: Highest probability determines the prediction

Example output: 87% cat, 8% dog, 3% rabbit, 2% other animals
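Softmax turns raw class scores into probabilities by exponentiating and normalizing. A minimal sketch; the raw scores and label list below are hypothetical and are not meant to reproduce the example percentages exactly.

```python
import math

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores (logits) for four classes.
labels = ["cat", "dog", "rabbit", "other"]
probabilities = softmax([4.0, 1.6, 0.6, 0.2])
prediction = labels[probabilities.index(max(probabilities))]

for label, p in zip(labels, probabilities):
    print(f"{label}: {p:.1%}")
print("Prediction:", prediction)
```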

Real-World Case Studies

Case Study 1: COVID-19 detection at Beijing Youan Hospital

Organization: Beijing Youan Hospital (China's leading infectious disease treatment facility)

Implementation date: 2020, published January 2021

Challenge: Rapid, accurate COVID-19 diagnosis during pandemic peak

Beijing Youan Hospital deployed a Convolutional Neural Network Classification Framework (CNNCF) for automated COVID-19 detection from chest X-rays and CT scans. The system used multi-stage CNN architecture with ResBlock components and Control Gate Blocks, trained on four public datasets plus hospital data.

Quantifiable outcomes: The system achieved >96.72% F1 score with >99.33% specificity and 95.16% sensitivity—performance comparable to seventh-year respiratory residents and senior radiologists. Most remarkably, it outperformed three of five expert physicians in diagnostic accuracy while processing images in real-time.
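For readers unfamiliar with these metrics, the sketch below shows how sensitivity, specificity, and F1 are derived from confusion-matrix counts. The counts are invented for illustration and are not the hospital's data; the function name is also illustrative.

```python
def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Sensitivity, specificity, precision, and F1 from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)          # recall: share of true cases detected
    specificity = tn / (tn + fp)          # share of negative cases correctly cleared
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {"sensitivity": sensitivity, "specificity": specificity,
            "precision": precision, "f1": f1}

# Hypothetical counts for illustration only.
metrics = classification_metrics(tp=90, fp=5, tn=95, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

High specificity means few false alarms, while high sensitivity means few missed cases; F1 balances precision against sensitivity.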

Business impact: Diagnostic time decreased from hours to seconds, crucial during pandemic surges. The system provided automated auxiliary testing when RT-PCR results were uncertain, addressing physician workload during critical periods. This implementation demonstrated CNN's potential to augment healthcare capabilities during global health emergencies.

Case Study 2: NVIDIA self-driving car breakthrough

Organization: NVIDIA Corporation

Implementation date: 2016 (based on 10+ years DARPA research)

Challenge: End-to-end autonomous vehicle control without hand-crafted rules

NVIDIA's DAVE-2 system represented a paradigm shift in autonomous driving: a single CNN learning steering commands directly from camera images. The 9-layer architecture (a normalization layer, 5 convolutional layers, and 3 fully connected layers) contained about 250,000 parameters and 27 million connections, trained on just 72 hours of human driving data.

Quantifiable outcomes: The system achieved 98% autonomous driving time on typical routes with zero interventions during 10-mile highway segments on Garden State Parkway. Real-time processing at 30 frames per second enabled effective performance across highways, local roads, and residential streets in various weather conditions.

Business impact: This breakthrough demonstrated end-to-end deep learning feasibility for autonomous vehicles, reducing dependence on hand-crafted feature extraction and rule-based systems. The approach became foundational for modern autonomous vehicle development across the industry. Data collection spanned Illinois, Michigan, Pennsylvania, New York, and New Jersey, proving generalization across diverse environments.

Case Study 3: Taiwan semiconductor quality control revolution

Organization: Taiwan Semiconductor Industry (90% of global advanced semiconductor production)

Implementation date: 2025

Challenge: Automated defect detection in integrated circuit packaging

Taiwan's semiconductor manufacturers implemented CNN-based defect detection systems using Scanning Acoustic Tomography (SAT) for integrated circuit quality control. The 7-layer CNN architecture employed flood-fill algorithms for image augmentation with optimized batch sizes and amplification rates.

Quantifiable outcomes: The optimal configuration (40× amplification rate with batch size 32) achieved 99% detection accuracy with <0.4% missed detection rate and 0.1% false alarm rate. The system accurately identified secondary glue defects and sealing hole defects (mold voids) while significantly reducing processing time compared to manual inspection.

Business impact: Implementation reduced operator eye strain and fatigue-related turnover while enhancing productivity in semiconductor manufacturing. Automated quality control replaced manual visual inspections, reducing costs through improved defect detection efficiency. A major communications company producing first-responder radios achieved one-month payback periods with significant reductions in quality escapes and inspection time.

Case Study 4: Agricultural yield prediction at Iowa State University

Organization: Iowa State University (Industrial and Manufacturing Systems Engineering & Department of Agronomy)

Implementation date: 2016-2018 validation years (published 2019)

Challenge: Accurate crop yield prediction across the entire US Corn Belt

Researchers developed a CNN-RNN hybrid framework for crop yield prediction covering 1,176 counties for corn and 1,115 counties for soybeans across 13 Corn Belt states. The system combined convolutional networks (W-CNN and S-CNN) with LSTM-enhanced recurrent networks, using weather, soil, and management data from 1980-2017.

Quantifiable outcomes: The system achieved 9% RMSE for corn yield prediction and 8% for soybean prediction relative to average yields—substantially outperforming Random Forest, Deep Fully Connected Neural Networks, and LASSO methods. Predictions remained accurate when tested on previously unseen locations using 5-fold cross-validation.
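"RMSE relative to average yields" means the root-mean-square error divided by the mean observed yield. A minimal sketch with invented numbers; these are not data from the study, and the function name is illustrative.

```python
import math

def relative_rmse(actual, predicted):
    """RMSE divided by the mean of the observed values."""
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    rmse = math.sqrt(sum(squared_errors) / len(squared_errors))
    return rmse / (sum(actual) / len(actual))

# Hypothetical county yields (bushels per acre); not data from the study.
actual = [100.0, 200.0]
predicted = [110.0, 190.0]
error = relative_rmse(actual, predicted)
print(f"Relative RMSE: {error:.1%}")
```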

Business impact: Enhanced agricultural planning and resource allocation decisions across the Corn Belt. The system improved import/export decision-making for global food production while capturing genetic improvements, weather dependencies, and soil spatial relationships. Applications extended to new hybrid performance prediction in untested locations, supporting agricultural innovation.

Case Study 5: Amazon Go cashierless retail transformation

Organization: Amazon

Implementation date: Store launches 2018+

Challenge: Completely automated retail experience without traditional checkout

Amazon's "Just Walk Out" technology combines computer vision, deep learning, and sensor fusion—similar to self-driving car technology—for real-time customer and product tracking. The system uses smartphone app authentication, automatic item tracking, and automated billing without human cashiers.

Quantifiable outcomes: The system achieved approximately 33% reduction in shopping steps by eliminating the checkout process entirely. Real-time tracking and billing provided high-confidence item identification and quantity tracking across multiple store deployments.

Industry-wide retail impact: CNN implementations deliver 15-30% reduction in shrinkage, 20-25% decrease in out-of-stock situations, and 10-20% increases in customer satisfaction scores. Operational efficiency improvements range from 15-25% with ROI timeframes of 12-18 months for typical implementations. The visual search market projects growth to $29.27 billion by 2025 with early adopters achieving 30% potential increases in digital commerce revenue.

Industry Applications Across Sectors

Healthcare and medical imaging leadership

CNNs have revolutionized medical diagnostics with accuracy rates often exceeding human expert performance. Tuberculosis detection systems achieve AUC of 0.99 compared to 0.95 for radiologists, while breast cancer detection reaches 99.17% accuracy on standard datasets. Real-time analysis replaces manual interpretation, addressing healthcare resource constraints globally.

Applications span comprehensive medical specialties: oncology for cancer detection and tumor segmentation, neurology for brain MRI analysis and neurological disorder detection, cardiology for cardiac imaging and heart disease diagnosis, ophthalmology for retinal disease detection, and dermatology for skin cancer identification.

Market impact: Healthcare AI investment reached $5.6 billion in 2024, with CNNs forming the backbone of diagnostic imaging systems worldwide. The technology addresses critical healthcare challenges including physician shortages, diagnostic consistency, and early disease detection.

Automotive and autonomous systems

CNNs remain the dominant technology for spatial information processing in autonomous vehicles. Integration with LiDAR enhances 3D perception capabilities while real-time object detection using YOLO and SSD architectures enables vehicle control, motion planning, and environmental perception.

Autonomous Mobile Robot applications utilize SSD MobileNetv2 FPN Lite 320×320 architectures for real-world vehicle datasets, achieving high accuracy with fast inference suitable for embedded control systems. Applications include object detection for cars, motorcycles, people, and rickshaws under various lighting conditions.

Safety statistics underscore the importance: with 1.19 million annual road traffic deaths globally according to WHO data, computer vision becomes crucial for accident prevention through advanced driver assistance systems and fully autonomous operation.

Manufacturing and Industry 4.0

Manufacturing represents 40-48% of the computer vision market, driven by quality control, defect detection, and assembly line automation applications. Industry 4.0 adoption, predictive maintenance, and automated inspection systems deliver up to 30% reduction in defect rates.

Smart factory implementations are accelerating, with 86% of manufacturing executives believing smart factory solutions will drive competitiveness. CNN applications include surface defect detection, assembly quality control, and automated inspection systems with accuracy achievements of 96.2-99% and ROI timeframes ranging from one month to 18 months.

The semiconductor industry exemplifies this transformation—Taiwan produces 90% of advanced semiconductors using CNN-powered quality control systems that achieve 99% detection accuracy while eliminating operator fatigue and improving productivity.

Agriculture and precision farming

CNN applications in agriculture focus on crop monitoring, yield prediction, disease detection, and automated harvesting. The Iowa State University case study demonstrates 8-9% RMSE in yield prediction across multi-state coverage with multi-year prediction capabilities.

Precision agriculture technologies enable farmers to optimize resource allocation, reduce environmental impact, and maximize crop yields. Applications include aerial crop monitoring using drone imagery, automated irrigation systems responsive to crop stress indicators, and early disease detection preventing widespread crop losses.

Regional applications vary by geography—North American focus on corn and soybean production, Asian emphasis on rice and vegetables, and European concentration on wheat and specialty crops. The technology supports global food security through improved agricultural efficiency and productivity.

Retail and e-commerce transformation

The retail computer vision market will reach $46.96 billion by 2030, driven by cashierless stores, visual search capabilities, inventory automation, and customer behavior analysis. Operational improvements include 15-30% shrinkage reduction and 20-25% out-of-stock reduction with 10-20% customer satisfaction improvements.

Visual search represents a major growth area with Pinterest processing 600+ million visual searches monthly as of 2018. Applications include product identification, style matching, inventory management, and personalized recommendations based on visual preferences rather than text queries.

Innovation areas continue expanding: automated checkout systems eliminate traditional cash registers, inventory robots monitor shelf stock in real-time, and customer behavior analytics optimize store layouts for improved shopping experiences.

Regional Variations and Global Adoption

North America: Market leadership and innovation

North America commands 38-41% of the global market with the US representing $7.75 billion in AI computer vision market size for 2024. Projected growth to $111.75 billion by 2034 reflects a 30.60% CAGR, driven by heavy government and military investment, tech giant presence (Google, Microsoft, NVIDIA, Amazon), strong IoT infrastructure, and advanced autonomous vehicle development.

Key growth drivers include defense and security applications, healthcare system modernization, and manufacturing automation. Silicon Valley's venture capital ecosystem continues attracting global AI talent and investment, with 42% of total US venture capital flowing to AI companies in 2024.

Regional specializations include West Coast focus on consumer technology and autonomous vehicles, East Coast emphasis on financial services and healthcare applications, and Midwest concentration on agricultural and manufacturing automation.

Asia-Pacific: Fastest growing region

Asia-Pacific leads global growth rates across all major research firms, driven by China's significant computer vision investments, India's rapid adoption in manufacturing and healthcare, Japan's advanced robotics integration, and South Korea's AI research and semiconductor leadership.

China dominates in autonomous vehicles, smart cities, and precision agriculture applications. Government AI initiatives and massive infrastructure investments support nationwide technology deployment. India emerges as a major market for healthcare diagnostics and manufacturing automation, leveraging cost advantages and technical expertise.

Regional advantages include large-scale manufacturing capabilities, government support for AI adoption, growing middle-class consumer markets, and significant R&D investments. The region's diverse economic development stages create opportunities for CNN applications across different technology maturity levels.

Europe: Regulatory leadership and automotive focus

European markets show steady growth with focus on automotive applications (Mercedes-Benz, BMW, Volkswagen), manufacturing automation, and ethical AI development. Key markets include Germany, UK, France, and Netherlands with strong emphasis on regulatory compliance and privacy protection.

EU AI ethics regulations shape adoption patterns, emphasizing explainable AI and algorithmic accountability. This regulatory environment creates competitive advantages for European companies in healthcare, financial services, and government applications requiring high ethical standards.

Regional specializations include German strength in automotive and industrial automation, UK leadership in financial technology applications, French focus on aerospace and defense applications, and Nordic emphasis on environmental and sustainability applications.

Advantages and Disadvantages

Key advantages of CNNs

Automatic feature learning eliminates the need for manual feature engineering. Traditional computer vision required experts to handcraft features for specific tasks—edge detectors, texture analyzers, shape descriptors. CNNs learn these features automatically from data, often discovering patterns humans never considered.

Translation invariance enables object recognition regardless of position. A CNN trained to recognize cats will identify them whether they appear in the top-left corner or center of an image. This robustness makes CNNs practical for real-world applications where objects appear in unpredictable locations.

Hierarchical pattern recognition builds understanding from simple to complex. Early layers detect basic patterns like edges and corners, while deeper layers combine these into sophisticated representations. This mirrors human visual processing and enables recognition of complex objects from simple building blocks.

Parameter efficiency through weight sharing dramatically reduces model complexity. Instead of learning separate parameters for each image location, CNNs use the same filters across the entire image. This approach reduces parameters from millions to thousands while improving generalization.
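To make the effect of weight sharing concrete, the sketch below compares the weight count of a convolutional layer with that of a hypothetical fully connected layer producing the same output size. The layer dimensions (224×224×3 input, 64 output channels, 3×3 filters) are assumptions chosen for illustration, and biases are ignored.

```python
in_h, in_w, in_c = 224, 224, 3
out_c = 64

# Convolution: 64 filters of 3x3x3 weights, reused at every image location.
conv_weights = 3 * 3 * in_c * out_c

# Hypothetical fully connected alternative producing the same 224x224x64 output:
# every output value gets its own weight for every input value.
dense_weights = (in_h * in_w * in_c) * (in_h * in_w * out_c)

print(f"Convolutional weights: {conv_weights:,}")
print(f"Dense weights:         {dense_weights:,}")
print(f"Ratio: about {dense_weights // conv_weights:,}x")
```

For realistic image sizes the gap is many orders of magnitude, which is why fully connected layers are impractical for raw pixels.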

Proven commercial success across industries validates CNN effectiveness. From medical diagnosis to autonomous vehicles, CNNs have demonstrated superior performance in diverse real-world applications with measurable business impact and return on investment.

Significant limitations and challenges

Large data requirements pose major implementation barriers. CNNs typically require thousands or millions of labeled examples for effective training. Creating high-quality datasets is expensive and time-consuming, particularly for specialized domains like medical imaging where expert annotation is required.

Computational intensity demands powerful hardware resources. Training state-of-the-art CNNs requires high-end GPUs costing thousands of dollars with substantial electricity consumption. This creates barriers for smaller organizations and developing regions with limited technological infrastructure.

Black box nature limits interpretability and trust. CNNs make decisions through millions of parameters interacting in complex ways, making it nearly impossible to understand why specific predictions were made. This opacity creates challenges for applications requiring explainability, such as healthcare and legal systems.

Overfitting susceptibility occurs when models learn training data too specifically. CNNs may achieve perfect accuracy on training data while performing poorly on new examples. Preventing overfitting requires careful regularization, data augmentation, and validation techniques.

Domain specificity limits generalization across different problem types. A CNN trained for medical imaging won't work for autonomous driving without complete retraining. Transfer learning helps but doesn't eliminate the need for domain-specific adaptation and fine-tuning.

Comparison with alternative approaches

Traditional computer vision using hand-crafted features requires domain expertise but offers interpretability. Performance is typically lower than CNNs but computation requirements are modest. This approach remains viable for applications with limited data or explainability requirements.

Vision Transformers (ViTs) represent the newest alternative, achieving superior performance on some tasks. However, they require even more data than CNNs and lack the inductive biases that make CNNs naturally suited for images. Hybrid approaches combining CNNs and Transformers show promise.

Support Vector Machines and Random Forests offer simpler alternatives with good interpretability. Performance is generally lower than CNNs but training is faster and requires less data. These methods remain valuable for applications where simplicity and explainability outweigh accuracy requirements.

Myths vs Facts About CNNs

Myth 1: CNNs will replace all other machine learning methods

Fact: CNNs excel at image-related tasks but are not universal solutions. Traditional machine learning methods remain superior for many applications involving structured data, small datasets, or interpretability requirements. XGBoost and Random Forest still outperform CNNs for tabular data problems like fraud detection or customer segmentation.

Myth 2: CNNs perfectly mimic human vision

Fact: While inspired by biological vision, CNNs process information very differently from human brains. Humans can recognize objects from single examples while CNNs require thousands of training samples. Human vision integrates context, memory, and reasoning in ways current CNNs cannot replicate.

Myth 3: More data always improves CNN performance

Fact: Data quality matters more than quantity. CNNs can overfit to biased or low-quality datasets, leading to poor real-world performance. Carefully curated smaller datasets often outperform massive datasets with inconsistent labeling or domain mismatches.

Myth 4: CNNs are becoming obsolete due to Vision Transformers

Fact: CNNs remain dominant in production applications due to efficiency advantages and proven reliability. RepViT and other recent CNN architectures continue achieving state-of-the-art results. Hybrid CNN-Transformer approaches often outperform pure Transformer models, suggesting complementary rather than replacement relationships.

Myth 5: CNNs require minimal preprocessing

Fact: Successful CNN deployment requires careful data preprocessing, augmentation, and validation strategies. Image normalization, proper train/validation splits, and domain-specific augmentation significantly impact model performance. Poor preprocessing can render even powerful CNN architectures ineffective.

Myth 6: All CNNs are computationally expensive

Fact: MobileNet, EfficientNet, and other lightweight architectures enable CNN deployment on smartphones and embedded devices. Model compression, quantization, and pruning techniques can reduce computational requirements by 10-100x while maintaining accuracy.
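As one illustration of these techniques, the sketch below shows symmetric 8-bit quantization of a handful of weights. It is a simplified scheme with hypothetical weight values and assumes at least one weight is non-zero; production toolchains use more elaborate calibration.

```python
def quantize_int8(weights):
    """Symmetric linear quantization of floats to integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Map the stored integers back to approximate float values."""
    return [q * scale for q in quantized]

# Hypothetical filter weights.
weights = [0.42, -1.27, 0.05, 0.9]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(quantized)
print(f"Largest rounding error: {max_error:.6f}")
```

Storing 8-bit integers instead of 32-bit floats cuts weight storage by 4x on its own; larger savings typically come from combining quantization with pruning and smaller architectures.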

Comparison Tables and Benchmarks

CNN Architecture Comparison

| Architecture | Year | Layers | Parameters | ImageNet Top-5 Error | Key Innovation |
| --- | --- | --- | --- | --- | --- |
| LeNet-5 | 1998 | 7 | 60K | N/A (MNIST only) | First successful CNN |
| AlexNet | 2012 | 8 | 60M | 15.3% | GPU training, ReLU, Dropout |
| VGGNet-19 | 2014 | 19 | 144M | 7.3% | Uniform 3×3 architecture |
| GoogLeNet | 2014 | 22 | 4M | 6.67% | Inception modules |
| ResNet-152 | 2015 | 152 | 60M | 3.57% | Skip connections |
| EfficientNet-B7 | 2019 | N/A | 66M | 2.9% | Compound scaling |
| RepViT | 2024 | N/A | Varies by variant | N/A (>80% top-1 accuracy) | Mobile optimization |
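The parameter counts in the table follow directly from layer shapes. The sketch below is a minimal illustration, not a full model definition: the helper names are hypothetical, and the two layer shapes are those of a VGG-style network (a 3×3 convolution on an RGB input, and the first fully connected layer fed by a 7×7×512 feature map).

```python
def conv_params(kernel_h, kernel_w, in_channels, out_channels, bias=True):
    """Weights in a conv layer: one kernel_h x kernel_w x in_channels filter per output channel."""
    weights = kernel_h * kernel_w * in_channels * out_channels
    return weights + (out_channels if bias else 0)


def dense_params(in_features, out_features, bias=True):
    """Weights in a fully connected layer: every input connects to every output."""
    return in_features * out_features + (out_features if bias else 0)


# First 3x3 convolution of a VGG-style network: RGB input, 64 filters.
first_conv = conv_params(3, 3, 3, 64)

# First fully connected layer: flattened 7x7x512 feature map into 4096 units.
first_dense = dense_params(7 * 7 * 512, 4096)

print(first_conv)   # 1792
print(first_dense)  # 102764544
```

The comparison shows why VGG-style networks are so large: a single fully connected layer holds over 100 million parameters, while an early convolutional layer holds under two thousand because its filters are shared across every image position.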

Performance by Industry Application

| Industry | Typical Accuracy | Processing Speed | ROI Timeframe | Primary Benefit |
| --- | --- | --- | --- | --- |
| Healthcare | 95-99% | Real-time | 12-24 months | Diagnostic accuracy |
| Manufacturing | 96-99% | Real-time | 1-18 months | Quality control |
| Automotive | 98% autonomous | 30 FPS | 2-5 years | Safety improvement |
| Agriculture | 91-92% prediction | Daily/seasonal | 1-3 years | Yield optimization |
| Retail | 85-99% | Real-time | 12-18 months | Operational efficiency |

Regional Market Size Comparison (2024)

| Region | Market Size (USD) | Growth Rate (CAGR) | Key Drivers |
| --- | --- | --- | --- |
| North America | $7.75B | 30.6% | Tech giants, defense spending |
| Asia-Pacific | Variable | Highest | Manufacturing, government initiatives |
| Europe | Estimated $4-5B | 20-25% | Automotive, regulations |
| Rest of World | Estimated $2-3B | 25-35% | Emerging market adoption |

Hardware Requirements by Use Case

| Use Case | GPU Memory | CPU Cores | System RAM | Training Time | Inference Speed |
| --- | --- | --- | --- | --- | --- |
| Research/Development | 16GB+ | 8+ | 32GB+ | Days-weeks | Variable |
| Production Training | 8-16GB | 8+ | 32GB+ | Hours-days | N/A |
| Edge Deployment | Optional | 4 | 4-8GB | N/A | <100ms |
| Mobile Apps | N/A | Variable | 2-4GB | N/A | <50ms |
| Enterprise Inference | 4-8GB | 4-8 | 16GB+ | N/A | <10ms |

Technical Implementation Details

Modern development frameworks

TensorFlow/Keras dominance in production stems from comprehensive deployment tools including TF Serving for model hosting, TF Lite for mobile deployment, and TensorBoard for visualization. Market share analysis shows TensorFlow leading production deployments at 55% while PyTorch dominates research applications at 65%.

PyTorch's research popularity derives from dynamic computation graphs enabling easier debugging and experimentation. TorchScript capabilities allow conversion to static graphs for production deployment, bridging the research-production gap.

Other options include JAX for high-performance computing and MXNet for distributed training, while ONNX provides an open interchange format (rather than a training framework) for model interoperability across platforms.

Hardware acceleration technologies

GPU architectures have evolved specifically for CNN workloads. NVIDIA's Tensor Cores provide up to 10x performance improvements for mixed-precision training. Cards in the RTX 2070/2080 Ti class are a practical minimum for serious development, while A100 and H100 systems enable large-scale training.

Specialized AI chips are emerging for inference deployment. Google's TPUs optimize TensorFlow workloads, Intel's Neural Compute Sticks enable edge deployment, and Qualcomm's AI Engine powers mobile applications.

Memory optimization techniques include gradient checkpointing to reduce memory usage during training, mixed-precision training using 16-bit floats, and model parallelism for extremely large networks.
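One practical consequence of 16-bit floats is that very small gradients can round to zero. The sketch below illustrates loss scaling, a technique commonly paired with mixed-precision training; it is an assumption added here for illustration, not something the text above prescribes. The gradient value and the scale factor of 1024 are arbitrary, and `to_fp16` is a hypothetical helper that uses only the standard library.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


tiny_gradient = 1e-8   # illustrative value below the fp16 representable range
loss_scale = 1024.0    # illustrative scale factor

# Stored directly in 16 bits, the gradient underflows to zero.
unscaled = to_fp16(tiny_gradient)

# Scaling before the 16-bit cast keeps it representable.
scaled = to_fp16(tiny_gradient * loss_scale)

# Dividing by the scale in higher precision recovers an approximation.
recovered = scaled / loss_scale

print(unscaled, scaled, recovered)
```

The recovered value is close to the original gradient, whereas the unscaled 16-bit copy loses it entirely.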

Training optimization strategies

Data augmentation significantly improves generalization through rotation, scaling, cropping, and color adjustment. Modern augmentation techniques include Mixup, CutMix, and AutoAugment for automatic augmentation strategy discovery.
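As a rough illustration of Mixup, the sketch below blends two training examples and their labels with a single random weight. It assumes flattened pixel lists and one-hot labels; the function name, the toy data, and `alpha=0.2` are illustrative choices rather than recommended settings.

```python
import random


def mixup(x1, y1, x2, y2, alpha=0.2, rng=random):
    """Blend two examples and their one-hot labels with one Beta-distributed weight."""
    lam = rng.betavariate(alpha, alpha)  # mixing weight in [0, 1]
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y1, y2)]
    return x, y, lam


rng = random.Random(0)  # seeded for repeatability

# Toy "images" (4 pixels each) with one-hot labels for two classes.
cat_pixels, cat_label = [0.0, 0.2, 0.4, 0.6], [1.0, 0.0]
dog_pixels, dog_label = [1.0, 0.8, 0.6, 0.4], [0.0, 1.0]

mixed_pixels, mixed_label, lam = mixup(
    cat_pixels, cat_label, dog_pixels, dog_label, rng=rng
)
print(lam, mixed_pixels, mixed_label)
```

The mixed label is a soft target: its entries still sum to 1, and the network is trained to predict both classes in proportion to the blend.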

Regularization prevents overfitting through dropout (randomly setting neurons to zero), batch normalization (normalizing layer inputs), and weight decay (penalizing large weights). Batch normalization is now standard in most CNN architectures.
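A minimal sketch of dropout, assuming the common "inverted" variant in which surviving activations are rescaled during training so that nothing needs to change at inference time. The function name and the 50% rate are illustrative.

```python
import random


def dropout(activations, rate, training, rng):
    """Inverted dropout: zero each activation with probability `rate` while training."""
    if not training or rate == 0.0:
        return list(activations)  # inference: pass values through unchanged
    keep = 1.0 - rate
    # Survivors are scaled by 1/keep so the expected activation stays the same.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(42)
acts = [1.0] * 10000

train_out = dropout(acts, rate=0.5, training=True, rng=rng)
eval_out = dropout(acts, rate=0.5, training=False, rng=rng)

dropped_fraction = train_out.count(0.0) / len(train_out)
mean_train = sum(train_out) / len(train_out)
print(dropped_fraction, mean_train)
```

Roughly half of the activations are zeroed during training, yet the mean stays near 1.0 because the survivors are doubled.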

Optimization algorithms have evolved from basic SGD to Adam, AdamW, and specialized optimizers like LAMB for large-batch training. Learning rate scheduling through cosine annealing, warm restarts, and adaptive methods improves convergence.
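Cosine annealing can be written as a one-line formula. The sketch below assumes a single decay cycle without warm restarts; the function name and the learning rate bounds are illustrative.

```python
import math


def cosine_annealing(step, total_steps, lr_max, lr_min=0.0):
    """Decay the learning rate from lr_max to lr_min along half a cosine wave."""
    progress = step / total_steps  # 0.0 at the start, 1.0 at the end
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


schedule = [cosine_annealing(s, 100, lr_max=0.1, lr_min=0.001) for s in range(101)]
print(schedule[0], schedule[50], schedule[100])
```

The rate falls slowly at first, fastest around the midpoint, and slowly again near the end, which lets training settle gently as it approaches the final steps.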

Deployment and production considerations

Model compression techniques reduce deployment size and computational requirements. Quantization converts 32-bit weights to 8-bit integers, achieving 4x size reduction with minimal accuracy loss. Pruning removes unimportant connections, potentially reducing parameters by 90%.
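The 4x figure comes from storing each weight in 1 byte instead of 4. The sketch below assumes the simplest scheme, symmetric per-tensor quantization with a single scale factor; production toolchains typically add calibration and per-channel scales. The function names and weight values are illustrative.

```python
import array


def quantize_int8(weights):
    """Map floats to int8 using one scale so the largest magnitude lands on 127."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return array.array("b", q), scale  # "b" = signed 8-bit integers


def dequantize(q, scale):
    """Recover approximate float values from the int8 codes."""
    return [v * scale for v in q]


# "f" = 32-bit floats, standing in for a layer's original weights.
weights = array.array("f", [0.82, -0.41, 0.05, -1.27, 0.33, 0.0, 0.9, -0.66])

q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

size_ratio = (weights.itemsize * len(weights)) / (q_weights.itemsize * len(q_weights))
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(size_ratio)  # 4.0
print(max_error, scale / 2)
```

The rounding error for any weight is bounded by half the scale, which is why accuracy usually degrades only slightly when weights are well distributed.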

Edge deployment optimization uses specialized formats like ONNX, TensorRT, and Core ML for different hardware platforms. Mobile deployment requires careful model architecture selection with MobileNet and EfficientNet families specifically designed for resource constraints.

Production monitoring tracks model performance degradation over time through data drift detection, accuracy monitoring, and automated retraining pipelines. MLOps practices ensure reliable deployment and maintenance of CNN systems at scale.
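One simple way to flag data drift is to compare a histogram of some input statistic at training time against the same histogram in production. The sketch below uses the Population Stability Index, one metric among many; the choice of statistic (mean image brightness), the bin counts, and the 0.1 and 0.25 thresholds are illustrative conventions rather than values taken from this guide.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between two histograms with matching bins."""
    e_total = sum(expected_counts)
    a_total = sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_frac = max(e / e_total, eps)  # eps avoids log(0) on empty bins
        a_frac = max(a / a_total, eps)
        score += (a_frac - e_frac) * math.log(a_frac / e_frac)
    return score


# Hypothetical histograms of mean image brightness, five bins from dark to bright.
training_hist = [50, 200, 500, 200, 50]
stable_hist = [48, 205, 495, 202, 50]    # production data resembling training
shifted_hist = [5, 50, 300, 400, 245]    # production data that is much brighter

stable_score = psi(training_hist, stable_hist)
shifted_score = psi(training_hist, shifted_hist)
print(stable_score, shifted_score)
```

A common rule of thumb treats scores under 0.1 as stable and scores above 0.25 as a signal to investigate or retrain.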

Future Outlook and Predictions

Market growth projections with specific timelines

2025-2027 acceleration phase expects continued double-digit growth across all segments with computer vision markets reaching $20.31-30.22 billion in 2025. Asia-Pacific will maintain fastest growth rates while manufacturing and automotive lead implementation. Edge computing integration with 5G networks will enable new real-time applications.

2030 maturity milestone projects $45.91-63.48 billion market size with $13 trillion additional global economic activity from AI. Up to 30% of current work hours may be automated, requiring massive workforce retraining initiatives. Regulatory frameworks will mature, creating standardized approaches to AI deployment.

2032-2034 market expansion could reach $190.9-330.42 billion depending on technological breakthroughs and adoption rates. Full integration of AGI capabilities is expected during this period with complete automation of visual inspection processes across manufacturing.

2040 transformation endpoint anticipates $15.5-22.9 trillion annual AI economic value with computer vision ubiquitous across all industries. Fully autonomous systems and advanced robotics integration will reshape entire economic sectors.
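Growth projections like these can be sanity-checked with the compound annual growth rate formula. The sketch below applies it to the $22.93 billion (2024) and $330.42 billion (2034) figures quoted elsewhere in this guide; the function name is illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by a start value, end value, and span."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


rate = cagr(22.93, 330.42, 2034 - 2024)
print(f"{rate:.1%}")  # 30.6%
```

The result matches the 30.6% annual growth rate cited for the market, so those three figures are at least internally consistent.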

Technological evolution trends

Hybrid CNN-Transformer architectures are emerging as the dominant approach, combining CNN's local feature extraction with Transformer's global attention mechanisms. RepViT and similar architectures demonstrate this convergence, achieving superior efficiency and performance.

Attention mechanisms integration will enhance CNN interpretability and performance. Self-attention layers can identify which image regions most influence decisions, addressing the black-box criticism while maintaining computational efficiency.

Automated Neural Architecture Search (NAS) will optimize CNN designs for specific applications and hardware constraints. AI-designed architectures already outperform human-designed networks in some domains, suggesting future CNNs may be entirely machine-created.

Biological inspiration renewal through cortex-inspired architectures, spiking neural networks, and neuromorphic computing could create more brain-like artificial vision systems with dramatically improved energy efficiency.

Application domain expansions

Environmental monitoring applications will leverage satellite imagery and drone-collected data for climate change tracking, deforestation monitoring, and natural disaster prediction. CNN accuracy in satellite image analysis already exceeds human performance for many tasks.

Space exploration and astronomy present new frontiers for CNN applications. Automated celestial object detection, exoplanet discovery, and space debris tracking require computer vision capabilities operating in extreme environments with minimal human oversight.

Scientific research acceleration will come through automated image analysis in microscopy, particle physics, and materials science. CNNs can identify patterns in scientific data invisible to human researchers, potentially accelerating discovery processes across multiple disciplines.

Creative industries transformation will include automated video editing, content generation, and artistic style transfer. CNNs enable new forms of creative expression while automating routine production tasks in entertainment and media industries.

Societal and economic implications

Workforce displacement and creation will require comprehensive retraining programs. 40% of workers expect significant reskilling according to McKinsey surveys, with 70% of AI investment focusing on people and processes rather than technology alone.

Privacy and surveillance concerns will intensify as CNN capabilities improve. Facial recognition accuracy approaching 99% enables unprecedented surveillance capabilities, requiring careful balance between security benefits and privacy rights.

Digital divide expansion may occur between organizations with CNN capabilities and those without. Democratizing AI access through cloud services and pre-trained models becomes crucial for equitable technological benefit distribution.

Regulatory framework development will shape CNN deployment across industries. EU AI Act and similar regulations will establish standards for high-risk applications while promoting innovation in beneficial use cases.

Frequently Asked Questions

What exactly are Convolutional Neural Networks and how do they work?

Convolutional Neural Networks are specialized artificial neural networks designed to process visual data like images and videos. They work by using mathematical operations called convolutions to automatically detect patterns, edges, and features in images. Think of them as sophisticated pattern recognition systems that slide small filters across images to identify important visual elements, then combine these elements to make decisions about what they're seeing.
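The sliding-filter idea can be sketched in a few lines of plain Python. This is a minimal illustration with no padding and a stride of 1, using a hand-written vertical edge filter; in a real CNN the filter values are learned during training. The function and variable names are illustrative.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products at each position.

    This is the cross-correlation that deep learning libraries call convolution.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A 5x6 image: dark on the left half, bright on the right half.
image = [[0, 0, 0, 9, 9, 9] for _ in range(5)]

# Responds where brightness increases from left to right.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = conv2d(image, vertical_edge)
for row in feature_map:
    print(row)  # [0, 27, 27, 0] on each of the three rows
```

The output is zero over the flat regions and large where the filter straddles the dark-to-bright boundary, which is exactly what "detecting an edge" means here.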

Why are CNNs better than traditional computer vision methods?

CNNs automatically learn features from data instead of requiring human experts to hand-craft feature detectors. Traditional methods needed programmers to manually design edge detectors, shape recognizers, and texture analyzers for each specific task. CNNs discover these patterns themselves during training, often finding features humans never considered. This automatic learning makes CNNs more accurate and adaptable to new problems.

How much data do CNNs need to work effectively?

Data requirements vary significantly by application complexity. Simple tasks like digit recognition might work with thousands of examples, while complex applications like medical diagnosis typically require hundreds of thousands or millions of labeled images. However, transfer learning allows CNNs trained on large datasets (like ImageNet) to adapt to new tasks with much smaller datasets—sometimes just hundreds of examples.

What hardware is required to train and run CNNs?

Training requirements are substantial—typically 8-16GB GPU memory, 8+ CPU cores, and 32GB+ system RAM for serious development. Popular choices include NVIDIA RTX 2070/2080 Ti or newer graphics cards. However, inference (using trained models) has much lower requirements. Smartphones can run optimized CNN models, and even Raspberry Pi computers can handle simple CNN applications.

Can CNNs work on smartphones and edge devices?

Yes! Specialized architectures like MobileNet and EfficientNet are designed specifically for mobile deployment. These models achieve high accuracy while using minimal computational resources. Technologies like TensorFlow Lite and PyTorch Mobile optimize CNNs for smartphones, enabling real-time applications like camera filters, object recognition, and augmented reality features.

How accurate are CNNs compared to human experts?

CNN accuracy varies by application but often matches or exceeds human performance. In medical imaging, CNNs achieve 95-99% accuracy rates, sometimes outperforming radiologists. For general image classification on ImageNet, leading CNNs reach top-5 error rates below 4% (ResNet-152 reported 3.57%), compared with an estimated human top-5 error of roughly 5%. However, humans excel in tasks requiring context, reasoning, and learning from few examples.

What industries benefit most from CNN technology?

Healthcare leads in high-impact applications with medical imaging diagnosis. Manufacturing uses CNNs extensively for quality control and defect detection. The automotive industry relies on CNNs for autonomous driving and safety systems. Retail implements visual search and inventory management. Agriculture applies CNNs for crop monitoring and yield prediction. Security and surveillance use CNNs for threat detection and identification.

Are CNNs being replaced by newer AI technologies like Vision Transformers?

CNNs remain dominant in production applications due to efficiency advantages and proven reliability. While Vision Transformers show promise for some tasks, they typically require more data and computational resources than CNNs. Recent developments like RepViT demonstrate CNNs continuing to evolve and improve. Many state-of-the-art systems use hybrid approaches combining both technologies rather than replacing one with the other.

How long does it take to train a CNN model?

Training time depends on model complexity, dataset size, and hardware capabilities. Simple models on small datasets might train in hours on modern GPUs. Complex models on large datasets can require days or weeks. However, transfer learning significantly reduces training time—adapting pre-trained models to new tasks often takes just hours instead of days. Most practical applications use transfer learning to minimize training requirements.

What are the main challenges in implementing CNNs in business?

Major challenges include data collection and labeling costs, hardware infrastructure requirements, talent shortage in AI expertise, and ensuring model reliability in production. Many organizations struggle with data quality—CNNs require large amounts of high-quality, properly labeled training data. Integration with existing systems and maintaining model performance over time also present ongoing challenges requiring specialized expertise.

How do CNNs handle different image sizes and formats?

Modern CNNs handle variable input sizes through several techniques. Images are typically resized to standard dimensions during preprocessing (like 224x224 pixels). Some architectures use Global Average Pooling to accept variable sizes. Data augmentation techniques help models generalize across different formats, scales, and orientations. Preprocessing pipelines automatically handle format conversion, normalization, and standardization.
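Global Average Pooling handles variable sizes because it collapses each channel to a single number regardless of height and width. The sketch below assumes feature maps stored as nested lists, one 2D grid per channel; the function name and the toy values are illustrative.

```python
def global_average_pool(feature_maps):
    """Reduce each channel (a 2D grid) to its mean, giving one value per channel."""
    return [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_maps
    ]


# Two channels from a small input (2x2 feature maps).
small = [
    [[1, 2], [3, 4]],
    [[0, 0], [0, 8]],
]

# Two channels from a larger input (3x5 feature maps).
large = [
    [[1] * 5 for _ in range(3)],
    [[2] * 5 for _ in range(3)],
]

print(global_average_pool(small))  # [2.5, 2.0]
print(global_average_pool(large))  # [1.0, 2.0]
```

Both inputs yield a vector of length 2, so the classifier layers that follow see the same shape no matter how large the original image was.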

Can CNNs work with video data or just static images?

CNNs excel at video analysis through several approaches. 3D CNNs process video as sequences of frames with temporal convolutions. Two-stream networks analyze spatial and temporal information separately. CNN-RNN hybrids use CNNs for frame analysis and recurrent networks for temporal modeling. Applications include action recognition, video summarization, object tracking, and anomaly detection in surveillance systems.

What makes some CNN architectures better than others?

Architecture effectiveness depends on the specific application requirements. ResNet's skip connections enable very deep networks, making it excellent for complex recognition tasks. MobileNet prioritizes efficiency for mobile deployment. VGG's uniform structure makes it easy to understand and modify. EfficientNet optimizes the balance between accuracy and computational cost. The "best" architecture depends on whether you prioritize accuracy, speed, memory usage, or interpretability.

How do CNNs detect objects in images versus classifying entire images?

Object detection requires more sophisticated architectures than simple classification. Classification CNNs output single labels for entire images. Object detection networks like YOLO and R-CNN identify multiple objects and their locations within images. These systems use region proposal networks, anchor boxes, and non-maximum suppression to find and classify multiple objects simultaneously while providing bounding box coordinates for each detection.
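Non-maximum suppression is the easiest of these steps to show in isolation. The sketch below assumes boxes given as `(x1, y1, x2, y2)` corner coordinates and uses the greedy variant: keep the highest-scoring box, discard any box that overlaps it too much, and repeat. The function names, box values, and 0.5 threshold are illustrative.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return indices of boxes kept after greedy non-maximum suppression."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not heavily overlap an already kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [
    (10, 10, 50, 50),      # strong detection
    (12, 12, 52, 52),      # near-duplicate of the first box
    (100, 100, 140, 140),  # separate object
]
scores = [0.9, 0.8, 0.7]

kept = nms(boxes, scores)
print(kept)  # [0, 2]
```

The near-duplicate box is removed because its overlap with the top-scoring box is about 0.82, while the distant box survives with no overlap at all.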

What is transfer learning and why is it important for CNNs?

Transfer learning allows CNNs trained on large datasets (like the ImageNet ILSVRC subset, with about 1.2 million training images) to adapt to new tasks with much smaller datasets. Pre-trained networks have already learned general visual features like edges, textures, and shapes. Fine-tuning these networks for new tasks requires less data, computational resources, and training time. This makes CNN technology accessible to organizations without massive datasets or computing infrastructure.

How do CNNs handle bias and fairness concerns?

CNNs can perpetuate or amplify biases present in training data. If training datasets under-represent certain demographics, the resulting models may perform poorly for those groups. Addressing bias requires diverse, representative training data, careful evaluation across demographic groups, and ongoing monitoring of model performance. Techniques like data augmentation, adversarial training, and fairness constraints help mitigate bias, but human oversight remains essential.

What are the energy and environmental impacts of CNN training?

Training large CNN models requires significant computational resources and energy consumption. High-end GPU systems can consume 1000+ watts during training, with carbon footprints comparable to automobile emissions over training periods. However, once trained, inference requires much less energy—mobile CNNs operate on battery power. Techniques like model compression, efficient architectures, and renewable energy for data centers help reduce environmental impact.

How do CNNs compare to the human brain's visual processing?

While inspired by biological vision, CNNs process information very differently from human brains. Human vision integrates massive parallel processing, contextual understanding, memory, and reasoning. Humans can recognize objects from single examples while CNNs require thousands of training samples. However, CNNs can process visual information much faster than humans and don't suffer from fatigue or attention limitations. Both systems build hierarchical representations, but through very different mechanisms.

What careers and job opportunities exist in CNN and computer vision?

Career opportunities span multiple roles: Machine Learning Engineers design and implement CNN systems, Data Scientists analyze performance and optimize models, Computer Vision Researchers develop new architectures and techniques, AI Product Managers oversee CNN-based product development, and MLOps Engineers handle production deployment and maintenance. Related fields include robotics, autonomous vehicles, medical imaging, and digital media. Strong programming skills, mathematical background, and domain expertise are typically required.

How can someone get started learning about CNNs?

Begin with online courses covering machine learning fundamentals and computer vision. Popular platforms include Coursera's Deep Learning Specialization, edX MIT courses, and YouTube tutorials. Hands-on practice using frameworks like TensorFlow or PyTorch with datasets like CIFAR-10 or MNIST provides practical experience. Projects might include image classification, object detection, or style transfer. Join communities like Kaggle for competitions and practical experience. Mathematics background in linear algebra and calculus helps but isn't strictly required for applied work.

Key Takeaways

CNNs revolutionized computer vision by automatically learning visual features from data, eliminating the need for hand-crafted feature extraction and achieving human-level or superior accuracy across numerous applications

Market growth is explosive with valuations reaching $22.93 billion in 2024 and projected growth to $330.42 billion by 2034, driven by $100+ billion in AI venture capital investment and widespread industry adoption

Real-world applications deliver measurable business value across healthcare (95-99% diagnostic accuracy), manufacturing (96-99% defect detection), automotive (98% autonomous performance), agriculture (8-9% prediction error), and retail (15-30% operational improvements)

Technical innovation continues accelerating with breakthrough architectures like RepViT achieving >80% ImageNet accuracy at 1.0ms mobile latency, large kernel CNNs solving traditional computer vision limitations, and hybrid CNN-Transformer approaches optimizing performance

Geographic adoption patterns show clear leadership with North America commanding 38-41% market share, Asia-Pacific achieving fastest growth rates, and regional specializations emerging based on economic strengths and technological infrastructure

Implementation barriers are decreasing through transfer learning reducing data requirements, mobile-optimized architectures enabling smartphone deployment, cloud services democratizing access, and automated tools simplifying development workflows

Future trajectory indicates continued dominance despite Vision Transformer competition, with CNNs evolving through attention integration, automated architecture search, and specialized domain applications while maintaining efficiency advantages

Economic impact extends far beyond technology sectors with McKinsey projecting $13 trillion additional global GDP by 2030, workforce transformation requiring 40% of workers to reskill, and entire industries restructuring around AI capabilities

Success factors for organizations include focusing on high-quality data over quantity, choosing appropriate architectures for specific requirements, implementing proper MLOps practices, and balancing innovation with ethical considerations and bias mitigation

The technology remains rapidly evolving with breakthrough applications emerging in environmental monitoring, space exploration, scientific research, and creative industries while addressing societal challenges in privacy, fairness, and digital accessibility

Actionable Next Steps

Assess your organization's CNN readiness by evaluating current data assets, identifying image/video analysis opportunities, and determining technical infrastructure requirements for potential pilot projects

Start with transfer learning experiments using pre-trained models on platforms like Google Colab, Kaggle, or AWS SageMaker to test CNN capabilities on your specific data without major infrastructure investment

Identify high-impact use cases within your industry by reviewing the documented case studies and performance benchmarks, focusing on applications with clear ROI metrics and measurable business outcomes

Build technical capabilities through online courses (Coursera Deep Learning Specialization, Fast.ai), hands-on projects with open datasets, and participation in computer vision competitions on Kaggle or similar platforms

Evaluate vendor solutions for your specific needs, comparing build-versus-buy decisions based on your organization's technical expertise, timeline requirements, and budget constraints

Develop data strategy by cataloging existing image/video assets, establishing data quality standards, creating labeling workflows, and implementing data governance practices for AI initiatives

Create pilot project roadmap starting with low-risk, high-value applications, defining success metrics, establishing timelines, and planning for scale-up based on initial results

Establish ethical AI practices by implementing bias detection procedures, ensuring diverse representation in training data, creating model interpretability requirements, and developing ongoing monitoring protocols

Plan workforce development through employee training programs, hiring strategies for AI talent, partnership opportunities with universities or consultants, and change management processes for AI adoption

Monitor industry developments by following key research conferences (CVPR, NeurIPS), subscribing to AI newsletters, joining professional networks, and participating in industry associations relevant to your sector

Glossary

Activation Function: Mathematical function determining neuron output based on input strength. Common types include ReLU (returns zero for negative inputs, unchanged for positive), Sigmoid (outputs between 0-1), and Tanh (outputs between -1 and 1).

Backpropagation: Training algorithm that adjusts network weights by calculating error gradients and propagating them backward through the network layers to minimize prediction errors.

Batch Normalization: Technique that normalizes inputs to each layer during training, accelerating convergence and enabling use of higher learning rates while reducing internal covariate shift.

Convolutional Layer: Core CNN component that applies learnable filters to input data, detecting features like edges, textures, and patterns through mathematical convolution operations.

Dropout: Regularization technique that randomly sets a fraction of neurons to zero during training, preventing overfitting and improving model generalization to new data.

Feature Map: Output of convolutional layer showing where specific features were detected in the input image, created by applying filters across the entire input.

Filter/Kernel: Small matrix of learnable weights that slides across input data during convolution, designed to detect specific patterns or features like edges, corners, or textures.

Fully Connected Layer: Traditional neural network layer where each neuron connects to every neuron in the previous layer, typically used in final CNN stages for classification.

Gradient: Mathematical measure of how much a function's output changes relative to changes in its input, used during training to determine weight adjustment directions.

ImageNet: Large visual database containing over 14 million labeled images across 22,000 categories, serving as standard benchmark for computer vision algorithm evaluation.

Learning Rate: Hyperparameter controlling how much to adjust model weights during training; too high causes instability, too low causes slow convergence.

Max Pooling: Downsampling operation that takes the maximum value from each pooling window, reducing spatial dimensions while preserving important features and providing a degree of translation invariance.

Overfitting: Problem where models memorize training data too specifically, achieving high training accuracy but poor performance on new, unseen data.

Padding: Adding zeros or other values around input borders to control output size after convolution and prevent information loss at image edges.

Parameters: Learnable weights and biases in neural network that get adjusted during training to minimize prediction errors and improve model performance.

Pooling Layer: CNN component that reduces spatial dimensions of feature maps through operations like max pooling or average pooling, decreasing computational requirements.

ReLU: Rectified Linear Unit activation function that outputs zero for negative inputs and unchanged value for positive inputs, most commonly used in modern CNNs.

Residual Connection: Skip connection in ResNet architectures that adds a layer's input directly to its output, allowing information to flow to later layers and mitigating the vanishing gradient problem in very deep networks.

Stride: Step size for filter movement during convolution or pooling operations; larger strides create smaller output dimensions and reduce computational requirements.

Transfer Learning: Technique using pre-trained models as starting points for new tasks, leveraging learned features to reduce training time and data requirements.

Vanishing Gradient Problem: Training difficulty in deep networks where gradients become very small in early layers, preventing effective weight updates and learning.
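Several of the glossary terms (padding, stride, ReLU, max pooling) can be seen working together in a small sketch. The output-size formula is the standard one for convolution and pooling; the function names and sample values are illustrative.

```python
def conv_output_size(input_size, kernel_size, stride=1, padding=0):
    """Output width or height after a convolution or pooling step."""
    return (input_size + 2 * padding - kernel_size) // stride + 1


def relu(x):
    """Zero for negative inputs, unchanged for positive inputs."""
    return max(0.0, x)


def max_pool2d(fmap, size=2, stride=2):
    """Take the maximum of each size x size window, moving `stride` cells at a time."""
    out_h = conv_output_size(len(fmap), size, stride)
    out_w = conv_output_size(len(fmap[0]), size, stride)
    return [
        [
            max(
                fmap[i * stride + di][j * stride + dj]
                for di in range(size)
                for dj in range(size)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Padding of 1 keeps a 3x3 convolution from shrinking a 224-pixel input.
print(conv_output_size(224, 3, stride=1, padding=1))  # 224
# A stride of 2 roughly halves the output size.
print(conv_output_size(224, 7, stride=2, padding=3))  # 112

feature_map = [
    [-1, 2, 0, 4],
    [3, -5, 1, 0],
    [0, 1, -2, -3],
    [2, 0, -1, 6],
]
activated = [[relu(v) for v in row] for row in feature_map]
pooled = max_pool2d(activated)
print(pooled)
```

ReLU first clears the negative responses, then pooling halves each spatial dimension while keeping the strongest response in every 2×2 window.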
