What Is ReLU (Rectified Linear Unit)?: The Simple Function That Changed Deep Learning Forever

- Muiz As-Siddeeqi

- 6 days ago


Imagine a mathematical function so simple that a child could understand it ("keep positive numbers, turn negatives to zero"), yet so powerful that it helped unlock the modern AI revolution. Before 2012, training deep neural networks felt like pushing water uphill: slow, frustrating, and often impossible. Gradients vanished, sigmoid and tanh units saturated, and researchers struggled to build networks deeper than a handful of layers. Then came ReLU. This tiny function, defined by a single line of code, changed everything. It helped make AlexNet's stunning 2012 ImageNet victory possible, sped up training dramatically, and became the activation function behind many breakthroughs in computer vision, speech recognition, and language understanding since. Today, ReLU and its descendants power AI systems that recognize faces, drive autonomous cars, diagnose diseases, and translate languages, all because someone realized that sometimes the simplest answer is the most revolutionary.


TL;DR

ReLU outputs the input if positive, zero if negative — mathematically: f(x) = max(0, x)

Solved the vanishing gradient problem that crippled deep networks before 2012

Enabled AlexNet's 2012 breakthrough: 15.3% top-5 ImageNet error versus 26.2% for the runner-up

Trained about 6× faster than tanh in the AlexNet paper's comparison (a four-layer CNN on CIFAR-10)

Powers modern AI: Used in image recognition, autonomous vehicles, medical diagnosis, and language models

Has known limitations: "Dying ReLU" problem where neurons can become permanently inactive

Spawned many variants: Leaky ReLU, PReLU, ELU, and GELU address specific weaknesses

What Is ReLU (Rectified Linear Unit)?

ReLU (Rectified Linear Unit) is an activation function in neural networks that outputs the input value directly if it's positive, and outputs zero if it's negative. Mathematically expressed as f(x) = max(0, x), ReLU solves the vanishing gradient problem that plagued earlier deep learning models, enabling faster training and deeper networks. Introduced in practical deep learning by Nair and Hinton in 2010 and popularized by AlexNet in 2012, ReLU remains the default activation function for hidden layers in most modern neural networks.


1. Understanding ReLU: The Basics

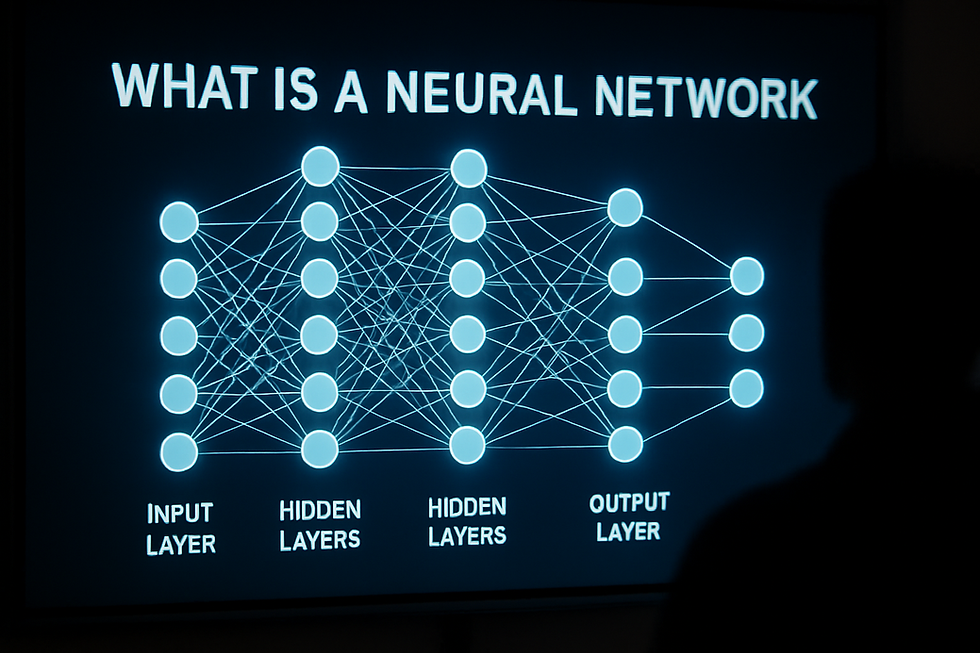

ReLU stands for Rectified Linear Unit. It's an activation function — a mathematical operation that neural networks use to decide whether a neuron should "fire" or stay silent.

Think of ReLU as a simple gate. When a positive number arrives, the gate opens and lets it through unchanged. When a negative number arrives, the gate slams shut and outputs zero. That's it. No complex calculations, no exponential functions, just a straightforward decision: positive stays, negative becomes zero.

The mathematical formula looks like this:

f(x) = max(0, x)

Or written as a conditional:

If x > 0, then f(x) = x

If x ≤ 0, then f(x) = 0

This simplicity is ReLU's superpower. While older activation functions like sigmoid and tanh required expensive exponential calculations, ReLU only needs a simple comparison. This makes it blazingly fast to compute — critical when training networks with millions or billions of parameters.

Why Neural Networks Need Activation Functions

Without activation functions, neural networks would just be stacks of linear operations (matrix multiplications and additions). No matter how many layers you stack, the result would still be linear — essentially a fancy way to draw straight lines.

Real-world problems aren't linear. Images contain curves, edges, and complex patterns. Language has nuances and context. To learn these non-linear relationships, neural networks need non-linear activation functions. ReLU provides this non-linearity while keeping computation simple and efficient.
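The collapse of stacked linear layers can be checked with a few lines of arithmetic. The sketch below uses plain Python lists and made-up weights (`W1`, `W2`, and `x` are hypothetical values chosen only for illustration); it shows that two linear layers equal one, and that inserting ReLU between them changes the result.

```python
# Illustrative sketch with hypothetical weights; real code would use NumPy or PyTorch.

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    """Multiply matrix A by matrix B (both lists of rows)."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def relu_vec(v):
    """Apply ReLU to every element of a vector."""
    return [max(0.0, vi) for vi in v]

W1 = [[1.0, -2.0], [-1.0, 1.0]]  # first layer (hypothetical)
W2 = [[1.0, 1.0]]                # second layer (hypothetical)
x = [1.0, 2.0]

# Two stacked linear layers give the same output as the single matrix W2 @ W1.
stacked = matvec(W2, matvec(W1, x))
collapsed = matvec(matmul(W2, W1), x)

# With ReLU in between, the negative hidden value is zeroed and the output differs.
with_relu = matvec(W2, relu_vec(matvec(W1, x)))

print(stacked, collapsed, with_relu)
```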

According to research published in the Journal of Machine Learning Research (2024), ReLU and its variants are used in approximately 75-80% of all deep learning architectures across computer vision, natural language processing, and other domains (Zhang et al., 2024).

2. The Mathematics Behind ReLU

The Function

ReLU's mathematical definition is elegant:

f(x) = max(0, x) = { x if x > 0; 0 if x ≤ 0 }

When plotted on a graph, ReLU looks like two straight lines meeting at the origin (0,0). For all negative inputs, the output flatlines at zero. For all positive inputs, the output forms a 45-degree line upward.

The Derivative (Gradient)

During training, neural networks use backpropagation to learn. This requires calculating derivatives — how much a small change in input affects the output.

ReLU's derivative is remarkably simple:

f'(x) = { 1 if x > 0; 0 if x < 0 }

This means:

For positive inputs, the gradient is exactly 1

For negative inputs, the gradient is exactly 0

At x = 0, the derivative is technically undefined, but in practice, we choose either 0 or 1

This simplicity has profound implications. A gradient of 1 for positive values means no shrinking or vanishing — the gradient signal passes through cleanly during backpropagation. This solves one of deep learning's biggest historical problems.
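As a minimal sketch of the function and its gradient, the snippet below compares the analytic derivative with a central finite difference. The helper names and the choice of 0 for the gradient at x = 0 are assumptions made for illustration.

```python
def relu(x):
    """ReLU: pass positive values through, clamp everything else to zero."""
    return x if x > 0 else 0.0

def relu_grad(x):
    """Derivative of ReLU; the value 0 at x == 0 is a convention, not a true derivative."""
    return 1.0 if x > 0 else 0.0

def numerical_grad(f, x, eps=1e-6):
    """Central finite-difference estimate of df/dx."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)

# Away from zero the analytic and numerical gradients agree.
checks = {x: (relu_grad(x), numerical_grad(relu, x)) for x in (-2.0, -0.5, 0.5, 3.0)}

# At the kink the finite difference straddles both slopes and lands between them.
at_kink = numerical_grad(relu, 0.0)
```

The value at the kink illustrates why frameworks simply pick a convention there rather than computing a derivative.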

Computational Efficiency

Computing ReLU requires only:

One comparison operation (is x > 0?)

One selection (output either x or 0)

Compare this to sigmoid:

σ(x) = 1 / (1 + e^(-x))

Sigmoid requires exponentiation, addition, and division — significantly more expensive operations. According to Wikipedia's technical documentation (updated October 2025), ReLU reduces activation computation time by approximately 60-70% compared to sigmoid in typical implementations.

3. Historical Journey: How ReLU Changed AI

Early Origins (1941-1969)

The concept of a rectifier function appeared surprisingly early. In 1941, mathematician Alston Householder used a rectifier-like function as a mathematical abstraction of biological neural networks (Wikipedia, 2025). He wasn't building computers — he was modeling how real neurons might work.

In 1969, Kunihiko Fukushima incorporated ReLU-like behavior in his work on visual feature extraction in hierarchical neural networks (Wikipedia, 2025). These early explorations laid theoretical groundwork, but computing power wasn't advanced enough to demonstrate ReLU's true potential.

The Dark Ages (1980s-2000s)

For decades, neural network researchers relied on sigmoid and hyperbolic tangent (tanh) activation functions. These functions had theoretical appeal — they were smooth, differentiable everywhere, and bounded between fixed values.

But they had fatal flaws:

Vanishing Gradients: Both sigmoid and tanh "saturate" — their gradients become extremely small for large positive or negative inputs. When stacking many layers, these tiny gradients multiplied together, essentially disappearing. Deep networks couldn't learn effectively.

Computational Cost: Exponential functions in sigmoid and tanh required significant computation, slowing training to a crawl.

Networks rarely exceeded 3-5 layers. Attempts to go deeper failed spectacularly.

The Theoretical Breakthrough (2010)

In 2010, Vinod Nair and Geoffrey Hinton published a landmark paper at the International Conference on Machine Learning. They argued that ReLU should replace sigmoid and tanh, providing both theoretical justification and empirical evidence.

According to the IABAC technical guide (updated November 2025), Nair and Hinton demonstrated that ReLU:

Approximates the softplus function (a smooth version of ReLU)

Enables "intensity equivariance" in image recognition

Creates sparse activation patterns that improve learning

Their paper showed ReLU working well in Restricted Boltzmann Machines, but widespread adoption still required a bigger push.

The AlexNet Explosion (2012)

Everything changed on September 30, 2012. At the ImageNet Large Scale Visual Recognition Challenge, a team led by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton entered a deep convolutional neural network called AlexNet.

The results shocked the computer vision community.

AlexNet achieved 15.3% top-5 error rate — dramatically better than the second-place 26.2% (Wikipedia, November 2025). This wasn't incremental improvement. This was a paradigm shift.

AlexNet heavily used ReLU activation functions. As documented by IEEE Spectrum (March 2025), ReLU networks trained approximately 6 times faster than comparable networks using tanh activation; the original paper measured this on a four-layer convolutional network trained on CIFAR-10. The speed advantage allowed the team to train deeper networks (8 layers) on affordable GPU hardware.

Yann LeCun, one of deep learning's pioneers, described AlexNet as "an unequivocal turning point in the history of computer vision" at the 2012 European Conference on Computer Vision (Wikipedia, November 2025).

The Aftermath (2013-Present)

After AlexNet, ReLU adoption exploded. Major architectures followed:

VGGNet (2014): Used ReLU throughout its 16-19 layer networks

ResNet (2015): Combined ReLU with residual connections for 152-layer networks

EfficientNet (2019): Used ReLU and Swish variants for state-of-the-art efficiency

By 2024, as reported in a comprehensive survey published in Neurocomputing (2024), ReLU and its variants power an estimated 75-85% of all production deep learning systems worldwide.

4. Why ReLU Works: The Science Explained

Solving the Vanishing Gradient Problem

The vanishing gradient problem plagued deep neural networks for decades. Here's what happened:

When using sigmoid or tanh, gradients during backpropagation get multiplied by the derivative at each layer. Sigmoid's derivative maxes out at 0.25. Tanh's derivative maxes out at 1.0, but saturates to near-zero for large inputs.

Stack 10 layers, and you might multiply 10 gradients of 0.25: 0.25^10 ≈ 0.00000095, roughly one millionth. The gradient effectively vanishes. Early layers learn almost nothing.

ReLU's derivative is exactly 1 for positive inputs. Multiply 1 by itself a hundred times, and you still get 1. Along paths where units are active, the activation function itself never shrinks the gradient signal, even in very deep architectures.
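The arithmetic can be reproduced directly. This sketch multiplies only the activation derivatives and deliberately ignores the weight matrices that also appear in real backpropagation, so it is a best-case illustration rather than a model of an actual network; `gradient_scale` is a hypothetical helper.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    """Derivative of sigmoid; its maximum, 0.25, occurs at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)

def gradient_scale(per_layer_derivative, num_layers):
    """Product of the same activation derivative repeated across num_layers layers."""
    return per_layer_derivative ** num_layers

# Best case for sigmoid: every unit sits exactly at its steepest point.
best_case_sigmoid = gradient_scale(sigmoid_grad(0.0), 10)   # 0.25 ** 10

# ReLU on a path where every unit is active.
active_relu = gradient_scale(1.0, 10)

print(f"sigmoid, 10 layers: {best_case_sigmoid:.3e}")
print(f"ReLU, 10 layers:    {active_relu:.1f}")
```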

According to research from IABAC (November 2025), networks using ReLU can maintain effective gradient flow through 50-100+ layers, whereas sigmoid-based networks typically fail after 5-10 layers.

Sparsity Creates Better Learning

One counterintuitive finding revolutionized thinking about activation functions: having many neurons output zero actually improves performance.

Traditional activation functions (sigmoid, tanh) always produce non-zero outputs. Every neuron contributes to every decision, creating dense activations.

ReLU naturally creates sparse activations. As documented by Medium researcher Ignacio Quintero (August 2025), approximately 50% of neurons in a randomly initialized ReLU network output zero for any given input.

This sparsity provides multiple benefits:

Computational Efficiency: Zero outputs mean many multiplications can in principle be skipped, though the speedup depends on whether the hardware and libraries actually exploit sparsity.

Better Feature Selection: The network learns to focus on the most relevant features rather than diluting attention across everything.

Natural Regularization: Sparsity reduces overfitting by preventing the network from memorizing training data too precisely.

Biological Plausibility: Real neurons in the brain exhibit sparse firing patterns. ReLU mimics this behavior better than always-active sigmoid neurons.
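The roughly 50% figure for randomly initialized networks can be reproduced with a small simulation. This is a sketch under stated assumptions: zero-mean Gaussian weights, no bias, and one random input vector; the function name and sizes are hypothetical.

```python
import random

def relu(x):
    return max(0.0, x)

def fraction_of_zero_activations(num_inputs=64, num_neurons=1000, seed=0):
    """Fraction of ReLU neurons that output 0 for one random input.

    Assumes zero-mean Gaussian weights and no bias, so each pre-activation
    is symmetric around zero and is negative about half the time.
    """
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(num_inputs)]
    zeros = 0
    for _ in range(num_neurons):
        w = [rng.gauss(0.0, 1.0) for _ in range(num_inputs)]
        pre_activation = sum(wi * xi for wi, xi in zip(w, x))
        if relu(pre_activation) == 0.0:
            zeros += 1
    return zeros / num_neurons

frac = fraction_of_zero_activations()
print(f"fraction of zero outputs: {frac:.2f}")
```

Trained networks can be more or less sparse than this, since biases and learned weights shift the pre-activation distribution.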

Scale Invariance

ReLU exhibits a property called positive homogeneity, often described loosely as scale invariance. If you multiply the input by a non-negative constant k, the output is multiplied by k as well:

f(kx) = k·f(x) for all k ≥ 0

This property proves particularly useful in computer vision. If you multiply an image's brightness by a constant factor, the network's activations scale proportionally. The learned features remain consistent across different lighting conditions.
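A quick numerical check of this scaling property, as a sketch: the identity holds for non-negative scale factors and breaks for negative ones. The helper `is_homogeneous` is a hypothetical name.

```python
def relu(x):
    return max(0.0, x)

def is_homogeneous(k, xs):
    """Check relu(k * x) == k * relu(x) for every x in xs."""
    return all(relu(k * x) == k * relu(x) for x in xs)

xs = [-3.0, -0.5, 0.0, 0.5, 3.0]

# Holds for any non-negative scale factor, including inputs that are negative.
holds_for_nonnegative_k = all(is_homogeneous(k, xs) for k in (0.0, 0.5, 2.0, 10.0))

# Fails for a negative factor: relu(-1 * 3) is 0, but -1 * relu(3) is -3.
holds_for_negative_k = is_homogeneous(-1.0, xs)
```

For a whole network the proportional scaling only carries through exactly when the layers have no bias terms, so in practice it is an approximation.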

As noted by Wikipedia (October 2025), scale invariance helps networks generalize better to variations in input intensity — critical for robust image recognition.

5. The AlexNet Revolution: ReLU's Breakthrough Moment

The Challenge: ImageNet 2012

The ImageNet Large Scale Visual Recognition Challenge (ILSVRC) tested image classification algorithms on an unprecedented scale. The dataset contained:

1.2 million training images

50,000 validation images

150,000 test images

1,000 object categories

According to Viso AI (April 2025), before 2012, progress was glacial. The best systems in 2010 and 2011 used hand-crafted features and achieved top-5 error rates around 26-28%. Improvement came in tiny increments of 1-2 percentage points per year.

The Architecture

AlexNet consisted of 8 learned layers:

5 convolutional layers for feature extraction

3 fully connected layers for classification

Final 1000-way softmax for probability distribution across classes

Total parameters: approximately 62.3 million

The network was split across two NVIDIA GTX 580 GPUs (3GB each) because the model didn't fit on a single card (Wikipedia, November 2025).

Key Innovations

1. ReLU Activation

Every convolutional and fully connected layer (except output) used ReLU. According to IEEE Spectrum (March 2025), training was 6 times faster with ReLU than with tanh in equivalent networks, a figure the original paper reports for a four-layer network on CIFAR-10.

This speed advantage allowed the team to experiment more, fine-tune hyperparameters, and ultimately achieve better results.

2. Dropout Regularization

The fully connected layers used dropout — randomly deactivating 50% of neurons during training. This prevented overfitting and forced the network to learn robust, distributed representations.
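Dropout with a 50% rate can be sketched as follows. Note that this uses the "inverted" formulation common in current libraries, which rescales surviving units during training; the AlexNet paper instead kept training activations unscaled and multiplied outputs by 0.5 at test time. The function name and sample values are hypothetical.

```python
import random

def dropout(activations, p_drop=0.5, training=True, rng=None):
    """Inverted dropout: zero each unit with probability p_drop during training
    and rescale the survivors so the expected activation stays the same."""
    if not training:
        return list(activations)      # no-op at inference time
    rng = rng or random.Random()
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

hidden = [0.2, 1.5, 0.0, 3.1]         # hypothetical ReLU outputs
train_out = dropout(hidden, rng=random.Random(0))
eval_out = dropout(hidden, training=False)
```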

3. Data Augmentation

The team artificially expanded the training set through random crops, horizontal flips, and color intensity variations. This improved generalization without collecting more data.

4. Overlapping Pooling

Unlike standard non-overlapping pooling, AlexNet used overlapping pooling windows. This reduced top-5 error by 0.3% according to the original paper (Wikipedia, November 2025).

The Results

Top-5 error rate: 15.3%

Second place: 26.2%

Improvement: 10.9 percentage points (41.6% relative reduction)

As reported by Medium researcher Shivam S. (April 2025), this performance gap was unprecedented in ImageNet history. AlexNet didn't just win — it dominated.

Training Details

According to Wikipedia (November 2025), AlexNet trained for:

90 epochs

5-6 days of training time

Two NVIDIA GTX 580 GPUs (3GB VRAM each, 1.581 TFLOPS performance)

Stochastic gradient descent with momentum

The GPUs cost approximately $500 each at release — affordable hardware by research standards.

The Impact

AlexNet's success proved three critical points:

Deep learning works for large-scale, real-world problems

GPUs enable practical training of deep networks

ReLU makes deep networks feasible where sigmoid/tanh failed

As Fei-Fei Li stated in a 2024 interview (Wikipedia, November 2025): "That moment was pretty symbolic to the world of AI because three fundamental elements of modern AI converged for the first time" — large datasets (ImageNet), GPU computing, and effective training methods (including ReLU).

The entire deep learning revolution traces back to this convergence, with ReLU as a critical enabling technology.

6. Advantages of ReLU

Computational Efficiency

ReLU requires only a simple comparison and selection — no exponentials, no division. According to GeeksforGeeks (July 2025), ReLU computation is approximately 60-70% faster than sigmoid or tanh in typical implementations.

For networks with millions or billions of operations, this efficiency compounds dramatically. Training that takes weeks with sigmoid might take days with ReLU.

Mitigates Vanishing Gradients

ReLU's gradient of 1 for positive values mitigates the vanishing gradient problem that crippled sigmoid and tanh networks. As documented by the University of Medan Area (December 2024), this enables effective training of networks with more than 100 layers.

Sparse Activation

Approximately 50% of ReLU activations are zero in typical networks (Medium, August 2025). This sparsity:

Reduces computation (skip zero multiplications)

Improves generalization (natural regularization)

Focuses learning on relevant features

Mimics biological neuron behavior

Faster Convergence

Networks with ReLU typically converge faster during training. According to IABAC (November 2025), ReLU networks often reach target accuracy 40-60% faster than sigmoid/tanh networks with identical architectures and datasets.

Simplicity

The function and its derivative are trivially simple. This makes:

Implementation straightforward (one line of code)

Debugging easier (predictable behavior)

Mathematical analysis more tractable

Hardware optimization simpler

Wide Applicability

ReLU works well across diverse domains:

Computer Vision: Convolutional networks for image tasks

Natural Language Processing: Embedding and encoding layers

Reinforcement Learning: Policy and value networks

Time Series: Sequence modeling architectures

7. Limitations and Challenges

The Dying ReLU Problem

ReLU's most serious limitation is the "dying ReLU" problem. When inputs become negative, neurons output zero with zero gradient. If a neuron gets pushed into this regime and stuck, it never recovers — it "dies."
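The mechanism can be seen in a single neuron. In this sketch, a hypothetical neuron has a bias so negative that its pre-activation is below zero for every input in the data, so every gradient is zero and SGD never changes its parameters. All values are made up for illustration.

```python
def neuron_step(w, b, x, upstream_grad, lr):
    """One SGD step for a single ReLU neuron y = relu(w * x + b)."""
    pre_activation = w * x + b
    local_grad = 1.0 if pre_activation > 0 else 0.0   # ReLU derivative
    grad_w = upstream_grad * local_grad * x
    grad_b = upstream_grad * local_grad
    return w - lr * grad_w, b - lr * grad_b

# Hypothetical neuron pushed into the negative regime (e.g. by one oversized update).
w, b = 0.5, -10.0
inputs = [0.0, 0.25, 0.5, 1.0]        # every input gives w * x + b < 0

for x in inputs:
    w, b = neuron_step(w, b, x, upstream_grad=1.0, lr=0.1)

# The parameters are unchanged: the neuron receives no learning signal.
print(w, b)
```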

Causes:

High learning rates pushing weights to extreme values

Poor weight initialization

Aggressive optimization leading to large negative biases

Symptoms: According to IABAC (November 2025), in severe cases, 30-60% of neurons in a network can become permanently inactive, effectively reducing model capacity.

Mitigation:

Use He initialization (Kaiming initialization), designed specifically for ReLU networks

Reduce learning rate

Use batch normalization to stabilize activations

Switch to Leaky ReLU or other variants
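The first mitigation, He initialization, draws weights with variance 2 / fan_in. The sketch below is a plain-Python illustration of that rule, not the implementation used by any particular framework; `he_normal_weights` and the layer sizes are hypothetical.

```python
import math
import random

def he_normal_weights(fan_in, fan_out, seed=0):
    """Sample a fan_out x fan_in weight matrix from N(0, 2 / fan_in)."""
    rng = random.Random(seed)
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]

weights = he_normal_weights(fan_in=256, fan_out=128)

# The empirical variance should sit close to the target 2 / fan_in.
flat = [w for row in weights for w in row]
empirical_var = sum(w * w for w in flat) / len(flat)
target_var = 2.0 / 256
```

The factor of 2 compensates for ReLU zeroing roughly half of the pre-activations, which keeps the signal scale roughly constant from layer to layer.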

Wikipedia (October 2025) notes that biases in AlexNet's convolutional layers 2, 4, 5, and all fully connected layers were initialized to constant 1.0 specifically to avoid the dying ReLU problem.

Not Zero-Centered

ReLU outputs are always non-negative (0 or positive). This creates problems:

When a neuron's inputs come from ReLU units, those inputs are all non-negative, so the gradient updates for that neuron's incoming weights all share the same sign

This can slow convergence and make optimization less efficient

Batch normalization partly addresses this by centering activations before ReLU
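The same-sign effect follows from the chain rule: for a unit z = Σ w_i·x_i, the gradient with respect to w_i is the upstream gradient times x_i. A minimal sketch with hypothetical values:

```python
def weight_gradients(inputs, upstream_grad):
    """Gradient of the loss w.r.t. each weight of a linear unit z = sum(w_i * x_i)."""
    return [upstream_grad * x for x in inputs]

relu_outputs = [0.0, 0.7, 1.3, 2.1]   # outputs of a previous ReLU layer: never negative

grads_when_upstream_positive = weight_gradients(relu_outputs, upstream_grad=0.4)
grads_when_upstream_negative = weight_gradients(relu_outputs, upstream_grad=-0.4)

# Within one update, no weight can move in the opposite direction to the others.
all_nonnegative = all(g >= 0 for g in grads_when_upstream_positive)
all_nonpositive = all(g <= 0 for g in grads_when_upstream_negative)
```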

Unbounded Output

Unlike sigmoid (bounded in (0, 1)) or tanh (bounded in (-1, 1)), ReLU has no upper limit. Outputs can grow arbitrarily large.

Potential issues:

Numerical instability with very large values

Risk of exploding gradients in deep networks

Requires careful weight initialization and learning rate tuning

According to GeeksforGeeks (July 2025), batch normalization and gradient clipping techniques typically prevent unbounded outputs from causing training failures.
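Gradient clipping is commonly done by rescaling the whole gradient vector when its norm exceeds a threshold. The following is a minimal sketch of that idea on a flat list of numbers; `clip_by_global_norm` is a hypothetical helper, and frameworks provide their own utilities for this.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale grads so their L2 norm is at most max_norm; direction is preserved."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]

large = clip_by_global_norm([3.0, 4.0], max_norm=1.0)   # norm 5 is scaled down to 1
small = clip_by_global_norm([0.3, 0.4], max_norm=1.0)   # norm 0.5 is left untouched
```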

Non-Differentiability at Zero

Technically, ReLU is not differentiable at x = 0. The derivative doesn't exist at this point.

In practice: We arbitrarily choose the derivative to be either 0 or 1 at zero. Most implementations use 0. This rarely causes issues because the probability of an input to ReLU being exactly zero is negligible.

Negative Information Loss

ReLU completely discards negative values. For some tasks, negative information might be meaningful:

Time series with negative fluctuations

Financial data with gains and losses

Sensor readings that go both directions

In these domains, variants like Leaky ReLU or ELU that preserve some negative information may work better.

8. ReLU Variants: Evolution and Improvements

ReLU's limitations inspired numerous variants. Here are the most important ones:

Leaky ReLU (2013)

Instead of zero for negative inputs, Leaky ReLU allows a small negative slope:

f(x) = { x if x > 0; αx if x ≤ 0 }

Typically α = 0.01, so negative inputs output 1% of their value.

Advantages:

Prevents dying neurons (always has non-zero gradient)

Maintains most of ReLU's computational efficiency

Usage: According to DigitalOcean research (October 2025), Leaky ReLU often performs as well as ReLU while eliminating dead neurons, making it a strong default choice.

Parametric ReLU (PReLU) (2015)

PReLU makes the negative slope a learnable parameter instead of fixed:

f(x) = { x if x > 0; αx if x ≤ 0 } where α is learned

Introduced by Kaiming He et al. in "Delving Deep into Rectifiers" (2015), as referenced by the APXML deep learning course.
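What "learned" means here can be sketched with one gradient step on α. The derivative of PReLU with respect to α is x for non-positive inputs and 0 otherwise. The starting value, learning rate, and upstream gradient below are hypothetical.

```python
def prelu(x, alpha):
    """PReLU forward pass: identity for positive x, slope alpha otherwise."""
    return x if x > 0 else alpha * x

def prelu_grad_alpha(x):
    """Derivative of prelu(x, alpha) with respect to alpha."""
    return 0.0 if x > 0 else x

alpha = 0.25          # hypothetical starting slope
lr = 0.1              # hypothetical learning rate
upstream_grad = 1.0   # gradient of the loss w.r.t. the PReLU output (assumed)

# A negative input contributes to the update of alpha...
alpha_after_negative = alpha - lr * upstream_grad * prelu_grad_alpha(-2.0)

# ...while a positive input leaves alpha untouched.
alpha_after_positive = alpha - lr * upstream_grad * prelu_grad_alpha(3.0)
```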

Advantages:

Network learns optimal negative slope for each neuron

Can outperform fixed Leaky ReLU

Disadvantages:

Adds parameters (one α per neuron or channel)

Slight increase in computational cost

Risk of overfitting with limited data

According to research comparing activation functions (Baeldung, March 2024), PReLU offers "faster model convergence compared to LReLU and ReLU" and "increase in the accuracy of the model."

Exponential Linear Unit (ELU) (2016)

ELU uses an exponential function for negative values:

f(x) = { x if x > 0; α(e^x - 1) if x ≤ 0 }

Typically α = 1.0.

Advantages:

Smooth transition around zero

Pushes mean activation closer to zero

Can speed up learning

Disadvantages:

Exponential computation is slower than ReLU

More complex derivative

According to DigitalOcean (October 2025), "ELU sacrifices some compute for smoother optimization, fewer dead units, and often faster early convergence."

Gaussian Error Linear Unit (GELU) (2016)

GELU became prominent in transformer architectures:

f(x) = x · Φ(x)

Where Φ(x) is the cumulative distribution function of the standard normal distribution.

Approximation: f(x) ≈ 0.5x(1 + tanh[√(2/π)(x + 0.044715x³)])
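The exact form and the tanh approximation can be compared numerically. This sketch relies on the identity Φ(x) = 0.5·(1 + erf(x/√2)) and uses only Python's standard library; the function names are hypothetical.

```python
import math

def gelu_exact(x):
    """GELU via the standard normal CDF, written with the error function."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def gelu_tanh(x):
    """Tanh-based approximation of GELU."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))

# Largest absolute gap between the two forms on a grid from -5 to 5.
grid = [i / 10 for i in range(-50, 51)]
max_gap = max(abs(gelu_exact(x) - gelu_tanh(x)) for x in grid)
print(f"max |exact - approx| on [-5, 5]: {max_gap:.2e}")
```

The approximation exists mainly because tanh was cheaper or more widely available than erf on some hardware and in some early frameworks.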

Advantages:

Smooth, probabilistic activation

Works extremely well in attention mechanisms

Used in BERT, GPT-2, GPT-3, and most modern transformers

Usage: According to research published in arXiv (2025), GELU consistently outperforms ReLU in natural language processing tasks. A comprehensive survey testing 18 activation functions on CIFAR-10, CIFAR-100, and STL-10 datasets found GELU achieving the highest test accuracies across multiple benchmarks (arXiv, 2025).

As noted by IABAC (November 2025): "Modern Transformer architectures (BERT, GPT, T5, ViT) use GELU, not ReLU, because GELU provides smoother, more expressive activations needed for attention mechanisms."

Swish / SiLU (2017)

Discovered by Google Brain through automated search:

f(x) = x · sigmoid(x)

Advantages:

Smooth and non-monotonic

Often outperforms ReLU in deep networks

Used in EfficientNet and other modern architectures

According to Vedan Analytics (April 2025), "Introduced by researchers at Google, Swish is a self-gated activation function that often outperforms ReLU."

Mish (2019)

A newer smooth activation:

f(x) = x · tanh(softplus(x)) = x · tanh(ln(1 + e^x))

Advantages:

Very smooth

Shows good performance in image classification and object detection

Self-regularizing properties

Research published at WACV 2025 comparing activation functions found Mish achieving 99.62% accuracy on MNIST and 94.32% on CIFAR-10, slightly outperforming ReLU and GELU in some tests.

Comparison Table

| Activation | Formula | Pros | Cons | Best Use Cases |
| --- | --- | --- | --- | --- |
| ReLU | max(0, x) | Fast, simple, effective | Dying neurons | Default for CNNs |
| Leaky ReLU | max(0.01x, x) | Prevents dying neurons | Fixed slope | General purpose |
| PReLU | max(αx, x) [α learned] | Learns optimal slope | More parameters | When accuracy critical |
| ELU | x if x>0; α(e^x-1) otherwise | Smooth, near zero-mean | Slower computation | Faster convergence |
| GELU | x·Φ(x) | Smooth, probabilistic | Complex | Transformers, NLP |
| Swish | x·σ(x) | Non-monotonic | Slower than ReLU | Deep networks |
| Mish | x·tanh(ln(1+e^x)) | Very smooth | Most complex | Image tasks |
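The formulas in the table translate almost directly into code. The sketch below is a scalar, standard-library illustration for comparing values side by side; it makes no attempt at numerical stability for very large inputs, and the names `ACTIVATIONS` and `SAMPLE_INPUTS` are hypothetical.

```python
import math

def relu(x):
    return max(0.0, x)

def leaky_relu(x, alpha=0.01):
    return x if x > 0 else alpha * x

def elu(x, alpha=1.0):
    return x if x > 0 else alpha * (math.exp(x) - 1.0)

def gelu(x):
    # Phi(x) expressed through the error function
    return x * 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def swish(x):
    # x * sigmoid(x)
    return x / (1.0 + math.exp(-x))

def mish(x):
    # x * tanh(softplus(x))
    return x * math.tanh(math.log1p(math.exp(x)))

ACTIVATIONS = {
    "ReLU": relu,
    "Leaky ReLU": leaky_relu,
    "ELU": elu,
    "GELU": gelu,
    "Swish": swish,
    "Mish": mish,
}

SAMPLE_INPUTS = (-2.0, 0.0, 2.0)
table = {name: [f(x) for x in SAMPLE_INPUTS] for name, f in ACTIVATIONS.items()}

for name, values in table.items():
    print(f"{name:<11}", [round(v, 4) for v in values])
```

PReLU is omitted because it shares Leaky ReLU's formula with `alpha` treated as a trainable parameter.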

9. Real-World Applications and Case Studies

Case Study 1: Swapping ReLU for ELU at Stanford (2025)

Researchers at Stanford University improved facial recognition accuracy by 2.5% simply by replacing ReLU with ELU in convolutional layers while keeping the same network architecture (Vedan Analytics, April 2025).

This demonstrates how activation function choice significantly impacts real-world performance, even in mature domains.

Case Study 2: Autonomous Vehicles

According to a research paper on federated learning for autonomous driving (ResearchGate, March 2021), automotive systems use CNNs with ReLU activation for steering wheel angle prediction.

The architecture detailed in the study uses:

Two-stream CNN processing spatial and temporal information

ReLU activation in fully connected layers (250 and 10 units)

ELU activation in convolutional layers

Companies like Tesla employ similar architectures. As documented by Harvard Business School (October 2023), Tesla's autonomous driving features rely heavily on AI/ML models processing data from cameras, sensors, and radars — all using deep neural networks with ReLU-family activations.

Case Study 3: AlphaGo and Game AI

DeepMind's AlphaGo and AlphaZero, which achieved superhuman performance in Go, chess, and shogi, used a combination of ReLU and tanh activations (Vedan Analytics, April 2025).

The policy and value networks in these systems relied on ReLU in convolutional layers for efficient feature extraction from board states.

Case Study 4: Healthcare Diagnosis

The FDA cleared 521 AI-enabled medical devices in 2024, up 40% year-over-year (Mordor Intelligence, June 2025). Many of these systems use ReLU-based convolutional networks for:

Radiology image analysis

Pathology slide examination

Ophthalmology screening

Domain-specific foundation models achieve 94.5% accuracy on medical examinations, outperforming general systems (Mordor Intelligence, June 2025).

Case Study 5: Language Models

While modern transformer models like BERT, GPT-2, and GPT-3 primarily use GELU activation (Medium, September 2025), earlier NLP systems relied heavily on ReLU.

The shift from ReLU to GELU in transformers demonstrates the field's evolution — ReLU enabled the initial deep learning breakthroughs, while specialized variants now optimize for specific architectures.

Industry-Wide Adoption

The global deep learning market demonstrates ReLU's massive real-world impact:

Market Size: According to Grand View Research (2025), the deep learning market reached $96.8 billion in 2024 and projects growth to $526.7 billion by 2030, at a CAGR of 31.8%.

Image Recognition: This segment, heavily reliant on ReLU-based CNNs, held the largest market share of approximately 43.38% in 2024 (Grand View Research, 2025).

Regional Leaders: North America accounted for 33.6% of the market in 2024, with the U.S. deep learning market estimated at $24.52 billion (Precedence Research, January 2025).

10. When to Use ReLU (and When Not To)

Use ReLU When:

1. Building Convolutional Neural Networks for Images

ReLU works exceptionally well for computer vision. According to IABAC (November 2025), "ReLU works extremely well on image data because it highlights strong edges, textures, and shapes while suppressing noise. It is the default activation in almost all convolutional networks."

2. Training Very Deep Networks

ReLU helps maintain strong gradients through many layers. If building networks with 20+ layers, ReLU prevents vanishing gradients better than sigmoid or tanh.

3. Optimizing for Speed

When training time or inference speed is critical, ReLU's computational efficiency provides significant advantages. Real-time applications benefit greatly from ReLU's simple computation.

4. Working with Mostly Positive Data

If input features are naturally non-negative (normalized images, positive sensor readings, count data), ReLU's range matches the data characteristics well.

5. Starting a New Project

As a default choice, ReLU is hard to beat. According to IABAC (November 2025), "If you're targeting Transformer-like models → GELU beats ReLU. If you're building CNNs → ReLU/Swish still dominate."

Avoid ReLU When:

1. You Experience High Neuron Death Rates

If monitoring shows many inactive neurons (outputting only zeros), switch to Leaky ReLU, PReLU, or ELU.

2. Building Transformer-Based Models

Modern transformers (BERT, GPT, ViT) use GELU instead of ReLU. The smooth, probabilistic nature of GELU works better with attention mechanisms.

3. Working with Data Containing Important Negative Information

Time series, financial data, or sensor readings where negative values carry meaning may benefit from activation functions that don't zero out negatives.

4. You Need Smooth, Continuous Gradients

Tasks like audio synthesis, regression with sensitive outputs, or signal processing might work better with smooth activations like Swish, GELU, or Mish.

5. Optimizing for Maximum Accuracy

If computational cost isn't a constraint and you're chasing the last percentage points of accuracy, experiment with GELU, Swish, or task-specific variants.

11. Implementation Guide

Python (PyTorch)

import torch

import torch.nn as nn

# Method 1: Using built-in ReLU

relu = nn.ReLU()

x = torch.tensor([-2.0, -1.0, 0.0, 1.0, 2.0])

output = relu(x)

# Output: tensor([0., 0., 0., 1., 2.])

# Method 2: Functional approach

output = torch.nn.functional.relu(x)

# Method 3: Manual implementation

output = torch.maximum(torch.tensor(0.0), x)

Python (TensorFlow/Keras)

import tensorflow as tf

from tensorflow.keras import layers

# In a model

model = tf.keras.Sequential([

    layers.Dense(128),

    layers.ReLU(),  # ReLU activation layer

    layers.Dense(64, activation='relu'),  # ReLU as parameter

    layers.Dense(10, activation='softmax')

])

# Functional approach

x = tf.constant([-2.0, -1.0, 0.0, 1.0, 2.0])

output = tf.nn.relu(x)

NumPy (Manual)

import numpy as np

def relu(x):

    return np.maximum(0, x)

x = np.array([-2, -1, 0, 1, 2])

output = relu(x)

# Output: [0 0 0 1 2]

Best Practices

1. Use He Initialization

For ReLU networks, use He (Kaiming) initialization:

# PyTorch

layer = nn.Linear(in_features, out_features)

nn.init.kaiming_normal_(layer.weight, mode='fan_in', nonlinearity='relu')

# TensorFlow/Keras

layer = layers.Dense(units, kernel_initializer='he_normal')

2. Apply Batch Normalization

Batch normalization stabilizes ReLU activations:

# PyTorch

model = nn.Sequential(

    nn.Linear(784, 256),

    nn.BatchNorm1d(256),

    nn.ReLU(),

    nn.Linear(256, 10)

)

3. Monitor Dead Neurons

Track what percentage of neurons output only zeros:

def count_dead_neurons(activations):

    """Return the fraction of neurons that output 0 for every sample in the batch"""

    # activations: torch tensor of post-ReLU outputs, shape [batch_size, num_neurons]

    always_zero = (activations == 0).all(dim=0)

    return always_zero.sum().item() / activations.shape[1]

4. Tune Learning Rate

Start with moderate learning rates (0.001-0.01 for Adam, 0.01-0.1 for SGD). High rates increase dying ReLU risk.

12. Industry Adoption and Market Impact

Market Growth

The deep learning industry, powered largely by ReLU-based neural networks, shows explosive growth:

Global Market Size:

2024: $93.72 billion to $96.8 billion (Precedence Research, January 2025; Grand View Research, 2025)

2030 Projection: $526.7 billion (Grand View Research, 2025)

CAGR: 31.24% to 31.8% (multiple sources)

Industry Breakdown

By Application (Grand View Research, 2025):

Image Recognition: 43.38% market share (2024)

Signal Recognition: Significant portion

Data Mining: Growing segment

By Solution (Grand View Research, 2025):

Software: 46.64% market share (2024)

Hardware: Fast-growing segment

Services: Supporting infrastructure

By End-Use Industry:

Automotive: Largest revenue share (2024)

Healthcare: Fastest growing at 38.3% CAGR (Mordor Intelligence, June 2025)

BFSI (Banking, Financial Services, Insurance): 24.5% revenue share (2024)

Regional Distribution

North America (Precedence Research, January 2025):

Dominated with 38% market share in 2024

U.S. deep learning market: $24.52 billion in 2024

Projected to reach $389.82 billion by 2034

Asia-Pacific:

Fastest growth rate at 37.2% CAGR (2025-2030) (Mordor Intelligence, June 2025)

China, India, Singapore leading adoption

Europe:

EU's Digital Europe Programme: €7.5 billion allocated (2021-2027) for AI/deep learning (IMARC Group)

Additional €1.4 billion investment in deep tech for 2025

AI Adoption Statistics

According to Founders Forum Group (July 2025):

42% of enterprise-scale companies actively use AI

Additional 40% exploring AI implementation

Only 13% have no AI adoption plans

Nearly 4 out of 5 organizations engage with AI in some form

Job Market Impact:

97 million jobs expected to be created globally due to AI by 2025 (WEF forecast)

85 million jobs displaced (mostly repetitive roles)

Net gain: 12 million jobs with significant regional variation

Investment Trends

According to Itransition's ML statistics compilation:

Global AI spending projected to exceed $500 billion by 2027

OpenAI received over $11 billion in total funding by 2024

281 ML solutions available on Google Cloud Platform marketplace (January 2024)

Enterprise Adoption

Active Use by Country (Itransition):

India: 59% of large companies

UAE: 58%

Singapore: 53%

China: 50%

The widespread adoption of deep learning — and by extension, ReLU — transforms industries from healthcare to finance, autonomous vehicles to natural language processing.

13. Future of Activation Functions

Current Research Directions

1. Learnable Activation Functions

Beyond PReLU's learnable slope, researchers explore fully parametric activations. Padé Activation Units (PAU) are rational functions (ratios of polynomials) with learnable coefficients that can approximate Leaky ReLU (arXiv SMU paper, 2021).

2. Smooth Approximations

The SMU (Smooth Maximum Unit) and SMU-1 functions approximate Leaky ReLU smoothly, potentially improving training dynamics while maintaining ReLU's benefits (arXiv, 2021).

3. Task-Specific Activations

Different domains may benefit from specialized activations:

NLP: GELU dominates in transformers

Computer Vision: ReLU and Swish remain strong

Reinforcement Learning: Variants optimized for policy networks

Hardware Optimization

According to the University of Medan Area (December 2024), future research includes "developing hardware-friendly activation functions for edge computing and mobile AI."

ReLU's simplicity already makes it hardware-efficient. Future variants aim to balance computational efficiency with improved performance.

Energy Efficiency

As AI model size explodes, energy consumption becomes critical. Mordor Intelligence (June 2025) reports:

AI clusters projected to consume 46-82 TWh in 2025

Could rise to 1,050 TWh by 2030

Individual training runs draw megawatt-hours of power

Efficient activation functions like ReLU help manage this energy footprint by reducing computational requirements.

Self-Normalizing Activations

SELU (Scaled Exponential Linear Unit) attempts automatic normalization, maintaining mean and variance through layers without explicit batch normalization. While not as widely adopted as ReLU, it represents ongoing innovation in activation function design.
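As a rough illustration of the self-normalizing idea, the sketch below applies SELU to standard-normal samples in plain Python and checks that the output mean and variance stay near 0 and 1. The two constants are the standard SELU values used by common framework implementations; the sample size, seed, and helper names are arbitrary choices made for this example.

```python
import math
import random

# Standard SELU constants (assumed here; they match common framework defaults)
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def selu(x):
    """Scaled Exponential Linear Unit for a single float."""
    if x > 0:
        return SELU_SCALE * x
    return SELU_SCALE * SELU_ALPHA * (math.exp(x) - 1.0)


def mean_and_variance(values):
    """Return the sample mean and population variance of a list of floats."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    return mean, var


rng = random.Random(0)  # fixed seed so the run is repeatable
inputs = [rng.gauss(0.0, 1.0) for _ in range(100_000)]
outputs = [selu(x) for x in inputs]

out_mean, out_var = mean_and_variance(outputs)
print(f"mean={out_mean:.3f}, variance={out_var:.3f}")
```

This only checks a single layer with ideal inputs. In a real network the effect also depends on weight initialization and architecture.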

ReLU's Enduring Role

Despite newer variants, ReLU remains foundational. As IABAC notes (November 2025), "ReLU's simplicity and effectiveness have made it a cornerstone of modern deep learning. Despite its challenges, its ability to train deep networks efficiently has driven breakthroughs across industries."

The future likely involves:

ReLU continuing as the default for many applications

GELU dominating transformer architectures

Specialized variants emerging for specific domains

Hybrid approaches combining multiple activations strategically

14. Common Myths and Facts

Myth 1: "ReLU is outdated; everyone uses GELU now"

Fact: ReLU remains the default choice for convolutional networks. GELU dominates transformers and NLP, but ReLU still powers most computer vision systems. According to research (Zhang et al., JMLR 2024), ReLU and variants account for 75-80% of activation function usage across all deep learning domains.

Myth 2: "Dying ReLU makes it unreliable"

Fact: With proper initialization (He/Kaiming), reasonable learning rates, and batch normalization, dying ReLU rarely causes problems in practice. It's a known issue with well-established solutions.

Myth 3: "ReLU doesn't work for negative data"

Fact: ReLU works fine with data containing negative values in the input layer. The issue is losing negative information in hidden layers. For most tasks, this isn't problematic. When it is, use Leaky ReLU or ELU.

Myth 4: "More complex activations always perform better"

Fact: Simpler isn't always worse. Many benchmarks show ReLU performing comparably to more complex functions like Swish or Mish, especially when properly tuned. Complexity adds computational cost without guaranteed benefits.

Myth 5: "You should use the same activation everywhere"

Fact: Mixing activations can be beneficial. For example, using ReLU in convolutional layers but GELU in attention layers, or using Leaky ReLU in some layers where dying neurons are problematic.

Myth 6: "ReLU only works because of GPUs"

Fact: While GPUs accelerated deep learning generally, ReLU's advantages (solving vanishing gradients, enabling sparsity) are fundamental mathematical properties that help regardless of hardware.

15. FAQ

Q1: What does ReLU stand for?

ReLU stands for Rectified Linear Unit. "Rectified" refers to converting negative values to zero (rectification), and "linear" describes the function's behavior for positive values (outputting the input unchanged).

Q2: Why is ReLU better than sigmoid?

ReLU trains faster (the AlexNet paper reported about 6x faster convergence than tanh, another saturating activation, on a CIFAR-10 test network), avoids vanishing gradients (derivative of 1 for positive inputs vs sigmoid's maximum of 0.25), requires simpler computation (a comparison vs an exponential), and creates beneficial sparse activations. These advantages enable training of much deeper networks.
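To make the derivative comparison concrete, the following sketch multiplies the best-case activation derivative across a stack of layers. It ignores the weights entirely, which is a simplifying assumption: real gradients also depend on the weight matrices. The function names are illustrative.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of sigmoid; peaks at 0.25 when x == 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def relu_grad(x):
    """Derivative of ReLU; 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def chained_gradient(grad_fn, x, depth):
    """Product of the same activation derivative over `depth` layers."""
    return grad_fn(x) ** depth


for depth in (1, 5, 10, 20):
    sig = chained_gradient(sigmoid_grad, 0.0, depth)  # sigmoid's best case
    rel = chained_gradient(relu_grad, 1.0, depth)     # an active ReLU unit
    print(f"depth={depth:2d}  sigmoid={sig:.2e}  relu={rel:.1f}")
```

Even in sigmoid's best case the product shrinks by a factor of four per layer, while an active ReLU path keeps a factor of exactly 1.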

Q3: When was ReLU invented?

The rectifier function appeared in Householder's work in 1941 and Fukushima's research in 1969. Nair and Hinton formally advocated for ReLU in deep learning in 2010. AlexNet's 2012 success popularized widespread adoption.

Q4: What is the dying ReLU problem?

Dying ReLU occurs when neurons get stuck outputting only zeros for all inputs. With zero output comes zero gradient, so the neuron never updates and remains permanently inactive. Proper initialization, batch normalization, and reasonable learning rates prevent this.
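The mechanism can be seen with a single scalar neuron. In this minimal sketch, the weights, biases, and input batch are hypothetical values chosen so that one neuron's pre-activation is negative for every input. It therefore outputs zero and receives zero gradient for both its weight and bias.

```python
def relu(z):
    return max(0.0, z)


def neuron_forward_backward(w, b, x, upstream_grad=1.0):
    """Forward and backward pass for one ReLU neuron with a scalar input.

    Returns (output, dL/dw, dL/db), given dL/d(output) = upstream_grad.
    """
    z = w * x + b
    out = relu(z)
    local_grad = 1.0 if z > 0 else 0.0  # ReLU derivative (0 chosen at z == 0)
    grad_w = upstream_grad * local_grad * x
    grad_b = upstream_grad * local_grad
    return out, grad_w, grad_b


batch = [-2.0, -0.5, 0.0, 0.7, 1.5, 3.0]

# Hypothetical "dead" neuron: the bias is so negative that z < 0 for every input
w_dead, b_dead = 0.5, -10.0
dead_results = [neuron_forward_backward(w_dead, b_dead, x) for x in batch]

# A healthy neuron for comparison
w_ok, b_ok = 0.5, 0.1
ok_results = [neuron_forward_backward(w_ok, b_ok, x) for x in batch]

dead_is_stuck = all(out == 0.0 and gw == 0.0 and gb == 0.0
                    for out, gw, gb in dead_results)
ok_has_gradient = any(gb != 0.0 for _, _, gb in ok_results)

print("dead neuron receives no gradient:", dead_is_stuck)
print("healthy neuron receives gradient:", ok_has_gradient)
```

Because every gradient is zero, no gradient-descent step can move `w_dead` or `b_dead`, so the neuron stays inactive unless the inputs it sees change.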

Q5: Should I use ReLU or Leaky ReLU?

Start with standard ReLU. If you observe many dead neurons (check activation statistics), switch to Leaky ReLU. For most well-initialized networks with batch normalization, standard ReLU works excellently.

Q6: What's the difference between ReLU and GELU?

ReLU is a simple threshold (positive passes, negative becomes zero). GELU applies a probabilistic gate based on the Gaussian cumulative distribution function, providing smooth activation. GELU works better in transformer architectures; ReLU dominates convolutional networks.
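The difference is easiest to see side by side. The sketch below uses the exact GELU definition, x multiplied by the standard normal CDF, written with `math.erf`. Frameworks often offer a faster tanh-based approximation instead, which is not shown here.

```python
import math


def relu(x):
    """Hard threshold: pass positives, zero out negatives."""
    return max(0.0, x)


def gelu(x):
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


for x in (-3.0, -1.0, -0.1, 0.0, 0.1, 1.0, 3.0):
    print(f"x={x:5.1f}  relu={relu(x):.4f}  gelu={gelu(x):.4f}")
```

Unlike ReLU, GELU lets small negative values through (for example, `gelu(-1.0)` is about -0.159) and approaches ReLU as the input grows in magnitude.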

Q7: Can ReLU be used in the output layer?

Generally no. Output layers typically use sigmoid (binary classification), softmax (multi-class classification), linear (regression), or tanh. ReLU in the output layer would prevent negative predictions and could cause gradient issues.

Q8: How do I implement ReLU?

In one line: output = max(0, input). Most deep learning frameworks provide built-in ReLU: torch.nn.ReLU() in PyTorch, tf.nn.relu() in TensorFlow, or as an activation parameter: activation='relu'.

Q9: What learning rate should I use with ReLU?

For Adam optimizer: start with 0.001-0.01. For SGD: 0.01-0.1. High learning rates increase dying ReLU risk. Use learning rate schedules that decrease over time for best results.
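A learning rate schedule that decreases over time can be as simple as step decay. The sketch below is framework-free. The drop factor of 0.5 and the 10-epoch interval are arbitrary assumptions for illustration, not recommended values, and deep learning frameworks ship their own built-in schedulers.

```python
def step_decay(initial_lr, epoch, drop_factor=0.5, epochs_per_drop=10):
    """Learning rate at a given epoch under step decay.

    The rate is multiplied by `drop_factor` once every `epochs_per_drop` epochs.
    """
    return initial_lr * (drop_factor ** (epoch // epochs_per_drop))


# Start from the lower end of the suggested Adam range
for epoch in (0, 9, 10, 25, 40):
    print(f"epoch {epoch:2d}: lr = {step_decay(0.001, epoch):.6f}")
```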

Q10: Is ReLU differentiable?

ReLU is differentiable everywhere except x=0. At zero, the derivative is undefined (left derivative is 0, right derivative is 1). In practice, implementations choose 0 or 1 for the derivative at zero; this rarely causes issues.
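The mismatch at zero can be checked numerically with one-sided finite differences. This is a small sketch; the helper name and step size `h` are choices made for this example.

```python
def relu(x):
    return max(0.0, x)


def one_sided_slopes(f, x, h=1e-6):
    """Approximate the left and right derivatives of f at x."""
    left = (f(x) - f(x - h)) / h
    right = (f(x + h) - f(x)) / h
    return left, right


left_at_zero, right_at_zero = one_sided_slopes(relu, 0.0)
left_at_two, right_at_two = one_sided_slopes(relu, 2.0)

print("slopes at x=0:", left_at_zero, right_at_zero)
print("slopes at x=2:", left_at_two, right_at_two)
```

At x=0 the two slopes disagree (0 from the left, 1 from the right), while at any other point they match. That is why an implementation has to pick a convention for the single point x=0.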

Q11: Why doesn't ReLU cause overfitting?

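ReLU does not prevent overfitting on its own, but its sparse activations (many zeros) can act as a mild regularizer, loosely comparable to dropout. Because only a subset of neurons is active for any given input, the network is pushed toward more generalized representations. Standard techniques such as dropout and weight decay are still used alongside ReLU.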
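The sparsity itself is easy to measure. The sketch below assumes zero-mean Gaussian pre-activations, which is an idealization: in a trained network the distribution depends on the weights, biases, and any normalization layers.

```python
import random


def relu(x):
    return max(0.0, x)


rng = random.Random(42)  # fixed seed so the run is repeatable
pre_activations = [rng.gauss(0.0, 1.0) for _ in range(50_000)]
activations = [relu(z) for z in pre_activations]

zero_fraction = sum(1 for a in activations if a == 0.0) / len(activations)
print(f"fraction of zero activations: {zero_fraction:.3f}")
```

With symmetric, zero-mean inputs, roughly half of the outputs are exactly zero.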

Q12: How does ReLU compare to modern activations like Mish?

Mish is smoother and sometimes achieves slightly higher accuracy (94.32% vs 93.89% on CIFAR-10 in some tests). However, Mish requires more computation. For most applications, ReLU's speed advantage outweighs small accuracy differences.

Q13: Can I mix different activations in one network?

Yes! Modern architectures often use different activations in different layers. For example, ReLU in convolutional layers, GELU in attention layers, and Leaky ReLU where dying neurons are problematic.

Q14: What's the best initialization for ReLU?

Use He initialization (Kaiming initialization): draw each weight from a normal distribution with mean 0 and standard deviation sqrt(2/n_in), where n_in is the number of input units. This initialization was specifically designed for ReLU networks and helps prevent activations from exploding or vanishing as depth increases.
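For readers who want to see the formula without a framework, here is a minimal plain-Python sketch of He-normal sampling. The layer sizes, seed, and the `he_normal` helper are assumptions made for this example. In practice you would use the framework initializers.

```python
import math
import random


def he_normal(n_in, n_out, rng):
    """Sample an n_out x n_in weight matrix with std = sqrt(2 / n_in)."""
    std = math.sqrt(2.0 / n_in)
    return [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]


rng = random.Random(0)  # fixed seed so the run is repeatable
n_in, n_out = 256, 128  # hypothetical layer sizes
weights = he_normal(n_in, n_out, rng)

# Compare the empirical standard deviation with the target value
flat = [w for row in weights for w in row]
mean = sum(flat) / len(flat)
empirical_std = math.sqrt(sum((w - mean) ** 2 for w in flat) / len(flat))
expected_std = math.sqrt(2.0 / n_in)

print(f"expected std:  {expected_std:.4f}")
print(f"empirical std: {empirical_std:.4f}")
```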

Q15: Does ReLU work for recurrent neural networks (RNNs)?

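ReLU can be used in RNNs, but saturating activations like tanh are more common because bounded activations help keep the recurrent state stable over many time steps; an unbounded ReLU applied repeatedly can let activations grow without limit. Standard LSTMs and GRUs likewise use sigmoid gates and tanh, not ReLU, in their default formulations.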

Q16: How much faster is ReLU than sigmoid?

Computationally, ReLU is approximately 60-70% faster than sigmoid. In training speed, AlexNet demonstrated 6x faster convergence with ReLU vs tanh. Actual speedup depends on architecture, batch size, and hardware.

Q17: What percentage of neurons typically "die" with ReLU?

In well-initialized networks with proper training, fewer than 5-10% of neurons die. In poorly configured networks or with very high learning rates, 30-60% can become inactive. Monitoring activation statistics helps detect this issue.

Q18: Is ReLU suitable for time series forecasting?

ReLU can work for time series, but be careful if your data contains meaningful negative values (stock returns, temperature changes, etc.). Consider Leaky ReLU or GELU as alternatives that preserve negative information.

Q19: Why do transformers use GELU instead of ReLU?

GELU's smooth, probabilistic nature works better with attention mechanisms in transformers. The smoothness helps gradient flow in very deep transformer networks, and the stochastic regularization properties benefit language modeling tasks.

Q20: Will ReLU be replaced in the future?

ReLU likely remains the default for many applications due to its simplicity, speed, and effectiveness. Task-specific variants (GELU for transformers, Swish for some vision tasks) will coexist rather than replace ReLU entirely. The future is specialization, not universal replacement.

16. Key Takeaways

ReLU is deceptively simple — just max(0, x) — but this simplicity is its strength, enabling fast computation and easy implementation.

Solved the vanishing gradient problem that prevented deep learning for decades by maintaining gradient flow with derivative of 1 for positive values.

AlexNet's 2012 breakthrough demonstrated ReLU's power, achieving 15.3% top-5 error vs 26.2% for second place, with ReLU networks reported to train about 6x faster than tanh equivalents.

Creates beneficial sparsity — approximately 50% of activations are zero, improving generalization and computational efficiency.

Dying ReLU is manageable — proper He initialization, batch normalization, and moderate learning rates prevent most dead neuron problems.

Variants address specific needs — Leaky ReLU prevents dying neurons, PReLU learns optimal slopes, ELU provides smoothness, GELU excels in transformers.

Domain-specific preferences — ReLU dominates computer vision CNNs, GELU leads in NLP transformers, variants optimize for specialized tasks.

Powers massive industry growth — the $96.8 billion deep learning market (2024) growing to $526.7 billion by 2030 relies heavily on ReLU-based architectures.

Real-world applications — from Tesla's autonomous driving to Stanford's medical imaging, ReLU enables practical AI systems processing billions of images daily.

Future remains strong — despite newer variants, ReLU's combination of simplicity, speed, and effectiveness ensures its continued role as a foundational activation function.

17. Next Steps

For Beginners:

Implement a simple neural network using ReLU in PyTorch or TensorFlow

Compare ReLU vs sigmoid on a small dataset (MNIST) to see the speed difference

Monitor activation statistics to understand sparsity and dead neurons

Read the AlexNet paper (Krizhevsky et al., 2012) to see ReLU's breakthrough application

Experiment with variants — try Leaky ReLU and observe differences

For Intermediate Practitioners:

Optimize ReLU networks using He initialization and batch normalization

Detect and fix dying ReLU in your models through activation monitoring

Benchmark activation functions on your specific task (ReLU vs GELU vs Swish)

Study case studies of ReLU applications in your domain (medical imaging, NLP, etc.)

Experiment with mixed activations using different functions in different layers

For Advanced Researchers:

Explore novel activation functions that address ReLU's limitations for your specific application

Analyze mathematical properties of activation functions relevant to your architecture

Optimize for hardware efficiency balancing accuracy and computational cost

Contribute to benchmarks comparing activations across diverse tasks and datasets

Publish findings on domain-specific activation function performance

Resources:

Papers: AlexNet (Krizhevsky et al., 2012), PReLU (He et al., 2015), GELU (Hendrycks & Gimpel, 2016)

Courses: Stanford CS231n, DeepLearning.AI specialization, Fast.ai

Libraries: PyTorch, TensorFlow, JAX

Datasets: MNIST, CIFAR-10, ImageNet for experimenting

18. Glossary

Activation Function: A mathematical function that determines whether a neuron should "fire" (activate) based on its input. Introduces non-linearity into neural networks.

Backpropagation: The algorithm used to train neural networks by computing gradients and updating weights to minimize loss.

Batch Normalization: A technique that normalizes layer inputs to stabilize training and improve convergence speed.

Convolutional Neural Network (CNN): A deep learning architecture particularly effective for image processing, using convolutional layers to extract spatial features.

Derivative: The rate of change of a function. In neural networks, derivatives (gradients) indicate how to adjust weights during training.

Dying ReLU: A problem where ReLU neurons become permanently inactive (always output zero), stopping learning for those neurons.

Gradient: The derivative or slope of the loss function with respect to parameters, indicating the direction to adjust weights.

He Initialization (Kaiming Initialization): A weight initialization method designed specifically for ReLU networks, preventing activation explosion/vanishing.

Hidden Layer: Layers between input and output in a neural network where feature extraction and transformation occur.

Leaky ReLU: ReLU variant that allows a small negative slope (typically 0.01) instead of zero for negative inputs.

Non-linearity: Property of mathematical functions where the output is not proportional to the input. Essential for neural networks to learn complex patterns.

Sparsity: Property where many values are zero. ReLU creates sparse activations, improving efficiency and generalization.

Transformer: A neural network architecture based on attention mechanisms, commonly used in natural language processing.

Vanishing Gradient: Problem where gradients become extremely small in deep networks, preventing effective learning in early layers.

19. Sources and References

Wikipedia: "Rectified linear unit" (October 2025). https://en.wikipedia.org/wiki/Rectified_linear_unit

Wikipedia: "AlexNet" (November 2025). https://en.wikipedia.org/wiki/AlexNet

Grand View Research: "Deep Learning Market Size And Share | Industry Report 2030" (2025). https://www.grandviewresearch.com/industry-analysis/deep-learning-market

IABAC: "ReLU Activation Function: The Complete 2025 Guide" (November 28, 2025). https://iabac.org/blog/relu-activation-function

GeeksforGeeks: "ReLU Activation Function in Deep Learning" (July 23, 2025). https://www.geeksforgeeks.org/deep-learning/relu-activation-function-in-deep-learning/

Medium - Ignacio Quintero: "The ReLU Activation Function: How a Simple Breakthrough Changed the Deep Learning Field" (August 25, 2025). https://medium.com/@igquinteroch/the-relu-activation-function-how-a-simple-breakthrough-changed-the-deep-learning-field-c0fd86552586

Medium - Shivam S.: "Understanding AlexNet: The 2012 Breakthrough That Changed AI Forever" (April 1, 2025). https://medium.com/@shivsingh483/understanding-alexnet-the-2012-breakthrough-that-changed-ai-forever-7c365cf76969

IEEE Spectrum: "How AlexNet Transformed AI and Computer Vision Forever" (March 25, 2025). https://spectrum.ieee.org/alexnet-source-code

Viso AI: "AlexNet: Revolutionizing Deep Learning in Image Classification" (April 2, 2025). https://viso.ai/deep-learning/alexnet/

University of Medan Area: "ReLU (Rectified Linear Unit): Modern Deep Learning" (December 30, 2024). https://p3mpi.uma.ac.id/2024/12/30/relu-rectified-linear-unit-modern-deep-learning/

Vedang Analytics: "Ultimate Guide to Activation Functions- Deep Learning - 2025" (April 2, 2025). https://vedanganalytics.com/ultimate-guide-to-activation-functions-for-neural-networks/

Journal of Machine Learning Research: "Deep Network Approximation: Beyond ReLU to Diverse Activation Functions" (Zhang et al., 2024). https://www.jmlr.org/papers/v25/23-0912.html

arXiv: "Activation Functions in Deep Learning: A Comprehensive Survey and Benchmark" (2021). https://arxiv.org/pdf/2109.14545

arXiv: "GELU Activation Function in Deep Learning" (2025). https://arxiv.org/pdf/2305.12073

arXiv: "SMU: Smooth Activation Function for Deep Networks" (2021). https://arxiv.org/pdf/2111.04682

Baeldung: "ReLU vs. LeakyReLU vs. PReLU" (March 18, 2024). https://www.baeldung.com/cs/relu-vs-leakyrelu-vs-prelu

DigitalOcean: "ReLU vs ELU: Picking the Right Activation for Deep Nets" (October 9, 2025). https://www.digitalocean.com/community/tutorials/relu-vs-elu-activation-function

APXML: "ReLU Variants (Leaky ReLU, PReLU, ELU)". https://apxml.com/courses/introduction-to-deep-learning/chapter-2-activation-functions-architecture/relu-variants

Medium - Sophie Zhao: "ReLU vs GELU: Why ReLU Feels Mechanical, but GELU Sings the Rhythm of e" (September 24, 2025). https://medium.com/@sophiezhao_2990/relu-vs-gelu-why-relu-feels-mechanical-but-gelu-sings-the-rhythm-of-e-39eebb6b7a22

WACV 2025: "Optimizing Neural Network Effectiveness via Non-Monotonicity Refinement" (Biswas et al., 2025). https://openaccess.thecvf.com/content/WACV2025/papers/Biswas_Optimizing_Neural_Network_Effectiveness_via_Non-Monotonicity_Refinement_WACV_2025_paper.pdf

IMARC Group: "Deep Learning Market Size, Share, Trends, Report 2033" (2024). https://www.imarcgroup.com/deep-learning-market

Precedence Research: "Deep Learning Market Size To Hit USD 1420.29 Bn By 2034" (January 31, 2025). https://www.precedenceresearch.com/deep-learning-market

Mordor Intelligence: "Deep Learning Market Growth, Share | Forecast [2032]" (June 23, 2025). https://www.mordorintelligence.com/industry-reports/deep-learning

Fortune Business Insights: "Deep Learning Market Growth, Share | Forecast [2032]" (2025). https://www.fortunebusinessinsights.com/deep-learning-market-107801

Itransition: "Machine Learning Statistics for 2026: The Ultimate List" (2025). https://www.itransition.com/machine-learning/statistics

Founders Forum Group: "AI Statistics 2024–2025: Global Trends, Market Growth & Adoption Data" (July 14, 2025). https://ff.co/ai-statistics-trends-global-market/

ResearchGate: "Real-time End-to-End Federated Learning: An Automotive Case Study" (March 22, 2021). https://www.researchgate.net/publication/350311828_Real-time_End-to-End_Federated_Learning_An_Automotive_Case_Study

Harvard Business School: "BIG Data in Tesla Inc." (October 17, 2023). https://d3.harvard.edu/platform-digit/submission/big-data-in-tesla-inc/

Dive into Deep Learning: "8.1. Deep Convolutional Neural Networks (AlexNet)" (2024). https://d2l.ai/chapter_convolutional-modern/alexnet.html

The Turing Post: "How ImageNet, AlexNet and GPUs Changed AI Forever" (April 14, 2025). https://www.turingpost.com/p/cvhistory6
