What is a Transformer Model? The AI Architecture That Changed Everything

- Muiz As-Siddeeqi

- Oct 22, 2025

- 25 min read

Eight years ago, eight Google researchers published a paper with an audacious title that sounded almost poetic: "Attention Is All You Need." They had no idea they were about to trigger the biggest revolution in artificial intelligence since the invention of the computer itself. Today, every time you use ChatGPT, ask Google a question, or watch Netflix recommend your next obsession, you're experiencing the power of transformer models. Training these elegant mathematical structures has gone from costing about $930 in 2017 to an estimated $191 million for Google's Gemini Ultra in 2023, and they're reshaping healthcare, finance, education, and nearly every corner of our digital lives.

TL;DR

Transformers are neural networks that use attention mechanisms to process sequential data like text and images

Released in 2017 by Google researchers, transformers replaced older recurrent neural networks (RNNs)

Major models include GPT-4 (an estimated 1.8 trillion parameters), BERT, Claude, and Vision Transformers for images

Training costs exploded from $930 (2017 Transformer) to an estimated $78-191 million (GPT-4 and Gemini Ultra, 2023)

Adoption surged: 65% of organizations now regularly use generative AI, up from 33% in 2023

Applications span healthcare diagnostics, financial analysis, content creation, and scientific research

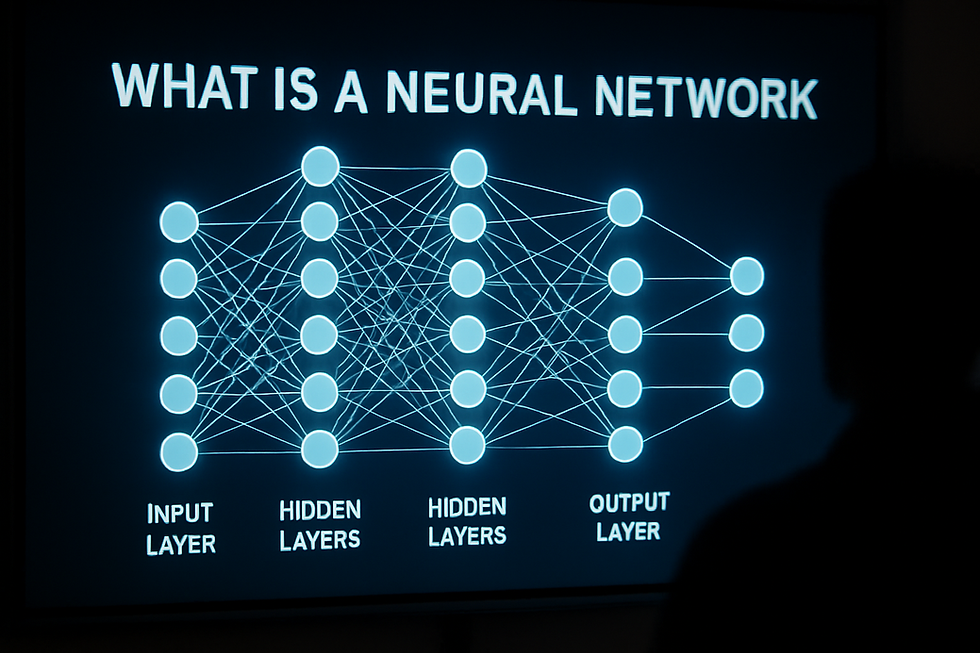

A transformer model is a type of neural network architecture that uses self-attention mechanisms to process sequential data in parallel. Unlike previous models that processed information step-by-step, transformers analyze all parts of an input simultaneously, making them faster and more effective at understanding relationships between words, images, or other data points.

The Birth of Transformers: A Revolution in 2017

The Paper That Changed AI Forever

On June 12, 2017, eight researchers from Google Brain and Google Research submitted a paper to arXiv that would fundamentally reshape artificial intelligence. Titled "Attention Is All You Need," the paper introduced the transformer architecture—a novel approach to processing sequential data that dispensed entirely with the recurrent and convolutional layers that had dominated the field (Vaswani et al., NeurIPS 2017).

The team included Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan Gomez, Łukasz Kaiser, and Illia Polosukhin, all listed as equal contributors with randomized author order. Their creation was remarkably efficient: the base transformer model trained for just 12 hours on 8 NVIDIA P100 GPUs, at an estimated cost of about $930 (Stanford AI Index 2024).

The Problem They Solved

Before transformers, natural language processing relied heavily on recurrent neural networks (RNNs) and long short-term memory (LSTM) networks. These architectures processed text sequentially—one word at a time, from left to right—which created three major problems:

Sequential processing bottleneck: RNNs couldn't parallelize computation because each step depended on the previous one. Training was slow and expensive.

Vanishing gradients: When sequences got long, gradients shrank as they were propagated back through many time steps, so the model struggled to maintain connections between distant words. Resolving a pronoun that referred to a noun mentioned 50 words earlier was often unreliable.

Limited context windows: The models had trouble capturing long-range dependencies in text, making them poor at tasks requiring understanding of entire paragraphs or documents.

The transformer solved all three problems with a single elegant mechanism: self-attention.

Initial Performance That Stunned Researchers

The original transformer achieved remarkable results on machine translation tasks. On the WMT 2014 English-to-German translation benchmark, it scored 28.4 BLEU (Bilingual Evaluation Understudy), surpassing previous best results by over 2 BLEU points. On English-to-French translation, it achieved 41.8 BLEU after just 3.5 days of training—a fraction of the time required by earlier models (Vaswani et al., 2017).

As of 2025, the "Attention Is All You Need" paper has been cited more than 173,000 times, placing it among the top ten most-cited papers of the 21st century (Wikipedia, 2025).

How Transformer Models Actually Work

The Self-Attention Mechanism: The Core Innovation

At the heart of every transformer lies the self-attention mechanism. This allows the model to weigh the importance of different words in a sequence when processing each word. Think of it like reading a sentence where certain words jump out at you because they're crucial for understanding the meaning.

When processing the sentence "The animal didn't cross the street because it was too tired," self-attention helps the model determine that "it" refers to "animal" rather than "street" by calculating attention scores between all word pairs simultaneously.

How attention works mathematically:

Each word is converted into three vectors: Query (Q), Key (K), and Value (V)

The model calculates attention scores by measuring similarity between Queries and Keys

These scores determine how much each word should "pay attention" to every other word

The final output is a weighted sum of Values based on these attention scores

This happens in parallel for all words at once—the key advantage over sequential RNNs.
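The four steps above can be sketched in a few lines of dependency-free Python. This is a minimal illustration rather than the implementation of any particular model: the function names `softmax` and `attention` and the toy vectors are assumptions made for this example, and the division by the square root of the key dimension follows the scaled dot-product formulation in Vaswani et al. (2017).

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity between this query and every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        # Scores become attention weights.
        weights = softmax(scores)
        # The output is the weighted sum of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy example: three tokens with hand-picked 2-dimensional Q, K, V vectors.
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
outputs, weights = attention(Q, K, V)
```

The loop over queries is only for readability. Production implementations compute the same quantities as a handful of matrix multiplications, which is what lets every token be processed at once on a GPU.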

Multi-Head Attention: Seeing Multiple Perspectives

Transformers don't use just one attention mechanism—they use multiple "heads" that learn to focus on different aspects of the relationships between words. One head might specialize in syntactic relationships (subject-verb agreement), while another captures semantic meaning, and yet another handles long-distance dependencies.

The original transformer used 8 attention heads (Vaswani et al., 2017). Modern large language models use far more; GPT-3, for example, uses 96 heads per layer, enabling richer modeling of relationships in text.
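A small sketch of the bookkeeping behind multiple heads may help. It assumes the base configuration reported in Vaswani et al. (2017), a model width of 512 split across 8 heads, and it shows only how one projected vector is sliced into per-head chunks and joined again. The helper names `split_heads` and `merge_heads` are hypothetical, and the learned projections and the attention computation inside each head are deliberately left out.

```python
D_MODEL = 512    # embedding width of the base model in Vaswani et al. (2017)
NUM_HEADS = 8
HEAD_DIM = D_MODEL // NUM_HEADS  # width each head works with

def split_heads(vector, num_heads):
    """Slice one projected vector into equal per-head chunks."""
    head_dim = len(vector) // num_heads
    return [vector[i * head_dim:(i + 1) * head_dim] for i in range(num_heads)]

def merge_heads(heads):
    """Concatenate per-head outputs back into a single vector."""
    return [x for head in heads for x in head]

token = [float(i) for i in range(D_MODEL)]  # stand-in for a projected query vector
heads = split_heads(token, NUM_HEADS)
restored = merge_heads(heads)
```

Because each head sees only its own slice, the heads can learn different relationships at roughly the same total cost as one full-width attention operation.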

Positional Encoding: Maintaining Word Order

Since transformers process all words simultaneously rather than sequentially, they need a way to understand word order. Without this, "Dog bites man" would be indistinguishable from "Man bites dog."

Transformers solve this through positional encoding—mathematical patterns added to word embeddings that encode each word's position in the sequence. This allows the model to understand both what words mean and where they appear (AWS, 2024).
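One concrete form of these patterns is the sinusoidal encoding used in Vaswani et al. (2017), sketched below under simplifying assumptions. The helper names and the four-dimensional embedding are made up for illustration, and many later models use learned or rotary position schemes instead.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal encoding for one position (formulation from Vaswani et al., 2017)."""
    encoding = []
    for i in range(0, d_model, 2):
        angle = position / (10000 ** (i / d_model))
        encoding.append(math.sin(angle))  # even index
        encoding.append(math.cos(angle))  # odd index
    return encoding[:d_model]

def add_position(embedding, position):
    """Add the positional signal to a word embedding, element by element."""
    pe = positional_encoding(position, len(embedding))
    return [e + p for e, p in zip(embedding, pe)]

# The same (made-up) embedding placed at positions 0 and 2 yields different
# vectors, which is what lets a model tell "Dog bites man" from "Man bites dog".
dog = [0.5, -0.1, 0.3, 0.8]
dog_first = add_position(dog, 0)
dog_last = add_position(dog, 2)
```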

Feed-Forward Networks: Adding Depth

After the attention mechanism processes the input, each transformer layer includes a feed-forward neural network that further transforms the data. These networks add non-linear processing power, enabling the model to learn complex patterns.
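As a rough sketch, the feed-forward step can be written as two linear maps with a ReLU in between, which is the form used in Vaswani et al. (2017). The weights below are hand-picked toy values, not learned ones, and the function names are assumptions for this example. Real models use much wider layers and often a different activation function.

```python
def relu(vector):
    """Zero out negative values; this is the non-linearity."""
    return [max(0.0, x) for x in vector]

def linear(vector, weights, bias):
    """Compute vector @ weights + bias, with one row of weights per input feature."""
    return [
        sum(x * row[j] for x, row in zip(vector, weights)) + bias[j]
        for j in range(len(bias))
    ]

def feed_forward(vector, w1, b1, w2, b2):
    """Expand, apply the non-linearity, then project back to the input width."""
    return linear(relu(linear(vector, w1, b1)), w2, b2)

# Toy weights: width 2 -> hidden width 3 -> width 2.
W1 = [[1.0, -1.0, 0.5],
      [0.0, 2.0, -0.5]]
B1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0],
      [0.0, 1.0],
      [1.0, 1.0]]
B2 = [0.1, -0.1]

token = [1.0, 2.0]
output = feed_forward(token, W1, B1, W2, B2)
```

The same weights are applied to every token position independently, so this step parallelizes as easily as attention does.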

Modern transformers stack many of these layers. BERT-Base uses 12 layers, BERT-Large uses 24, and GPT-4 reportedly uses 120 layers, each adding more sophisticated understanding (SemiAnalysis, 2023).

The Three Types of Transformer Architectures

The original 2017 transformer had both an encoder and a decoder. Since then, researchers have found that using just one part often works better for specific tasks.

Encoder-Only Transformers: Understanding and Classification

What they do: Process input to create deep understanding without generating new sequences.

Best for: Classification tasks, question answering, named entity recognition, sentiment analysis.

Key example: BERT (Bidirectional Encoder Representations from Transformers), released by Google in 2018. BERT reads text in both directions simultaneously: to fill the blank in "The cat ___ on the mat," it looks at the words both before and after the blank.

BERT revolutionized search. When Google integrated BERT into its search engine in 2019, it improved understanding of 1 in 10 searches—one of the biggest leaps in search quality in years (IBM, 2025).

Variants: RoBERTa, ALBERT, DistilBERT, BioBERT (for biomedical text), ClinicalBERT (for medical records).

Decoder-Only Transformers: Generation and Creation

What they do: Generate new sequences by predicting the next token based on previous context.

Best for: Text generation, code writing, chatbots, creative writing, summarization.

Key examples: The entire GPT family (GPT-1, GPT-2, GPT-3, GPT-3.5, GPT-4), LLaMA (Meta), Claude (Anthropic), PaLM (Google).

GPT-3, released in 2020, had 175 billion parameters and was trained on 300 billion tokens of text. It could write essays, answer questions, generate code, and even create poetry with minimal instruction (PYMNTS, 2025).

GPT-4, released in March 2023, took this further with an estimated 1.8 trillion parameters across 120 layers. According to analysis by Klu.ai, it was trained on 13 trillion tokens, equivalent to roughly 100 million books assuming about 100,000 words per book (Wikipedia, 2025).
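Predicting the next token "based on previous context" is commonly enforced with a causal mask that hides future positions from each token. The sketch below contrasts that with the all-visible pattern an encoder such as BERT uses. The function names are hypothetical, and this is an illustration of the idea rather than the masking code of any specific model.

```python
def causal_mask(seq_len):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]

def bidirectional_mask(seq_len):
    """Encoder-style mask: every position may attend to every other position."""
    return [[True] * seq_len for _ in range(seq_len)]

tokens = ["The", "cat", "sat"]
for token, row in zip(tokens, causal_mask(len(tokens))):
    visible = [t for t, allowed in zip(tokens, row) if allowed]
    print(f"{token!r} can see {visible}")
```

In practice the mask is applied to the attention scores before the softmax, so hidden positions receive zero weight.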

Encoder-Decoder Transformers: Translation and Transformation

What they do: Use both components—encoder understands input, decoder generates output.

Best for: Machine translation, summarization, text-to-text tasks.

Key examples: T5 (Text-to-Text Transfer Transformer), BART, mT5 (multilingual).

These models excel when the output structure differs significantly from the input structure, such as translating between languages or converting long articles into short summaries (MathWorks, 2024).

From $930 to $191 Million: The Economics of Training

The Exponential Growth in Costs

The cost of training cutting-edge transformer models has exploded at a staggering rate:

| Model | Year | Parameters | Training Cost | Organization |
| --- | --- | --- | --- | --- |
| Original Transformer | 2017 | ~65 million | $930 | Google |
| BERT-Base | 2018 | 110 million | ~$7,000 (est.) | Google |
| GPT-3 | 2020 | 175 billion | $4.6 million | OpenAI |
| PaLM (540B) | 2022 | 540 billion | $12.4 million | Google |
| GPT-4 | 2023 | ~1.8 trillion | $78-100 million | OpenAI |
| Gemini Ultra | 2023 | ~1.5 trillion | $191 million | Google |

Sam Altman, CEO of OpenAI, stated publicly that training GPT-4 cost more than $100 million (Wikipedia, 2025).

Why Costs Skyrocketed

Several factors drove this 200,000-fold cost increase:

Scale explosion: GPT-4 is estimated to have roughly 27,000 times more parameters than the original transformer (about 1.8 trillion versus 65 million). Each parameter requires computation during training.

Data volume: Modern models train on trillions of tokens. GPT-4 trained on 13 trillion tokens compared to the original transformer's much smaller dataset (The Decoder, 2023).

Compute requirements: Training GPT-4 consumed an estimated 2.1 × 10²⁵ floating-point operations (FLOPs)—21 billion petaFLOPs of computation (CUDO Compute, 2025).

Hardware expenses: Training runs on thousands of specialized GPUs. NVIDIA GPUs remain the industry standard, with the company holding about 80% of the AI chip market as of 2024 (Visual Capitalist, 2024).

Human feedback integration: Modern models like GPT-4 and Claude undergo extensive alignment training with human feedback, requiring armies of human contractors to evaluate outputs and improve model behavior.
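The headline ratios in this section can be checked with a few lines of arithmetic. The inputs are the estimates quoted in this article, not independently verified figures, and the variable names are chosen for this example.

```python
original_cost = 930            # USD, original Transformer (2017)
gemini_ultra_cost = 191e6      # USD, Gemini Ultra estimate
original_params = 65e6         # ~65 million parameters
gpt4_params = 1.8e12           # ~1.8 trillion parameters (estimate)
gpt4_flops = 2.1e25            # estimated training compute for GPT-4

cost_ratio = gemini_ultra_cost / original_cost
param_ratio = gpt4_params / original_params
petaflops = gpt4_flops / 1e15  # 1 petaFLOP = 10**15 floating-point operations

print(f"Cost increase: about {cost_ratio:,.0f}x")
print(f"Parameter increase: about {param_ratio:,.0f}x")
print(f"Training compute: about {petaflops / 1e9:.0f} billion petaFLOPs")
```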

The Billion-Dollar Horizon

Anthropic CEO Dario Amodei has predicted that training the most sophisticated next-generation models could cost $1 billion or more (PYMNTS, 2025). This creates a significant barrier to entry, concentrating AI development power among well-funded organizations.

Yet remarkably, inference costs (running already-trained models) have decreased dramatically, making AI accessible to millions of users through services like ChatGPT, Claude, and Google's Gemini.

Major Transformer Models You Need to Know

GPT Family: The Text Generation Powerhouses

GPT-1 (2018): 117 million parameters. Proved that pre-training on large text corpora followed by task-specific fine-tuning worked remarkably well.

GPT-2 (2019): 1.5 billion parameters. OpenAI initially withheld the full model due to concerns about misuse and released it in stages over the course of 2019, a decision that sparked debate about AI safety and openness.

GPT-3 (2020): 175 billion parameters. The breakthrough that brought transformers into mainstream awareness. Could perform tasks with just a few examples (few-shot learning) rather than requiring extensive training.

GPT-3.5 (2022): The engine behind the original ChatGPT that launched in November 2022. Within two months, ChatGPT reached 100 million monthly active users, making it the fastest-growing consumer application in history at that time (Founders Forum, 2025).

GPT-4 (2023): OpenAI's most capable model at the time of its release, with an estimated 1.8 trillion parameters reportedly organized in a Mixture of Experts (MoE) architecture using 16 expert networks (The Decoder, 2023).

BERT and Its Descendants

Google's BERT (Bidirectional Encoder Representations from Transformers) came in two sizes:

BERT-Base: 110 million parameters, 12 layers

BERT-Large: 340 million parameters, 24 layers

BERT's bidirectional training—reading text from both directions simultaneously—enabled unprecedented understanding of context. When Google integrated BERT into search in 2019, search quality improved dramatically for complex queries (IBM, 2025).

Domain-specific BERT variants:

BioBERT: Trained on biomedical literature (PubMed abstracts and articles)

ClinicalBERT: Specialized for clinical notes and electronic health records

FinBERT: Optimized for financial text analysis

SciBERT: Trained on scientific papers across disciplines

Claude: Constitutional AI

Anthropic's Claude family (Claude 1, 2, and 3 with variants Haiku, Sonnet, and Opus) represents a different approach to transformer development. Founded by former OpenAI researchers, Anthropic developed "Constitutional AI"—a method to train AI models to align with human ethical values through a documented constitution of principles (PYMNTS, 2025).

Claude models are decoder-only transformers trained with reinforcement learning from human feedback (RLHF) but with an emphasis on safety, helpfulness, and harmlessness.

LLaMA: Open-Source Innovation

Meta's LLaMA (Large Language Model Meta AI) family made waves by releasing relatively smaller yet highly capable models:

LLaMA 1 (2023): 7B, 13B, 33B, and 65B parameter versions

LLaMA 2 (2023): Open-sourced for commercial use

LLaMA 3 (2024): Further improvements in capability

LLaMA's open release spurred thousands of derivative projects and fine-tuned models, democratizing access to powerful transformer technology (Heidloff, 2024).

Real-World Applications and Case Studies

Healthcare: Transforming Medical Practice

Transformers are revolutionizing healthcare across multiple fronts.

Case Study 1: Medical Imaging Analysis

Researchers at the University of Florida developed transformer-based systems for analyzing medical images, achieving a 90% detection rate for malignancies in medical imaging—a substantial improvement over previous convolutional neural network approaches (MDPI, 2025).

Vision Transformers (ViT) applied to biomedical images demonstrated breakthrough performance on the MedMNIST v2 dataset:

BloodMNIST classification: 97.90% accuracy

BreastMNIST classification: 90.38% accuracy

PathMNIST classification: 94.62% accuracy

RetinaMNIST classification: 57% accuracy

Case Study 2: Clinical Documentation and Coding

The University of Florida team also applied transformers to clinical notes, achieving a 30% boost in named entity recognition accuracy for extracting medical concepts, diagnoses, and treatments from unstructured clinical text (MDPI, 2025).

This has immediate practical impact: automating clinical documentation saves physicians hours per day, reduces billing errors, and improves patient care by ensuring accurate record-keeping.

Case Study 3: Healthcare Cost Prediction

In September 2024, researchers introduced the Large Medical Model (LMM)—a generative pre-trained transformer trained on medical event sequences from over 140 million longitudinal patient claims records. The model demonstrated state-of-the-art performance in predicting healthcare costs and identifying risk factors, with potential savings from the estimated $1 trillion in wasteful U.S. healthcare spending (arXiv, 2024).

With U.S. healthcare spending approaching $5 trillion annually and 25% estimated to be wasteful, such predictive models could save hundreds of billions of dollars while improving patient outcomes.

Finance: Risk Assessment and Market Analysis

Case Study 4: Morgan Stanley's Knowledge Assistant

Morgan Stanley deployed GPT-4 to power a knowledge assistant for its financial advisors. The system can instantly retrieve and synthesize information from thousands of internal documents, research reports, and market analyses—work that previously required hours of manual research (Founders Forum, 2025).

Financial advisors using the system report productivity improvements of 60-70%, allowing them to spend more time on client relationships and strategic planning rather than information gathering.

Customer Service: Scaling Human Touch

Case Study 5: Klarna's AI Assistant

In 2024, Swedish fintech company Klarna deployed an AI assistant powered by transformer models that took over about two-thirds (66%) of its customer service chats. The system handles routine inquiries, freeing human agents to focus on complex cases requiring empathy and nuanced judgment (Founders Forum, 2025).

The AI assistant now handles the work equivalent to 700 full-time customer service agents, responding to customers in 35 languages, 24/7. Customer satisfaction scores remained high, and resolution times dropped from 11 minutes to under 2 minutes for AI-handled queries.

Supply Chain and Operations

Case Study 6: IKEA's Predictive Returns

IKEA deployed generative AI based on transformer models to analyze customer support logs and predict product return issues before they become widespread problems. The system identifies patterns in customer complaints and product defects, enabling proactive quality improvements and reducing return rates (Founders Forum, 2025).

Case Study 7: Unilever's Document Automation

Unilever automated internal documents, policies, and supply chain emails using large language models. This reduced document processing time by 50% and improved accuracy in supply chain coordination across its global operations (Founders Forum, 2025).

Education and Writing Assistance

Case Study 8: English Writing Instruction

Researchers developed a transformer-based multidimensional feedback system for English writing instruction that provides real-time suggestions on grammar, vocabulary, sentence structure, and logical coherence. The system, built on a fine-tuned BERT model, delivers feedback with a delay of just 1.8 seconds and demonstrably improves writing quality for non-native learners (Nature Scientific Reports, 2025).

The system was tested in 2024 with students across multiple educational institutions and showed measurable improvement in writing proficiency over traditional instruction methods.

Vision Transformers: Beyond Text

The Breakthrough in Computer Vision

In 2020, Google Research released the Vision Transformer (ViT), adapting the transformer architecture for image recognition. The paper "An Image is Worth 16×16 Words: Transformers for Image Recognition at Scale" by Alexey Dosovitskiy, Neil Houlsby, and colleagues demonstrated that transformers could match or exceed convolutional neural networks (CNNs) on image classification tasks (Google Research, 2021).

How ViT Works

Instead of processing images pixel by pixel, ViT divides images into fixed-size patches (typically 16×16 pixels), treats each patch as a token (like a word in text), and processes them through a transformer encoder.

For a 224×224 pixel image with 16×16 patches:

Number of patches: (224/16) × (224/16) = 14 × 14 = 196 patches

Each patch becomes an embedded vector

Positional encodings preserve spatial relationships

Transformer attention learns which image regions are important for classification
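The patch arithmetic above can be reproduced with a short sketch. It assumes a square image whose side is an exact multiple of the patch size and uses a placeholder grayscale image. The `patchify` helper is hypothetical, and a real ViT would follow it with a learned linear projection of each flattened patch.

```python
def patchify(image, patch_size):
    """Split a square image (a list of pixel rows) into flattened patches."""
    size = len(image)
    patches = []
    for top in range(0, size, patch_size):
        for left in range(0, size, patch_size):
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches

IMAGE_SIZE, PATCH_SIZE = 224, 16
# Placeholder grayscale image; a real RGB input has three values per pixel.
image = [[0] * IMAGE_SIZE for _ in range(IMAGE_SIZE)]
patches = patchify(image, PATCH_SIZE)
print(len(patches), len(patches[0]))
```

Each of the resulting patches plays the role that a word token plays in a text transformer.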

When pre-trained on large datasets, ViT matches or exceeds the accuracy of state-of-the-art CNNs while requiring roughly 4× less compute to train (Viso.ai, 2024).

Applications of Vision Transformers

Medical imaging: ViT models achieve breakthrough accuracy in detecting cancers, classifying skin conditions, and analyzing X-rays and MRIs.

Autonomous vehicles: Self-driving systems use ViT for object detection, lane recognition, and scene understanding.

Weather prediction: In 2024, researchers proposed a 113-billion-parameter ViT model for weather and climate prediction, trained on the Frontier supercomputer with throughput of 1.6 exaFLOPs (Wikipedia, 2025).

Agriculture: ViT helps identify plant diseases, estimate crop yields, and optimize farming practices through drone imagery analysis.

Manufacturing quality control: Automated visual inspection systems use ViT to detect defects in products with superhuman accuracy.

Notable ViT Variants

Swin Transformer (2021): Uses shifted window mechanism to efficiently process high-resolution images. Achieved state-of-the-art results on object detection datasets like COCO (arXiv, 2023).

CaiT (Class-Attention in Image Transformers): Makes deeper vision transformers trainable by adding per-layer scaling (LayerScale) and by separating the layers that build the class representation from the self-attention layers that process patches.

Multiscale Vision Transformers (MS-ViT): Process images at multiple resolutions simultaneously, capturing both fine-grained details and global context.

Current Limitations and Challenges

Despite their revolutionary impact, transformer models face significant challenges.

Computational Requirements and Environmental Cost

Training large transformer models requires massive computational resources. GPT-4's training consumed enough electricity to power thousands of homes for a year. The carbon footprint of training a single large language model can equal that of five cars over their entire lifetimes, including manufacturing (Stanford AI Index, 2024).

Organizations invested an average of $110 million in generative AI initiatives in 2024, putting such technology out of reach for most researchers and small companies (AmplifAI, 2025).

Hallucinations and Accuracy Issues

Transformers can "hallucinate"—confidently generating false information that sounds plausible. This happens because models learn statistical patterns in training data without true understanding of truth or logic.

A study found that even advanced models like GPT-4 occasionally produce factually incorrect information, cite non-existent research papers, or make up statistics. This poses risks in high-stakes domains like medicine, law, and journalism.

Context Window Limitations

Most transformer models have limited context windows, meaning the amount of text they can process at once. GPT-4 launched with support for up to 32,768 tokens (roughly 25,000 words), and although some later models accept 100,000 tokens or more, very long documents still require workarounds like chunking and summarization.

This limitation stems from the quadratic computational cost of self-attention: doubling the context length quadruples the computation time.
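The quadratic relationship is easy to see by counting the pairwise scores that full self-attention computes. The sketch below counts scores only and ignores constant factors such as the number of heads and layers. The function name is an assumption for this example.

```python
def attention_score_count(seq_len):
    """Full self-attention compares every token with every token: seq_len squared."""
    return seq_len * seq_len

for seq_len in (1_024, 2_048, 4_096):
    print(f"{seq_len:>5} tokens -> {attention_score_count(seq_len):>12,} pairwise scores")

# Doubling the sequence length quadruples the number of scores.
ratio = attention_score_count(2 * 8_192) / attention_score_count(8_192)
```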

Data Quality and Bias

Transformers learn from training data, which means they inherit its biases and limitations. Models trained on internet text absorb societal biases around gender, race, and culture. Medical models trained primarily on data from Western countries may perform poorly on patients from other regions.

A 2024 qualitative study with 28 participants identified multiple risks of using transformer models in healthcare, including data privacy concerns, bias amplification, lack of interpretability, and the need for rigorous validation before clinical deployment (Journal of Medical Systems, 2024).

Data Efficiency Challenges

Vision Transformers, while powerful, are less data-efficient than CNNs. Training ViT from scratch on small datasets often fails because transformers have many parameters and need massive amounts of data to learn effectively. Most practical ViT applications require pre-training on huge datasets (like ImageNet-21k with about 14 million images) followed by fine-tuning on specific tasks (V7 Labs, 2024).

Interpretability Problems

Understanding why a transformer makes a particular decision remains difficult. The attention mechanism provides some insight—researchers can visualize which words or image regions the model focuses on—but the interaction of billions of parameters across dozens of layers creates a "black box" problem.

This lack of interpretability raises concerns in regulated industries. Healthcare providers need to explain why an AI system recommended a particular diagnosis. Financial institutions must justify credit decisions. Current transformers struggle to provide clear, auditable reasoning.

What's Next: The Future of Transformers

Emerging Trends and Innovations

Sparse Transformers: Researchers are developing models that use sparse attention patterns instead of computing attention between all token pairs. Depending on the pattern, this reduces computational cost from quadratic to sub-quadratic or even linear in sequence length, enabling much longer context windows.

Mixture of Experts (MoE): As GPT-4 reportedly does, future models will increasingly use MoE architectures that activate only a relevant subset of parameters for each input, dramatically improving efficiency.

Multimodal Integration: The boundary between text, image, audio, and video transformers is dissolving. Models like GPT-4o ("omni") can process and generate content across all these modalities simultaneously (Wikipedia, 2025).

Efficient Architectures: New normalization techniques (RMSNorm replacing LayerNorm), pre-normalization strategies, and grouped-query attention are making transformers faster and more stable during training (Medium, 2025).
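To make the sparse-attention idea concrete, the sketch below compares full attention with one simple sparse pattern, a sliding window in which each token attends only to itself and a fixed number of preceding tokens. The window size of 256 is a hypothetical value chosen for illustration, and real sparse models combine several patterns.

```python
def full_attention_pairs(seq_len):
    """Every token attends to every token."""
    return seq_len * seq_len

def windowed_attention_pairs(seq_len, window):
    """Each token attends only to itself and up to window - 1 preceding tokens."""
    return sum(min(i + 1, window) for i in range(seq_len))

WINDOW = 256  # hypothetical window size
for seq_len in (1_024, 2_048, 4_096):
    full = full_attention_pairs(seq_len)
    local = windowed_attention_pairs(seq_len, WINDOW)
    print(f"{seq_len:>5} tokens: full={full:>12,}  windowed={local:>10,}")
```

With a fixed window, doubling the sequence length roughly doubles the work instead of quadrupling it, which is why such patterns scale to longer contexts.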

Market Growth and Adoption

The global generative AI market, dominated by transformer-based models, showed explosive growth:

Market size increased by $22 billion from 2023 to 2024

65% of organizations regularly use generative AI in 2024, up from 33% in 2023

Private generative AI funding increased 8× from 2022 to reach $25.2 billion in 2023

Over 4 billion prompts are issued daily across major LLM platforms (OpenAI, Claude, Gemini, Mistral)

Impact on the Job Market

While 75% of workers worry AI will make some jobs obsolete, the picture is more nuanced. The World Economic Forum forecast in 2020 that AI and automation would create 97 million new jobs while displacing 85 million by 2025, a net gain of 12 million positions (Synthesia, 2025).

New roles emerging around transformers include:

Prompt engineers who optimize inputs to AI systems

AI safety researchers who ensure models behave ethically

Model fine-tuning specialists who adapt pre-trained transformers for specific domains

AI trainers who provide human feedback for model improvement

92% of U.S.-based developers in large companies now use AI coding tools, with GitHub reporting that developers write code 55% faster using AI assistants like Copilot (Synthesia, 2025).

Scientific Applications

Transformers are accelerating scientific discovery:

Protein structure prediction: AlphaFold 2, which builds on attention-based components related to transformers, predicts 3D structures from amino acid sequences with near-experimental accuracy for many proteins, a result widely described as a solution to the long-standing protein structure prediction problem.

Drug discovery: Pharmaceutical companies use transformers to analyze molecular structures, predict drug interactions, and identify promising compounds from billions of possibilities.

Materials science: Transformers help design new materials by learning patterns from vast databases of chemical properties.

Climate modeling: Large-scale ViT models process satellite imagery and sensor data to improve weather forecasting and climate change projections.

Pros and Cons

Advantages of Transformer Models

Parallelization: Unlike RNNs that process sequentially, transformers handle all tokens simultaneously, enabling massive computational speedups on modern GPUs.

Long-range dependencies: Self-attention captures relationships between distant elements in sequences, crucial for understanding context in long documents.

Transfer learning: Pre-trained transformers can be fine-tuned for specific tasks with relatively little data, democratizing access to powerful AI.

Scalability: Transformers benefit from scale—larger models generally perform better, following predictable scaling laws.

Versatility: The same architecture works for text, images, audio, video, protein sequences, and other sequential data.

State-of-the-art performance: Transformers hold records across numerous benchmarks in NLP, computer vision, and other domains.

Disadvantages and Limitations

Computational expense: Training costs have reached $100-200 million for cutting-edge models, limiting development to well-funded organizations.

Environmental impact: The energy consumption and carbon footprint of training and running large transformers raises sustainability concerns.

Data requirements: Transformers need vast amounts of training data to reach their full potential, especially in computer vision applications.

Quadratic complexity: Self-attention has quadratic computational cost relative to sequence length, limiting context window sizes.

Hallucinations: Models can generate convincing but false information without reliable mechanisms to detect or prevent this.

Lack of interpretability: Understanding why transformers make specific decisions remains challenging, problematic for high-stakes applications.

Bias amplification: Models inherit and can amplify biases present in training data.

Context limitations: Even advanced models struggle with tasks requiring context beyond their fixed window size.

Myths vs Facts

Myth 1: "Transformers Actually Understand Language"

Fact: Transformers are sophisticated pattern-matching systems that learn statistical relationships in data. They don't possess understanding, consciousness, or reasoning in any human-like sense. They excel at predicting what words or tokens should come next based on patterns they've learned, but this is fundamentally different from comprehension.

Myth 2: "Bigger Models Are Always Better"

Fact: While larger models generally perform better, there are diminishing returns and practical limits. A 2024 study showed that carefully trained smaller models can sometimes outperform larger ones on specific tasks. Model efficiency, training data quality, and architecture design matter as much as raw parameter count (arXiv, 2024).

Myth 3: "Transformers Will Replace All Human Jobs"

Fact: Transformers augment human capabilities rather than replace humans entirely. They excel at pattern recognition, data processing, and generation of standard content, but struggle with tasks requiring genuine creativity, ethical judgment, emotional intelligence, and adaptation to truly novel situations. The job market is shifting, not disappearing.

Myth 4: "Transformers Are Only for Natural Language Processing"

Fact: Transformers now power applications in computer vision, protein folding, weather prediction, financial analysis, and many other domains. The architecture's strength lies in processing sequential or spatial patterns, which appear throughout science and industry.

Myth 5: "AI Models Like ChatGPT Have Access to Real-Time Information"

Fact: Base transformer models are frozen at their training cutoff date. They don't automatically update with new information. Modern systems like ChatGPT and Claude sometimes use retrieval mechanisms or search tools to access current information, but the underlying transformer model itself contains only patterns from its training data.

Myth 6: "Transformers Can't Be Biased Because They're Mathematical"

Fact: Transformers learn from human-generated data, which contains human biases. They can amplify biases around gender, race, age, and culture. Addressing bias requires careful curation of training data, evaluation of model outputs, and ongoing monitoring in deployment.

Myth 7: "Training a Transformer Is Easy Once You Have the Data"

Fact: Training large transformers requires expertise in distributed computing, optimization techniques, numerical stability, and hyperparameter tuning. The original "Attention Is All You Need" paper already relied on a learning rate warmup schedule, and practitioners have since found that such details matter for stable convergence. Modern techniques like pre-normalization, RMSNorm, and grouped-query attention took years of research to develop.

FAQ

1. What is the difference between a transformer and a neural network?

A transformer is a specific type of neural network architecture. All transformers are neural networks, but not all neural networks are transformers. Transformers are distinguished by their use of self-attention mechanisms and parallel processing, while traditional neural networks might use convolution (CNNs) or recurrence (RNNs).

2. Why are transformers better than LSTMs?

Transformers outperform LSTMs in three key ways:

(1) they process sequences in parallel rather than sequentially, making training much faster

(2) they capture long-range dependencies more effectively through self-attention

(3) they scale better—larger transformers generally perform better, while LSTMs hit diminishing returns.

3. How long does it take to train a transformer model?

Training time varies enormously. The base model in the original 2017 paper trained in about 12 hours on 8 NVIDIA P100 GPUs, and the larger variant took 3.5 days. GPT-3 trained for weeks on thousands of GPUs. GPT-4 likely trained for months. Smaller models for specific tasks can train in hours or days. Pre-trained models can be fine-tuned for new tasks in hours.

4. Can I train my own transformer model?

Yes, but scale determines feasibility. You can train small transformers on a single GPU for specific tasks using frameworks like Hugging Face Transformers, PyTorch, or TensorFlow. Training large general-purpose models like GPT-4 requires resources accessible only to major tech companies. Most practical applications use pre-trained models fine-tuned on custom data.

5. What programming languages are used to build transformers?

Python dominates transformer development. Popular frameworks include PyTorch, TensorFlow, and JAX. Hugging Face's Transformers library provides pre-trained models and tools. For deployment, models may be optimized using C++, Rust, or other languages for speed.

6. How much data does a transformer need for training?

It depends on the model size and task. The original transformer trained on millions of sentence pairs. GPT-3 trained on roughly 300 billion tokens (on the order of 225 billion English words, since a token averages about three-quarters of a word). Modern large models use trillions of tokens. For fine-tuning pre-trained models, you might need only thousands of examples for good performance on specific tasks.

7. What is the attention mechanism in simple terms?

Attention is a way for the model to focus on relevant parts of the input when processing each element. Think of reading a sentence where you automatically emphasize certain words that matter for understanding. Mathematically, attention computes weighted sums of values based on similarity between queries and keys.
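The "weighted sums of values" description can be sketched in a few lines of plain Python. This is a toy version of scaled dot-product attention, softmax(QKᵀ / √d_k)·V, written with lists instead of tensors; the function names and the tiny example vectors are illustrative assumptions, and real implementations use optimized matrix libraries.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of query, key, and value vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity between this query and every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the weighted sum of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# One query that closely matches the first key, so it mostly "attends" to it.
outs, weights = scaled_dot_product_attention(
    queries=[[10.0, 0.0]],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[1.0, 2.0], [3.0, 4.0]],
)
print(weights[0], outs[0])
```

Because the query lines up with the first key, almost all of the weight lands on the first value vector, which is the "focus" behavior described above.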

8. Why do transformer models sometimes give wrong answers?

Transformers generate outputs based on statistical patterns in training data, not logical reasoning or fact-checking. They can produce "hallucinations"—confident-sounding but false information—because they're optimizing for plausible-sounding text rather than truth. They also lack real-world grounding and can't verify claims against external sources without additional tools.

9. What is the context window in transformers?

The context window is the maximum length of input the model can process at once. GPT-3 handled 2,048 tokens (roughly 1,500 words). GPT-4 launched with support for up to 32,768 tokens (about 25,000 words). Inputs longer than the window must be chunked or summarized. Newer techniques like sparse attention are enabling longer context windows.
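Chunking an over-long input can be as simple as sliding a fixed-size window over the token list. The sketch below is one possible approach, not a standard API: `chunk_tokens` and its `overlap` parameter are hypothetical names, and the overlap is there so that text near a chunk boundary appears in both neighboring chunks.

```python
def chunk_tokens(tokens, window, overlap=0):
    """Split a token list into chunks of at most `window` tokens.

    Consecutive chunks share `overlap` tokens so context is not cut abruptly.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    if not 0 <= overlap < window:
        raise ValueError("overlap must satisfy 0 <= overlap < window")
    step = window - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + window])
        if start + window >= len(tokens):
            break  # the last chunk already reaches the end of the input
    return chunks


# Ten tokens, a window of four, and one token of overlap between chunks.
print(chunk_tokens(list(range(10)), window=4, overlap=1))
```

Each chunk can then be processed separately, with the results combined or summarized afterward.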

10. How do transformers handle different languages?

Multilingual transformers are trained on text from many languages simultaneously. Models like mBERT, XLM-R, and multilingual versions of GPT handle 100+ languages. They learn shared representations in which equivalent concepts from different languages sit close together, enabling translation and cross-lingual understanding. Performance varies: models do better on languages that are well represented in the training data.

11. What's the difference between GPT and BERT?

GPT is decoder-only and generates text by predicting the next token. It reads text left-to-right and excels at generation tasks. BERT is encoder-only and reads text bidirectionally (both directions simultaneously). It's better for understanding and classification tasks. They represent different design philosophies for different use cases.
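The left-to-right versus bidirectional distinction comes down to which positions each token is allowed to attend to. A minimal sketch of the two attention masks, where 1 means "may attend" and 0 means "blocked" (the function names are illustrative):

```python
def causal_mask(n):
    """Decoder-style (GPT) mask: position i sees only positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """Encoder-style (BERT) mask: every position sees every other position."""
    return [[1] * n for _ in range(n)]


for row in causal_mask(4):
    print(row)
print()
for row in bidirectional_mask(4):
    print(row)
```

The lower-triangular causal mask is what lets a decoder be trained to predict the next token without seeing it, while the all-ones mask lets an encoder use context from both sides of a word.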

12. Can transformers work with small datasets?

Not well without pre-training. Transformers have many parameters and need large datasets to train from scratch. However, pre-trained transformers can be fine-tuned on small datasets (hundreds or thousands of examples) for specific tasks—a technique called transfer learning. This is the standard approach for practical applications.

13. How do Vision Transformers differ from text transformers?

Vision Transformers (ViT) adapt the transformer architecture for images by splitting images into fixed-size patches and treating each patch as a token. The core attention mechanism is the same, but ViT adds learned position embeddings so the model can tell where each patch sat in the original image. ViT can match or exceed CNNs on image tasks when trained on large datasets.
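The patch-splitting step can be illustrated with a small grid of numbers standing in for pixels. This is a simplified sketch: `image_to_patches` is a hypothetical helper, the image is a single-channel list of lists, and a real ViT would go on to project each flattened patch through a learned linear layer.

```python
def image_to_patches(image, patch_size):
    """Split a 2D image (list of rows) into flattened square patches.

    Patches are returned in row-major order, one flat list per patch.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches


# A 4x4 "image" with pixel values 0..15, cut into 2x2 patches.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
print(image_to_patches(image, patch_size=2))
```

At realistic sizes the same arithmetic applies: a 224×224 image cut into 16×16 patches yields (224 / 16)² = 196 tokens.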

14. What is the role of tokens in transformers?

Tokens are the basic units that transformers process. In text, a token might be a word, part of a word (subword), or character. "Tokenization" breaks input into these units. In images, tokens are patches. In audio, they're time slices. Token choice affects model performance—byte-pair encoding (BPE) is popular for text.
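To show how byte-pair encoding builds subword tokens, here is a toy sketch of its training loop: start from single characters and repeatedly merge the most frequent adjacent pair. All names here (`learn_bpe`, `merge_pair`, `most_frequent_pair`) are illustrative, and production tokenizers add details this version omits, such as word frequencies, byte-level fallbacks, and end-of-word markers.

```python
from collections import Counter


def most_frequent_pair(words):
    """Return the most common adjacent symbol pair, or None if there is none."""
    pairs = Counter()
    for symbols in words:
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += 1
    return pairs.most_common(1)[0][0] if pairs else None


def merge_pair(symbols, pair):
    """Replace every occurrence of `pair` in `symbols` with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return merged


def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merges; return (merges, tokenized corpus)."""
    words = [list(word) for word in corpus]  # start from single characters
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        merges.append(pair)
        words = [merge_pair(w, pair) for w in words]
    return merges, words


merges, tokenized = learn_bpe(["low", "lower", "lowest"], num_merges=2)
print(merges)
print(tokenized)
```

After two merges the shared stem "low" becomes a single token, while the rarer endings stay split into smaller pieces. That is the basic trade-off subword tokenization makes between vocabulary size and sequence length.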

15. Why is ChatGPT so good at conversation?

ChatGPT combines a powerful transformer architecture (GPT-3.5 or GPT-4) with fine-tuning on conversational data and reinforcement learning from human feedback (RLHF). Human trainers ranked different responses, teaching the model what makes good conversation. It learns to maintain context, ask clarifying questions, and respond helpfully.

16. What is few-shot learning in transformers?

Few-shot learning means the model performs a task with just a few examples provided in the prompt, without additional training. GPT-3 popularized this: you can give it 2-3 examples of a task (like translating English to French), and it generalizes the pattern to new inputs. This emergent capability scales with model size.
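In practice, few-shot prompting is mostly careful string formatting. The sketch below assembles a translation prompt from example pairs; `build_few_shot_prompt` and the exact "English:" / "French:" layout are assumptions for illustration, since any consistent pattern the model can continue will do.

```python
def build_few_shot_prompt(examples, query, instruction="Translate English to French."):
    """Build a prompt with worked examples followed by the unanswered query."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"English: {source}")
        lines.append(f"French: {target}")
        lines.append("")
    # Leave the final answer blank so the model completes the pattern.
    lines.append(f"English: {query}")
    lines.append("French:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    examples=[("cheese", "fromage"), ("sea otter", "loutre de mer")],
    query="peppermint",
)
print(prompt)
```

The resulting text is sent to the model as an ordinary prompt. No weights are updated, because the examples only shape the model's next-token predictions.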

17. Can transformers generate images?

Yes, but through different mechanisms. Models like DALL-E and Stable Diffusion 3 use transformer architectures adapted for image generation. They might process text prompts with transformers and generate images using diffusion models, or tokenize images and use autoregressive transformers (like Parti). Sora uses transformers for video generation.

18. How do companies like OpenAI and Anthropic make money from transformers?

They offer API access (developers pay per token processed), subscription services (ChatGPT Plus, Claude Pro), enterprise licenses, and custom model training. The business model is monetizing inference—charging for queries to already-trained models—rather than recouping training costs directly.

19. What is the temperature parameter in transformer models?

Temperature controls randomness in generation by rescaling the model's scores before they are turned into probabilities. Low temperature (near 0) makes outputs more deterministic and focused, because the model almost always picks the highest-probability token. A temperature of 1 samples from the model's unmodified distribution, and values above 1 flatten it, increasing randomness and variety. Users adjust temperature based on whether they want consistency or variety.
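The effect of temperature is easiest to see numerically. In this minimal sketch, the raw scores (logits) are divided by the temperature before the softmax; the function names and the three example logits are illustrative, and real APIs apply the same idea across a vocabulary of many thousands of tokens.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature, rng=None):
    """Sample one token index from the temperature-adjusted distribution."""
    rng = rng or random
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At low temperature nearly all probability mass sits on the top-scoring token. At higher temperatures the distribution flattens and the lower-ranked tokens are sampled more often.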

20. Will there be transformers larger than GPT-4?

Almost certainly. Research continues pushing scale boundaries. However, we may see a shift toward more efficient architectures, mixture-of-experts designs, and specialized models rather than simply increasing parameter counts. The industry is also exploring alternatives to transformers, like state space models (e.g., Mamba), that could offer better efficiency.

Key Takeaways

Transformers revolutionized AI through self-attention mechanisms that process sequences in parallel, replacing sequential RNNs and dramatically improving speed and accuracy

Costs rose roughly 200,000-fold, from $930 for the original 2017 transformer to $191 million for Google's Gemini Ultra, concentrating cutting-edge AI development among well-funded organizations

Three architecture types dominate: Encoder-only (BERT) for understanding, decoder-only (GPT) for generation, and encoder-decoder for transformation tasks

Adoption accelerated dramatically: 65% of organizations now regularly use generative AI, double the rate from 2023, with returns of $3.70 per dollar invested

Real-world impact is massive: From 90% cancer detection rates in medical imaging to 66% reduction in customer support volume at Klarna to 55% faster code writing with AI assistants

Vision Transformers extended the revolution beyond text to images, achieving 4× better efficiency than CNNs and enabling breakthroughs in autonomous vehicles, medical diagnostics, and weather prediction

Significant challenges remain: Hallucinations, computational costs, limited context windows, bias amplification, environmental impact, and interpretability problems

The field is evolving rapidly: Sparse transformers, mixture-of-experts, multimodal integration, and efficient architectures are pushing boundaries while reducing costs

Actionable Next Steps

Start experimenting with pre-trained models: Create a free account on Hugging Face and explore their model hub. Try fine-tuning a small BERT model on your own text classification task using their tutorials.

Take a foundational course: Enroll in "Natural Language Processing with Transformers" on Coursera or DeepLearning.AI's courses on large language models to build theoretical understanding.

Use transformers for your work: If you work in content creation, try ChatGPT or Claude as writing assistants. If you code, experiment with GitHub Copilot. Document what works and what doesn't.

Stay informed about developments: Follow key researchers on Twitter/X, read the AI Index annual reports from Stanford, and subscribe to newsletters like The Batch by DeepLearning.AI.

Evaluate transformer solutions for your industry: Research domain-specific models (ClinicalBERT for healthcare, FinBERT for finance, CodeBERT for software development) and assess how they might solve problems in your field.

Consider the ethical implications: If you're deploying transformers in your organization, establish guidelines for responsible use, monitor for bias, and implement human oversight for high-stakes decisions.

Contribute to open source: Join projects on Hugging Face or GitHub that fine-tune models for specific languages or domains, especially for underrepresented communities and applications.

Invest in AI literacy: Whether you're technical or non-technical, understanding transformers is becoming essential. Share knowledge within your organization about both capabilities and limitations.

Glossary

Attention Mechanism: A technique that allows models to focus on relevant parts of input when processing each element, weighing importance of different positions.

BERT (Bidirectional Encoder Representations from Transformers): Google's encoder-only transformer that reads text bidirectionally for understanding and classification tasks.

Context Window: The maximum length of input a transformer can process simultaneously, measured in tokens.

Decoder: The part of a transformer that generates output sequences, often used for text generation.

Embedding: Conversion of words, images, or other data into numerical vector representations that capture semantic meaning.

Encoder: The part of a transformer that processes and understands input data.

Fine-tuning: Adapting a pre-trained model to a specific task or domain with additional training on task-specific data.

GPT (Generative Pre-trained Transformer): OpenAI's decoder-only transformer family specializing in text generation.

Hallucination: When a transformer generates false information that sounds plausible but isn't based on training data or reality.

LLM (Large Language Model): A transformer model with billions of parameters trained on vast text data.

Mixture of Experts (MoE): Architecture in which a routing layer sends each input to a small subset of specialized sub-networks (experts), so only part of the model's parameters are active for any one token.

Multi-head Attention: Using multiple attention mechanisms in parallel to learn different types of relationships.

Parameter: Numerical weight in the model learned during training. Modern transformers have billions to trillions of parameters.

Positional Encoding: Information added to inputs to represent the position of elements in a sequence.

Pre-training: Initial training on large general datasets before fine-tuning for specific tasks.

RLHF (Reinforcement Learning from Human Feedback): Training method where humans rate model outputs to improve behavior.

Self-attention: Attention mechanism where elements of a sequence attend to other elements within the same sequence.

Token: Basic unit of input/output (word, subword, character, image patch) that transformers process.

Transfer Learning: Using knowledge from training on one task to improve performance on another task.

Transformer: Neural network architecture using self-attention to process sequential data in parallel.

Vision Transformer (ViT): Transformer architecture adapted for image recognition by treating image patches as tokens.

Sources & References

Vaswani, A., Shazeer, N., Parmar, N., et al. (2017). "Attention Is All You Need." Advances in Neural Information Processing Systems (NeurIPS). https://arxiv.org/abs/1706.03762

Stanford University (2024). "AI Index 2024 Annual Report." Stanford Institute for Human-Centered AI. https://spectrum.ieee.org/ai-index-2024

AmplifAI (2025, June). "60+ Generative AI Statistics You Need to Know in 2025." https://www.amplifai.com/blog/generative-ai-statistics

MathWorks (2024, October 31). "Transformer Models: From Hype to Implementation." https://blogs.mathworks.com/deep-learning/2024/10/31/transformer-models-from-hype-to-implementation/

Founders Forum Group (2025, July). "AI Statistics 2024–2025: Global Trends, Market Growth & Adoption Data." https://ff.co/ai-statistics-trends-global-market/

Nature Scientific Reports (2025, June). "The usage of a transformer based and artificial intelligence driven multidimensional feedback system in english writing instruction." https://www.nature.com/articles/s41598-025-05026-9

AWS (2024). "What are Transformers in Artificial Intelligence?" https://aws.amazon.com/what-is/transformers-in-artificial-intelligence/

IBM (2025, May). "How BERT and GPT models change the game for NLP." https://www.ibm.com/think/insights/how-bert-and-gpt-models-change-the-game-for-nlp

Wikipedia (2025). "GPT-4." https://en.wikipedia.org/wiki/GPT-4

Wikipedia (2025). "Attention Is All You Need." https://en.wikipedia.org/wiki/Attention_Is_All_You_Need

PYMNTS (2025, February). "AI Cheat Sheet: Large Language Foundation Model Training Costs." https://www.pymnts.com/artificial-intelligence-2/2025/ai-cheat-sheet-large-language-foundation-model-training-costs/

CUDO Compute (2025, May). "What is the cost of training large language models?" https://www.cudocompute.com/blog/what-is-the-cost-of-training-large-language-models

Visual Capitalist (2024, June). "Visualizing the Training Costs of AI Models Over Time." https://www.visualcapitalist.com/training-costs-of-ai-models-over-time/

The Decoder (2023, July). "GPT-4 architecture, datasets, costs and more leaked." https://the-decoder.com/gpt-4-architecture-datasets-costs-and-more-leaked/

SemiAnalysis (2023, July). "GPT-4 Architecture, Infrastructure, Training Dataset, Costs, Vision, MoE." https://semianalysis.com/2023/07/10/gpt-4-architecture-infrastructure/

MDPI Computers (2025, April). "Advancing Predictive Healthcare: A Systematic Review of Transformer Models in Electronic Health Records." https://www.mdpi.com/2073-431X/14/4/148

PMC (2024). "Transformers and large language models in healthcare: A review." https://pmc.ncbi.nlm.nih.gov/articles/PMC11638972/

PubMed (2024, June). "Transformers and large language models in healthcare." https://pubmed.ncbi.nlm.nih.gov/38878555/

BMC Medical Informatics and Decision Making (2024, August). "Transformer models in biomedicine." https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-024-02600-5

JMIR Medical Informatics (2024, November). "Task-Specific Transformer-Based Language Models in Health Care: Scoping Review." https://medinform.jmir.org/2024/1/e49724

Journal of Medical Systems (2024, February). "Transformer Models in Healthcare: A Survey and Thematic Analysis of Potentials, Shortcomings and Risks." https://pubmed.ncbi.nlm.nih.gov/38367119/

arXiv (2024, September). "Introducing the Large Medical Model." https://arxiv.org/abs/2409.13000

Viso.ai (2024). "Vision Transformer: A New Era in Image Recognition." https://viso.ai/deep-learning/vision-transformer-vit/

MDPI Applied Sciences (2025, January). "Vision Transformers for Image Classification: A Comparative Survey." https://www.mdpi.com/2227-7080/13/1/32

V7 Labs (2024). "Vision Transformer: What It Is & How It Works." https://www.v7labs.com/blog/vision-transformer-guide

Wikipedia (2025). "Vision transformer." https://en.wikipedia.org/wiki/Vision_transformer

Nature Scientific Reports (2024, May). "Implementing vision transformer for classifying 2D biomedical images." https://www.nature.com/articles/s41598-024-63094-9

Synthesia (2025, August). "AI Statistics 2025: Top Trends, Usage Data and Insights." https://www.synthesia.io/post/ai-statistics

Heidloff, Niklas (2024, November). "Foundation Models, Transformers, BERT and GPT." https://heidloff.net/article/foundation-models-transformers-bert-and-gpt/

Medium (2025, February). "The Evolution of Transformer Architecture: From 2017 to 2024." https://medium.com/@arghya05/the-evolution-of-transformer-architecture-from-2017-to-2024-5a967488e63b

arXiv (2024, December). "GPT or BERT: why not both?" https://arxiv.org/abs/2410.24159

arXiv (2023, December). "A Comprehensive Study of Vision Transformers in Image Classification Tasks." https://arxiv.org/html/2312.01232v1
