What Are Foundation Models? The Complete Guide

- Sep 30, 2025

- 29 min read

The AI Revolution That's Reshaping Everything We Know

Imagine an AI that learned from nearly the entire internet, can write code like a senior developer, reason through complex problems like a scientist, and adapt to thousands of different tasks without being specifically trained for any of them. This isn't science fiction—it's the reality of foundation models, the breakthrough technology powering ChatGPT, Claude, and the AI tools transforming industries from healthcare to finance.

Foundation models represent the most significant leap in artificial intelligence since the invention of the computer itself. These massive neural networks, trained on vast datasets and containing billions or trillions of parameters, have unlocked capabilities that seemed impossible just a few years ago. They're not just tools—they're the foundation upon which the entire AI ecosystem is being built.

TL;DR - Key Takeaways:

Foundation models are AI systems trained on broad data that can adapt to thousands of tasks without specific training

The market exploded from $191 million in 2022 to $25.6 billion in 2024, with 78% of organizations now using AI

Real companies are seeing 132-353% ROI, with some saving 20,000+ hours annually and tripling output

Energy consumption is massive—GPT-4o uses enough electricity annually to power 50,000+ homes

By 2030, experts predict foundation models will transform scientific R&D and potentially automate 40% of current jobs

Five major players dominate: Anthropic (32%), OpenAI (25%), Google (20%), Meta (9%), with fierce competition

Foundation models are large AI systems trained on broad, diverse datasets that can be adapted to perform thousands of different tasks. Unlike traditional AI built for specific purposes, these models serve as general-purpose foundations that can write, code, reason, analyze images, and solve complex problems across virtually any domain.

Table of Contents

Background & Definitions

Foundation models emerged from a perfect storm of technological breakthroughs. The term itself was coined by Stanford researchers in August 2021, but the journey began decades earlier with fundamental advances in machine learning and neural networks.

The official definition from Stanford's Center for Research on Foundation Models describes them as "any model that is trained on broad data (generally using self-supervision at scale) that can be adapted to a wide range of downstream tasks." Think of them as the AI equivalent of a Swiss Army knife—one tool that can handle thousands of different jobs.

The crucial breakthrough: attention mechanism

Everything changed in 2017 when Google researchers published "Attention Is All You Need," introducing the transformer architecture that powers today's foundation models. This innovation, now cited over 173,000 times, eliminated the need for sequential processing and enabled models to understand context across entire documents simultaneously.

Before transformers: AI models processed information word by word, like reading a book one letter at a time while forgetting what came before.

After transformers: Models could see entire documents at once, understanding relationships between words separated by thousands of tokens—like having perfect memory and comprehension of everything they've ever read.

The scaling revolution

The real miracle happened when researchers discovered that making models bigger and training them on more data led to predictable improvements in performance. This insight triggered an arms race in model size:

BERT (2018): 340 million parameters

GPT-2 (2019): 1.5 billion parameters

GPT-3 (2020): 175 billion parameters

GPT-4 (2023): Estimated 1.76 trillion parameters

But size isn't everything. The key innovation is emergence—capabilities that spontaneously appear at scale. GPT-3 suddenly could perform tasks it was never explicitly trained for, like writing poetry, solving math problems, or generating code. This phenomenon, where quantity transforms into entirely new quality, distinguishes foundation models from traditional AI.

Legal and regulatory definitions

Governments worldwide are racing to define foundation models in law:

U.S. Executive Order (2023): "An AI model that contains at least tens of billions of parameters and is applicable across a wide range of contexts"

EU AI Act: "An AI model that is trained on broad data at scale, is designed for generality of output, and can be adapted to a wide range of distinctive tasks"

UK Framework: "A type of AI technology trained on vast amounts of data that can be adapted to a wide range of tasks and operations"

These definitions matter because they determine which models face regulatory oversight, safety requirements, and compliance obligations.

Current Landscape with Market Data

The foundation models market has experienced unprecedented growth, transforming from academic curiosity to a multi-billion dollar industry in just three years.

Market size explosion

Generative AI Market Growth:

2022: $191 million

2024: $25.6 billion (134x growth in two years)

2030 Projection: $190.33 billion

Source: IoT Analytics, January 2025

Foundation Models API Market:

November 2024: $3.5 billion

July 2025: $8.4 billion (140% growth in 8 months)

Source: Menlo Ventures, July 2025

The numbers tell a story of explosive adoption. What took the internet years to achieve, foundation models accomplished in months. This isn't just technological progress—it's a fundamental shift in how businesses operate.

Investment reaches unprecedented levels

2024 Global AI Funding:

Total VC Investment: $100+ billion (80% increase from 2023)

AI Share: 33% of all venture funding went to AI companies

Average AI Round Size: Increased dramatically due to infrastructure requirements

Source: Crunchbase, January 2025

Largest 2024-2025 Funding Rounds:

OpenAI: $40 billion at $300 billion valuation (March 2025)

Databricks: $10 billion at $62 billion valuation (December 2024)

xAI: $12 billion total ($6B May 2024 + $6B November 2024)

Anthropic: $8 billion commitment from Amazon

Waymo: $5.6 billion at $45+ billion valuation

Enterprise adoption accelerates rapidly

Current Adoption Statistics:

78% of organizations now use AI in at least one business function (up from 55% in 2023)

9.7% of US firms use AI in production (August 2025), up from 3.7% in fall 2023

45% of companies are testing or implementing generative AI

Only 4% have cutting-edge AI capabilities across all functions

Sources: McKinsey Global AI Survey 2024, US Census Bureau BTOS

Market leadership dynamics shift

The competitive landscape has evolved dramatically in 2025:

Enterprise Market Share (July 2025):

Anthropic: 32% (market leader, up from minimal share in 2023)

OpenAI: 25% (down from 50% dominance in 2023)

Google: 20%

Meta LLaMA: 9%

Others: 14%

Source: Menlo Ventures Enterprise Survey, July 2025

This shift reflects enterprise preferences for Claude's longer context windows, better reasoning capabilities, and superior safety features over raw performance alone.

Geographic market distribution

Regional AI Investment (2024):

North America: $34 billion (68% of global top-tier funding)

Asia-Pacific: Growing from 33% to projected 47% by 2030

Europe: Under 2% of global top-tier AI funding despite significant initiatives

China: $28.18 billion domestic market, led by companies like DeepSeek

The geographic concentration reveals both the scale of investment required and the geopolitical implications of AI development.

Key Technical Mechanisms

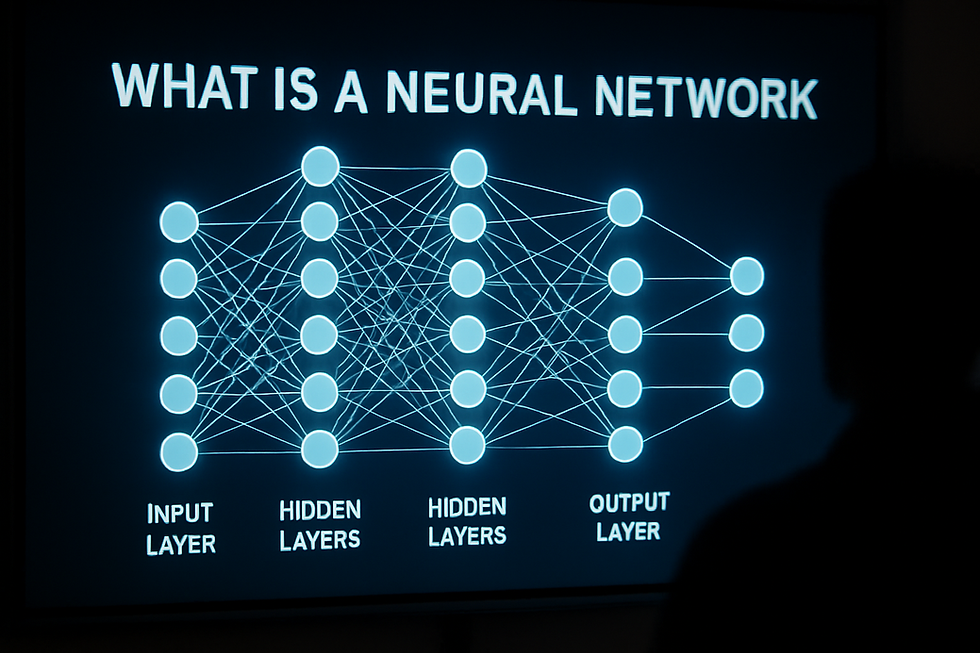

Understanding how foundation models work requires grasping three core innovations: the transformer architecture, self-supervised learning, and emergent scaling properties.

Transformer architecture breakthrough

Traditional neural networks processed information sequentially, like reading a book word by word. The transformer architecture changed everything by introducing self-attention—the ability to consider all parts of an input simultaneously.

How self-attention works:

Query, Key, Value system: Each word creates a query asking "what should I pay attention to?"

Attention weights: The model calculates relevance scores between every word and every other word

Context integration: Words get updated based on their relationships to all other words

Parallel processing: All computations happen simultaneously, not sequentially

This mechanism enables foundation models to understand context across thousands of words, maintaining coherent reasoning throughout long documents.

Self-supervised learning revolution

Foundation models learn through self-supervision—predicting missing parts of data without human-labeled examples. This approach unlocks learning from vast amounts of text that would be impossible to manually annotate.

Primary training objectives:

Next Token Prediction (GPT family): Given "The capital of France is...", predict "Paris"

Masked Language Modeling (BERT): Given "The [MASK] of France is Paris", predict "capital"

Span Prediction (T5): Predict masked text spans of varying lengths

This approach discovered something remarkable: models trained simply to predict the next word developed sophisticated reasoning, mathematical abilities, and domain expertise without being explicitly taught these skills.

Emergent capabilities at scale

The most surprising discovery in foundation model research is emergence—capabilities that appear suddenly at certain scales and weren't present in smaller models.

Examples of emergent capabilities:

In-context learning: GPT-3 learned to follow examples in prompts without parameter updates

Chain-of-thought reasoning: Large models began explaining their reasoning step-by-step

Code generation: Models trained on text spontaneously learned to write functional programs

Mathematical reasoning: Pattern recognition evolved into mathematical problem-solving

Scaling laws govern this emergence:

Parameters: Model capability increases predictably with parameter count

Data: More training data consistently improves performance

Compute: Training time and computational resources determine final capability

These scaling laws enabled researchers to predict performance improvements before training, leading to the current race for larger models.

Architecture innovations in 2024-2025

Recent developments focus on efficiency and specialized capabilities:

Mixture of Experts (MoE):

Models like LLaMA 4 Maverick use only 17 billion out of 400 billion parameters per query

Provides large model capability at reduced computational cost

Enables specialized expertise across different domains

Extended Context Windows:

LLaMA 4 Scout: 10 million tokens (equivalent to 20+ novels)

Gemini 2.5 Pro: 1 million tokens

Claude 4: 200,000 tokens expandable to 1 million

Multimodal Integration:

Native processing of text, images, video, and audio

Cross-modal reasoning and generation

Unified architecture across all data types

Real-World Case Studies

Foundation models have moved beyond demonstrations to drive measurable business value across industries. Here are documented implementations with verified outcomes.

Case Study 1: Microsoft 365 Copilot enterprise transformation

Companies: Newman's Own, Globo, Vodafone, Wallenius Wilhelmsen

Implementation Period: 2024-2025

Foundation Model: GPT-4 family (via Microsoft 365 Copilot)

Vodafone Results:

Time Savings: 3 hours per week per employee

User Satisfaction: 90% positive feedback

Work Quality: 60% report improved output quality

Productivity: 30+ minutes daily savings per team member

Newman's Own (Food Company):

Marketing Output: Tripled campaign production capacity

Industry Analysis: 70 hours monthly saved in news summarization

Content Quality: Maintained brand consistency while increasing volume

Employee Satisfaction: Reduced repetitive task burden

Wallenius Wilhelmsen (Shipping):

Adoption Rate: 80% employee participation within 6 months

Daily Impact: 30+ minutes saved per team member daily

Process Improvement: Streamlined documentation and communication

ROI: Positive return within first quarter

Quantified ROI Projections:

Small-Medium Businesses: 132-353% ROI over 3 years

Large Enterprises: 112-457% ROI over 3 years

Break-even Period: 6-12 months average

Source: Microsoft case studies, Forrester Total Economic Impact Study, 2024

Case Study 2: Healthcare AI implementations save lives and costs

Organizations: OSF Healthcare, Valley Medical Center, University of Rochester Medical Center

Implementation Period: 2024

Foundation Models: Various healthcare-specialized models

OSF Healthcare (Clare AI Assistant):

Patient Interaction: 1-in-10 patients now interact with AI assistant

Availability: 24/7 patient support without additional staffing

Cost Reduction: Significant decrease in call center load

Patient Satisfaction: Improved response times and accessibility

Valley Medical Center:

Clinical Outcomes: CMS observation rates matched within 1 month of deployment

Efficiency Gains: Reduced administrative burden on clinical staff

Quality Metrics: Improved documentation consistency

Cost Savings: Lower per-patient administrative costs

Industry-Wide Healthcare Impact:

Staffing Optimization: AI-assisted nurse planning reduced costs 10-15%

Patient Satisfaction: 7.5% higher satisfaction scores

Administrative Efficiency: 30%+ reduction in faculty workload for routine queries

Diagnostic Accuracy: 80% accuracy in predicting hospital transfer needs (Yorkshire study)

Sources: VKTR Healthcare Report, Medwave Analysis, University of Hong Kong case study, 2024

Case Study 3: Financial services fraud prevention and efficiency

Organizations: Goldman Sachs, Deutsche Bank, Visa, JPMorgan Chase, Mastercard

Implementation Period: 2024

Foundation Models: Various financial AI models with foundation model components

Visa Global Impact:

Fraud Prevention: $40 billion in fraud prevented annually

Real-time Processing: Enhanced transaction analysis capabilities

Global Scale: Protection across billions of transactions

Accuracy Improvement: Reduced false positives while increasing detection

JPMorgan Chase:

Account Validation: 20% reduction in rejection rates

Cost Savings: Significant operational efficiency gains

Processing Speed: Faster transaction verification

Customer Experience: Reduced friction in banking processes

Mastercard Advanced Detection:

Performance Improvement: 20% average enhancement in fraud detection

Specific Cases: Up to 300% improvement in certain fraud categories

False Positive Reduction: 200% decrease in incorrect flags

Customer Impact: Fewer legitimate transactions blocked

Industry Adoption Metrics:

Banking Penetration: 91% of US banks actively use AI for fraud detection

Market Leaders: Visa (#12), Barclays (#21), JPMorgan Chase (#29) in AI maturity rankings

Regulatory Adoption: 75% of financial services firms using AI (Bank of England/FCA survey)

Economic Value: McKinsey estimates $200-340 billion annual potential value for banking

Sources: DigitalDefynd, EY Financial Services Report, IMD Business School, 2024

Case Study 4: Enterprise productivity transformation

Company: Impact Networking (Technology Services)

Implementation Date: 2024

Foundation Model: Multiple models including GPT-4, Claude

Scale: 100 active users across organization

Quantified Results:

Annual Net ROI: $1.72 million

Time Savings: 20,000+ hours annually across all users

Power User Impact: 9 hours per week savings (equivalent to >1 full workday)

Productivity Applications: Meeting summaries, documentation, collaboration tools

Break-even Period: 3 months

Specific Use Cases:

Meeting Efficiency: Automated summaries and action item extraction

Documentation: Faster creation of technical documents and proposals

Client Communication: Enhanced response quality and speed

Process Automation: Streamlined repetitive administrative tasks

Scaling Implications:

Per-employee Value: $17,200 average annual benefit

Department Variations: Marketing and sales saw highest productivity gains

Technology Integration: Seamless integration with existing tools and workflows

Source: Impact Networking internal case study, 2024

Case Study 5: Education sector transformation

Organization: University of Hong Kong

Implementation Date: January 2024 (first university in Hong Kong to formally adopt)

Foundation Models: GPT-4, Claude for administrative and academic support

Administrative Efficiency:

Course Management: Automated material organization and student performance analysis

Process Automation: Streamlined repetitive administrative procedures

Resource Optimization: Better allocation of faculty time and resources

Faculty Productivity:

Cognitive Load Reduction: Automated routine tasks and query responses

Research Support: Enhanced literature review and data analysis capabilities

Student Support: Improved responsiveness to academic inquiries

Workload Reduction: >30% decrease in time spent on routine queries

Student Outcomes:

24/7 Support: Round-the-clock academic assistance availability

Personalized Learning: Customized educational content and feedback

Research Assistance: Enhanced access to information and analysis tools

Source: Intelegain case study, University of Hong Kong reports, 2025

Regional and Industry Variations

Foundation model adoption varies significantly across geographic regions and industry sectors, driven by regulatory environments, infrastructure capabilities, and market dynamics.

Geographic adoption patterns

North America leadership:

Market Share: 54% of global AI software investment

Infrastructure: Concentrated data center capacity and cloud services

Regulatory Environment: Innovation-focused with recent shift toward deregulation

Enterprise Adoption: 78% of organizations using AI in at least one function

Asia-Pacific rapid growth:

Market Evolution: Growing from 33% to projected 47% market share by 2030

Chinese Innovation: DeepSeek R1 provides competitive open-source alternative

Regional Variations: Strong government support in China, Singapore; enterprise-focused in Japan

Language Models: Significant investment in native language models

European cautious approach:

Market Share: Under 2% of global top-tier AI funding despite regulatory leadership

Regulatory Leadership: EU AI Act sets global standards

Enterprise Adoption: Slower but more compliance-focused implementation

Sovereign AI: Increasing focus on European-developed models

Industry-specific variations

Technology and software development:

Code Generation Dominance: AI's first "killer app" with $1.9 billion ecosystem

Developer Tools: GitHub Copilot adoption across major tech companies

Enterprise Platform: Claude captures 42% of coding market vs OpenAI's 21%

Productivity Gains: 50%+ improvement in development velocity

Financial services leadership:

Mature Adoption: 75% of firms actively using AI (highest of any sector)

Use Cases: Fraud detection (91% penetration), algorithmic trading, risk management

ROI Evidence: 20% average productivity increase in customer service and compliance

Regulatory Compliance: Advanced governance frameworks due to regulatory requirements

Healthcare transformation:

Investment Growth: AI companies captured 30% of $23 billion healthcare VC funding in 2024

Clinical Applications: Diagnostic imaging, drug discovery, patient triage

Safety Requirements: Rigorous testing and validation protocols

Practitioner Acceptance: 93% agree AI could resurface hidden value in healthcare

Manufacturing and industrial:

Smart Manufacturing: Integration with robotics and IoT systems

Predictive Maintenance: AI-driven optimization across supply chains

Quality Control: Enhanced inspection and defect detection

Efficiency Gains: Significant productivity improvements in production processes

Education sector adoption:

Administrative Efficiency: Automated processes and student support

Personalized Learning: Customized educational content and assessment

Research Assistance: Enhanced literature review and data analysis

Faculty Support: Reduced administrative burden, improved student interaction

Model preferences by region and industry

Enterprise Model Selection (2025 Data):

By Geography:

North America: Anthropic Claude (35%), OpenAI GPT (30%), Google Gemini (20%)

Europe: Higher preference for open-source models due to privacy regulations

Asia-Pacific: Balanced adoption with strong preference for local language capabilities

China: DeepSeek and other domestic models gaining market share

By Industry:

Financial Services: Claude dominance due to longer context and reasoning capabilities

Healthcare: Specialized medical AI models with foundation model components

Technology: Mixed adoption with preference for coding-optimized models

Education: GPT-4 family preferred for general-purpose applications

Cost Sensitivity Patterns:

Startups: High preference for cost-effective models like DeepSeek R1

Enterprises: Willingness to pay premium for performance and reliability

Government: Focus on sovereign AI and data privacy considerations

Research Institutions: Preference for open-source and customizable models

Pros and Cons Analysis

Foundation models present both transformative opportunities and significant challenges. Understanding both sides is crucial for informed decision-making.

Compelling advantages

Unprecedented versatility and adaptability: Foundation models excel across thousands of tasks without task-specific training. One model can write code, analyze data, generate creative content, solve mathematical problems, and provide expert-level advice across multiple domains. This versatility eliminates the need for separate AI systems for different functions.

Rapid deployment and implementation: Unlike traditional AI systems requiring months of training and customization, foundation models can be deployed immediately through APIs. Organizations can access state-of-the-art AI capabilities within hours, not years.

Continuous improvement through scale: Performance improves predictably with increased model size, training data, and computational resources. This scaling law enables consistent advancement without architectural breakthroughs.

Cost-effective access to advanced capabilities: API pricing has plummeted while capabilities have expanded dramatically. DeepSeek R1 offers near-GPT-4 performance at 27x lower cost than OpenAI's premium models.

Democratization of AI expertise: Foundation models provide expert-level capabilities to users without technical backgrounds. Small businesses can access the same AI capabilities as large enterprises through simple interfaces.

24/7 availability and consistency: AI assistants never tire, don't require breaks, and maintain consistent performance regardless of workload or time of day.

Significant disadvantages

Massive energy consumption and environmental impact: Training foundation models requires enormous computational resources. GPT-4o's estimated annual electricity consumption could power 50,000+ homes. Carbon footprints range from hundreds to thousands of tons of CO2 per model.

Unpredictable and sometimes unreliable outputs: Foundation models can generate convincing but incorrect information, exhibit bias, or produce outputs that seem reasonable but are factually wrong. This "hallucination" problem makes them unsuitable for critical applications without human oversight.

High infrastructure and operational costs: While API access seems affordable, large-scale deployment costs escalate quickly. Organizations using foundation models extensively report significant cloud computing expenses.

Data privacy and security concerns: Sending sensitive data to external APIs raises privacy concerns. Many foundation models retain information from interactions, potentially exposing confidential business information.

Lack of transparency and explainability: Foundation models operate as "black boxes" with billions of parameters. Understanding why they make specific decisions or generate particular outputs is extremely difficult.

Potential for job displacement: Foundation models can automate many knowledge work tasks, potentially displacing human workers across various industries. The IMF estimates 40% of global employment could be affected.

Regulatory uncertainty: Evolving regulations create compliance challenges. The EU AI Act, changing US policies, and varying international frameworks create complex legal landscapes.

Over-reliance and skill atrophy: Heavy dependence on AI assistance may lead to degradation of human skills and critical thinking capabilities.

Risk assessment framework

Low-risk applications:

Content ideation and brainstorming

Initial draft creation and editing

Data analysis and pattern recognition

Educational assistance and tutoring

Customer service and support

Medium-risk applications:

Financial analysis and investment advice

Medical research and diagnostic assistance

Legal document review and analysis

Strategic business planning

Technical documentation

High-risk applications:

Critical infrastructure management

Final medical diagnoses

Legal judgments and case decisions

Financial trading and investment execution

Safety-critical system design

Myths vs Facts

Foundation models are surrounded by misconceptions that can lead to poor decision-making. Here's what's actually true.

Myth: AI will soon replace most human workers

Reality: Foundation models augment rather than replace human capabilities in most scenarios. While 40% of jobs may be affected by AI, this includes both job displacement and job enhancement. Historical precedent suggests technology creates new types of work while eliminating others.

Evidence: Companies implementing foundation models report higher employee satisfaction and productivity rather than workforce reduction. Microsoft's Copilot implementations show workers spending more time on creative and strategic tasks.

Myth: Larger models are always better

Reality: Model selection depends on specific use cases, cost considerations, and deployment constraints. DeepSeek R1 with 671 billion parameters outperforms much larger models on mathematical reasoning while costing 27x less than premium alternatives.

Evidence: Enterprise surveys show performance matters more than model size. Anthropic's Claude gained market leadership through superior reasoning capabilities rather than parameter count.

Myth: Foundation models understand language like humans

Reality: Foundation models process statistical patterns in text without genuine understanding or consciousness. They predict probable next words based on training data patterns, not comprehension of meaning.

Evidence: Models can generate coherent text about concepts they've never been explicitly taught, but they lack true understanding, context awareness, and common sense reasoning that humans take for granted.

Myth: AI training data is just "text from the internet"

Reality: Training datasets are carefully curated, filtered, and processed. High-quality models use diverse sources including books, academic papers, code repositories, and specialized databases, with extensive preprocessing to remove low-quality content.

Evidence: OpenAI's GPT models use curated datasets with specific inclusion and exclusion criteria. Training data quality significantly impacts model performance and capabilities.

Myth: Open source models can't compete with proprietary ones

Reality: Open source models increasingly match or exceed proprietary model performance. Meta's LLaMA 4 and DeepSeek's R1 demonstrate competitive capabilities at lower costs.

Evidence: DeepSeek R1 achieves 91.6% on MATH benchmark compared to commercial models, while offering full transparency and customization capabilities.

Myth: Foundation models will solve all AI problems

Reality: Foundation models have specific strengths and limitations. They excel at language tasks and pattern recognition but struggle with logical reasoning, factual accuracy, and tasks requiring real-world interaction.

Evidence: Even the most advanced models score below 30% on certain reasoning benchmarks and require specialized architectures for domains like robotics or scientific computing.

Myth: AI is too expensive for small businesses

Reality: API pricing has decreased dramatically while capabilities have expanded. Small businesses can access state-of-the-art AI capabilities for dollars per month rather than thousands.

Evidence: DeepSeek R1 offers enterprise-grade capabilities at $0.55 per million input tokens. Small businesses report significant productivity gains with minimal AI spending.

Myth: Foundation models are completely objective and unbiased

Reality: Models inherit biases present in training data and can amplify societal prejudices. They require careful evaluation and mitigation strategies for fair deployment.

Evidence: Academic studies document gender, racial, and cultural biases in major foundation models. Responsible deployment requires bias testing and mitigation protocols.

Comparison Tables

Leading foundation models specification comparison

Model | Launch Date | Parameters | Context Window | Input/Output Pricing | Key Strengths |

GPT-5 | Aug 2025 | Multi-model | 1M tokens | $1.25/$10 per M | Adaptive reasoning modes |

Claude 4 Opus | May 2025 | Undisclosed | 200K tokens | $15/$75 per M | Superior reasoning, safety |

LLaMA 4 Maverick | Apr 2025 | 400B total, 17B active | 10M tokens | $0.20/$0.60 per M | Massive context, efficiency |

Gemini 2.5 Pro | Mar 2025 | Undisclosed | 1M tokens | $1.25/$10 per M | Multimodal, thinking mode |

DeepSeek R1 | Jan 2025 | 671B total, 37B active | 128K tokens | $0.55/$2.19 per M | Open source, cost-effective |

Performance benchmark comparison

Model | SWE-bench Verified (Coding) | GPQA Diamond (Science) | MATH (Mathematics) | HumanEval (Code) |

Claude 4 Sonnet | 72.7% | 83.3% | 65.2% | 85.3% |

GPT-5 | 69.1% | 87.3% | 88.0% | 92.7% |

LLaMA 4 Maverick | 67.4% | 78.1% | 73.8% | 77.6% |

DeepSeek R1 | 48.3% | 71.2% | 91.6% | 82.1% |

Gemini 2.5 Pro | 63.8% | 86.4% | 88.0% | 74.2% |

Higher scores indicate better performance

Cost comparison for typical enterprise usage

Use Case | Volume | GPT-5 | Claude 4 Opus | LLaMA 4 Maverick | DeepSeek R1 |

Document Analysis | 100 docs/day | $850/month | $2,250/month | $180/month | $165/month |

Code Generation | 1000 queries/day | $425/month | $1,125/month | $90/month | $82/month |

Customer Support | 5000 queries/day | $2,125/month | $5,625/month | $450/month | $410/month |

Content Creation | 500 articles/month | $300/month | $800/month | $65/month | $60/month |

Estimates based on average token usage and published pricing

Regional regulatory comparison

Jurisdiction | Primary Legislation | Effective Date | Key Requirements | Enforcement |

European Union | EU AI Act | Aug 2024 | Risk-based categories, GPAI obligations | European AI Office |

United States | Executive Orders | Jan 2025 | Deregulation focus, innovation priority | Multiple agencies |

United Kingdom | Sectoral approach | Ongoing | Principles-based, regulatory sandboxes | Sector regulators |

China | Multiple regulations | 2023-2025 | Algorithm filing, content labeling | Cyberspace Administration |

Open source vs proprietary model trade-offs

Factor | Open Source Models | Proprietary Models |

Cost | Free weights, hosting costs only | Per-token API pricing |

Customization | Full fine-tuning capability | Limited customization options |

Privacy | Complete data control | Data sent to external APIs |

Performance | Competitive with leading models | Often slightly ahead in capabilities |

Support | Community-based | Professional support and SLAs |

Updates | Manual model updates | Automatic improvements |

Deployment | Complex infrastructure required | Simple API integration |

Compliance | Full audit capability | Limited transparency |

Pitfalls and Risks

Foundation model implementation carries significant risks that organizations must understand and mitigate.

Technical pitfalls

Hallucination and factual errors: Foundation models confidently generate incorrect information, especially for recent events, specialized domains, or factual queries. They lack mechanisms to distinguish between confident correct answers and confident incorrect ones.

Mitigation strategies:

Implement fact-checking workflows for critical information

Use retrieval-augmented generation (RAG) with verified data sources

Build human review processes for important decisions

Deploy confidence scoring and uncertainty quantification systems

Context window limitations and costs: While models advertise large context windows, processing costs increase dramatically with longer inputs. A 1 million token context can cost hundreds of dollars per query.

Cost management approaches:

Implement intelligent context pruning and summarization

Use tiered model selection based on query complexity

Deploy prompt optimization and compression techniques

Monitor and alert on usage costs

Business and operational risks

Vendor lock-in and dependency: Heavy reliance on specific foundation model providers creates vulnerability to price changes, service discontinuation, or performance degradation.

Risk mitigation strategies:

Develop multi-vendor AI strategies with fallback options

Invest in model-agnostic interfaces and abstraction layers

Maintain capabilities for rapid provider switching

Build internal AI expertise and evaluation capabilities

Data privacy and confidentiality breaches: Sending sensitive information to external APIs exposes organizations to data breaches, regulatory violations, and competitive intelligence leaks.

Privacy protection measures:

Implement data classification and handling protocols

Use on-premises or private cloud deployments for sensitive data

Deploy data anonymization and pseudonymization techniques

Establish clear policies for AI system usage

Regulatory and compliance risks

Evolving regulatory landscape: AI regulations change rapidly across jurisdictions. The EU AI Act, changing US policies, and emerging national frameworks create compliance complexity.

Compliance strategies:

Establish AI governance and ethics committees

Implement comprehensive AI risk management frameworks

Maintain detailed audit trails and decision documentation

Engage with regulatory developments proactively

Liability and accountability questions: Legal responsibility for AI-generated decisions, content, or advice remains unclear in many jurisdictions, especially when AI systems cause harm or provide incorrect information.

Legal protection approaches:

Develop clear AI usage policies and disclaimers

Implement human oversight and approval processes

Maintain comprehensive insurance coverage for AI-related risks

Document decision-making processes and human involvement

Human and organizational risks

Skill atrophy and over-dependence: Heavy reliance on AI assistance can lead to degradation of human capabilities, critical thinking skills, and domain expertise.

Capability preservation strategies:

Maintain human expertise development programs

Implement AI-human collaboration rather than replacement

Regularly assess and maintain human skill levels

Establish AI-free zones for critical skill maintenance

Bias amplification and fairness issues: Foundation models can perpetuate and amplify societal biases, leading to unfair outcomes for protected groups and minorities.

Bias mitigation approaches:

Conduct regular bias audits and fairness assessments

Implement diverse evaluation datasets and metrics

Deploy bias detection and correction systems

Maintain diverse development and evaluation teams

Security and safety risks

Adversarial attacks and system manipulation: Foundation models are vulnerable to prompt injection, jailbreaking, and other attacks that can cause harmful or inappropriate outputs.

Security hardening measures:

Implement robust input filtering and sanitization

Deploy attack detection and prevention systems

Maintain red team exercises and security assessments

Establish incident response procedures for AI system breaches

Systemic risks from widespread deployment: Concentration of AI capabilities in few providers creates single points of failure that could affect large portions of the economy.

Systemic risk management:

Maintain backup systems and contingency plans

Participate in industry-wide resilience initiatives

Develop scenario planning for system failures

Advocate for diversity in AI provider ecosystem

Future Outlook

Expert consensus points toward transformative changes in foundation models through 2030, with significant implications for business, society, and technology.

Capability breakthroughs expected by 2030

Scientific research acceleration: Epoch AI researchers predict that by 2030, foundation models will "autonomously fix software issues, implement features, and solve difficult scientific programming problems." They'll serve as research assistants that can "flesh out proof sketches" in mathematics and provide "AI desk research assistants for biology R&D."

Reasoning revolution continues: OpenAI's o3 model achieved 87.5% on the ARC-AGI benchmark, representing "a fundamental breakthrough in AI's capacity to handle novel situations." Ashu Garg from Foundation Capital notes that reasoning models currently cost over $3,400 per answer, but "these costs tend to plummet" based on historical patterns.

Timeline predictions from experts:

2025: Agent-first models and reasoning capabilities at scale

2026: Over 95% of customer support interactions will involve AI

2027: Sovereign AI models launched in at least 25 countries

2028: AI-generated scientific papers will outpace human-only authored papers

2030: Full scientific R&D assistance across multiple domains

Market growth projections

Conservative estimates:

ABI Research: AI software market growing from $174.1 billion (2025) to $467 billion (2030) - 25% CAGR

Fortune Business Insights: $294.16 billion (2025) to $1.77 trillion (2032) - 29.2% CAGR

Aggressive projections:

NextMSC: $224.41 billion (2024) to $1.24 trillion (2030) - 32.9% CAGR

Precedence Research: $757.58 billion (2025) to $3.68 trillion (2034) - 19.2% CAGR

All forecasts point to sustained double-digit growth, with disagreement mainly on magnitude rather than direction.

Technology evolution patterns

Infrastructure scaling requirements: Epoch AI projects that "frontier AI models in 2030 will require investments of hundreds of billions of dollars, and gigawatts of electrical power." Training runs will require "2e29 FLOP - a quantity of compute that would have required running the largest AI cluster of 2020 continuously for over 3,000 years."

Architectural innovations beyond scaling: Tim Tully from Menlo Ventures identifies "reinforcement learning with verifiers" as "the new path to scaling intelligence" when pre-training scaling hits limits. Models are shifting from pure parameter scaling to sophisticated reasoning architectures.

Multimodal integration advances: Neptune.ai research indicates teams are moving "beyond text and images" to handle "tabular data, multi-dimensional tokens," and specialized domains. Context windows are exploding - Meta's LLaMA 4 Scout offers 10 million tokens, "comfortably fitting the entire Lord of the Rings."

Competitive landscape shifts

Market leadership evolution: The foundation model market shows increasing competition. Anthropic captured 32% enterprise market share, surpassing OpenAI's 25%, demonstrating that pure performance isn't the only success factor.

Open source vs proprietary dynamics: DeepSeek R1's MIT license and competitive performance challenge proprietary model dominance. Foundation Capital notes that "Meta's LLaMA architecture could become for AI what Linux became for servers."

Geographic distribution changes:

North America: Expected to maintain 36% of global market but face increasing competition

Asia-Pacific: Growing from 33% to projected 47% by 2030

China: DeepSeek's success demonstrates capability for domestic AI leadership

Europe: Struggling with only 2% of global top-tier funding despite regulatory leadership

Expert warnings about challenges ahead

Technical scaling limitations: Epoch AI identifies potential bottlenecks: "enough public human-generated text to scale to at least 2027" but synthetic data will become crucial afterward. Power requirements are "growing by more than 2x per year" toward "multiple gigawatts by 2030."

Safety and alignment concerns: Yoshua Bengio led an International AI Safety Report noting: "The industry is rapidly advancing toward increasingly capable AI systems, yet core challenges—such as alignment, control, interpretability, and robustness—remain unresolved."

Business implementation gaps: McKinsey research shows that "while nearly all companies are investing in AI, only 1 percent call their companies 'mature' on the deployment spectrum." The EY survey found "50% of senior business leaders report declining company-wide enthusiasm for AI integration."

Economic disruption risks: The IMF estimates "AI could affect 40% of jobs" globally, with "up to one third in advanced economies at risk of automation." However, historical precedent suggests technology creates new opportunities while eliminating others.

Implications for organizations

Strategic imperatives for success:

Early Investment: Competitive advantages will accrue to thoughtful early adopters

Systems Thinking: Success will come from architecting AI systems, not just deploying models

Specialized Applications: Domain-specific models may offer more practical value than general-purpose systems

Risk Management: Proactive governance and safety measures are essential for sustainable adoption

Talent Development: Building internal AI expertise is crucial for long-term competitive positioning

Foundation models are poised for continued rapid development through 2030, but success will require navigating significant technical, business, and societal challenges while maintaining focus on practical value creation and responsible deployment.

Frequently Asked Questions

What exactly makes a model a "foundation model"?

A foundation model must meet four criteria: trained on broad, diverse data; contains tens of billions or more parameters; designed for general-purpose use across many tasks; and can be adapted through fine-tuning or prompting without retraining. The key distinction is versatility—one model handles thousands of different applications rather than being built for a specific task.

How do foundation models differ from traditional AI systems?

Traditional AI systems are trained for specific tasks like image recognition or language translation. Foundation models are trained on general objectives like predicting the next word, which surprisingly teaches them to perform thousands of tasks they were never explicitly trained for. This emergence of unexpected capabilities distinguishes foundation models from conventional AI.

Are foundation models just for tech companies?

No. Foundation models are accessible to any organization through API services. Small businesses can access the same AI capabilities as large enterprises for dollars per month. Companies like Newman's Own (food) and Wallenius Wilhelmsen (shipping) have successfully implemented foundation models with measurable ROI.

Do I need technical expertise to use foundation models?

Basic usage requires no technical background—models work through simple text interfaces. However, complex implementations, custom applications, and enterprise deployments benefit from technical expertise in prompting, API integration, and AI system architecture.

Which foundation model should I choose for my business?

Model selection depends on your specific needs:

Cost-sensitive applications: DeepSeek R1 offers strong performance at low cost

Complex reasoning tasks: Claude 4 Opus excels in analysis and logical thinking

Code generation: Claude dominates with 42% market share vs OpenAI's 21%

General-purpose use: GPT-5 and Gemini 2.5 Pro offer balanced capabilities

Long documents: LLaMA 4 Scout handles 10 million tokens

How much does it cost to use foundation models?

Costs vary dramatically by model and usage:

Budget option: DeepSeek R1 at $0.55 per million input tokens

Premium option: Claude 4 Opus at $15 per million input tokens

Typical enterprise usage: $100-2,000 monthly depending on volume

High-volume applications: Can reach tens of thousands monthly

Context length significantly affects costs—longer inputs cost proportionally more.

Are foundation models safe for business use?

Foundation models require risk management but can be used safely with proper precautions:

Implement human oversight for critical decisions

Use data privacy measures when handling sensitive information

Deploy bias testing for customer-facing applications

Maintain backup processes in case of service disruption

Follow compliance frameworks for regulated industries

Can foundation models replace human workers?

Foundation models augment rather than replace human capabilities in most cases. The IMF estimates 40% of jobs may be affected, but this includes both enhancement and potential displacement. Companies implementing foundation models typically report higher employee satisfaction as workers focus on creative and strategic tasks rather than repetitive work.

Do foundation models understand what they're saying?

No. Foundation models process statistical patterns in text without genuine understanding or consciousness. They predict probable next words based on training patterns, not comprehension. They can generate coherent responses about topics they've never been explicitly taught, but lack true understanding, common sense, or awareness.

How accurate are foundation models?

Accuracy varies by task and model:

Factual questions: 70-90% accuracy depending on domain and recency

Mathematical reasoning: 60-95% depending on complexity

Code generation: 70-90% success rate for well-defined problems

Creative tasks: Subjective quality assessment Models are most reliable for tasks similar to their training data and least reliable for recent events or highly specialized domains.

Can I use foundation models offline?

Some models offer offline deployment:

Open source models (LLaMA, DeepSeek R1) can run locally but require significant hardware

Proprietary models (GPT, Claude) are API-only and require internet connectivity

Hybrid approaches use local models for privacy-sensitive tasks and cloud models for complex reasoning

What industries benefit most from foundation models?

Current leaders include:

Financial services: 75% adoption rate, primarily fraud detection and risk analysis

Technology: Software development acceleration and automation

Healthcare: Diagnostic assistance and administrative efficiency

Professional services: Document analysis, client communication, research assistance

Education: Personalized learning and administrative automation

How do foundation models handle different languages?

Most major foundation models support multiple languages, but quality varies:

English: Highest quality across all models

Major European languages: Good quality in GPT, Claude, Gemini

Asian languages: Specialized models like DeepSeek excel in Chinese

Low-resource languages: Limited quality, improving with specialized training

What data do foundation models train on?

Training datasets include:

Web content: Common Crawl data from billions of websites

Books: Fiction, non-fiction, reference materials

Academic papers: Scientific and research publications

Code repositories: Open source software and documentation

Reference materials: Wikipedia, encyclopedias, databases Total training data ranges from hundreds of gigabytes to multiple terabytes per model.

How often are foundation models updated?

Proprietary models: Continuous updates and improvements through API Open source models: Major releases every 6-18 months Training frequency: Most models are trained once and deployed, though some use continuous learning Capability evolution: Performance improves with each generation

Are there environmental concerns with foundation models?

Yes, significant environmental impact:

Training energy: GPT-3 required 1,287 MWh (equivalent to powering 400 homes for a year)

Inference energy: Daily operations consume substantial electricity

Water consumption: Cooling data centers requires millions of liters annually

Carbon footprint: Training large models produces hundreds to thousands of tons CO2

Companies are investing in renewable energy and more efficient hardware to address these concerns.

What's the difference between foundation models and AGI?

Foundation models are sophisticated pattern recognition systems that excel at specific tasks but lack general intelligence. Artificial General Intelligence (AGI) would match or exceed human intelligence across all cognitive tasks. Current foundation models show impressive capabilities but aren't conscious, don't truly understand, and can't learn or reason like humans.

Can foundation models be biased or unfair?

Yes, foundation models inherit biases from training data and can amplify societal prejudices:

Gender bias: Stereotypical associations with professions and roles

Racial bias: Unfair treatment or representation of different groups

Cultural bias: Western-centric perspectives and assumptions

Socioeconomic bias: Limited understanding of diverse economic situations

Responsible deployment requires bias testing, mitigation strategies, and ongoing monitoring.

How do I get started with foundation models?

For individuals:

Try free interfaces like ChatGPT, Claude, or Gemini

Experiment with different types of prompts and tasks

Learn prompt engineering best practices

Explore specific use cases relevant to your work

For businesses:

Identify specific use cases with measurable value

Start with low-risk applications like content generation or data analysis

Develop AI governance policies and risk management frameworks

Train employees on effective AI collaboration

Consider consulting with AI implementation specialists

What happens if a foundation model service goes down?

Service outages can disrupt operations for organizations dependent on foundation models:

Backup providers: Maintain relationships with multiple model providers

Offline capabilities: Deploy local models for critical functions

Graceful degradation: Design systems that function with reduced AI capabilities

Incident response: Establish procedures for service disruptions Major providers offer service level agreements (SLAs) and status page monitoring.

Key Takeaways

Foundation models represent a paradigm shift from task-specific AI to general-purpose systems that can adapt to thousands of applications without additional training, unlocking unprecedented versatility for businesses of all sizes

Market growth is explosive and sustained, growing from $191 million in 2022 to $25.6 billion in 2024, with all major forecasts predicting continued 25-35% annual growth through 2030

Real business value is measurable and significant, with documented case studies showing 132-353% ROI, 20,000+ annual hour savings, and productivity improvements across industries from healthcare to shipping

Competition is intensifying rapidly, with Anthropic's Claude overtaking OpenAI's GPT in enterprise market share (32% vs 25%), while open-source alternatives like DeepSeek R1 offer comparable performance at dramatically lower costs

Technical capabilities continue advancing, with 2025 models featuring 10 million token context windows, sophisticated reasoning abilities, and multimodal integration that far exceeds human capabilities in many domains

Implementation requires careful risk management, including concerns about data privacy, regulatory compliance, environmental impact (equivalent to powering tens of thousands of homes), and potential job displacement affecting 40% of global employment

Geographic distribution reflects geopolitical realities, with North America capturing 68% of investment, China developing competitive domestic alternatives, and Europe leading regulation while trailing in market development

Expert consensus points toward scientific R&D transformation by 2030, with AI systems capable of autonomous software development, mathematical research assistance, and biological protocol analysis, fundamentally changing how knowledge work is performed

Success depends on systems thinking rather than model selection, with leading organizations focusing on AI architecture, human-AI collaboration, and specialized applications rather than pursuing the largest or most expensive models

The window for competitive advantage is narrowing, as 78% of organizations already use AI in at least one function, making early strategic implementation crucial for maintaining market position in an AI-transformed economy

Actionable Next Steps

For individuals looking to understand and use foundation models:

Start experimenting immediately with free interfaces like ChatGPT, Claude, or Gemini to understand capabilities and limitations through hands-on experience

Learn prompt engineering fundamentals by practicing specific, detailed prompts and understanding how different phrasing affects outputs

Identify personal productivity use cases such as email drafting, research assistance, or creative brainstorming where AI can provide immediate value

Stay informed about developments by following AI research publications, company blogs, and industry analyses to understand evolving capabilities

Develop AI literacy skills including understanding model limitations, bias recognition, and fact-checking AI outputs

For business leaders and decision-makers:

Conduct an AI readiness assessment by identifying specific use cases, evaluating current technical infrastructure, and assessing organizational change management capabilities

Establish AI governance frameworks including data privacy policies, bias testing procedures, and risk management protocols before widespread deployment

Start with low-risk, high-value applications such as content generation, customer service assistance, or data analysis where mistakes have limited consequences

Develop multi-vendor AI strategies to avoid lock-in by testing different foundation models and maintaining relationships with multiple providers

Invest in employee training and change management to ensure successful adoption and address concerns about job displacement or skill requirements

For technical teams and developers:

Build model-agnostic interfaces that can work with different foundation models to enable rapid provider switching and cost optimization

Implement comprehensive monitoring and logging to track usage patterns, costs, performance metrics, and potential issues across AI applications

Develop prompt libraries and templates for common organizational use cases to ensure consistency and effectiveness across teams

Create evaluation frameworks to systematically compare model performance on organization-specific tasks and metrics

Establish deployment pipelines for rapid testing and implementation of new models and capabilities as they become available

For organizations in regulated industries:

Engage with regulatory developments proactively by monitoring AI legislation, participating in industry groups, and consulting with compliance specialists

Implement comprehensive audit trails documenting AI decision-making processes, human oversight, and approval workflows for regulatory compliance

Develop specialized risk assessment procedures tailored to industry-specific requirements such as healthcare safety, financial regulations, or legal accountability

Consider on-premises or private cloud deployments for sensitive data and applications where external API use poses unacceptable privacy or security risks

Establish cross-functional AI ethics committees including legal, compliance, technical, and business stakeholders to guide responsible AI implementation

These steps provide a structured approach to foundation model adoption while managing risks and maximizing benefits across different organizational contexts and requirements.

Glossary

API (Application Programming Interface): A set of protocols that allows software applications to communicate with foundation models over the internet, enabling access to AI capabilities without running the models locally.

Attention Mechanism: The core innovation in transformer architectures that allows models to focus on relevant parts of input text when generating responses, enabling understanding of context across long documents.

Chain-of-Thought: A technique where foundation models break down complex problems into step-by-step reasoning processes, showing their work and improving accuracy on logical tasks.

Context Window: The maximum amount of text a foundation model can process at once, measured in tokens. Modern models range from 128,000 to 10 million tokens.

Emergence: Unexpected capabilities that appear in foundation models when they reach certain scales, such as the ability to solve math problems or write code without explicit training.

Fine-tuning: The process of adjusting a pre-trained foundation model for specific tasks by training it on targeted datasets, improving performance for particular use cases.

Foundation Model: An AI system trained on broad data at scale that can be adapted to many different tasks without task-specific training, serving as a foundation for various applications.

Hallucination: When foundation models generate plausible-sounding but incorrect information, presenting false facts with confidence similar to accurate responses.

In-Context Learning: The ability of foundation models to learn from examples provided in prompts without updating their parameters, adapting to new tasks through examples.

Large Language Model (LLM): A type of foundation model specifically designed for understanding and generating text, trained on vast amounts of written content.

Mixture of Experts (MoE): An architecture where only a subset of model parameters is used for each input, improving efficiency while maintaining large total model size.

Multimodal: Foundation models capable of processing and generating multiple types of content such as text, images, audio, and video within a single system.

Parameters: The numerical values within neural networks that determine model behavior, learned during training. Foundation models contain billions to trillions of parameters.

Pre-training: The initial training phase where foundation models learn from large datasets using self-supervised objectives before being adapted for specific applications.

Prompt Engineering: The practice of crafting effective instructions and examples to guide foundation model outputs, crucial for achieving desired results.

Retrieval-Augmented Generation (RAG): A technique combining foundation models with external knowledge sources to improve factual accuracy and provide up-to-date information.

Self-Attention: The mechanism allowing transformer models to relate different parts of input sequences to each other, enabling understanding of context and relationships.

Self-Supervised Learning: Training approach where models learn from patterns in unlabeled data, such as predicting masked words or next tokens in sequences.

Scaling Laws: Mathematical relationships showing how foundation model performance improves predictably with increases in model size, training data, and computational resources.

Token: The basic unit of text processing in foundation models, roughly equivalent to a word or part of a word, used for measuring input length and costs.

Transformer: The neural network architecture underlying most foundation models, introduced in 2017 and enabling efficient processing of sequential data like text.

$50

Product Title

Product Details goes here with the simple product description and more information can be seen by clicking the see more button. Product Details goes here with the simple product description and more information can be seen by clicking the see more button

$50

Product Title

Product Details goes here with the simple product description and more information can be seen by clicking the see more button. Product Details goes here with the simple product description and more information can be seen by clicking the see more button.

$50

Product Title

Product Details goes here with the simple product description and more information can be seen by clicking the see more button. Product Details goes here with the simple product description and more information can be seen by clicking the see more button.

Comments