What Are Adversarial Autoencoders (AAEs)?

- Muiz As-Siddeeqi

- Dec 4, 2025

Imagine a machine that learns to paint like Picasso by studying thousands of paintings, then creates entirely new artwork that captures the master's style but shows scenes never painted before. That power—to learn patterns and generate new, meaningful content—sits at the heart of adversarial autoencoders. These models changed how computers understand and create data, from discovering life-saving drugs to detecting hidden flaws in manufacturing.

TL;DR

Adversarial Autoencoders (AAEs) merge standard autoencoders with adversarial training to create powerful generative models

Introduced in November 2015 by Alireza Makhzani and colleagues at University of Toronto, Google Brain, and OpenAI

AAEs excel at semi-supervised learning, drug discovery, anomaly detection, and dimensionality reduction

AAE-generated molecules have been tested in vitro as novel inhibitors for diseases like rheumatoid arthritis and psoriasis

Multi-adversarial autoencoders (MAAE) introduced in 2024 improve stability and training speed with multiple discriminators

Training involves two alternating phases: reconstruction (autoencoder) and regularization (adversarial network)

Adversarial Autoencoders (AAEs) are probabilistic autoencoders that use generative adversarial networks to match the latent code distribution with an arbitrary prior distribution. They combine an encoder-decoder architecture with a discriminator network, training through alternating reconstruction and adversarial phases to generate realistic data while ensuring the latent space follows a desired distribution.

Understanding the Foundations

Before diving into adversarial autoencoders, you need to grasp two fundamental concepts: autoencoders and adversarial training.

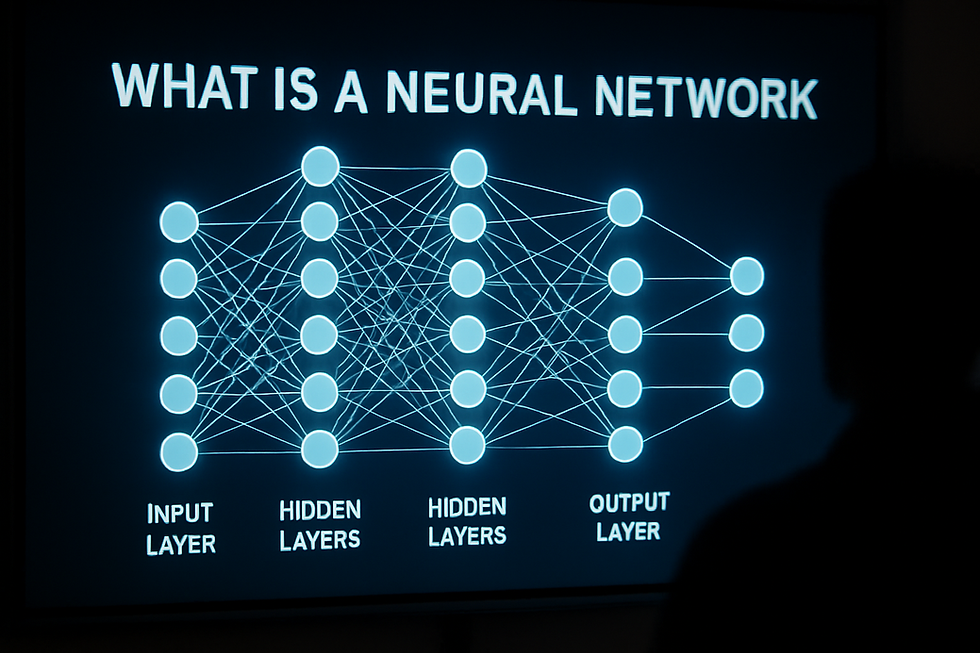

What Are Autoencoders?

Autoencoders are neural networks that learn to compress data into a smaller representation (encoding) and then reconstruct the original input from this compressed form (decoding). Think of them as intelligent compression algorithms that capture the essence of your data.

A standard autoencoder has two parts:

Encoder: Compresses input into a latent representation

Decoder: Reconstructs the original input from the latent code

The network learns by minimizing reconstruction error—the difference between input and output.

The Problem with Traditional Autoencoders

Traditional autoencoders suffer from a critical weakness: their latent space often contains "holes"—regions that don't map to meaningful data. If you randomly sample from these holes and feed them to the decoder, you get garbage output.

This limitation makes traditional autoencoders poor generative models. They can compress and reconstruct, but they struggle to generate entirely new, realistic samples.

Enter Adversarial Training

Adversarial training, introduced through Generative Adversarial Networks (GANs) by Ian Goodfellow and colleagues in 2014, provides a solution. GANs use two competing networks:

A generator creates fake samples

A discriminator tries to distinguish real from fake samples

This competition drives both networks to improve, ultimately producing highly realistic generated data.

The Birth of Adversarial Autoencoders

In November 2015, Alireza Makhzani, Jonathon Shlens, Navdeep Jaitly, Ian Goodfellow, and Brendan Frey introduced Adversarial Autoencoders to solve the latent space problem. AAEs use adversarial training to force the encoder's latent distribution to match a chosen prior distribution (typically Gaussian).

This elegant combination ensures that:

The autoencoder learns meaningful representations

The latent space has no holes

Any point sampled from the prior maps to realistic output

How Adversarial Autoencoders Work

AAEs operate through a clever two-phase training process that alternates between reconstruction and regularization.

The Core Concept

AAEs match the aggregated posterior of the hidden code vector with an arbitrary prior distribution, ensuring that generating from any part of prior space results in meaningful samples. The encoder serves double duty: it compresses data for the autoencoder AND acts as the generator for the adversarial network.

Phase 1: Reconstruction

In this phase, the AAE behaves like a standard autoencoder:

Input data passes through the encoder

The encoder produces a latent representation

The decoder reconstructs the input from this representation

The network minimizes reconstruction loss (typically mean squared error)

This phase ensures the model learns to capture important features in the data.

Phase 2: Regularization (Adversarial Training)

Here the adversarial magic happens:

The discriminator receives two types of inputs:

Real samples from the prior distribution (e.g., Gaussian noise)

Fake samples from the encoder's output

The discriminator learns to tell them apart

The encoder learns to fool the discriminator

This phase forces the latent space to follow the desired prior distribution.

Why This Matters

By enforcing a specific prior distribution, AAEs ensure:

No dead zones: Every point in the latent space maps to something meaningful

Smooth interpolation: Moving between points produces gradual, sensible changes

Controllable generation: You can sample from the prior to generate new data

The Architecture Deep Dive

Let's examine each component of an AAE architecture.

Component 1: The Encoder

The encoder compresses input data into a latent space representation, transforming input into a compact representation. For image data, this typically uses convolutional layers. For sequential data, recurrent or attention-based layers work better.

The encoder learns to extract the most important features needed for both reconstruction and maintaining the prior distribution.

Component 2: The Decoder

The decoder mirrors the encoder's structure in reverse. It takes the latent code and reconstructs the original input. The decoder learns a deep generative model that maps the imposed prior to the data distribution.

Component 3: The Discriminator

The discriminator is a neural network that attempts to distinguish between latent codes produced by the encoder and samples from the prior distribution. It's typically a simple feedforward network with a few hidden layers ending in a single output neuron (for binary classification).

The discriminator provides the adversarial signal that shapes the encoder's behavior.

Layer Configurations

For molecular fingerprint generation, researchers developed a 7-layer AAE architecture with the latent middle layer serving as a discriminator. The specific architecture depends heavily on your data type and task requirements.

For images, typical architectures use:

Encoder: Multiple convolutional layers with batch normalization and ReLU activation

Decoder: Transposed convolutions (sometimes called deconvolutions)

Discriminator: 2-3 fully connected layers

Training Process and Optimization

Training an AAE requires careful orchestration of multiple objectives.

Loss Functions

AAEs optimize two main objectives:

Reconstruction Loss: Measures how well the decoder reconstructs inputs. Common choices include:

Mean Squared Error (MSE) for continuous data

Binary Cross-Entropy for binary data

Adversarial Loss: Standard GAN loss for the discriminator and encoder.

The total loss balances these objectives, often weighted by hyperparameters.
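To make the two objectives concrete, here is a minimal sketch in plain Python. The function names are illustrative rather than taken from any library, and the encoder loss uses the common non-saturating form (score encoder codes as "real"), which is an assumption rather than something the text above specifies.

```python
import math

def mse(x, x_hat):
    """Mean squared error between an input vector and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

def bce(targets, probs, eps=1e-12):
    """Binary cross-entropy; probabilities are clamped to avoid log(0)."""
    total = 0.0
    for t, p in zip(targets, probs):
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(targets)

def discriminator_loss(d_prior, d_encoded):
    """Discriminator objective: prior samples -> 1, encoder codes -> 0."""
    return bce([1.0] * len(d_prior), d_prior) + bce([0.0] * len(d_encoded), d_encoded)

def encoder_adversarial_loss(d_encoded):
    """Encoder objective: have its codes scored as 1 (assumed non-saturating form)."""
    return bce([1.0] * len(d_encoded), d_encoded)
```

Here `d_prior` and `d_encoded` stand for the discriminator's output probabilities on prior samples and on encoder codes. A discriminator that outputs 0.5 everywhere gives a discriminator loss of 2·ln 2, which is the value you would expect once the latent distribution matches the prior.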

Training Algorithm

The training process alternates between updating different components:

Update autoencoder (encoder + decoder):

Sample a mini-batch of real data

Pass through encoder and decoder

Compute reconstruction loss

Backpropagate and update encoder and decoder weights

Update discriminator:

Sample real data and encode it

Sample from the prior distribution

Train discriminator to classify prior samples (real) vs encoded samples (fake)

Update discriminator weights

Update encoder (adversarial):

Sample real data and encode it

Train encoder to fool the discriminator

Update encoder weights
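The three updates above can be sketched end to end on a toy problem. Everything in this example is an assumption made for illustration: the data is 1-D Gaussian noise, the encoder, decoder, and discriminator are single linear units, and the gradients are written out by hand so that the script needs only the standard library. A real AAE would use deep networks and an autodiff framework.

```python
import math
import random

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def mean(values):
    return sum(values) / len(values)

def train(steps=200, batch=64, lr=0.01, seed=0):
    rng = random.Random(seed)
    # Toy models (illustrative): encoder z = a*x + b, decoder x_hat = c*z + d,
    # discriminator D(z) = sigmoid(w*z + v).
    a, b, c, d, w, v = 0.5, 0.0, 0.5, 0.0, 0.1, 0.0
    history = []
    for _ in range(steps):
        x = [rng.gauss(2.0, 1.0) for _ in range(batch)]  # toy "real data"

        # 1) Reconstruction phase: update encoder and decoder on MSE.
        z = [a * xi + b for xi in x]
        err = [c * zi + d - xi for zi, xi in zip(z, x)]
        rec_loss = mean([e * e for e in err])
        grad_a = mean([2 * e * c * xi for e, xi in zip(err, x)])
        grad_b = mean([2 * e * c for e in err])
        grad_c = mean([2 * e * zi for e, zi in zip(err, z)])
        grad_d = mean([2 * e for e in err])
        a, b = a - lr * grad_a, b - lr * grad_b
        c, d = c - lr * grad_c, d - lr * grad_d

        # 2) Discriminator update: prior samples are "real", encoder codes "fake".
        z = [a * xi + b for xi in x]
        prior = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        p_real = [sigmoid(w * p + v) for p in prior]
        p_fake = [sigmoid(w * zi + v) for zi in z]
        grad_w = (mean([(pr - 1) * p for pr, p in zip(p_real, prior)])
                  + mean([pf * zi for pf, zi in zip(p_fake, z)]))
        grad_v = mean([pr - 1 for pr in p_real]) + mean(p_fake)
        w, v = w - lr * grad_w, v - lr * grad_v

        # 3) Encoder (generator) update: make codes look like prior samples.
        p_fake = [sigmoid(w * zi + v) for zi in z]
        grad_a = mean([(pf - 1) * w * xi for pf, xi in zip(p_fake, x)])
        grad_b = mean([(pf - 1) * w for pf in p_fake])
        a, b = a - lr * grad_a, b - lr * grad_b

        # Track reconstruction loss and the mean discriminator score on codes.
        history.append((rec_loss, mean(p_fake)))
    return history
```

Calling `train()` returns one `(reconstruction_loss, mean_discriminator_score)` pair per step. A linear discriminator on a 1-D code can only react to a shift in the mean, so this sketch shows the order of the updates rather than a faithful distribution match.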

Hyperparameter Considerations

Critical hyperparameters include:

Learning rates (often different for autoencoder vs discriminator)

Batch size

Latent space dimensionality

Number of discriminator updates per generator update

Weight decay and dropout rates

The adversarial training process can be unstable, requiring careful tuning of hyperparameters.

Convergence Challenges

Like GANs, AAEs can suffer from:

Mode collapse: Encoder produces limited variety in latent codes

Oscillation: Training alternates without converging

Vanishing gradients: Discriminator becomes too strong, providing no learning signal

Recent improvements address these issues through techniques like spectral normalization, gradient penalties, and careful learning rate scheduling.

AAEs vs VAEs vs GANs

Understanding how AAEs compare to related approaches helps you choose the right tool.

Variational Autoencoders (VAEs)

VAEs, first introduced by Diederik Kingma and Max Welling in 2013, also regularize the latent space using probabilistic principles.

Key Differences:

VAEs use KL divergence to match the prior; AAEs use adversarial training

AAEs can impose arbitrary prior distributions while VAEs typically use Gaussian priors

On MNIST with 100 and 1000 labels, AAEs significantly outperformed VAEs in semi-supervised settings

VAEs optimize a tractable lower bound; AAEs use implicit distribution matching
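For contrast, the KL term a VAE uses has a closed form when the encoder outputs a Gaussian and the prior is a standard normal. The sketch below shows that formula for a single latent dimension; the function name is illustrative. An AAE replaces this analytic penalty with the discriminator's feedback, which is why it only needs to be able to sample from the prior.

```python
import math

def gaussian_kl(mu, sigma):
    """KL( N(mu, sigma^2) || N(0, 1) ) for a single latent dimension."""
    return 0.5 * (mu ** 2 + sigma ** 2 - 1.0 - math.log(sigma ** 2))
```

The penalty is zero only when the encoder's output distribution already equals the prior (`mu = 0`, `sigma = 1`) and grows as the two drift apart.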

When to Choose VAEs:

You need probabilistic interpretations

Training stability is critical

You prefer simpler mathematics

When to Choose AAEs:

You want flexible prior distributions

Semi-supervised learning is your goal

Better performance matters more than interpretability

Generative Adversarial Networks (GANs)

GANs, introduced by Ian Goodfellow and colleagues in 2014, consist of a generator and discriminator playing a minimax game.

Key Differences:

GANs excel at generating sharp, high-quality, realistic samples

AAEs provide an encoder for inference; standard GANs don't

GANs are less stable and more difficult to train than AAEs

AAEs combine generative and compression capabilities

When to Choose GANs:

Image quality is paramount

You only need generation (not encoding)

You have resources for extensive hyperparameter tuning

When to Choose AAEs:

You need both encoding and generation

Semi-supervised learning applications

More stable training is preferred

Performance Comparison

GANs typically produce sharper, more realistic images than VAEs, while VAEs provide better coverage of the data distribution. AAEs offer a middle ground—better image quality than VAEs with more stable training than GANs.

According to Coursera's analysis from May 2025, GANs are better for generating multimedia like images and videos, while VAEs excel at signal analysis and anomaly detection.

Hybrid Approaches

VAE-GANs combine VAE's ability to generate meaningful data representations with GAN's talent for producing high-quality, realistic images. These hybrids demonstrate the complementary nature of these techniques.

Real-World Applications

AAEs solve practical problems across multiple industries. Let's explore their most impactful applications.

1. Drug Discovery and Molecular Design

AAEs accelerate drug discovery by generating novel molecular structures with desired properties, accomplishing in hours what traditional pipelines require months to achieve.

The process works like this:

Train the AAE on molecular fingerprints with known properties

Use the latent space to represent molecular features

Generate new molecules by sampling and decoding

Filter candidates based on predicted properties

The AAE model significantly enhances capacity and efficiency in developing new molecules with specific anticancer properties.

2. Semi-Supervised Classification

AAEs can be used for semi-supervised classification, disentangling style and content of images, and unsupervised clustering. This makes them valuable when labeled data is scarce.

The semi-supervised AAE architecture separates:

Class label information (supervised component)

Style/content information (unsupervised component)

All AAE models train end-to-end, whereas semi-supervised VAE models must be trained one layer at a time. This end-to-end training provides both practical and performance advantages.

3. Anomaly Detection

AAE-based anomaly detection frameworks capture the normality distribution of high-dimensional images and identify abnormalities in industrial settings.

The approach leverages AAEs' ability to learn normal data patterns. Anomalies produce high reconstruction errors because they deviate from learned patterns.
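One simple way to turn reconstruction errors into decisions is a statistical threshold. The sketch below assumes you already have per-sample reconstruction errors from a trained model; the mean-plus-k-standard-deviations rule and the function names are illustrative choices, not the method of any specific framework mentioned here.

```python
import statistics

def fit_threshold(normal_errors, k=3.0):
    """Threshold = mean + k * std of reconstruction errors on normal data."""
    return statistics.mean(normal_errors) + k * statistics.pstdev(normal_errors)

def flag_anomalies(errors, threshold):
    """Return True for every sample whose reconstruction error exceeds the threshold."""
    return [e > threshold for e in errors]
```

In practice you would fit the threshold on a held-out set of known-normal samples and tune `k` against the false-alarm rate you can tolerate.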

Applications include:

Manufacturing defect detection

Financial fraud detection

Infrastructure monitoring

Cybersecurity threat detection

4. Dimensionality Reduction and Visualization

AAEs compress high-dimensional data into lower-dimensional representations while preserving important structure. This enables:

Data visualization in 2D or 3D

Feature extraction for downstream tasks

Data compression for storage or transmission

AAEs learn manifolds that exhibit sharp transitions, indicating the coding space is filled with no "holes," unlike VAEs which show gaps in coverage.

5. Time Series Generation

The AVATAR framework, introduced in January 2025, combines AAEs with autoregressive learning for time series generation. This addresses unique challenges in temporal data:

Maintaining temporal dependencies

Capturing conditional distributions at each time step

Generating realistic sequential patterns

Applications include financial forecasting, sensor data augmentation, and synthetic time series for testing.

6. Image Generation and Editing

AAEs generate realistic images and enable controlled editing by manipulating latent codes. You can:

Generate faces with specific attributes

Interpolate between images smoothly

Edit specific features while preserving others
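Interpolation in latent space is often just a straight line between two codes, with each intermediate point passed through the decoder. A minimal sketch, assuming latent codes are plain lists of floats (the decoder call is left out because it depends on your model):

```python
def interpolate(z_start, z_end, steps):
    """Return `steps` latent codes evenly spaced on the line from z_start to z_end."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    path = []
    for i in range(steps):
        t = i / (steps - 1)
        path.append([(1 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path
```

Because the adversarial regularizer pushes the latent space toward a prior without holes, the intermediate codes should decode to plausible samples rather than noise.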

7. Biomedical Applications

AAEs have been used to generate molecular structures that could induce desired gene expression changes, an approach validated on the LINCS L1000 dataset. This enables:

Drug response prediction

Personalized medicine applications

Understanding drug-gene interactions

Case Study 1: Drug Discovery at Insilico Medicine

Background: Insilico Medicine applied deep adversarial autoencoders for generating novel molecular fingerprints with defined parameters for anticancer therapy.

The Challenge: Traditional drug discovery is slow, expensive, and has high failure rates. Identifying promising drug candidates requires screening millions of compounds, most of which prove ineffective.

The AAE Solution: Researchers developed a 7-layer AAE architecture with the latent middle layer serving as a discriminator, using binary fingerprints and molecular concentration as input/output.

Implementation Details:

Trained on NCI-60 cell line assay data for 6,252 compounds profiled on the MCF-7 cell line

Introduced a neuron in the latent layer for growth inhibition percentage

Negative values indicated reduction in tumor cells after treatment

Results: The AAE's output was used to screen 72 million compounds in PubChem and select candidate molecules with potential anti-cancer properties.

Real-World Impact: The team proposed an entangled conditional adversarial autoencoder that generates molecular structures based on properties like activity against specific proteins, solubility, and ease of synthesis. A molecule generated for Janus kinase 3 inhibition was tested in vitro and showed good activity and selectivity.

This case demonstrates AAEs' ability to:

Learn complex molecular patterns

Generate novel, chemically valid structures

Predict biological activity

Accelerate drug discovery timelines from months to hours

Publication Date: December 2016 in Oncotarget

Case Study 2: Semiconductor Defect Detection

Background: A company used Adversarial Autoencoders with Deep Support Vector Data Description (DSVDD) prior for one-class classification on wafer maps in semiconductor manufacturing.

The Challenge: Semiconductor manufacturers face quality control challenges:

Defects are rare (highly imbalanced data)

Defect patterns constantly evolve

Manual inspection is slow and inconsistent

False alarms are costly

The AAE Solution: The proposed method performs one-class classification on wafer maps, learning normal patterns and flagging deviations as defects.

Why AAEs Work Here:

Semi-supervised approach works with limited defect examples

Learns complex spatial patterns in wafer maps

Adapts to new defect types without retraining from scratch

Provides explainable defect localization

Results: The method helps manufacturers identify defects and improve yield rates.

Industry Impact: Semiconductor companies report:

Reduced manual inspection time

Earlier defect detection

Higher yield rates

Faster response to process variations

This application showcases AAEs' strength in:

Handling imbalanced datasets

Learning from normal data only

Detecting novel anomalies

Operating in high-stakes manufacturing environments

Case Study 3: Medical Image Analysis

Background: Researchers applied AAEs to high-content image generation for drug discovery using cellular imaging data.

The Challenge: High-content image-based drug discovery screens generate immense amounts of data requiring automated analysis, but face challenges due to data requirements and limited preclinical investigation data.

The AAE Approach: While the study primarily used DCGANs, researchers explored adversarial autoencoder architectures for generating lead molecules as an architectural variation in GAN design.

Applications:

Data Augmentation: Generate synthetic cellular images to supplement limited real data

Feature Extraction: Learn representations useful for drug response prediction

Quality Control: Identify imaging artifacts and anomalies

Technical Implementation:

Trained on high-content images of monocytes and bacteria

Used adversarial training to ensure generated images match real image distributions

Evaluated quality by comparing feature distributions

Results: In the study, published in September 2020, the augmented dataset yielded better classification performance than using only real images.

Research Impact: This work demonstrates how AAEs and related architectures:

Overcome data scarcity in medical research

Maintain biological realism in synthetic data

Enable better model training with limited samples

Accelerate drug screening workflows

Advantages and Limitations

Understanding AAEs' strengths and weaknesses helps you deploy them effectively.

Advantages

1. Flexible Prior Distributions

AAEs can impose arbitrary prior distributions on the latent space, not just Gaussian priors. This flexibility enables:

Mixture of Gaussians for multi-modal data

Categorical distributions for discrete attributes

Custom priors matching domain knowledge
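Since the discriminator only needs samples from the prior, swapping priors amounts to swapping a sampling function. The sketch below shows three hypothetical samplers (standard Gaussian, an equally weighted mixture of Gaussians, and a one-hot categorical). The names, the uniform component weights, and the default standard deviation are assumptions chosen for illustration.

```python
import random

def sample_gaussian(rng, dim):
    """Draw one code from a standard Gaussian prior."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

def sample_mixture(rng, centers, std=0.5):
    """Pick one component uniformly, then sample around its center."""
    center = rng.choice(centers)
    return [rng.gauss(c, std) for c in center]

def sample_categorical(rng, num_classes):
    """One-hot vector drawn from a uniform categorical distribution."""
    k = rng.randrange(num_classes)
    return [1.0 if i == k else 0.0 for i in range(num_classes)]
```

Whichever sampler you pick supplies the "real" inputs to the discriminator during the regularization phase; the rest of the training procedure stays the same.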

2. End-to-End Training

All AAE models train end-to-end, whereas semi-supervised VAE models must be trained layer-by-layer. This simplifies implementation and often improves performance.

3. Better Latent Space Structure

By imposing a prior on the latent space, AAEs ensure encoded representations are well-structured and meaningful. No dead zones means reliable generation.

4. Semi-Supervised Learning Excellence

On MNIST with 100 and 1000 labels, AAEs significantly outperformed VAEs in semi-supervised classification.

5. Stable Generation

Unlike GANs, AAEs provide:

Explicit encoding of data points

Inference capabilities

More stable training dynamics

6. Interpretable Latent Space

The structured latent space enables:

Smooth interpolation between samples

Controlled attribute manipulation

Meaningful clustering

Limitations

1. Training Instability

The adversarial training process can be unstable and sensitive to the training configuration, so hyperparameters need careful tuning.

2. Computational Complexity

Implementing and training AAEs is more complex than for traditional autoencoders and requires a deeper understanding of both autoencoders and adversarial networks.

3. Hyperparameter Sensitivity

AAEs require tuning:

Learning rates for three components

Balance between reconstruction and adversarial losses

Network architectures for each component

Training schedule and update frequencies

4. Mode Collapse Risk

While less prone than GANs, AAEs can still experience mode collapse where the encoder produces limited variety.

5. Image Quality Trade-offs

GANs typically generate sharper, higher-quality samples than AAEs, though AAEs offer better stability and inference capabilities.

6. Black Box Nature

Like all deep learning models, AAEs lack full interpretability, making it hard to understand why specific latent codes produce certain outputs.

Recent Innovations and Research

The field continues evolving with exciting developments.

Multi-Adversarial Autoencoders (MAAE)

In October 2024, researchers published Multi-adversarial Autoencoder (MAAE) in Expert Systems with Applications, extending the AAE framework by incorporating multiple discriminators and enabling soft-ensemble feedback.

Key Innovations:

Multiple discriminators provide richer feedback signals

Learnable parameter dynamically balances mutual information and inference quality

Adaptive adjustment optimizes representation richness and task performance

MAAE demonstrates improved stability and faster convergence while extracting meaningful features, with extensive experiments on MNIST, CIFAR10, and CelebA showing superior results.

AVATAR for Time Series

The AVATAR framework, introduced in January 2025 on arXiv, combines AAEs with autoregressive learning for time series generation.

Novel Components:

Integration of autoencoder with supervisor network

Supervised loss for learning temporal dynamics

Distribution loss for aligning latent representation with Gaussian prior

Joint training mechanism with combined loss

Experiments demonstrate significant improvements in both quality and practical utility of generated time series data across various datasets with diverse characteristics.

Spectral Constraint AAEs

Researchers developed spectral constraint adversarial autoencoders for hyperspectral anomaly detection, published in 2019. This incorporates spectral angle distance into the AAE loss function to enforce spectral consistency in hyperspectral images.

Applications in Sports Science

A December 2024 study in Sensors (Basel) explored AAEs for assessing and visualizing fatigue in athletes through a two-dimensional latent space representation. The research used AAEs for:

Human activity recognition

Dimensionality reduction of movement data

Fatigue pattern identification

Semi-supervised and conditional approaches

Implementation Considerations

Successfully deploying AAEs requires attention to several practical factors.

Choosing the Right Architecture

For Image Data:

Use convolutional layers in encoder/decoder

Consider residual connections for deeper networks

Add batch normalization for stability

For Sequential Data:

RNN or LSTM layers for temporal dependencies

Attention mechanisms for long sequences

Causal convolutions as lightweight alternative

For Tabular Data:

Fully connected layers with dropout

Embedding layers for categorical features

Careful normalization of inputs

Latent Space Dimensionality

The latent space dimension should match the intrinsic dimensionality of the data—5 to 8 dimensions for MNIST according to Makhzani et al. 2015.

Too low: Information loss, poor reconstruction

Too high: Overfitting, computational waste

Just right: Meaningful representation, good generalization

Training Best Practices

Start Simple:

Train basic autoencoder first

Add adversarial component gradually

Tune one hyperparameter at a time

Monitor Multiple Metrics:

Reconstruction loss

Discriminator accuracy (should stay around 50-60%)

Generated sample quality

Latent space distribution matching
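Discriminator accuracy is easy to compute from the probabilities it assigns to each batch. This is a minimal sketch with an illustrative function name, assuming `d_prior` and `d_encoded` hold the discriminator's outputs on prior samples and encoder codes:

```python
def discriminator_accuracy(d_prior, d_encoded, threshold=0.5):
    """Fraction of correct calls: prior samples should score above the
    threshold, encoder codes at or below it."""
    correct = sum(p > threshold for p in d_prior)
    correct += sum(p <= threshold for p in d_encoded)
    return correct / (len(d_prior) + len(d_encoded))
```

A value that climbs toward 1.0 suggests the discriminator is overpowering the encoder, while a value near 0.5 means it can no longer tell codes from prior samples.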

Use Learning Rate Schedules:

Often decrease learning rate over time

Consider separate schedules for different components

Watch for signs of instability

Regularization Techniques:

Dropout in encoder/decoder

Weight decay

Gradient clipping

Spectral normalization on discriminator

Data Preprocessing

Critical steps include:

Normalization (typically to [-1, 1] or [0, 1])

Data augmentation for small datasets

Handling missing values appropriately

Balancing classes in semi-supervised scenarios
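The normalization step can be sketched as a pair of small helpers: one records the range of the training data, and the other applies that stored range to any later data. The names are illustrative, and min-max scaling is only one option (standardization is another).

```python
def fit_min_max(values):
    """Record the range of the training data."""
    return min(values), max(values)

def scale(values, lo, hi, target=(0.0, 1.0)):
    """Map values from [lo, hi] to the target range using stored statistics."""
    t_lo, t_hi = target
    span = (hi - lo) or 1.0  # avoid division by zero for constant features
    return [t_lo + (v - lo) / span * (t_hi - t_lo) for v in values]
```

Fitting the range once on the training set and reusing it at inference keeps preprocessing consistent; pass `target=(-1.0, 1.0)` if your decoder ends in a tanh-style output.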

Computational Resources

Training AAEs requires:

GPU for reasonable training times

Memory for storing three networks

Storage for checkpoints and generated samples

Consider:

Starting with smaller models on subset of data

Using mixed precision training to save memory

Distributing training across multiple GPUs if available

Industry Adoption and Market Outlook

The generative AI market, which includes technologies like AAEs, is experiencing explosive growth.

Market Size and Growth

The global generative AI market was forecast to increase between 2024 and 2030 by 320 billion U.S. dollars (+887.41%), reaching 356.05 billion dollars by 2030 according to Statista data from April 2024.

A separate estimate valued the global generative AI market at USD 16.87 billion in 2024 and projects it to reach USD 109.37 billion by 2030, growing at a CAGR of 37.6% from 2025 to 2030.

Technology Adoption

By technology segment, the generative AI market includes Transformers, Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Diffusion Networks, with data showing year-over-year growth for VAEs at 9.5% from 2024-2029.

According to a July 2024 report from The Business Research Company, the generative AI market grew from $15.01 billion in 2023 to $23.1 billion in 2024 and is projected to reach $90.61 billion by 2028 at a CAGR of 43.28%.

Regional Distribution

In a global comparison, the United States is the largest market, at $27.51 billion in 2025.

North America dominated the generative AI market with a 40.8% share in 2024, driven by leading companies researching and developing generative AI applications.

Industry Applications

Key sectors adopting AAEs and related generative models include:

Healthcare and Life Sciences:

Drug discovery pipelines

Medical image analysis

Personalized medicine

Biomarker development

Manufacturing:

Quality control and defect detection

Predictive maintenance

Process optimization

Synthetic data generation for testing

Financial Services:

Fraud detection systems

Risk modeling

Algorithmic trading

Anomaly detection in transactions

Research and Development:

Materials science

Molecular design

Synthetic data for AI training

Simulation and modeling

Investment Trends

Codeium, specializing in generative AI-powered coding tools, secured $65 million in Series B funding in January 2024, led by Kleiner Perkins. This investment supports advancement of code-biased Large Language Models and demonstrates continued investor confidence in generative AI technologies.

Common Pitfalls and How to Avoid Them

Learn from others' mistakes to deploy AAEs successfully.

Pitfall 1: Imbalanced Loss Weights

Problem: Reconstruction loss dominates, discriminator barely trains, or vice versa.

Solution:

Start with equal weights

Monitor both losses during training

Adjust if one component stops improving

Use validation metrics to guide tuning

Pitfall 2: Wrong Prior Distribution

Problem: Chosen prior doesn't match data characteristics.

Solution:

Analyze your data's natural structure

Use Gaussian for continuous, unimodal data

Consider mixture of Gaussians for multi-modal data

Match prior complexity to data complexity

Pitfall 3: Discriminator Too Strong

Problem: Discriminator perfectly separates real/fake, encoder receives no gradient signal.

Solution:

Update discriminator less frequently than encoder

Use label smoothing (0.9 instead of 1.0 for real labels)

Add noise to discriminator inputs

Use spectral normalization
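Label smoothing is the easiest of these fixes to show in code. The sketch below is a hypothetical helper that computes binary cross-entropy with the "real" target softened to 0.9; only the real side is smoothed, which is a common convention and an assumption here.

```python
import math

def smoothed_bce(probs, is_real, real_label=0.9):
    """BCE where 'real' targets are softened from 1.0 to real_label;
    'fake' targets stay at 0.0."""
    target = real_label if is_real else 0.0
    total = 0.0
    for p in probs:
        p = min(max(p, 1e-12), 1.0 - 1e-12)  # clamp to avoid log(0)
        total += -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))
    return total / len(probs)
```

With a 0.9 target, the loss is lowest when the discriminator outputs 0.9 rather than 1.0, so it is discouraged from becoming fully confident and the encoder keeps receiving a usable gradient.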

Pitfall 4: Insufficient Latent Dimensions

Problem: Latent space too small, information bottleneck causes poor reconstruction.

Solution:

Start with higher dimensions, gradually reduce

Monitor reconstruction quality

Visualize latent space to check for crowding

Balance compression with information preservation

Pitfall 5: Poor Data Preprocessing

Problem: Unnormalized or improperly scaled data causes training instability.

Solution:

Normalize inputs to consistent range

Handle outliers appropriately

Ensure consistent preprocessing in training and inference

Document preprocessing steps

Pitfall 6: Ignoring Mode Collapse

Problem: Encoder maps many inputs to same latent code, decoder generates limited variety.

Solution:

Monitor diversity metrics on generated samples

Use minibatch discrimination

Try different prior distributions

Ensure sufficient model capacity

Pitfall 7: Overfitting to Training Data

Problem: Model memorizes training examples rather than learning generalizable patterns.

Solution:

Use validation set to monitor generalization

Add regularization (dropout, weight decay)

Generate samples not in training set

Consider data augmentation

Future Directions

Several exciting research directions promise to advance AAE capabilities.

Enhanced Stability and Convergence

Researchers work on:

Better training algorithms that reduce oscillation

Adaptive learning rate schedules specific to AAEs

Theoretical understanding of convergence properties

Novel regularization techniques

Conditional Generation

Extending AAEs with conditional capabilities enables:

Generating samples with specific attributes

Controlled editing of existing samples

Multi-modal generation

Fine-grained attribute manipulation

Larger-Scale Applications

As computational resources grow:

AAEs on higher-resolution images

Longer sequence modeling

Multi-modal data (combining text, image, audio)

Real-time applications

Integration with Other Techniques

Hybrid approaches combining AAEs with:

Attention mechanisms

Transformer architectures

Diffusion models

Neural architecture search

Explainability and Interpretability

Making AAEs more interpretable through:

Disentangled representations

Causal understanding of latent factors

Visualization techniques

Theoretical foundations

Domain-Specific Architectures

Specialized AAEs for:

3D data (point clouds, meshes)

Graph-structured data

Scientific domains (protein folding, material design)

Edge computing and mobile devices

FAQ

1. What's the main difference between AAEs and VAEs?

AAEs use adversarial training to match the latent distribution to a prior, while VAEs use KL divergence. AAEs can impose arbitrary prior distributions and significantly outperformed VAEs on MNIST semi-supervised tasks with 100 and 1000 labels. AAEs also train end-to-end rather than layer-by-layer.

2. Can AAEs generate higher quality images than VAEs?

Generally yes. AAE models learn manifolds with sharp transitions indicating filled coding space with no "holes," while VAEs exhibit systematic differences and gaps in coverage. However, GANs still produce sharper, higher-quality samples than both AAEs and VAEs.

3. How much training data do I need for an AAE?

It depends on your task complexity. For semi-supervised learning, AAEs excel with limited labeled data. The original AAE paper demonstrated competitive results on MNIST (60,000 training images), Street View House Numbers, and Toronto Face datasets. Start with thousands of samples and scale up as needed.

4. What programming frameworks support AAE implementation?

AAEs can be implemented in:

PyTorch (most popular currently)

TensorFlow/Keras

JAX

Theano (older implementations)

Most modern implementations use PyTorch for flexibility and ease of debugging.

5. Are AAEs suitable for production applications?

Yes, but with caveats. Molecules generated by AAEs have been tested in vitro for drug discovery, and AAE-based methods have been applied to defect detection in semiconductor manufacturing. However, training instability and hyperparameter sensitivity require careful engineering for production deployment.

6. How do I choose between AAE, VAE, and GAN?

Choose based on your priorities:

AAE: Need both encoding and generation, semi-supervised learning, balance between stability and quality

VAE: Need theoretical guarantees, probabilistic interpretation, simplicity

GAN: Pure generation, highest image quality, have resources for extensive tuning

7. Can AAEs work with small datasets?

Yes, especially for semi-supervised scenarios. AAEs can leverage both labeled and unlabeled data effectively. Techniques like data augmentation, transfer learning, and careful regularization help when data is limited.

8. What's the typical training time for an AAE?

Highly variable based on:

Dataset size and complexity

Model architecture

Hardware (GPU vs CPU)

Desired quality

On a modern GPU, expect:

Simple datasets (MNIST): Minutes to hours

Complex datasets (high-res images): Hours to days

Large-scale applications: Days to weeks

9. How do I debug AAE training problems?

Key debugging steps:

Train autoencoder alone first (should reconstruct well)

Add adversarial component gradually

Monitor discriminator accuracy (target 50-60%)

Visualize generated samples frequently

Check latent space distribution matches prior

Use smaller models for faster iteration
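Two of these checks are easy to automate. The sketch below uses only the Python standard library; `discriminator_accuracy` and `latent_summary` are hypothetical helper names, and the `codes` list is a stand-in for real encoder outputs (drawn here from N(0, 1) so the check passes). It assumes a standard Gaussian prior and a discriminator that outputs probabilities.

```python
import random
import statistics

def discriminator_accuracy(d_on_prior, d_on_codes, threshold=0.5):
    """Fraction of correct calls: prior samples should score >= threshold, codes below it."""
    correct = sum(p >= threshold for p in d_on_prior) + sum(q < threshold for q in d_on_codes)
    return correct / (len(d_on_prior) + len(d_on_codes))

def latent_summary(codes):
    """Per-dimension (mean, standard deviation) for a batch of latent codes."""
    dims = list(zip(*codes))
    return [(statistics.fmean(d), statistics.pstdev(d)) for d in dims]

rng = random.Random(0)
# Stand-in for encoder outputs; replace with codes from your own encoder.
codes = [[rng.gauss(0.0, 1.0) for _ in range(2)] for _ in range(5000)]

for mean, std in latent_summary(codes):
    # With a N(0, I) prior, each dimension should sit near mean 0 and std 1.
    print(f"mean={mean:+.3f} std={std:.3f}")

# Accuracy far above the 50-60% range suggests the discriminator is winning.
print(discriminator_accuracy([0.9, 0.6], [0.4, 0.7]))
```

Means and standard deviations are only a coarse check. Two distributions can share both and still differ in shape, so a scatter plot or histogram of the codes is still worth a look.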

10. Are there pre-trained AAE models available?

Pre-trained AAEs are less common than pre-trained VAEs or GANs. Most applications require training on domain-specific data. However, you can find:

Research code with trained checkpoints

Transfer learning from related architectures

Community implementations on GitHub

11. Can AAEs handle multi-modal data?

Yes, with appropriate architecture modifications. Researchers have developed AAEs for:

Images with labels

Text and images together

Audio and visual data

Multiple sensor modalities

The key is designing encoders/decoders that handle each modality appropriately.

12. What causes mode collapse in AAEs and how do I fix it?

Mode collapse occurs when the encoder maps diverse inputs to similar latent codes. AAEs are less prone to mode collapse than GANs, but it can still happen. Solutions include:

Increase encoder capacity

Use minibatch discrimination

Add regularization to encourage diversity

Try different prior distributions

Monitor generated sample diversity
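One simple way to monitor diversity is to track the average distance between samples (or between latent codes) over training. The sketch below is a rough heuristic, not a standard metric from the AAE literature; `mean_pairwise_distance` is a hypothetical helper, and the two sample sets are synthetic stand-ins for model outputs.

```python
import math
import random

def mean_pairwise_distance(samples):
    """Average Euclidean distance over all pairs; values near 0 suggest collapse."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    total = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            total += math.dist(samples[i], samples[j])
    return total / (n * (n - 1) / 2)

rng = random.Random(0)
# Synthetic stand-ins: one spread-out batch and one nearly identical batch.
diverse = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(200)]
collapsed = [[rng.gauss(0.0, 0.01), rng.gauss(0.0, 0.01)] for _ in range(200)]

print(mean_pairwise_distance(diverse))
print(mean_pairwise_distance(collapsed))
```

A sudden drop in this number between checkpoints is a cue to inspect samples by eye. What counts as "too low" depends on your data scale, so compare against the same statistic computed on real data.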

13. How important is the choice of prior distribution?

Very important. AAEs' ability to impose arbitrary prior distributions is a key advantage over VAEs. Match your prior to data characteristics:

Gaussian for continuous, unimodal data

Mixture of Gaussians for clustered data

Categorical for discrete attributes

Custom priors encoding domain knowledge
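Because the discriminator only needs samples from the prior, switching priors mostly means switching the sampling function. Here is a minimal standard-library sketch of the first three options. The function names are illustrative, and the mixture layout (component means evenly spaced on a circle, with the given radius and spread) is an assumption chosen for simplicity rather than the exact configuration from any paper.

```python
import math
import random

def sample_gaussian(rng, dim):
    """Standard Gaussian prior: suits continuous, unimodal latent codes."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

def sample_mixture_of_gaussians(rng, n_components=10, radius=4.0, std=0.5):
    """2-D mixture with component means evenly spaced on a circle (assumed layout)."""
    k = rng.randrange(n_components)
    angle = 2.0 * math.pi * k / n_components
    return [radius * math.cos(angle) + rng.gauss(0.0, std),
            radius * math.sin(angle) + rng.gauss(0.0, std)]

def sample_categorical(rng, n_classes):
    """One-hot vector drawn uniformly: suits discrete attributes such as class labels."""
    k = rng.randrange(n_classes)
    return [1.0 if i == k else 0.0 for i in range(n_classes)]

rng = random.Random(0)
print(sample_gaussian(rng, 4))
print(sample_mixture_of_gaussians(rng))
print(sample_categorical(rng, 10))
```

In a training loop, each discriminator step would draw a fresh batch from whichever sampler you choose and treat it as the "real" input.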

14. Can I use AAEs for real-time applications?

Once trained, AAEs can perform inference quickly:

Encoding: Fast (single forward pass)

Decoding: Fast (single forward pass)

Generation: Fast (sample prior, decode)

Real-time deployment is feasible with proper optimization (model pruning, quantization, efficient implementations).
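The generation path really is that short. The sketch below shows it with a toy one-layer decoder in plain Python; `WEIGHTS` and `BIAS` are made-up placeholder values standing in for a trained network, and `decode` and `generate` are hypothetical names.

```python
import math
import random

# Placeholder parameters for a 2-D latent space and 4 output values.
# In practice these come from a trained decoder, not hand-written numbers.
WEIGHTS = [[0.5, -0.2], [0.1, 0.8], [-0.6, 0.3], [0.9, 0.4]]
BIAS = [0.0, 0.1, -0.1, 0.2]

def decode(z, weights, bias):
    """Toy decoder: sigmoid(W z + b), one output per weight row."""
    out = []
    for row, b in zip(weights, bias):
        logit = sum(w * zi for w, zi in zip(row, z)) + b
        out.append(1.0 / (1.0 + math.exp(-logit)))
    return out

def generate(rng, weights, bias, latent_dim):
    """Generation = sample the prior, then run a single decoder pass."""
    z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
    return decode(z, weights, bias)

rng = random.Random(0)
print(generate(rng, WEIGHTS, BIAS, latent_dim=2))
```

No encoder or discriminator is involved at generation time, which is part of why inference cost is dominated by one forward pass through the decoder.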

15. What's the state-of-the-art performance for AAEs?

Multi-adversarial Autoencoders (MAAE), from October 2024, report improved stability and faster convergence, backed by experiments on MNIST, CIFAR10, and CelebA. The AVATAR framework, from January 2025, reports significant improvements in the quality and utility of generated time series.

Key Takeaways

Adversarial Autoencoders combine autoencoders' compression capabilities with adversarial training's generative power to create robust generative models

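Introduced by Alireza Makhzani and colleagues in November 2015, AAEs have since been applied in areas such as drug discovery and manufacturing defect detection, though production use still demands careful engineering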

Training involves two alternating phases: reconstruction (autoencoder) and regularization (adversarial matching)

AAEs significantly outperform VAEs in semi-supervised learning tasks and can impose arbitrary prior distributions

Real-world applications span drug discovery, anomaly detection, image generation, and time series forecasting

Successfully tested in vitro for generating novel drug candidates with good activity and selectivity

The generative AI market is growing rapidly and is projected to reach hundreds of billions of dollars by 2030

Recent innovations like MAAE (2024) and AVATAR (2025) improve stability, speed, and application scope

Implementation requires careful hyperparameter tuning, appropriate architecture design, and monitoring for training stability

AAEs offer better stability than GANs while maintaining superior performance compared to VAEs in many tasks

Actionable Next Steps

Start with the Basics: Implement a simple AAE on MNIST using PyTorch or TensorFlow to understand the training dynamics

Study the Original Paper: Read "Adversarial Autoencoders" by Makhzani et al. (2015) available on arXiv for theoretical foundations

Explore GitHub Implementations: Find community code examples and pre-trained models to accelerate your learning

Experiment with Hyperparameters: Test different learning rates, batch sizes, and loss weights on your chosen dataset

Monitor Training Carefully: Set up logging and visualization to track reconstruction loss, discriminator accuracy, and sample quality

Consider Your Application: Determine if AAEs fit your needs better than VAEs or GANs based on requirements for encoding, generation quality, and training stability

Start Simple, Scale Gradually: Begin with small models and datasets, then increase complexity as you understand the dynamics

Join the Community: Participate in forums, read recent papers, and contribute to open-source implementations

Explore Recent Innovations: Investigate MAAE and AVATAR frameworks for state-of-the-art techniques

Apply to Real Problems: Once comfortable, tackle domain-specific challenges in your field of interest

Glossary

Adversarial Training: A training method where two neural networks compete against each other, one generating samples and the other trying to distinguish real from generated samples.

Aggregated Posterior: The distribution of latent codes produced by encoding many data points through the encoder network.

Autoencoder: A neural network that learns to compress data into a latent representation and reconstruct the original input from this compressed form.

Convolutional Neural Network (CNN): A type of neural network especially effective for processing grid-like data such as images, using convolutional layers.

Decoder: The component of an autoencoder that reconstructs the original input from the latent representation.

Discriminator: A neural network that attempts to distinguish between real samples and generated samples in adversarial training.

Encoder: The component of an autoencoder that compresses input data into a latent representation.

Generative Adversarial Network (GAN): A framework where a generator network creates fake samples and a discriminator network tries to identify them, training both through competition.

Hyperparameter: A configuration setting for the model that is set before training (like learning rate, batch size) rather than learned from data.

Latent Space: The compressed, lower-dimensional representation learned by the encoder, capturing important features of the data.

Loss Function: A mathematical function that measures how far the model's predictions are from the desired output, used to guide training.

Mode Collapse: A failure mode in generative models where the generator produces limited variety in its outputs.

Prior Distribution: A probability distribution (often Gaussian) that we want the latent space to follow, enabling controlled sampling for generation.

Reconstruction Loss: The difference between the original input and the autoencoder's reconstructed output, measuring reconstruction quality.

Regularization: Techniques that prevent overfitting and encourage the model to learn generalizable patterns rather than memorizing training data.

Semi-Supervised Learning: Machine learning that uses both labeled and unlabeled data, leveraging abundant unlabeled data with limited labels.

Stochastic Gradient Descent (SGD): An optimization algorithm that updates model parameters using gradients computed on small batches of data.

Variational Autoencoder (VAE): A type of autoencoder that uses probabilistic encoding and KL divergence to regularize the latent space.

Sources & References

Makhzani, A., Shlens, J., Jaitly, N., & Goodfellow, I. (2015). Adversarial Autoencoders. arXiv preprint arXiv:1511.05644. https://arxiv.org/abs/1511.05644

Wu, X., & Jang, H. (2024). Multi-adversarial autoencoders: Stable, faster and self-adaptive representation learning. Expert Systems with Applications, 262, 125554. https://www.sciencedirect.com/science/article/abs/pii/S0957417424024217

Eskandari Nasab, M. R., Hamdi, S. M., & Filali Boubrahimi, S. (2025). AVATAR: Adversarial Autoencoders with Autoregressive Refinement for Time Series Generation. arXiv preprint arXiv:2501.01649. https://arxiv.org/abs/2501.01649

Kadurin, A., Aliper, A., Kazennov, A., Mamoshina, P., Vanhaelen, Q., Khrabrov, K., & Zhavoronkov, A. (2017). The cornucopia of meaningful leads: Applying deep adversarial autoencoders for new molecule development in oncology. Oncotarget, 8(7), 10883-10890. https://www.oncotarget.com/article/14073/text/

Polykovskiy, D., Zhebrak, A., Vetrov, D., Ivanenkov, Y., Aladinskiy, V., Bozdaganyan, M., Osipov, S., Kvetkova, D., Bezrukov, D., Aladinskaya, A., & Mamoshina, P. (2018). Entangled conditional adversarial autoencoder for de novo drug discovery. Molecular Pharmaceutics, 15(10), 4398-4405. https://pubs.acs.org/doi/10.1021/acs.molpharmaceut.8b00839

Kadurin, A., Nikolenko, S., Khrabrov, K., Aliper, A., & Zhavoronkov, A. (2017). druGAN: An Advanced Generative Adversarial Autoencoder Model for de Novo Generation of New Molecules with Desired Molecular Properties in Silico. Molecular Pharmaceutics, 14(9), 3098-3104. https://pubs.acs.org/doi/10.1021/acs.molpharmaceut.7b00346

Shayakhmetov, R., Kuznetsov, M., Zhebrak, A., Kadurin, A., Nikolenko, S., Aliper, A., & Polykovskiy, D. (2020). Molecular Generation for Desired Transcriptome Changes With Adversarial Autoencoders. Frontiers in Pharmacology, 11, 269. https://www.frontiersin.org/journals/pharmacology/articles/10.3389/fphar.2020.00269/full

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., & Bengio, Y. (2014). Generative Adversarial Networks. Advances in Neural Information Processing Systems (NIPS), 27. https://arxiv.org/abs/1406.2661

Kingma, D. P., & Welling, M. (2013). Auto-Encoding Variational Bayes. arXiv preprint arXiv:1312.6114. https://arxiv.org/abs/1312.6114

GeeksforGeeks. (2025, July 23). Adversarial Auto Encoder (AAE). https://www.geeksforgeeks.org/artificial-intelligence/adversarial-auto-encoder-aae/

Rousseau, T., Venture, G., & Hernandez, V. (2024, December). Latent Space Representation of Human Movement: Assessing the Effects of Fatigue. Sensors-Basel. https://www.researchgate.net/figure/Project-Overview-The-adversarial-autoencoder-AAE-is-trained-by-considering-a_fig1_361349893

Statista. (2024, April 11). Generative artificial intelligence (AI) market size worldwide from 2020 to 2030. https://www.statista.com/forecasts/1449838/generative-ai-market-size-worldwide

Grand View Research. (2024). Generative AI Market Size, Share & Trends Analysis Report. https://www.grandviewresearch.com/industry-analysis/generative-ai-market-report

The Business Research Company. (2024, July 9). Generative AI Market to Grow 43% Annually from 2024 to 2028. https://blog.marketresearch.com/generative-ai-market-to-grow-43-annually-from-2024-to-2028

Technavio. (2024). Generative Artificial Intelligence (AI) Market Growth Analysis - Size and Forecast 2025-2029. https://www.technavio.com/report/generative-ai-market-analysis

TechTarget. (n.d.). GANs vs. VAEs: What is the best generative AI approach? https://www.techtarget.com/searchenterpriseai/feature/GANs-vs-VAEs-What-is-the-best-generative-AI-approach

Coursera. (2025, May 1). VAE vs. GAN: What's the Difference? https://www.coursera.org/articles/vae-vs-gan

Baeldung. (2024, March 18). VAE Vs. GAN For Image Generation. https://www.baeldung.com/cs/vae-vs-gan-image-generation

Generative AI Lab. (2024, August 19). Comparing Diffusion, GAN, and VAE Techniques. https://generativeailab.org/l/generative-ai/a-tale-of-three-generative-models-comparing-diffusion-gan-and-vae-techniques/569/

Activeloop. (n.d.). What is Adversarial Autoencoders? https://www.activeloop.ai/resources/glossary/adversarial-autoencoders-aae/
