What Is a Deep Convolutional Generative Adversarial Network (DCGAN)?

- Dec 16, 2025


Imagine training a computer to dream up completely new faces, medical scans, or fashion designs that have never existed. In late 2015, three researchers did exactly that: they taught machines not just to recognize images, but to create convincing new ones from scratch. Their breakthrough, the Deep Convolutional Generative Adversarial Network (DCGAN), helped spark a wave of generative modeling research that reshaped how we think about artificial intelligence. Today, with the generative AI market valued at over $67 billion in 2024 and projected to approach $1 trillion by 2032, DCGANs remain a foundational architecture behind applications ranging from medical diagnostics to digital fashion design.


TL;DR

DCGANs are AI models that generate realistic images by pitting two neural networks against each other in a continuous game of creator versus critic.

Introduced by Radford et al. in November 2015, DCGANs revolutionized image generation by using convolutional layers to make GAN training markedly more stable and scalable.

The generative AI market reached $67.18 billion in 2024, and GANs (including DCGANs) accounted for over 74% of market share in 2023.

DCGANs power real-world applications in medical imaging, fashion design, drug discovery, and biometric security systems.

While newer architectures like StyleGAN achieve better quality scores, DCGANs remain widely used for their stability, simplicity, and proven track record.

The architecture uses specific design principles: strided convolutions, batch normalization, ReLU/LeakyReLU activation functions, and elimination of fully connected layers.

A Deep Convolutional Generative Adversarial Network (DCGAN) is an artificial intelligence architecture that generates photorealistic images by using two competing convolutional neural networks—a generator that creates images and a discriminator that evaluates them. Introduced in 2015 by Radford et al., DCGANs stabilized GAN training and enabled scalable image synthesis across diverse applications from medical imaging to fashion design.


What is a Deep Convolutional Generative Adversarial Network (DCGAN)? Core Definition

A Deep Convolutional Generative Adversarial Network (DCGAN) is a specialized type of generative adversarial network (GAN) architecture that uses convolutional neural networks (CNNs) to generate synthetic images. Published in November 2015 by Alec Radford, Luke Metz, and Soumith Chintala in their landmark paper "Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks" (arXiv:1511.06434), DCGANs substantially reduced the training instability that plagued earlier GAN implementations.

The core innovation lies in replacing fully connected layers with convolutional layers throughout both the generator and discriminator networks. This architectural shift transformed GANs from temperamental research curiosities into practical tools for generating high-quality synthetic images at scale.

The generative AI market has experienced explosive growth, reaching $67.18 billion in 2024 and projected to hit $967.65 billion by 2032, growing at a compound annual growth rate (CAGR) of 39.6% according to Fortune Business Insights (2024). Within this broader market, Generative Adversarial Networks—with DCGANs as a foundational architecture—captured over 74% of market share in 2023, demonstrating the lasting impact of this technology.

The History Behind DCGANs

The GAN Revolution (2014)

The story begins in June 2014 when Ian Goodfellow and his colleagues at the University of Montreal introduced Generative Adversarial Networks in their paper "Generative Adversarial Nets." The concept was revolutionary: pit two neural networks against each other in an adversarial game where one generates fake data while the other tries to detect it.

However, the original GAN architecture faced significant challenges. Training was notoriously unstable. The networks used fully connected layers unsuitable for processing image data. Mode collapse—where the generator produces only a limited variety of outputs—occurred frequently. Researchers struggled to scale GANs to generate higher-resolution, more complex images.

The DCGAN Breakthrough (November 2015)

About a year and a half after the original GAN paper, Radford and Metz (Indico Research) and Chintala (Facebook AI Research) published the DCGAN architecture. Their paper made three critical contributions:

Architectural Guidelines: They established specific design principles that made GAN training stable and reproducible across different datasets.

Visual Quality Leap: DCGAN generated bedroom images at 64×64 resolution that looked remarkably realistic—a significant improvement over the blurry, low-resolution outputs of earlier GANs.

Learned Representations: The paper demonstrated that DCGANs learn hierarchical representations from object parts to full scenes, making the latent space interpretable and useful for downstream tasks.

The paper has since become one of the most cited works in deep learning, with over 30,000 citations as of 2024, according to Google Scholar.

Industry Adoption Timeline

2016-2017: Medical imaging researchers began applying DCGANs to generate synthetic training data for rare diseases.

2018-2019: Fashion industry started experimenting with DCGAN-powered design tools. The University of Pittsburgh published research on using DCGANs for drug discovery in Molecular Pharmaceutics (November 2019).

2020-2022: The COVID-19 pandemic accelerated DCGAN adoption in medical imaging for data augmentation, and studies on this theme continued to appear afterward, for example in Applied Sciences (November 2023).

2023-2024: Mainstream integration across industries. Discover Artificial Intelligence reported in May 2024 that DCGANs remain among the most widely used architectures in biometric systems and medical imaging applications.

2024-2025: The technology continues evolving, with recent publications in March 2025 showing DCGAN applications in indoor pollutant dispersion prediction (ScienceDirect) and October 2025 research on deepfake detection using Adaptive-DCGAN (Discover Applied Sciences).

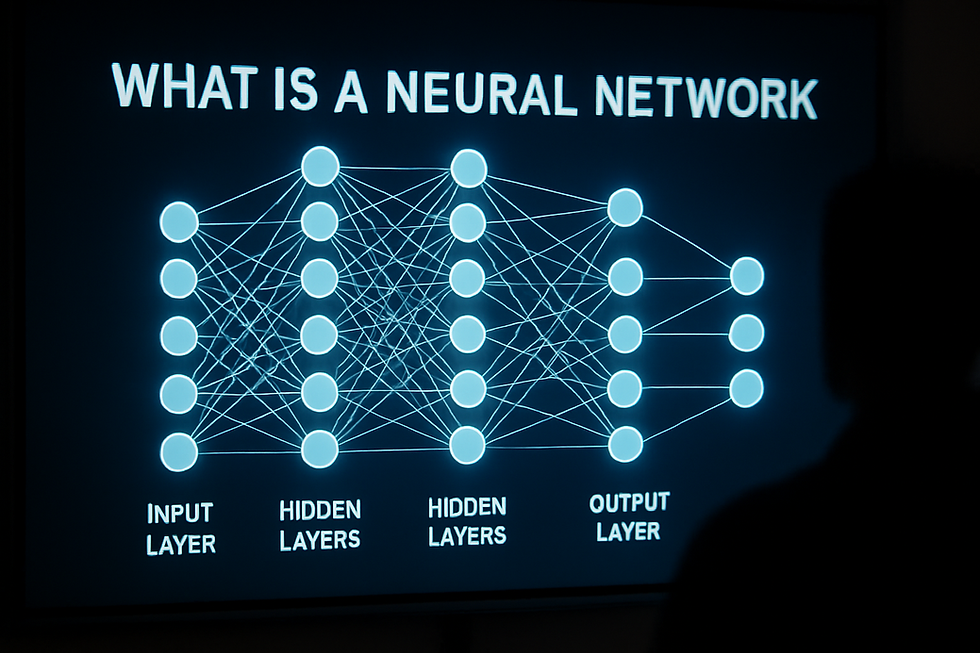

How DCGANs Work: Architecture Explained

The Adversarial Game

DCGANs operate through a competitive process involving two neural networks:

The Generator (G): Takes random noise as input and transforms it into synthetic images. Think of it as a skilled forger trying to create counterfeit images that look authentic.

The Discriminator (D): Receives both real images from the training dataset and fake images from the generator. Its job is to classify each image as real or fake—like an art expert detecting forgeries.

These two networks play a minimax game. The generator tries to maximize the discriminator's error rate (fooling it into classifying fake images as real), while the discriminator tries to minimize its error rate (correctly identifying fake images). Through this adversarial training both networks improve together; ideally, training reaches a point where the discriminator can no longer reliably tell generated images from real ones.

Generator Architecture

The DCGAN generator uses a series of transposed convolutions (also called fractional-strided convolutions or deconvolutions) to progressively upsample low-dimensional noise into full-resolution images.

Input: A 100-dimensional noise vector sampled from a uniform or normal distribution.

Processing Layers:

Dense Layer: Projects the noise vector with a fully connected layer and reshapes the result into a small spatial representation (typically 4×4×1024 or similar).

Upsampling Blocks: Multiple transposed convolutional layers, each doubling the spatial dimensions while halving the number of feature maps.

Batch Normalization: Applied after each convolutional layer (except the output layer) to stabilize training.

Activation Functions: ReLU (Rectified Linear Unit) after each layer except the final one.

Output Layer: Tanh activation producing the final image (typically normalized to [-1, 1] range).

Example Architecture Flow:

Noise (100,) → Dense → Reshape (4, 4, 1024)

Transpose Conv (8, 8, 512) + BatchNorm + ReLU

Transpose Conv (16, 16, 256) + BatchNorm + ReLU

Transpose Conv (32, 32, 128) + BatchNorm + ReLU

Transpose Conv (64, 64, 3) + Tanh
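The spatial sizes in this flow follow from the transposed-convolution output formula. The sketch below assumes kernel size 4, stride 2 and padding 1 (a common choice in DCGAN implementations, not something the flow above specifies) and only tracks tensor shapes; it does not build a network. The function names are illustrative.

```python
def conv_transpose_out(size: int, kernel: int = 4, stride: int = 2,
                       padding: int = 1, output_padding: int = 0) -> int:
    """Spatial output size of a transposed convolution (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def generator_shapes(start: int = 4, start_channels: int = 1024,
                     out_channels: int = 3, n_blocks: int = 4) -> list[tuple[int, int, int]]:
    """Track (height, width, channels) through the upsampling blocks.

    Each block doubles height and width and halves the channel count,
    except the last block, which emits a 3-channel (RGB) image.
    """
    shapes = [(start, start, start_channels)]
    size, channels = start, start_channels
    for block in range(n_blocks):
        size = conv_transpose_out(size)
        channels = out_channels if block == n_blocks - 1 else channels // 2
        shapes.append((size, size, channels))
    return shapes


for shape in generator_shapes():
    print(shape)
```

With these settings each block maps a side length n to 2n, reproducing 4 → 8 → 16 → 32 → 64 and ending at (64, 64, 3).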

Discriminator Architecture

The discriminator mirrors the generator, using strided convolutions to downsample an image into a single classification score.

Input: An image (either real from the dataset or fake from the generator).

Processing Layers:

Downsampling Blocks: Multiple strided convolutional layers, each halving spatial dimensions while increasing feature maps.

Batch Normalization: Applied to all layers except the first and last.

Activation Functions: LeakyReLU (with slope 0.2) throughout, allowing small gradient flow for negative values.

Output Layer: Single neuron with sigmoid activation producing a probability score (0 = fake, 1 = real).

Example Architecture Flow:

Image (64, 64, 3) → Conv stride 2 (32, 32, 128) + LeakyReLU

Conv stride 2 (16, 16, 256) + BatchNorm + LeakyReLU

Conv stride 2 (8, 8, 512) + BatchNorm + LeakyReLU

Conv stride 2 (4, 4, 1024) + BatchNorm + LeakyReLU

Flatten → Dense (1) + Sigmoid
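The discriminator's shapes can be checked the same way with the ordinary convolution output formula. This sketch again assumes kernel 4, stride 2 and padding 1 (illustrative values, not specified above) and doubles the channel count after each block to match the flow.

```python
def conv_out(size: int, kernel: int = 4, stride: int = 2, padding: int = 1) -> int:
    """Spatial output size of a strided convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def discriminator_shapes(image_size: int = 64, in_channels: int = 3,
                         first_channels: int = 128, n_blocks: int = 4) -> list[tuple[int, int, int]]:
    """Track (height, width, channels) as each block halves the spatial size."""
    shapes = [(image_size, image_size, in_channels)]
    size, channels = image_size, first_channels
    for _ in range(n_blocks):
        size = conv_out(size)
        shapes.append((size, size, channels))
        channels *= 2
    return shapes


shapes = discriminator_shapes()
h, w, c = shapes[-1]
print(shapes)
print("features fed to the final dense unit:", h * w * c)
```

The last feature map is 4×4×1024, so the flatten step hands 16,384 values to the single sigmoid output unit.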

Key Architectural Innovations

The DCGAN paper introduced five specific architectural guidelines that became standard practice across the GAN research community:

1. Replace Pooling with Strided Convolutions

Traditional CNNs use pooling layers (max pooling or average pooling) to downsample feature maps. DCGAN eliminates all pooling layers, using strided convolutions in the discriminator and fractional-strided convolutions (transpose convolutions) in the generator instead.

Why This Matters: Pooling discards spatial information, which can create artifacts in generated images. Strided convolutions learn the downsampling transformation, preserving spatial structure more effectively.
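A one-dimensional toy makes the difference concrete. Max pooling applies a fixed rule, while a stride-2 convolution applies weights that training can adjust: with kernel [0.5, 0.5] it happens to reproduce average pooling, and other kernels give other downsamplings. This is a plain-Python illustration, not how a deep learning framework implements convolutions.

```python
def max_pool1d(signal: list[float], size: int = 2) -> list[float]:
    """Fixed downsampling: keep the largest value in each non-overlapping window."""
    return [max(signal[i:i + size]) for i in range(0, len(signal) - size + 1, size)]


def strided_conv1d(signal: list[float], kernel: list[float], stride: int = 2) -> list[float]:
    """Downsampling whose behavior depends on the (learnable) kernel weights."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k))
            for i in range(0, len(signal) - k + 1, stride)]


signal = [1.0, 3.0, 2.0, 8.0]
print(max_pool1d(signal))                   # [3.0, 8.0]
print(strided_conv1d(signal, [0.5, 0.5]))   # [2.0, 5.0]  (acts like average pooling)
print(strided_conv1d(signal, [1.0, -1.0]))  # [-2.0, -6.0] (responds to local change instead)
```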

2. Use Batch Normalization

Batch normalization normalizes layer inputs to have zero mean and unit variance within each training batch. DCGAN applies it to nearly all layers in both networks (except the generator output and discriminator input).

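Impact: Batch normalization (introduced by Ioffe and Szegedy in 2015) is credited with reducing internal covariate shift. In GANs it stabilizes training by mitigating exploding and vanishing gradients, allows higher learning rates, and acts as a mild regularizer that reduces the need for dropout. Radford et al. also reported that it helped prevent the generator from collapsing all samples to a single point.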

According to research published in Neural Computing and Applications (October 2023), batch normalization is one reason DCGANs remain popular—it makes training more forgiving of hyperparameter choices compared to the original GAN.
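The per-feature computation is small enough to write out. The sketch below normalizes one feature across a mini-batch using the batch mean and (biased) variance, then applies the learnable scale gamma and shift beta that standard batch normalization includes. The running averages that frameworks keep for inference are omitted for brevity.

```python
import math


def batch_norm(batch: list[float], gamma: float = 1.0, beta: float = 0.0,
               eps: float = 1e-5) -> list[float]:
    """Training-mode batch normalization for a single feature."""
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)  # biased variance
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]


out = batch_norm([1.0, 2.0, 3.0, 4.0, 5.0])
print([round(v, 3) for v in out])  # [-1.414, -0.707, 0.0, 0.707, 1.414]
```

The small eps keeps the division safe when a batch has near-zero variance, which is one reason very small batches make batch statistics unreliable.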

3. Eliminate Fully Connected Hidden Layers

DCGAN removes the fully connected hidden layers. The only dense operations left are the generator's input projection, which maps the noise vector to the first feature map, and the discriminator's final single-unit output; everything in between is convolutional.

Benefits: Reduces the total number of parameters (fewer computations, faster training), makes the network easier to adapt to different image sizes, helps the model learn hierarchical spatial features, and lowers the risk of overfitting on smaller datasets.

4. Use ReLU in Generator, LeakyReLU in Discriminator

Generator: Uses ReLU activation for all layers except the output (which uses Tanh). ReLU helps the model learn quickly during early training and mitigates vanishing gradients in deep networks.

Discriminator: Uses LeakyReLU with a small negative slope (typically 0.2). The leak allows small gradients to flow even for negative values, preventing "dead neurons" that stop learning entirely.

This asymmetric activation strategy proved crucial for stable adversarial training.
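The difference between the two activations is easiest to see in their gradients for negative inputs. This plain-Python sketch uses the 0.2 slope mentioned above; the gradient at exactly zero is a convention, taken here as the negative-side value.

```python
def relu(x: float) -> float:
    return max(0.0, x)


def leaky_relu(x: float, slope: float = 0.2) -> float:
    return x if x > 0 else slope * x


def relu_grad(x: float) -> float:
    return 1.0 if x > 0 else 0.0


def leaky_relu_grad(x: float, slope: float = 0.2) -> float:
    return 1.0 if x > 0 else slope


for x in (-2.0, 3.0):
    print(x, relu(x), relu_grad(x), leaky_relu(x), leaky_relu_grad(x))
```

For x = -2.0, ReLU passes back a gradient of 0 (the unit stops learning for that input), while LeakyReLU still passes back 0.2.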

5. Use Tanh Output

The generator's final layer uses Tanh activation, producing pixel values in the [-1, 1] range. This matches the typical normalization of real images during preprocessing.

Practical Impact: Radford et al. observed that a bounded output activation allowed the model to learn more quickly to saturate and cover the color space of the training distribution.

The Training Process

Minimax Objective

DCGANs optimize the following minimax objective function:

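$$\min_G \max_D V(D,G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]$$

Where: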

D(x) is the discriminator's estimated probability that image x comes from the real data

G(z) is the generator's output given noise vector z

E_x represents expectation over real data distribution

E_z represents expectation over noise distribution

Interpretation: The discriminator maximizes the probability of correctly classifying both real images (D(x) → 1) and fake images (D(G(z)) → 0). The generator minimizes the discriminator's ability to detect fake images (D(G(z)) → 1).
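Given discriminator outputs on a batch of real images and a batch of generated images, V(D, G) can be estimated by replacing each expectation with a batch average. The sketch below uses made-up probabilities, and the small clamp eps is an assumption added to avoid log(0). When D outputs 0.5 for every input (the situation at the theoretical optimum, where the generated and real distributions match), the value is -log 4.

```python
import math


def value_function(d_real: list[float], d_fake: list[float], eps: float = 1e-7) -> float:
    """Batch estimate of E_x[log D(x)] + E_z[log(1 - D(G(z)))]."""
    def clamp(p: float) -> float:
        return min(max(p, eps), 1.0 - eps)

    real_term = sum(math.log(clamp(p)) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - clamp(p)) for p in d_fake) / len(d_fake)
    return real_term + fake_term


print(value_function([0.5] * 4, [0.5] * 4))    # about -1.386, i.e. -log 4
print(value_function([0.9, 0.8], [0.1, 0.2]))  # higher: D separates real from fake
```

The discriminator's update pushes this number up; the generator's update pushes the second term (and therefore the total) down.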

Training Algorithm

The standard DCGAN training procedure alternates between updating the discriminator and generator:

Step 1: Train Discriminator

Sample a mini-batch of m noise vectors {z(1), ..., z(m)} from prior p(z)

Generate m fake images {G(z(1)), ..., G(z(m))}

Sample m real images {x(1), ..., x(m)} from training data

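Update the discriminator by ascending its stochastic gradient:

$$\nabla_{\theta_d} \frac{1}{m} \sum_{i=1}^{m} \left[ \log D\left(x^{(i)}\right) + \log\left(1 - D\left(G\left(z^{(i)}\right)\right)\right) \right]$$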

Step 2: Train Generator

Sample m new noise vectors {z(1), ..., z(m)}

Update the generator by descending its stochastic gradient:

$$\nabla_{\theta_g} \frac{1}{m} \sum_{i=1}^{m} \log\left(1 - D\left(G\left(z^{(i)}\right)\right)\right)$$

In practice, the generator optimization often uses the alternative formulation of maximizing log D(G(z)) instead of minimizing log(1 - D(G(z))), which provides stronger gradients early in training.
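The reason is visible in the gradients with respect to the discriminator's logit a, where D = sigmoid(a). For the original generator term log(1 - D) the derivative is -D; for the alternative log D it is 1 - D. Early in training, when the discriminator confidently rejects fakes (D(G(z)) close to 0), the first gradient nearly vanishes while the second stays close to 1. The plain-Python sketch below checks this numerically.

```python
import math


def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))


def saturating_grad(logit: float) -> float:
    """d/da log(1 - sigmoid(a)) = -sigmoid(a): the original minimax generator term."""
    return -sigmoid(logit)


def non_saturating_grad(logit: float) -> float:
    """d/da log(sigmoid(a)) = 1 - sigmoid(a): the 'maximize log D(G(z))' variant."""
    return 1.0 - sigmoid(logit)


# A logit at which the discriminator assigns D(G(z)) = 0.01 to a fake image.
logit = math.log(0.01 / 0.99)
print(round(sigmoid(logit), 4))               # 0.01
print(round(abs(saturating_grad(logit)), 4))  # 0.01 -> weak learning signal
print(round(non_saturating_grad(logit), 4))   # 0.99 -> strong learning signal
```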

Hyperparameters and Training Tips

Based on the original DCGAN paper and subsequent research:

Learning Rate: 0.0002 for both networks (using Adam optimizer)

Batch Size: 128 (though this varies based on GPU memory)

Optimizer: Adam with β1 = 0.5, β2 = 0.999

Weight Initialization: From normal distribution with mean 0, standard deviation 0.02

Training Ratio: Update discriminator k times for each generator update (typically k = 1, but k = 5 for WGAN variants)
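These settings can be collected in one place. The dataclass below is a hypothetical container whose field names are our own; the values are the ones listed above, plus the 100-dimensional noise vector and 0.2 LeakyReLU slope mentioned earlier. The helper applies the N(0, 0.02²) initialization to a flat list of weights using only the standard library, as a stand-in for a framework's initializer.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class DCGANConfig:
    """Hypothetical container for the DCGAN hyperparameters listed above."""
    latent_dim: int = 100          # length of the noise vector z
    learning_rate: float = 2e-4    # Adam learning rate for both networks
    adam_beta1: float = 0.5
    adam_beta2: float = 0.999
    batch_size: int = 128          # adjust to fit GPU memory
    init_std: float = 0.02         # weights drawn from N(0, 0.02^2)
    d_steps_per_g_step: int = 1    # k in the training ratio (5 is typical for WGAN variants)
    leaky_relu_slope: float = 0.2  # discriminator LeakyReLU slope


def init_weights(n: int, cfg: DCGANConfig, seed: int = 0) -> list[float]:
    """Draw n weights from a normal distribution with mean 0 and std cfg.init_std."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, cfg.init_std) for _ in range(n)]


cfg = DCGANConfig()
weights = init_weights(5, cfg)
print(cfg.learning_rate, cfg.adam_beta1, len(weights))
```

Keeping the values in a frozen object makes it easy to log exactly which settings produced a run, which helps given how sensitive DCGAN is to these choices.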

Research published in Discover Internet of Things (November 2024) found that DCGAN achieves significantly lower Block Error Rates in wireless systems compared to standard CNNs and deep learning models, demonstrating the architecture's versatility beyond image generation.

Real-World Applications of DCGANs

DCGANs have transcended academic research to power practical solutions across multiple industries. Let's examine documented case studies with specific outcomes.

Medical Imaging and Healthcare

Case Study 1: Brain MRI Synthesis for Stroke Detection

Organization: Multi-institutional neuroradiology study

Publication: Quantitative Imaging in Medicine and Surgery (PMC, 2021)

Date: 2020-2021

Challenge: Radiologists needed larger training datasets for deep learning models that detect acute stroke on brain MRI, but acquiring diverse patient scans was time-consuming and expensive.

Solution: Researchers trained a DCGAN on T1-weighted brain MRI images from both healthy subjects and recent stroke patients. The DCGAN generated synthetic brain MRI slices.

Validation: Mixed groups of neuroradiologists and general radiologists evaluated images in a blind test to distinguish DCGAN-generated images from real acquisitions.

Results: Readers struggled to tell the DCGAN-generated brain MRI images from real acquisitions. Expert classification accuracy was 60% (±10%), only modestly better than the 50% expected from random guessing, which speaks to the synthetic images' realism.

Impact: This study, documented in PubMed Central, suggested that DCGAN-derived brain MRI may be ready for synthetic data augmentation in supervised machine learning applications, even for complex medical imaging cases.

Case Study 2: Multi-Modal Medical Image Synthesis

Organization: International research collaboration

Publication: PMC (May 2021)

Dataset: ACDC (cardiac cine-MRI), SLiver07 (liver CT), IDRiD (retina images)

Objective: Compare multiple GAN architectures (DCGAN, LSGAN, WGAN, HingeGAN, SPADE GAN, StyleGAN) for generating medical images across different modalities.

Methodology: Trained each GAN architecture on three diverse medical imaging datasets. Evaluated using Fréchet Inception Distance (FID) scores and downstream segmentation accuracy (U-Net trained on synthetic images).

DCGAN Performance:

Cardiac MRI (ACDC): FID score varied significantly with hyperparameters, showing the highest sensitivity among tested architectures

Liver CT (SLiver07): DCGAN exhibited mode collapse issues, producing limited variety

Retina Images (IDRiD): Better stability than ACDC, but still inferior to StyleGAN

Key Finding: StyleGAN and SPADE GAN achieved the best FID scores (often under 10), while DCGAN's results varied the most when hyperparameters changed, and LSGAN often produced degenerate images. For medical applications that need stable, reliable results, the researchers recommended the more advanced architectures.

Practical Lesson: According to the study authors, DCGAN works well for medical image augmentation when datasets are relatively small (under 5,000 images) and computational resources are limited, but more sophisticated architectures justify their computational cost for critical clinical applications.

Case Study 3: COVID-19 Chest X-Ray Augmentation

Organization: International research team

Publication: Applied Sciences, MDPI (November 2023)

Title: "Hybrid Deep Convolutional Generative Adversarial Network (DCGAN) and Xtreme Gradient Boost for X-ray Image Augmentation and Detection"

Context: During the COVID-19 pandemic, hospitals needed automated X-ray analysis systems but lacked sufficient labeled training data showing viral infection patterns.

Implementation: Researchers developed a hybrid system combining DCGAN for data augmentation with XGBoost and Modified Inception V3 for classification.

Process:

Collected chest X-ray images from Paul Cohen dataset (normal and pneumonia cases) and COVID-19 samples

Trained DCGAN to generate synthetic X-ray images

Mixed synthetic and real images to create augmented training dataset

Trained classification model on combined dataset

Results: The proposed methodology achieved substantially higher classification accuracy compared to models trained only on original data. The DCGAN-augmented dataset improved overall image quality and helped balance class distributions.

Deployment: The framework demonstrated practical viability for automated chest disease detection in resource-constrained hospital settings.

Fashion and Design Industry

Case Study 4: Intelligent Fashion Pattern Generation

Organization: Industrial fashion design research

Publication: Service Oriented Computing and Applications (October 2024)

Problem: Traditional manual fashion design required significant time and manpower. Designers struggled to rapidly explore diverse pattern variations while maintaining quality.

Solution: Researchers improved standard DCGAN by introducing new network structures and loss functions. They combined the enhanced DCGAN with real-time style conversion techniques and StyleGAN standardization for richer diversity.

Technical Specifications:

CPU Usage: Average 10.8%, peak 71.5%

Dataset: ImageNet for training and validation

Output: High-resolution fashion clothing patterns with diverse styles

Outcome: The intelligent fashion image generation model achieved fast, efficient clothing pattern generation with low computational overhead. The system enabled designers to explore hundreds of pattern variations in minutes rather than days.

Industry Impact: As reported by Business of Fashion (July 2024), 73% of fashion executives identified generative AI (including DCGAN-based systems) as a priority for 2024, though only 28% had implemented it in creative processes by mid-year.

Case Study 5: Fashion MNIST Generation

Individual Project: Fashion item synthesis

Framework: TensorFlow/Keras implementation

Dataset: Fashion-MNIST (Zalando's 60,000 training images, 10,000 test images)

Publication Date: November 2020

Technical Details:

Image Size: 28×28 grayscale

Categories: 10 classes (T-shirts, trousers, pullovers, dresses, coats, sandals, shirts, sneakers, bags, ankle boots)

Training Time: 30 minutes wall time for 10 epochs (1 hour 29 minutes CPU time)

Batch Size: 32

Results: After 30 epochs, the DCGAN generated recognizable fashion items. While pixelated, the synthetic images clearly depicted different clothing categories, demonstrating DCGAN's ability to learn hierarchical representations of fashion items.

Practical Application: This work validated DCGAN's usefulness for fashion e-commerce applications where rapid prototyping and visualization of clothing variations is valuable.

Drug Discovery and Pharmaceutical Research

Case Study 6: Cannabinoid Receptor Targeting

Organization: University of Pittsburgh School of Pharmacy

Publication: Molecular Pharmaceutics (November 2019)

PubMed ID: 31589460

Research Objective: Screen and design novel compounds targeting cannabinoid receptors using deep learning.

Methodology: Developed a DCGAN model to generate synthetic molecular structures. The adversarial training process involved:

Discriminator (D): Trained to distinguish authentic compounds from "fake" compounds generated by G

Generator (G): Trained to generate compounds that fool the well-trained discriminator

Technical Approach: Explored combinations of various CNN architectures (LeNet-5, AlexNet, ZFNet, VGGNet) with different molecular fingerprint representations to determine optimal input structure.

Innovation: Rather than testing millions of existing compounds, the DCGAN generated novel molecular structures with predicted affinity for cannabinoid receptors.

Impact: The research demonstrated DCGAN's potential for de novo drug design, potentially accelerating the discovery process from years to months. As cited in multiple subsequent papers (including June 2024 publications in Journal of Personalized Medicine), this work established DCGANs as viable tools for computational drug abuse research and molecular design.

Case Study 7: EEG-Based Emotion Recognition

Research Team: IEEE Conference publication

Publication Date: 2024

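Conference: ICCCNT 2024 (International Conference on Computing, Communication and Networking Technologies; IEEE identifier ICCCNT61001.2024)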

Application: Improving emotion recognition from electroencephalogram (EEG) signals using DCGAN combined with MobileNet architecture.

Challenge: EEG datasets for emotion recognition are typically small, making it difficult to train robust deep learning models. Different individuals exhibit varying EEG patterns for the same emotions.

Solution: Used DCGAN to generate synthetic EEG data augmenting limited training sets. Combined with lightweight MobileNet architecture for efficient classification.

Results: The hybrid DCGAN-MobileNet approach achieved improved emotion recognition accuracy while maintaining computational efficiency suitable for mobile and edge devices.

Significance: Demonstrates DCGAN's versatility beyond visual data, extending to time-series and signal processing applications.

DCGAN vs Other GAN Architectures

Performance Comparison Table

| Architecture | FID Score (typical) | Training Stability | Computational Cost | Image Quality | Year Introduced |
|---|---|---|---|---|---|
| DCGAN | 60-90 | Moderate | Low | Good | 2015 |
| StyleGAN | 4-20 | High | Very High | Excellent | 2018 |
| StyleGAN2 | 3-15 | High | Very High | Excellent | 2019 |
| WGAN-GP | 40-70 | High | Medium | Good | 2017 |
| Progressive GAN | 10-30 | High | High | Excellent | 2017 |
| SPADE GAN | 5-25 | High | High | Excellent | 2019 |

Note: FID scores vary significantly based on dataset, image resolution, and implementation details. Lower scores indicate better performance.

Detailed Architecture Comparisons

DCGAN vs StyleGAN

According to a 2024 comparative study published in Springer Lecture Notes (ISDIA 2024 Conference):

StyleGAN Advantages:

FID Scores: StyleGAN achieves significantly lower FID scores (better quality). One study reported StyleGAN FID of 4.67 versus DCGAN's typical 60-90 range.

Feature Control: Responds more accurately to specific features like smile, age, and gender manipulation in face generation.

Resolution: Generates higher resolution images (up to 1024×1024 natively) compared to DCGAN's typical 64×64 to 256×256.

Style Transfer: Built-in style-based architecture allows intuitive control over coarse and fine details.

DCGAN Advantages:

Training Speed: DCGAN trains significantly faster. A medical imaging study (PMC, May 2021) reported DCGAN training completing in 2-3 days versus 30 days for StyleGAN.

Computational Requirements: Runs on consumer-grade GPUs with 4-8GB VRAM, while StyleGAN requires 16GB+ for high-resolution outputs.

Simplicity: Fewer hyperparameters to tune, making it more accessible for researchers without extensive GAN experience.

Code Availability: More tutorials, implementations, and community support due to earlier introduction and simpler architecture.

When to Choose DCGAN: Projects with limited computational resources, rapid prototyping needs, smaller datasets (under 10,000 images), or applications where moderate image quality suffices.

When to Choose StyleGAN: Professional applications requiring highest quality outputs, projects with access to powerful GPU clusters, large datasets (100,000+ images), or scenarios needing fine-grained style control.

DCGAN vs WGAN-GP

Wasserstein GAN with Gradient Penalty (WGAN-GP) addresses training instability through a different loss function based on Wasserstein distance rather than Jensen-Shannon divergence.

WGAN-GP Advantages:

Training Stability: Greatly reduces mode collapse in practice

Meaningful Loss: Loss curves correlate with image quality (unlike DCGAN where loss doesn't directly indicate quality)

Gradient Flow: The gradient penalty pushes the critic's gradient norm toward 1, softly enforcing the Lipschitz constraint and keeping gradient magnitudes consistent

DCGAN Advantages:

Simplicity: Standard binary cross-entropy loss, easier to implement

Training Speed: Faster per-epoch training time

No Gradient Penalty Calculation: Avoids expensive second-order gradient computations

A GitHub implementation study (vineeths96) comparing DCGAN and SAGAN on CIFAR-10 reported that DCGAN reached a minimum FID of 89.68, respectable performance given its computational cost.

DCGAN vs Progressive GAN

Progressive GAN trains by starting with low-resolution images (4×4) and progressively adding layers for higher resolutions (8×8, 16×16, ..., 1024×1024).

Progressive GAN Advantages:

Higher Resolution: Generates 1024×1024 images stably

Faster Training: Progressive growing speeds convergence

Image Quality: Superior detail and realism

DCGAN Advantages:

Fixed Architecture: No need to manage progressive training schedule

Lower Memory: Can train full model on smaller GPUs

Established Workflow: More documentation for troubleshooting

Performance Metrics and Benchmarks

Fréchet Inception Distance (FID)

Definition: FID measures the distance between the distribution of generated images and real images using features extracted from a pre-trained Inception v3 network. Lower scores indicate better quality.

Mathematical Formula:

$$\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)$$

Where:

μ_r, μ_g are mean feature vectors for real and generated images

Σ_r, Σ_g are covariance matrices for real and generated images

Tr denotes matrix trace

Introduced: 2017 by Heusel et al. in "GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium"
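For intuition, the formula can be evaluated in one dimension, where the covariance matrices become variances and the trace term reduces to (σ_r - σ_g)². The sketch below computes this toy 1-D version from two lists of scalar "features". A real FID uses 2048-dimensional Inception v3 features and a matrix square root, which this example does not attempt.

```python
import statistics


def fid_1d(real: list[float], generated: list[float]) -> float:
    """Toy FID for scalar features: (mu_r - mu_g)^2 + (sigma_r - sigma_g)^2."""
    mu_r, mu_g = statistics.fmean(real), statistics.fmean(generated)
    var_r, var_g = statistics.pvariance(real), statistics.pvariance(generated)
    # 1-D case of Tr(S_r + S_g - 2 (S_r S_g)^(1/2)) = var_r + var_g - 2 sqrt(var_r * var_g)
    trace_term = var_r + var_g - 2.0 * (var_r * var_g) ** 0.5
    return (mu_r - mu_g) ** 2 + trace_term


real = [1.0, 2.0, 3.0, 4.0]
print(fid_1d(real, real))                  # 0.0: identical distributions
print(fid_1d(real, [2.0, 3.0, 4.0, 5.0]))  # 1.0: same spread, mean shifted by 1
```

Both a shifted mean and a mismatched spread raise the score, which is why FID penalizes blurry samples and low-diversity (collapsed) samples alike.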

Benchmark Results from Published Research:

| Dataset | DCGAN FID | StyleGAN FID | Best Alternative (FID) |
|---|---|---|---|
| ACDC (Cardiac MRI) | 45-65 | 8-12 | SPADE: 3-5 |
| IDRiD (Retina) | 55-75 | 15-25 | SPADE: 1.09 |
| SLiver07 (Liver CT) | 60-80 | 20-30 | SPADE: 8-15 |
| CelebA (Faces 64×64) | 30-50 | 5-10 | StyleGAN2: 3-7 |
| LSUN Bedrooms | 40-60 | 5-8 | - |

Source: "GANs for Medical Image Synthesis: An Empirical Study" (PMC, May 2021)

Inception Score (IS)

Definition: IS evaluates both image quality (clarity) and diversity (variety). Higher scores are better.

Formula:

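$$\mathrm{IS} = \exp\left(\mathbb{E}_{x \sim p_g}\left[ D_{\mathrm{KL}}\left( p(y \mid x) \,\Vert\, p(y) \right) \right]\right)$$

Where D_KL denotes the Kullback-Leibler divergence between the conditional label distribution p(y|x) and the marginal label distribution p(y).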

Limitations: Only meaningful for images resembling the ImageNet classes Inception v3 was trained to classify. Not suitable for medical images, abstract art, or other domains far from ImageNet.
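The formula can be evaluated directly once a classifier has produced a label distribution p(y|x) for each generated image. The sketch below takes those distributions as plain lists (in practice they would come from Inception v3, which is not loaded here) and uses the average of the conditionals as the marginal p(y). Confident, varied predictions push the score up; identical predictions give the minimum of 1.

```python
import math


def inception_score(conditionals: list[list[float]]) -> float:
    """exp of the mean KL(p(y|x) || p(y)), with p(y) the average of the p(y|x)."""
    n = len(conditionals)
    n_classes = len(conditionals[0])
    marginal = [sum(p[k] for p in conditionals) / n for k in range(n_classes)]
    mean_kl = sum(
        sum(p[k] * math.log(p[k] / marginal[k]) for k in range(n_classes) if p[k] > 0)
        for p in conditionals
    ) / n
    return math.exp(mean_kl)


diverse = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
           [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
collapsed = [[1.0, 0.0, 0.0, 0.0]] * 4
print(round(inception_score(diverse), 6))    # 4.0: confident and spread over 4 classes
print(round(inception_score(collapsed), 6))  # 1.0: every image gets the same label
```

The collapsed case shows why IS reacts to mode collapse: even perfectly confident predictions score 1 when they all name the same class.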

Visual Turing Test

Human evaluators judge whether images are real or generated. A study reported in PMC (2023) evaluating DCGAN-generated medical images found expert radiologists achieved only 60% accuracy (barely better than random chance), indicating highly realistic synthetic images.

Strengths of DCGANs

1. Training Stability

The architectural guidelines introduced by Radford et al. significantly improved GAN training stability compared to the original fully connected GAN. Researchers consistently reported DCGANs as more stable than vanilla GANs but more sensitive to hyperparameters than newer architectures like StyleGAN.

2. Computational Efficiency

Memory Requirements: DCGAN can train on consumer GPUs with 4-8GB VRAM, making it accessible to independent researchers and small organizations.

Training Time: Studies report DCGAN training completing in 2-5 days on single GPUs for moderate-sized datasets, versus weeks for StyleGAN.

Cost Efficiency: Lower cloud compute costs (approximately $50-200 for a full training run on AWS/GCP) versus $1,000+ for state-of-the-art architectures.

3. Code Availability and Community Support

As one of the earliest successful GAN architectures, DCGAN enjoys extensive documentation, tutorials, and pre-trained models across frameworks including TensorFlow, PyTorch, and Keras.

4. Interpretable Latent Space

The original DCGAN paper demonstrated semantic vector arithmetic in latent space, using latent vectors averaged over a few exemplar images per concept:

"Man with glasses" - "Man" + "Woman" = "Woman with glasses"

This property enables controllable image generation and editing.
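In code, the arithmetic is element-wise vector math on averaged latent vectors. The sketch below uses 2-D toy vectors so the result is easy to check by hand; in a real model the vectors would be 100-dimensional noise samples, and the result would then be passed through a trained generator (not shown here).

```python
def mean_vector(vectors: list[list[float]]) -> list[float]:
    """Element-wise average of several latent vectors."""
    n = len(vectors)
    return [sum(components) / n for components in zip(*vectors)]


def latent_arithmetic(a: list[list[float]], b: list[list[float]],
                      c: list[list[float]]) -> list[float]:
    """mean(a) - mean(b) + mean(c), e.g. 'man with glasses' - 'man' + 'woman'."""
    ma, mb, mc = mean_vector(a), mean_vector(b), mean_vector(c)
    return [x - y + z for x, y, z in zip(ma, mb, mc)]


man_with_glasses = [[1.0, 1.0], [3.0, 3.0]]
man = [[1.0, 0.0]]
woman = [[0.0, 5.0]]
print(latent_arithmetic(man_with_glasses, man, woman))  # [1.0, 7.0]
```

Averaging several exemplars before subtracting smooths out the quirks of any single sample, which made the results in the paper far more consistent than arithmetic on individual vectors.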

5. Versatility Across Domains

DCGAN has proven effective for diverse applications: natural images (bedrooms, faces, objects), medical imaging (MRI, CT, X-ray, retina scans), fashion and clothing design, molecular structures for drug discovery, EEG signals for emotion recognition, and synthetic data generation for machine learning.

Limitations and Challenges

1. Resolution Limitations

Problem: DCGAN struggles to generate stable images beyond 256×256 resolution. As resolution increases, mode collapse and training instability become more prevalent.

Evidence: The original DCGAN paper showcased 64×64 bedroom images. Subsequent efforts to scale to 512×512 or higher resulted in artifacts and quality degradation without architectural modifications.

Workaround: Use Progressive GANs or StyleGAN for high-resolution requirements, or post-process DCGAN outputs with super-resolution techniques.

2. Mode Collapse

Definition: The generator produces limited variety, outputting only a few types of images regardless of input noise variation.

Evidence: A medical imaging study (PMC, May 2021) reported that DCGAN exhibited mode collapse on the liver CT dataset, while it was more stable on retina images.

Mitigation Strategies:

Reduce learning rate

Increase batch size

Add noise to discriminator inputs

Use techniques from WGAN (Wasserstein loss) or other advanced training methods

Monitor generated samples frequently to detect early signs
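One common way to implement "add noise to discriminator inputs" is instance noise: Gaussian noise added to both real and generated images before the discriminator sees them, with the noise level annealed toward zero as training progresses. The sketch below assumes a linear schedule and a starting standard deviation of 0.1; both are illustrative choices rather than values from the studies cited above, and images are flat lists of pixels for simplicity.

```python
import random


def noise_std(step: int, total_steps: int, initial_std: float = 0.1) -> float:
    """Linearly anneal the instance-noise standard deviation from initial_std to 0."""
    progress = min(step / total_steps, 1.0)
    return initial_std * (1.0 - progress)


def add_instance_noise(pixels: list[float], std: float, rng: random.Random) -> list[float]:
    """Add independent Gaussian noise to each pixel value."""
    return [p + rng.gauss(0.0, std) for p in pixels]


rng = random.Random(42)
std = noise_std(step=250, total_steps=1000)  # about 0.075, a quarter of the way through
noisy_real = add_instance_noise([0.2, -0.7, 0.9], std, rng)
noisy_fake = add_instance_noise([0.1, 0.4, -0.3], std, rng)
# Both noisy_real and noisy_fake would then be passed to the discriminator.
```

Noising both inputs blurs the boundary between the real and generated distributions early on, so the discriminator cannot separate them perfectly and the generator keeps receiving useful gradients.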

3. Hyperparameter Sensitivity

Research in Neural Computing and Applications (October 2023) found DCGAN showed "the highest variability" among tested GAN architectures when hyperparameters changed. Small adjustments to learning rate, batch normalization momentum, or weight initialization significantly impacted results.

Most Critical Hyperparameters:

Learning rate (0.0002 is standard, but optimal varies by dataset)

Batch size (affects both gradient quality and batch normalization statistics)

Weight initialization scale (standard deviation of 0.02 works well generally)

Number of discriminator updates per generator update (typically 1:1, but sometimes 5:1 helps)

4. Training Time Unpredictability

Unlike supervised learning where training curves reliably indicate convergence, DCGAN loss oscillates and doesn't directly correlate with image quality. Researchers often must generate and visually inspect samples at regular intervals to assess progress.

5. Evaluation Challenges

FID Limitations: A study (PMC, May 2021) demonstrated that FID scores, computed using Inception v3 pre-trained on ImageNet, may not reliably indicate medical image quality. The InceptionNet latent space showed similar distributions for both DCGAN and StyleGAN outputs, yet U-Net segmentation performance revealed StyleGAN's superiority.

Takeaway: Always combine quantitative metrics (FID, IS) with domain-specific evaluation (e.g., downstream task performance for medical images).
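For reference, here is the standard FID definition. Here μ and Σ are the mean and covariance of Inception v3 features for the real (r) and generated (g) images, and each set is treated as a multivariate Gaussian:

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```

Both statistics come from an ImageNet-trained network, so a low FID only means the two sets look alike to that network. This is why FID can miss differences that matter for a downstream medical task.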

6. Memory GAN Phenomenon

For small datasets (under 1,000 images), DCGAN sometimes memorizes training examples rather than learning the underlying distribution. According to the PMC May 2021 study, StyleGAN and SPADE GAN showed this problem even more frequently, despite their longer training times.

Detection: Check if generated images are near-duplicates of training data using perceptual hashing or manual inspection.
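Here is a minimal sketch of the hashing idea. It assumes the images are already loaded as small grayscale grids (nested lists of 0-255 values) of equal size. It implements a basic average hash, in which each bit records whether a pixel is brighter than the image mean. It then flags pairs whose Hamming distance is at or below a threshold. The 5-bit threshold and the helper names are illustrative assumptions, and dedicated libraries offer more robust perceptual hashes.

```python
def average_hash(gray):
    """Return a tuple of bits: 1 where a pixel is brighter than the image mean."""
    pixels = [p for row in gray for p in row]
    mean = sum(pixels) / len(pixels)
    return tuple(1 if p > mean else 0 for p in pixels)


def hamming(a, b):
    """Number of positions where two equal-length bit tuples differ."""
    return sum(x != y for x, y in zip(a, b))


def find_near_duplicates(generated, training, max_bits=5):
    """List (gen_index, train_index, distance) pairs that look memorized."""
    train_hashes = [average_hash(img) for img in training]
    hits = []
    for gi, img in enumerate(generated):
        h = average_hash(img)
        for ti, th in enumerate(train_hashes):
            d = hamming(h, th)
            if d <= max_bits:
                hits.append((gi, ti, d))
    return hits


# 4x4 toy images: the first generated image copies the training image with
# a few slightly altered pixels; the second is unrelated.
train = [[[10, 200, 10, 200], [200, 10, 200, 10], [10, 200, 10, 200], [200, 10, 200, 10]]]
gen = [
    [[12, 198, 10, 200], [200, 10, 200, 10], [10, 200, 10, 200], [200, 10, 200, 10]],
    [[0, 0, 255, 255], [0, 0, 255, 255], [0, 0, 255, 255], [0, 0, 255, 255]],
]
print(find_near_duplicates(gen, train))
```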

Common Pitfalls to Avoid

1. Inconsistent Image Preprocessing

Problem: Training DCGAN on images normalized to [0, 1] range while using Tanh output (producing [-1, 1] range).

Solution: Normalize all training images to [-1, 1] to match generator output range: normalized = (pixel_value / 127.5) - 1
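The mapping in both directions, as a small sketch with illustrative function names:

```python
def to_tanh_range(pixel_value):
    """Map an 8-bit pixel value in [0, 255] to [-1, 1] (matches Tanh output)."""
    return pixel_value / 127.5 - 1.0


def from_tanh_range(x):
    """Map a generator output in [-1, 1] back to an 8-bit pixel value."""
    x = max(-1.0, min(1.0, x))  # clip any tiny numerical overshoot
    return round((x + 1.0) * 127.5)


print(to_tanh_range(0), to_tanh_range(255))
print(from_tanh_range(-1.0), from_tanh_range(1.0))
```

In PyTorch pipelines, the same mapping is commonly written as transforms.ToTensor() (which scales to [0, 1]) followed by transforms.Normalize with mean 0.5 and std 0.5 per channel. The official DCGAN tutorial does this.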

2. Forgetting to Toggle Discriminator Training

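Problem: Letting one network's update change the other network's weights. Examples include stepping the discriminator's weights during the generator update, or backpropagating the discriminator loss into the generator.

Solution: Keep the two updates separate. In PyTorch, detach the fake batch (fake.detach()) when computing the discriminator loss, and call only the generator's optimizer step during the generator update. In TensorFlow's GradientTape style (used by the official tutorial), apply the generator's gradients only to generator.trainable_variables. In the older compiled-Keras style, set discriminator.trainable = False before compiling the combined model. Switching the discriminator to model.eval() is not a substitute: it makes batch normalization use running statistics instead of batch statistics, which changes the gradients the generator receives.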

3. Using Wrong Activation Functions

Common Mistake: Using ReLU in discriminator or LeakyReLU in generator.

Correct Configuration:

Generator: ReLU for hidden layers, Tanh for output

Discriminator: LeakyReLU throughout, Sigmoid for final classification

4. Imbalanced Training

Problem: An overly strong discriminator causes vanishing gradients for the generator. Once the discriminator separates real from fake almost perfectly, the generator receives little learning signal.

Solution: Balance training by occasionally skipping discriminator updates when its accuracy exceeds 80-85%, or use softer labels (e.g., 0.9 instead of 1.0 for real images).
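Both ideas fit in a few lines. The sketch below works on plain lists of discriminator output probabilities rather than framework tensors. The 0.85 accuracy threshold comes from the range quoted above, and the function names are illustrative. With one-sided label smoothing, the loss for a real image is minimized at a prediction of 0.9 rather than 1.0, so the discriminator is penalized for overconfidence.

```python
import math


def bce(prob, target, eps=1e-7):
    """Binary cross-entropy for one prediction, clamped to avoid log(0)."""
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def discriminator_loss(real_probs, fake_probs, real_label=0.9):
    """Mean D loss with one-sided label smoothing (real target 0.9, fake target 0)."""
    losses = [bce(p, real_label) for p in real_probs] + [bce(p, 0.0) for p in fake_probs]
    return sum(losses) / len(losses)


def should_update_discriminator(real_probs, fake_probs, max_accuracy=0.85):
    """Skip the D step when it already classifies too many samples correctly."""
    correct = sum(p > 0.5 for p in real_probs) + sum(p < 0.5 for p in fake_probs)
    accuracy = correct / (len(real_probs) + len(fake_probs))
    return accuracy <= max_accuracy


real_probs = [0.99, 0.97, 0.95, 0.98]
fake_probs = [0.02, 0.01, 0.03, 0.05]
print(discriminator_loss(real_probs, fake_probs))
print(should_update_discriminator(real_probs, fake_probs))  # False: accuracy is 100%
```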

5. Insufficient Batch Size

Problem: Batch normalization relies on batch statistics. Very small batches (n < 8) provide poor estimates, destabilizing training.

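Solution: Use a minimum batch size of 16, preferably 32 or more. If GPU memory is limited, gradient accumulation can simulate a larger effective batch for the optimizer. However, batch normalization still computes its statistics over each small micro-batch, so accumulation does not fix the batch-statistics problem.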
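To see why accumulation helps the optimizer but not batch normalization, consider the sketch below. It uses a one-parameter linear model with mean-squared error, not a GAN, and the function names are illustrative. Averaging the gradients of equal-sized micro-batches reproduces the full-batch gradient exactly. Normalization layers, by contrast, would still only see each micro-batch in their forward pass.

```python
def grad_mse(w, xs, ys):
    """d/dw of mean((w*x - y)^2) over one batch."""
    n = len(xs)
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def accumulated_grad(w, xs, ys, micro_batch):
    """Average gradients over equal-sized micro-batches (gradient accumulation)."""
    if len(xs) % micro_batch:
        raise ValueError("batch size must be a multiple of micro_batch")
    steps = len(xs) // micro_batch
    total = 0.0
    for s in range(steps):
        lo, hi = s * micro_batch, (s + 1) * micro_batch
        total += grad_mse(w, xs[lo:hi], ys[lo:hi])
    return total / steps


xs = [float(i) for i in range(32)]
ys = [3.0 * x + 1.0 for x in xs]
full = grad_mse(0.5, xs, ys)                           # one batch of 32
accum = accumulated_grad(0.5, xs, ys, micro_batch=8)   # four batches of 8
print(full, accum)
```

In a framework such as PyTorch, the same effect comes from calling backward on each micro-batch loss divided by the number of micro-batches, then stepping the optimizer once.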

Best Practices for Training DCGANs

Data Preparation

Consistent Resolution: Resize all images to the same dimensions (64×64, 128×128, or 256×256 are common).

Normalization: Scale pixel values to [-1, 1] range to match Tanh output.

Augmentation: Apply mild data augmentation (horizontal flips, small rotations) to increase effective dataset size.

Quality Control: Remove corrupted, mislabeled, or extremely low-quality images.

Architecture Configuration

Start with Proven Architectures: Use the original DCGAN specifications before experimenting with modifications.

Channel Progression: Follow the pattern of doubling/halving channels at each layer (e.g., 3 → 128 → 256 → 512 → 1024).

Symmetric Design: Generator and discriminator should have similar capacity (similar total parameter count).
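Before training, it is worth checking that the layer stack actually reaches the target resolution. The helper below applies the output-size formula for a transposed convolution without dilation or output padding: (in - 1) × stride - 2 × padding + kernel. It traces a 64×64 generator of the kind used in the PyTorch DCGAN tutorial: one 4×4 stride-1 layer from a 1×1 latent, then four 4×4 stride-2 layers with padding 1. The channel counts mirror the halving pattern above and are illustrative.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Spatial output size of a transposed convolution (no dilation/output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


# (out_channels, kernel, stride, padding) for each generator layer.
GENERATOR_LAYERS = [
    (1024, 4, 1, 0),  # 100-d noise treated as a 1x1 "image" -> 4x4
    (512, 4, 2, 1),   # 4x4 -> 8x8
    (256, 4, 2, 1),   # 8x8 -> 16x16
    (128, 4, 2, 1),   # 16x16 -> 32x32
    (3, 4, 2, 1),     # 32x32 -> 64x64 RGB, followed by Tanh
]


def trace_shapes(layers, size=1, channels=100):
    """Return (channels, height, width) after each layer, starting from the latent."""
    shapes = []
    for out_ch, k, s, p in layers:
        size = conv_transpose_out(size, k, s, p)
        channels = out_ch
        shapes.append((channels, size, size))
    return shapes


for shape in trace_shapes(GENERATOR_LAYERS):
    print(shape)
```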

Training Strategy

Initialize Properly: Draw all weights from a normal distribution with mean 0 and standard deviation 0.02.

Monitor Generated Samples: Save generated images every few hundred iterations to visually track progress.

Learning Rate Scheduling: Keep the learning rate constant initially; reduce it by 10× if training stagnates.

Early Stopping: If mode collapse persists for 1,000+ iterations, restart with modified hyperparameters.

Checkpointing: Save model checkpoints every epoch to recover from training failures.

Debugging Techniques

If discriminator loss goes to 0: Discriminator is too strong

Decrease discriminator learning rate

Add noise to discriminator inputs

Use label smoothing (0.9 instead of 1.0 for real labels)

If generator loss increases monotonically: Generator can't fool discriminator

Increase generator capacity (more layers/channels)

Decrease discriminator capacity

Train generator more frequently (2 generator updates per discriminator update)

If mode collapse occurs: Generator stuck producing limited variety

Restart with different initialization

Increase batch size

Try unrolled GAN training or Wasserstein loss
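The first two rules can be checked automatically from logged losses. The sketch below is a heuristic with hypothetical thresholds: "near 0" means below 0.01, and "monotonic" means rising at every one of the last five logged steps. Mode collapse usually does not show up in the losses, so the fallback message points back to sample inspection.

```python
def diagnose(d_losses, g_losses, d_floor=0.01, window=5):
    """Map recent loss history to the symptoms listed above (heuristic only).

    d_losses, g_losses: logged loss values, oldest first.
    """
    messages = []
    recent_d = d_losses[-window:]
    recent_g = g_losses[-window:]
    if recent_d and max(recent_d) < d_floor:
        messages.append("D loss near 0: discriminator too strong "
                        "(lower its LR, add input noise, smooth real labels)")
    if len(recent_g) == window and all(b > a for a, b in zip(recent_g, recent_g[1:])):
        messages.append("G loss rising every step: generator cannot fool D "
                        "(more G capacity, less D capacity, or extra G updates)")
    return messages or ["no loss-based warning; still inspect samples for mode collapse"]


print(diagnose([0.5, 0.1, 0.005, 0.004, 0.003, 0.002, 0.001], [1, 2, 3, 4, 5, 6, 7]))
```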

Tools and Frameworks

PyTorch Implementation

PyTorch offers dynamic computation graphs ideal for research and experimentation.

Official DCGAN Tutorial: https://pytorch.org/tutorials/beginner/dcgan_faces_tutorial.html

Key Libraries:

import torch
import torch.nn as nn
import torch.optim as optim
import torchvision.utils as vutils

Advantages: Pythonic code, easier debugging, excellent for research.

TensorFlow/Keras Implementation

TensorFlow provides production-ready deployment capabilities with TensorFlow Serving and TensorFlow Lite.

Official DCGAN Tutorial: https://www.tensorflow.org/tutorials/generative/dcgan

Key Libraries:

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

Advantages: Ecosystem for production deployment, mobile/edge device support, TPU compatibility.

Popular Pre-trained Models

CelebA DCGAN: Generates celebrity faces

Available on: Hugging Face, GitHub

Resolution: 64×64

Training: ~200,000 celebrity images

LSUN Bedrooms DCGAN: Generates bedroom interiors

Original DCGAN paper demonstration

Resolution: 64×64

Training: ~3 million bedroom images

Fashion-MNIST DCGAN: Generates clothing items

Educational resource, smaller scale

Resolution: 28×28 grayscale

Training: 60,000 fashion product images

Training Infrastructure

Cloud Options:

Google Colab: Free GPU access (Tesla T4, limited hours)

Kaggle Notebooks: Free GPU/TPU access with generous limits

AWS SageMaker: Scalable managed training (roughly $3/hour or more on demand for a p3.2xlarge-class V100 instance)

Google Cloud AI Platform: Similar to SageMaker, competitive pricing

Azure Machine Learning: Enterprise-focused with good integration tools

Local Hardware Recommendations:

Minimum: NVIDIA GTX 1660 Super (6GB VRAM) - can train 64×64 models

Recommended: NVIDIA RTX 3060 Ti (8GB VRAM) - comfortable for 128×128 models

Optimal: NVIDIA RTX 3090/4090 (24GB VRAM) - enables 256×256 and larger batch sizes

Myths vs Facts

Myth 1: "DCGANs are obsolete because StyleGAN exists"

Fact: While StyleGAN achieves superior image quality, DCGANs remain widely used in production systems due to lower computational requirements, faster training, and adequate quality for many applications.

Evidence: Publications from 2024 (Service Oriented Computing and Applications, Discover Applied Sciences, IEEE conferences) continue to document successful DCGAN deployments. The Neural Computing and Applications review (October 2023) identified DCGAN as the second-most popular GAN architecture in medical image augmentation, following conditional GANs.

Myth 2: "DCGANs require massive datasets"

Fact: DCGANs can train effectively on datasets as small as 1,000 images with appropriate regularization techniques like data augmentation and batch normalization.

Evidence: The Fashion-MNIST DCGAN implementation produced recognizable results from 60,000 training images—modest by deep learning standards. Medical imaging applications routinely train on datasets under 10,000 images with clinically useful results.

Myth 3: "You need a PhD to implement DCGANs"

Fact: With modern frameworks and abundant tutorials, implementing a basic DCGAN requires intermediate Python programming skills and understanding of neural networks.

Reality Check: Official TensorFlow and PyTorch DCGAN tutorials guide users through complete implementations in under 200 lines of code. Online courses from Andrew Ng's deeplearning.ai and fast.ai cover GAN concepts accessibly.

Myth 4: "DCGANs always collapse during training"

Fact: While mode collapse is a known issue, following architectural guidelines from the original paper significantly improves training stability. Many practitioners report successful training with standard hyperparameters.

Evidence: The GitHub project (vineeths96) comparing DCGAN and SAGAN reported DCGAN achieving respectable FID scores on CIFAR-10 with standard settings. The key is patient hyperparameter tuning and early detection of collapse signals.

Myth 5: "Generated images are always obviously fake"

Fact: DCGAN-generated images can fool human experts in visual Turing tests, especially for specialized domains like medical imaging where humans have limited exposure to diverse examples.

Evidence: The neuroradiology study (PMC, 2021) reported that radiologists achieved only 60% accuracy when distinguishing DCGAN-generated brain MRI from real acquisitions. In the periapical imaging study (PMC, October 2023), average accuracy was 54%, barely better than random guessing.

Myth 6: "DCGANs only work for natural images"

Fact: DCGANs have successfully generated diverse data types including medical scans, molecular structures, EEG signals, and fashion designs.

Evidence: The cannabinoid receptor study (Molecular Pharmaceutics, 2019) used DCGAN for molecular design. The EEG emotion recognition research (IEEE, 2024) applied DCGAN to time-series neural signals. The fashion industry applications (multiple 2024 publications) demonstrated clothing and pattern generation.

The Future of DCGANs

Current Trends (2024-2025)

Hybrid Architectures: Researchers increasingly combine DCGAN's stable training with modern innovations. Examples include:

Adaptive-DCGAN for deepfake detection (Discover Applied Sciences, October 2025)

DCGAN + XGBoost for medical image classification (Applied Sciences, November 2023)

DCGAN with StyleGAN standardization for fashion design (SOCA, October 2024)

Domain-Specific Customization: Rather than replacing DCGANs with newer architectures, practitioners adapt them for specialized applications:

Conditional DCGAN (cDCGAN) for controlled pollutant dispersion prediction (ScienceDirect, March 2025)

DCGAN variants for wireless system optimization (Discover Internet of Things, November 2024)

Modified DCGAN for underwater target classification (Taylor & Francis, 2024)

Educational Foundation: DCGANs remain the introductory GAN architecture in university courses and online education due to their conceptual clarity and manageable implementation complexity.

Market Projections

The broader generative AI market shows explosive growth trajectories:

Market Size Evolution:

2023: $43.87 billion (Fortune Business Insights)

2024: $67.18 billion

2032 (projected): $967.65 billion
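As a quick arithmetic check, these two figures imply the 39.6% compound annual growth rate cited in this article's key takeaways. This assumes growth compounds over the eight years from the 2024 figure to the 2032 projection:

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


rate = cagr(67.18, 967.65, years=2032 - 2024)
print(f"{rate:.1%}")
```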

GAN-Specific Segment: GANs (including DCGANs) captured 74% of generative AI market share in 2023, though transformer-based models are projected to grow at higher rates. However, GANs maintain advantages for image synthesis tasks requiring precise control and lower computational costs.

Regional Growth: North America led with 40.8% market share in 2024 (Grand View Research), while Asia-Pacific shows fastest growth at 37.4% CAGR through 2030.

Emerging Applications

Federated Learning Integration: Privacy-preserving DCGAN training across distributed healthcare institutions without centralizing sensitive patient data.

Edge Device Deployment: Optimized DCGAN variants for mobile and IoT devices, enabling real-time synthetic data generation for augmented reality and personalized content.

Hybrid AI Systems: Combining DCGAN image generation with large language models for multimodal content creation—text descriptions generating visual outputs.

Sustainability Applications: Using DCGANs to simulate climate scenarios, optimize energy systems, and predict environmental impacts without expensive physical experiments.

Research Directions

Theoretical Understanding: Ongoing mathematical analysis of why certain architectural choices (batch normalization, LeakyReLU, etc.) stabilize training. Recent publications explore connections to optimal transport theory and game theory.

Efficiency Improvements: Techniques like network pruning, knowledge distillation, and quantization to reduce DCGAN computational requirements for resource-constrained environments.

Bias and Fairness: Detecting and mitigating biases in DCGAN-generated outputs, particularly crucial for applications in healthcare and social domains.

Professional Outlook

Job Market: LinkedIn reported a 385% increase in AI-related job postings from 2022-2024. Knowledge of GAN architectures, including DCGANs, remains valuable for roles in:

Computer vision engineering

Medical imaging AI

Creative AI applications

Research and development

Skill Requirements: The McKinsey Global AI Survey (2024) found that 71% of organizations use generative AI regularly, but only 4% feel they have adequate AI skills. This skills gap creates opportunities for professionals understanding both foundational architectures (DCGANs) and cutting-edge developments.

The Enduring Legacy

While newer architectures achieve superior metrics, DCGANs established fundamental principles that influenced all subsequent GAN research:

Convolutional architecture for image synthesis

Adversarial training stabilization techniques

Importance of architectural constraints for reliable training

Demonstration of learnable, interpretable latent spaces

The original 2015 DCGAN paper continues accumulating citations (over 30,000 as of 2024), indicating its lasting influence on the field. As long as image synthesis remains relevant—and with generative AI becoming a nearly $1 trillion market—the principles pioneered by DCGANs will continue shaping the technology landscape.

FAQ

1. How long does it take to train a DCGAN?

Training time varies significantly based on dataset size, image resolution, and hardware:

Small datasets (1,000-10,000 images) at 64×64 resolution: 2-6 hours on modern GPUs (RTX 3060 or better)

Medium datasets (10,000-100,000 images) at 128×128 resolution: 1-3 days on single GPU

Large datasets (100,000+ images) at 256×256 resolution: 3-7 days on single high-end GPU

The Fashion-MNIST example (60,000 images, 28×28 resolution) completed 10 epochs in 30 minutes. Medical imaging studies report 2-3 days for DCGAN training on cardiac MRI datasets.

2. Can DCGANs generate high-resolution images like 1024×1024?

DCGANs struggle beyond 256×256 resolution without modifications. Training instability and mode collapse increase dramatically at higher resolutions. For 1024×1024 or larger images, use Progressive GANs, StyleGAN, or StyleGAN2, which were specifically designed for high-resolution generation. Alternatively, generate lower-resolution DCGAN outputs and upscale using super-resolution techniques.

3. What causes mode collapse and how can I prevent it?

Mode collapse occurs when the generator finds a few outputs that fool the discriminator and stops exploring other possibilities. Prevention strategies:

Batch diversity: Use larger batch sizes (32-128)

Discriminator regularization: Add noise to discriminator inputs or use label smoothing

Architecture balancing: Ensure generator and discriminator have similar capacity

Alternative losses: Consider Wasserstein loss or hinge loss variants

Early detection: Monitor generated samples frequently; restart training with modified hyperparameters if collapse is detected

A study published in PMC (May 2021) found that DCGAN's mode collapse varies by dataset. It was less frequent for well-structured data like retina images and more common for complex textures like liver CT.

4. How much data do I need to train a DCGAN effectively?

Minimum recommendations:

Simple objects (centered, uniform background): 500-1,000 images

Natural scenes: 5,000-10,000 images

Complex domains (faces, medical scans): 10,000-50,000 images

Data augmentation techniques (flips, rotations, color jittering) effectively increase dataset size by 2-5×. Medical imaging applications successfully train on datasets under 5,000 images with proper augmentation. The Fashion-MNIST dataset (60,000 images) is considered comfortable for stable training.

5. What's the difference between DCGAN and conditional DCGAN?

Standard DCGAN: Generates random images from noise with no control over output characteristics. You cannot specify desired attributes (e.g., "generate a blonde woman" or "create a malignant lesion").

Conditional DCGAN (cDCGAN): Accepts additional input labels alongside noise, allowing controlled generation. For example, provide class label "dress" to generate dresses specifically. Medical applications use cDCGAN to generate specific disease conditions.
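One common way to feed a label to the generator is to concatenate a one-hot class vector to the noise vector. The sketch below builds such an input. Other conditioning schemes exist, such as learned label embeddings or label maps added as extra channels. In a conditional GAN the discriminator also receives the label. The function names are illustrative, and the example assumes a 10-class setup like Fashion-MNIST, where class 3 is "Dress".

```python
import random


def one_hot(label, num_classes):
    """Encode an integer class label as a one-hot list."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def conditional_input(label, num_classes, z_dim=100, rng=random):
    """Build a cDCGAN generator input: noise z followed by a one-hot label."""
    z = [rng.gauss(0.0, 1.0) for _ in range(z_dim)]
    return z + one_hot(label, num_classes)


rng = random.Random(0)
x = conditional_input(3, num_classes=10, rng=rng)  # request class 3 ("Dress")
print(len(x), x[-10:])
```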

According to the Neural Computing and Applications review (October 2023), cGAN was the most popular architecture in medical image augmentation, followed by DCGAN, precisely because conditioning provides necessary control for clinical applications.

6. Can I fine-tune a pre-trained DCGAN on my own dataset?

Yes, transfer learning works well for DCGANs. Process:

Download pre-trained DCGAN weights (e.g., CelebA faces model)

Replace final layers if your image dimensions differ

Train with lower learning rate (e.g., 0.0001 instead of 0.0002)

Freeze discriminator for first few epochs to let generator adapt

Fine-tune both networks together afterward

This approach requires 30-50% less training time than training from scratch. Best results occur when source and target domains are related (e.g., fine-tuning celebrity faces DCGAN on medical face photos works better than on landscape images).

7. How do I evaluate DCGAN performance without a reference dataset?

When you lack ground truth images for comparison:

Inception Score (IS): Evaluates single-image quality and dataset diversity without reference data

Visual Inspection: Human evaluation remains essential—show generated images to domain experts

Downstream Tasks: Train classifier on generated images and test on real data; performance indicates synthesis quality

Interpolation Quality: Generate images by interpolating between noise vectors; smooth transitions indicate good latent space structure
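The Inception Score can be computed from per-image class probabilities alone: IS = exp(mean over images of KL(p(y|x) ‖ p(y))). The sketch below assumes those probability vectors already come from a pretrained classifier's softmax (Inception v3 for the standard score). It omits the usual practice of averaging the score over several splits of a large sample. A batch that is both confident and diverse scores near the number of classes; a collapsed or uncertain batch scores near 1. Because the classifier is trained on ImageNet, the score says little about domains such as medical imaging.

```python
import math


def inception_score(class_probs, eps=1e-12):
    """IS = exp(mean KL(p(y|x) || p(y))) from per-image class probabilities.

    class_probs: list of probability vectors, one per generated image.
    The result lies roughly between 1 and the number of classes.
    """
    n = len(class_probs)
    k = len(class_probs[0])
    marginal = [sum(p[j] for p in class_probs) / n for j in range(k)]
    kl_total = 0.0
    for p in class_probs:
        kl_total += sum(pj * math.log((pj + eps) / (marginal[j] + eps))
                        for j, pj in enumerate(p) if pj > 0)
    return math.exp(kl_total / n)


diverse = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
collapsed = [[1.0, 0.0, 0.0]] * 3
print(inception_score(diverse), inception_score(collapsed))
```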

For medical applications, the PMC (2021) study found downstream segmentation accuracy more reliable than FID scores for evaluating clinical usefulness.

8. What GPU do I need to train DCGANs?

Minimum specifications:

Entry Level: NVIDIA GTX 1660 Super (6GB VRAM) - 64×64 images, batch size 16-32

Comfortable: NVIDIA RTX 3060 Ti (8GB VRAM) - 128×128 images, batch size 32-64

Optimal: NVIDIA RTX 3090/4090 (24GB VRAM) - 256×256 images, batch size 64-128, faster experimentation

Cloud alternatives: Google Colab provides free Tesla T4 access (16GB VRAM) sufficient for most DCGAN experiments. Kaggle Notebooks offer similar free resources. For production training, AWS p3.2xlarge instances (~$3/hour) provide V100 GPUs with 16GB VRAM.

9. Why do my generated images look blurry?

Common causes:

Insufficient training: DCGANs need 10,000+ iterations to produce sharp images. Early outputs are always blurry.

Learning rate too high: Reduce it to 0.0001 if images don't sharpen after extended training.

Wrong activation function: Ensure generator output uses Tanh, not Sigmoid or linear activation.

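Batch normalization momentum: Try reducing BN momentum from 0.99 to 0.9 or 0.8. These values follow the Keras convention. PyTorch's momentum argument is defined the other way round (default 0.1), so the equivalent change there is raising it from 0.01 to 0.1 or 0.2.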

Dataset quality: Low-quality, inconsistent training images produce blurry generations.
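The two momentum conventions describe the same exponential moving average, written with opposite weights. The sketch below shows that Keras momentum 0.9 and PyTorch momentum 0.1 produce the same running statistic; the function names are illustrative. Running statistics are only used in inference mode, so this setting mostly affects samples generated with the model in evaluation mode.

```python
def keras_style_update(running, batch_stat, momentum=0.99):
    """Keras BatchNormalization: running = running * momentum + batch * (1 - momentum)."""
    return running * momentum + batch_stat * (1.0 - momentum)


def torch_style_update(running, batch_stat, momentum=0.1):
    """PyTorch BatchNorm: running = (1 - momentum) * running + momentum * batch."""
    return (1.0 - momentum) * running + momentum * batch_stat


r_keras = r_torch = 0.0
for batch_mean in [1.0, 1.2, 0.8, 1.1]:
    r_keras = keras_style_update(r_keras, batch_mean, momentum=0.9)
    r_torch = torch_style_update(r_torch, batch_mean, momentum=0.1)
print(r_keras, r_torch)
```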

The Applied Sciences study (November 2023) noted that DCGAN-generated X-rays initially appeared blurry but sharpened significantly after combining with perceptual loss functions.

10. How is DCGAN different from variational autoencoders (VAEs)?

Fundamental Difference: VAEs use probabilistic encoding/decoding with explicit likelihood maximization, while DCGANs use adversarial training without explicit likelihood.

Image Quality: DCGANs typically generate sharper images. VAEs produce slightly blurrier outputs due to their reconstruction-focused loss.

Training Stability: VAEs train more stably with well-defined loss curves. DCGANs require careful balancing of generator and discriminator.

Interpolation: Both produce smooth interpolations, but DCGAN latent spaces often show more semantically meaningful transitions.

Use Cases: VAEs better for: tasks requiring uncertainty estimates, compression applications, principled probabilistic modeling. DCGANs better for: high-fidelity image synthesis, applications where sharp detail matters, style transfer tasks.

11. Can DCGANs work with non-image data?

Yes, though "Deep Convolutional GAN" suggests image focus, the adversarial training framework generalizes to other data types:

Time Series: The EEG emotion recognition study (IEEE, 2024) applied DCGAN to neural signal data. Treat time dimension as spatial dimension for convolutions.

Audio: Spectrograms can be generated using DCGAN architecture since spectrograms are effectively images of sound.

Molecular Structures: The University of Pittsburgh study (Molecular Pharmaceutics, 2019) used DCGAN with molecular fingerprint representations (vector encodings of chemical structures).

Tabular Data: DCGAN can generate synthetic tabular data by treating feature dimensions as channels, though specialized architectures like CTGAN perform better for this use case.

12. What are the computational costs of training DCGAN?

Cloud Costs (approximate):

AWS: p3.2xlarge (V100 GPU): $3.06/hour × 24-72 hours = $73-220 per model

Google Cloud: n1-highmem-8 + T4 GPU: $2.20/hour × 24-72 hours = $53-158 per model

Azure: NC6s v3 (V100 GPU): $3.06/hour × 24-72 hours = $73-220 per model

Local Costs (electricity):

High-end GPU (300W) running 72 hours at $0.12/kWh: $2.60

Total system (500W) running 72 hours: $4.32
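Prices change often, so it helps to re-run this arithmetic with current rates. The rates below are the ones quoted above, not live prices, and the function names are illustrative.

```python
def cloud_cost(rate_per_hour, hours):
    """On-demand cost of one training run."""
    return rate_per_hour * hours


def electricity_cost(watts, hours, price_per_kwh=0.12):
    """Electricity cost of running hardware at a constant power draw."""
    return watts / 1000.0 * hours * price_per_kwh


print(f"${cloud_cost(3.06, 24):.2f}-${cloud_cost(3.06, 72):.2f}")  # V100 instance, 1-3 days
print(f"${electricity_cost(300, 72):.2f}")                         # GPU alone
print(f"${electricity_cost(500, 72):.2f}")                         # whole system
```

The 300 W case comes out at $2.59, which the text rounds to $2.60. Multiply by the 3-5 runs suggested below to budget a whole project.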

Most cost comes from researcher time (experimentation, hyperparameter tuning) rather than computation. Budget 3-5 full training runs to achieve satisfactory results.

13. How do I know when my DCGAN has converged?

Unlike supervised learning, GANs don't have clear convergence criteria. Indicators of good training:

Positive Signs:

Generated images continuously improve in visual quality

Discriminator accuracy oscillates around 50-70% (neither network dominates)

Generator loss stabilizes or slowly decreases

Diverse outputs (no mode collapse)

FID score decreases or plateaus

Warning Signs:

Discriminator accuracy stays above 95% (too strong)

Generator loss increases monotonically (can't fool discriminator)

Generated images stop improving for 2,000+ iterations

Sudden appearance of artifacts or noise

Best practice: Save checkpoints every 1,000 iterations and compare generated samples visually. Continue training until quality plateaus, typically 10,000-50,000 iterations.
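"Continue until quality plateaus" can be made concrete by measuring FID, or any other lower-is-better metric, at each checkpoint and stopping once it stops improving. In the sketch below, the patience of three checkpoints and the 0.5 minimum improvement are hypothetical values to tune. Visual inspection is still needed, since FID can plateau while samples remain flawed.

```python
def has_plateaued(fid_history, patience=3, min_delta=0.5):
    """True when the last `patience` checkpoints failed to beat the earlier best
    FID by at least `min_delta`. fid_history is oldest first; lower is better."""
    if len(fid_history) <= patience:
        return False
    best_before = min(fid_history[:-patience])
    best_recent = min(fid_history[-patience:])
    return best_recent > best_before - min_delta


history = [210.0, 150.0, 110.0, 92.0, 88.0, 87.8, 88.3, 87.9]
print(has_plateaued(history))      # True: little gain over the last 3 checks
print(has_plateaued(history[:4]))  # False: still improving quickly
```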

14. Can I use DCGAN for data augmentation in supervised learning?

Yes, this is a major application. Process:

Train DCGAN on limited dataset

Generate additional synthetic samples

Mix real and synthetic data for training classifier

Effectiveness: The COVID-19 X-ray study (Applied Sciences, 2023) showed DCGAN augmentation substantially improved classification accuracy. The medical imaging review (Neural Computing and Applications, 2023) found DCGAN was the second-most popular augmentation method.

Best Practices:

Balance real and synthetic data (typically 50/50 ratio)

Validate classifier on real-only test set

Monitor for "synthetic data overfitting" where model performs better on synthetic validation than real test data
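A minimal sketch of the mixing step, assuming the examples are already in memory as lists. The real test split is set aside before anything else, so it never contains synthetic items. For the same reason, the DCGAN itself should be trained only on the real training split. The function name, the seed, and the string placeholders are illustrative.

```python
import random


def build_training_set(real, synthetic, synthetic_fraction=0.5, seed=0):
    """Mix real and synthetic examples so synthetic ones make up `synthetic_fraction`.

    All real examples are kept; synthetic ones are sampled to hit the ratio.
    Returns a shuffled list of (example, is_synthetic) pairs.
    """
    if not 0.0 <= synthetic_fraction < 1.0:
        raise ValueError("synthetic_fraction must be in [0, 1)")
    rng = random.Random(seed)
    n_synth = round(len(real) * synthetic_fraction / (1.0 - synthetic_fraction))
    n_synth = min(n_synth, len(synthetic))
    mixed = [(x, False) for x in real] + [(x, True) for x in rng.sample(synthetic, n_synth)]
    rng.shuffle(mixed)
    return mixed


real = [f"real_{i}" for i in range(120)]
synthetic = [f"fake_{i}" for i in range(500)]
real_test, real_train = real[:20], real[20:]  # real-only test set, held out first
train = build_training_set(real_train, synthetic)
print(len(train), sum(flag for _, flag in train))
```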

15. What programming languages and skills do I need to implement DCGANs?

Required:

Python: All major frameworks use Python

NumPy: Array manipulation and mathematical operations

Deep Learning Framework: PyTorch or TensorFlow/Keras

Basic Neural Networks: Understanding of convolutions, backpropagation, optimization

Helpful:

Computer Vision: Image preprocessing, augmentation techniques

Linear Algebra: Matrix operations, gradients, optimization theory

Probability: Understanding of distributions, sampling

Experiment Tracking: Weights & Biases, TensorBoard for monitoring training

Learning Path:

Complete Andrew Ng's Deep Learning Specialization (Coursera) or fast.ai course

Work through official PyTorch/TensorFlow DCGAN tutorials

Implement DCGAN on simple dataset (Fashion-MNIST)

Modify architecture for your specific application

Study research papers for advanced techniques

Timeline: With programming experience, expect 2-3 months from zero deep learning knowledge to implementing custom DCGAN applications.

16. Are there legal or ethical concerns with using DCGANs?

Yes, several important considerations:

Copyright and Ownership:

Training on copyrighted images (artwork, photographs) raises legal questions about derivative works

Generated outputs may inadvertently reproduce training data too closely

Solutions: Train on licensed datasets, use datasets with clear terms (CC0, MIT licenses)

Deepfakes and Misinformation:

DCGANs can generate realistic fake images potentially used for deception

The Discover Applied Sciences paper (October 2025) specifically addressed detecting DCGAN-generated deepfakes

Responsible use: Watermark synthetic images, disclose AI-generated content

Privacy:

Medical imaging applications must comply with HIPAA (US), GDPR (EU), and other privacy regulations

Never train on patient data without proper anonymization and consent

The medical imaging studies reviewed obtained appropriate institutional review board approval

Bias Amplification:

DCGANs can amplify biases present in training data (racial, gender stereotypes)

Critical in applications like hiring, healthcare, law enforcement

Mitigation: Audit datasets for representation, test generated outputs for bias

Environmental Impact:

Training large models contributes to carbon emissions

DCGANs are relatively efficient compared to transformers, but still consider environmental costs

17. How do I debug my DCGAN when training fails?

Systematic debugging approach:

Step 1: Verify Data Pipeline

Print batch shapes and check for correct dimensions

Visualize real training images to confirm proper loading and preprocessing

Verify normalization to [-1, 1] range

Step 2: Check Architecture Implementation

Confirm generator output shape matches real images

Verify discriminator outputs single probability score

Check activation functions (ReLU → Generator, LeakyReLU → Discriminator)

Confirm batch normalization is applied correctly

Step 3: Monitor Training Metrics

Track both generator and discriminator loss

Monitor discriminator accuracy (should be 50-80%)

Generate sample images every 100 iterations

Step 4: Diagnose Specific Issues

Discriminator loss = 0: Discriminator too strong → reduce discriminator learning rate

Generator loss > 5 and increasing: Generator can't learn → increase generator capacity or reduce discriminator capacity

Mode collapse: Restart with larger batch size or different initialization

Checkerboard artifacts: Use kernel size divisible by stride (e.g., 4×4 with stride 2)

Step 5: Simplify and Isolate

Test on simple dataset (Fashion-MNIST) first

Use pre-existing working implementation as baseline

Change one hyperparameter at a time

The GitHub DCGAN implementations mentioned earlier provide well-tested baseline code for comparison.
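The checkerboard rule in Step 4 comes from uneven overlap, an explanation popularized by Odena, Dumoulin and Olah's 2016 Distill article. When the kernel size is not divisible by the stride, some output positions receive more contributions than their neighbours. The 1-D sketch below counts those contributions and ignores padding, which only trims the borders. The function names are illustrative.

```python
def overlap_counts(in_size, kernel, stride):
    """Number of (input, kernel-tap) pairs writing to each output position
    of a 1-D transposed convolution without padding."""
    out_size = (in_size - 1) * stride + kernel
    counts = [0] * out_size
    for i in range(in_size):
        for k in range(kernel):
            counts[i * stride + k] += 1
    return counts


def interior_is_even(kernel, stride, in_size=8):
    """True when every output away from the borders gets the same number of contributions."""
    interior = overlap_counts(in_size, kernel, stride)[kernel:-kernel]
    return len(set(interior)) == 1


print(overlap_counts(4, kernel=3, stride=2))  # alternating 1s and 2s: checkerboard
print(overlap_counts(4, kernel=4, stride=2))  # flat interior
print(interior_is_even(3, 2), interior_is_even(4, 2))
```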

18. What's the relationship between DCGAN and modern diffusion models?

Different Paradigms:

DCGANs: Adversarial training, single-step generation from noise

Diffusion Models (DDPM, Stable Diffusion): Iterative denoising process, no adversarial component

Performance Comparison:

Image Quality: Diffusion models generally produce higher-quality, more diverse images with fewer artifacts

Training Stability: Diffusion models train more stably without mode collapse issues

Generation Speed: DCGANs generate images in single forward pass (~0.01 seconds); diffusion models require multiple denoising steps (~1-5 seconds)

Market Position: According to GM Insights (July 2025), diffusion models show a 28% CAGR, while GAN growth is more moderate. The Grand View Research report (2024) puts transformers at 42% market share, with diffusion networks growing rapidly. The 74% GAN share for 2023 cited earlier comes from a different segmentation: the two figures sum to more than 100%, so they cannot describe the same breakdown of the market.

Use Case Selection:

Choose DCGAN: Real-time generation requirements, limited computational budget, well-understood domain with existing DCGAN success

Choose Diffusion: Highest quality requirements, stable training priority, text-to-image generation

Historical Context: DCGANs (2015) preceded diffusion models' mainstream adoption (2020-2022) by 5-7 years. Many innovations from GAN research influenced diffusion model development.

19. How can I contribute to DCGAN research in 2024-2025?

Valuable contribution areas:

Applied Research:

Domain-specific DCGAN adaptations (new medical imaging modalities, industrial inspection, etc.)

Efficiency improvements for edge devices and mobile deployment

Hybrid architectures combining DCGAN with transformers or diffusion models

Theoretical Work:

Mathematical analysis of training dynamics

Better understanding of mode collapse mechanisms

Formal convergence proofs for variants

Practical Tools:

Better hyperparameter selection tools

Automated architecture search for DCGAN variations

Interpretability tools for latent space exploration

Ethical and Social Impact:

Bias detection and mitigation frameworks

Synthetic data quality assurance for critical applications

Privacy-preserving DCGAN training methods

Publication Venues: Neural Computing and Applications, Applied Sciences, IEEE/CVF conferences (CVPR, ICCV), machine learning conferences such as ICML, and domain-specific journals for applications (e.g., Medical Physics, Molecular Pharmaceutics).

20. Where can I find datasets for training DCGANs?

General Purpose:

CelebA: 202,599 celebrity face images - http://mmlab.ie.cuhk.edu.hk/projects/CelebA.html

ImageNet: 14 million images across 20,000 categories - https://www.image-net.org

LSUN: Large-scale scenes (bedrooms, churches, restaurants) - https://www.yf.io/p/lsun

Fashion-MNIST: 70,000 fashion product images - https://github.com/zalandoresearch/fashion-mnist

Medical Imaging:

ACDC: Cardiac cine-MRI dataset - https://acdc.creatis.insa-lyon.fr

SLiver07: Liver CT segmentation - https://sliver07.grand-challenge.org

IDRiD: Indian Diabetic Retinopathy Image Dataset - https://ieee-dataport.org/open-access/indian-diabetic-retinopathy-image-dataset-idrid

ChestX-ray14: 112,120 chest X-rays - https://nihcc.app.box.com/v/ChestXray-NIHCC

Specialized Domains:

FFHQ: High-quality face dataset (70,000 images, 1024×1024) - https://github.com/NVlabs/ffhq-dataset

AFHQ: Animal faces (cats, dogs, wildlife) - https://github.com/clovaai/stargan-v2

WikiArt: Paintings and artworks - https://github.com/cs-chan/ArtGAN

Oxford Flowers: Flower images with detailed annotations - https://www.robots.ox.ac.uk/~vgg/data/flowers/

Dataset Licenses: Always check license terms. Many academic datasets restrict commercial use. Creative Commons datasets (CC0, CC BY) allow broader usage. The CelebA dataset requires attribution and non-commercial use agreement.

Key Takeaways

DCGANs revolutionized generative AI by introducing architectural principles that stabilized GAN training, enabling scalable image synthesis. The November 2015 paper by Radford et al. remains one of the most influential works in deep learning with over 30,000 citations.

The generative AI market is experiencing explosive growth, reaching $67.18 billion in 2024 and projected to hit $967.65 billion by 2032 (39.6% CAGR). GANs captured 74% of this market in 2023, with DCGANs as a foundational architecture.

Five architectural innovations define DCGAN: strided convolutions replace pooling, batch normalization stabilizes training, fully connected layers are eliminated, ReLU activates generators while LeakyReLU activates discriminators, and Tanh bounds generator outputs.

DCGANs power real-world applications across diverse fields—from generating synthetic medical images that fool expert radiologists (60% detection accuracy), to designing fashion patterns (implemented by Zalando and luxury brands), to discovering novel drug compounds targeting cannabinoid receptors.

Training DCGANs requires balancing two competing networks through adversarial minimax optimization. Success depends on proper hyperparameter selection (Adam with learning rate 0.0002 and β1 = 0.5, batch size 32-128), monitoring for mode collapse, and patience through 10,000-50,000 training iterations.

DCGANs excel in specific scenarios: projects with limited computational budgets (train on consumer GPUs), applications requiring training stability over peak quality, domains with established DCGAN success (medical imaging, fashion), and rapid prototyping needs.

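Performance evaluation combines quantitative metrics and domain expertise. FID scores measure image quality (lower is better); the comparisons cited here place DCGAN around 60-90 and StyleGAN around 4-20, but FID depends heavily on dataset, resolution, and sample count, so scores are only comparable within the same setup. Downstream task performance often matters more: medical imaging studies found that segmentation accuracy gave a better assessment of clinical relevance.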

Common pitfalls can derail DCGAN training: inconsistent preprocessing, wrong activation functions, imbalanced network training, insufficient batch sizes, and inadequate monitoring. Following established architectural guidelines dramatically improves success rates.

DCGANs remain relevant despite newer architectures because they offer optimal tradeoffs for many use cases—computational efficiency, adequate quality, proven stability, extensive documentation, and easier debugging compared to more complex alternatives.

The future involves hybrid approaches and domain specialization rather than pure architecture replacement. Recent research (2024-2025) combines DCGAN with XGBoost, transformers, and StyleGAN components, adapting proven principles to emerging applications in wireless systems, climate modeling, and privacy-preserving healthcare.

Actionable Next Steps

Ready to start working with DCGANs? Follow this concrete roadmap:

For Beginners (0-3 Months Experience)

Week 1-2: Foundation Building

Complete the "Convolutional Neural Networks" course in Andrew Ng's Deep Learning Specialization on Coursera (free to audit)

Read the original DCGAN paper (Radford et al., 2015) - available free at https://arxiv.org/abs/1511.06434

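Set up a development environment: install Python, PyTorch or TensorFlow (check each framework's installation page for the Python versions it currently supports), and, for GPU training, an NVIDIA driver plus whatever CUDA components the framework requires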

Week 3-4: First Implementation

Work through official DCGAN tutorial: PyTorch (https://pytorch.org/tutorials/beginner/dcgan_faces_tutorial.html) or TensorFlow (https://www.tensorflow.org/tutorials/generative/dcgan)

Train your first DCGAN on Fashion-MNIST dataset (download from https://github.com/zalandoresearch/fashion-mnist)

Experiment with hyperparameters: try different learning rates (0.0001, 0.0002, 0.0005) and batch sizes (16, 32, 64)
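The learning-rate and batch-size sweep above can be organized as a small grid. This is a minimal sketch that assumes you plug in your own training function; holding Adam's β1 at 0.5 follows the original DCGAN paper, and the `build_grid` helper is hypothetical.

```python
from itertools import product

# Values from the roadmap item; beta1 = 0.5 follows the DCGAN paper's Adam setting.
LEARNING_RATES = [0.0001, 0.0002, 0.0005]
BATCH_SIZES = [16, 32, 64]
ADAM_BETA1 = 0.5


def build_grid(learning_rates, batch_sizes, beta1=ADAM_BETA1):
    """Return one config dict per (learning rate, batch size) combination."""
    return [
        {"lr": lr, "batch_size": bs, "beta1": beta1}
        for lr, bs in product(learning_rates, batch_sizes)
    ]


if __name__ == "__main__":
    for config in build_grid(LEARNING_RATES, BATCH_SIZES):
        # Replace this print with a call to your own (hypothetical) train(config).
        print(config)
```

Nine runs is small enough to compare side by side; change one variable at a time so differences in sample quality can be attributed.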

Month 2: Project Application

Choose a personal project: generate artwork, create synthetic product images, or augment a small dataset you have

Collect or download an appropriate dataset (500-5,000 images at minimum, depending on how varied the images are)

Implement DCGAN from scratch using tutorial code as reference

Document results, track FID scores, save generated samples at regular intervals
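Two details make saved samples comparable across checkpoints: drawing one fixed batch of latent vectors up front (the PyTorch DCGAN tutorial does this with 64 vectors), and mapping generator outputs from the Tanh range [-1, 1] back to pixel values, the inverse of the [-1, 1] scaling the DCGAN paper applied to training images. This standard-library sketch assumes Gaussian noise, a 100-dimensional latent vector, and a 500-step logging interval; all function names are hypothetical, and the original paper sampled z from a uniform distribution instead.

```python
import random

LATENT_DIM = 100   # assumed size of z
NUM_FIXED = 64     # assumed number of samples per saved grid
LOG_EVERY = 500    # assumed logging interval, in training iterations


def to_tanh_range(pixel):
    """Map an 8-bit pixel value in [0, 255] to [-1, 1] to match Tanh outputs."""
    return pixel / 127.5 - 1.0


def to_pixel_range(value):
    """Map a generator output in [-1, 1] back to an 8-bit pixel in [0, 255]."""
    clipped = max(-1.0, min(1.0, value))
    return round((clipped + 1.0) * 127.5)


def make_fixed_noise(num, dim, seed=0):
    """Draw one batch of latent vectors once and reuse it at every checkpoint."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num)]


def should_log(step, every=LOG_EVERY):
    """True on steps where samples and metrics (e.g. FID) should be saved."""
    return step > 0 and step % every == 0


fixed_noise = make_fixed_noise(NUM_FIXED, LATENT_DIM)
```

Because the noise never changes, any difference between saved grids reflects training progress rather than a new random draw.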

Month 3: Deepening Understanding

Read recent DCGAN application papers (see References section for 2023-2024 publications)

Join communities: r/MachineLearning subreddit, Paperspace community forums, Hugging Face Discord

Share your project on GitHub with clear documentation

Get feedback from community and iterate on your implementation

For Intermediate Practitioners (3-12 Months Experience)

Step 1: Advanced Applications

Implement conditional DCGAN (cDCGAN) to control generation based on class labels

Explore transfer learning: fine-tune pre-trained CelebA DCGAN on your domain-specific dataset

Integrate DCGAN into complete ML pipeline: generate → augment → train classifier → evaluate
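For the conditional DCGAN item above, one common conditioning scheme concatenates a one-hot class label to the noise vector for the generator and gives the discriminator the label as extra constant-valued channels. The plain-Python sketch below only builds those inputs; the 10 classes (as in Fashion-MNIST), the latent size, and the helper names are assumptions, and in a real model these lists would be framework tensors.

```python
NUM_CLASSES = 10   # e.g. the 10 Fashion-MNIST categories
LATENT_DIM = 100   # assumed noise size


def one_hot(label, num_classes=NUM_CLASSES):
    """Encode an integer class label as a one-hot list."""
    if not 0 <= label < num_classes:
        raise ValueError(f"label {label} outside [0, {num_classes})")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def conditional_generator_input(noise, label, num_classes=NUM_CLASSES):
    """Concatenate noise z with the one-hot label y: the generator sees [z, y]."""
    return list(noise) + one_hot(label, num_classes)


def conditional_discriminator_maps(label, height, width, num_classes=NUM_CLASSES):
    """Broadcast the one-hot label into num_classes constant H x W maps,
    to be stacked with the image channels before the discriminator."""
    code = one_hot(label, num_classes)
    return [[[c] * width for _ in range(height)] for c in code]
```

At sampling time you pick the label, so the same trained generator can be asked for a specific class.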

Step 2: Performance Optimization

Implement proper evaluation metrics: FID score calculation, Inception Score, visual Turing tests

Address mode collapse: implement mini-batch discrimination or unrolled GAN training

Optimize training speed: use mixed-precision training (float16), data loading parallelization
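Mini-batch discrimination (Salimans et al., 2016) lets the discriminator compare samples within a batch, so a generator that emits near-identical images becomes easy to spot. In that formulation each sample's intermediate features f(x_i) are multiplied by a learned tensor T to give a matrix M_i, row-wise L1 distances between samples become similarities exp(-distance), and their sums o(x_i) are appended to f(x_i). This pure-Python sketch assumes T is supplied as nested lists; in practice it is a trainable parameter inside the discriminator.

```python
import math


def project(features, T):
    """M_i = f(x_i) . T, with T shaped [A][B][C]; returns M_i shaped [B][C]."""
    A, B, C = len(T), len(T[0]), len(T[0][0])
    return [
        [sum(features[a] * T[a][b][c] for a in range(A)) for c in range(C)]
        for b in range(B)
    ]


def minibatch_features(batch_features, T):
    """o(x_i)_b = sum over all j in the batch of exp(-||M_i,b - M_j,b||_1)."""
    M = [project(f, T) for f in batch_features]
    n, B = len(M), len(T[0])
    out = []
    for i in range(n):
        o_i = []
        for b in range(B):
            total = 0.0
            for j in range(n):
                l1 = sum(abs(p - q) for p, q in zip(M[i][b], M[j][b]))
                total += math.exp(-l1)
            o_i.append(total)
        out.append(o_i)
    return out


def augment_with_minibatch_features(batch_features, T):
    """Concatenate f(x_i) with o(x_i) before the discriminator's next layer."""
    extra = minibatch_features(batch_features, T)
    return [list(f) + o for f, o in zip(batch_features, extra)]
```

When the batch collapses to identical samples every similarity is 1, so o(x_i) grows to the batch size; a diverse batch gives smaller values, which the discriminator can learn to exploit.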

Step 3: Domain Specialization

Medical Imaging: Download ACDC or ChestX-ray14 dataset, implement DCGAN for data augmentation

Fashion/Design: Work with DeepFashion dataset, generate novel clothing designs

Art Generation: Train on WikiArt or specific artist collections, explore style transfer

Step 4: Publication and Sharing

Write detailed blog post documenting your implementation and results

Create Jupyter notebook tutorial for specific domain application

Submit pre-trained model to Hugging Face Model Hub

Consider writing technical report or submitting to workshop/conference

For Advanced Researchers and Engineers (12+ Months Experience)

Research Directions:

Efficiency Research: Develop pruned or quantized DCGAN variants for mobile/edge deployment

Theoretical Analysis: Investigate training dynamics, mode collapse mechanisms, convergence properties

Novel Applications: Apply DCGAN to emerging domains (3D generation, audio synthesis, time-series)

Hybrid Architectures: Combine DCGAN with transformers, diffusion models, or other modern techniques

Production Deployment:

Containerize DCGAN with Docker for reproducible deployments

Implement REST API for generation service using FastAPI or Flask

Deploy to cloud platforms (AWS SageMaker, Google Cloud Vertex AI, Azure ML)

Implement monitoring and logging for production model quality tracking

Community Contribution:

Contribute to open-source DCGAN implementations (PyTorch, TensorFlow)

Create comprehensive tutorial series for underserved domains

Organize workshops or study groups at local universities or companies

Publish research in academic venues (NeurIPS, ICCV, CVPR) or specialized journals

Industry Integration:

Build proprietary DCGAN applications for your organization's specific needs

Develop DCGAN-powered products (design tools, data augmentation services, content generation)

Consult for organizations implementing generative AI solutions

Train internal teams on DCGAN implementation and best practices

Recommended Learning Resources

Online Courses:

Deep Learning Specialization by Andrew Ng (Coursera) - Comprehensive foundation

Practical Deep Learning for Coders by fast.ai - Hands-on approach

GANs Specialization by deeplearning.ai (Coursera) - GAN-specific focus

Books:

"Deep Learning" by Goodfellow, Bengio, Courville - Theoretical foundations (Chapter 20, Deep Generative Models)

"Generative Deep Learning" by David Foster - Practical implementations

"Hands-On Generative Adversarial Networks with PyTorch 1.x" by John Hany and Greg Walters - Step-by-step tutorials

Research Papers (Essential Reading):

Radford et al. (2015) - Original DCGAN paper

Goodfellow et al. (2014) - Original GAN paper

Heusel et al. (2017) - FID metric introduction

Recent application papers from References section below

Communities and Forums:

r/MachineLearning - Reddit community for ML discussions

Papers With Code - Code implementations of research papers

Hugging Face Discord - Active community for open-source AI

AI Stack Exchange - Q&A for technical issues

Tools for Experimentation:

Google Colab - Free GPU access for learning

Kaggle Notebooks - Free GPU/TPU, dataset hosting

Weights & Biases - Experiment tracking and visualization

TensorBoard - Training monitoring (TensorFlow/PyTorch)

Final Encouragement: Start small, iterate quickly, and don't fear failure. Every successful DCGAN implementation involved multiple failed training runs, hyperparameter adjustments, and debugging sessions. The key is persistent experimentation combined with solid understanding of the underlying principles. Your first generated images may be blurry and unrealistic—that's normal and expected. With patience and systematic refinement, you'll achieve impressive results that demonstrate the power of adversarial generative modeling.

Glossary

Activation Function: Nonlinear function applied to a neuron's weighted input to produce its output, allowing the network to model non-linear relationships (loosely, it determines how strongly a neuron "fires"). Common examples: ReLU, LeakyReLU, Sigmoid, Tanh.
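The activation functions named in this glossary are short enough to write out directly. This is a minimal plain-Python sketch; the 0.2 LeakyReLU slope matches the DCGAN paper, and the sigmoid is written in a numerically stable form so large negative inputs do not overflow.

```python
import math


def relu(x):
    """ReLU: max(0, x). Used in DCGAN generator hidden layers."""
    return max(0.0, x)


def leaky_relu(x, slope=0.2):
    """LeakyReLU: x for x > 0, slope * x otherwise (DCGAN discriminators use 0.2)."""
    return x if x > 0 else slope * x


def tanh(x):
    """Tanh squashes values into (-1, 1); used on the DCGAN generator output."""
    return math.tanh(x)


def sigmoid(x):
    """Sigmoid maps to (0, 1); used for the discriminator's real/fake probability."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # safe for large negative x
    return z / (1.0 + z)
```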

Adversarial Training: Training process where two neural networks compete against each other—one generates fake data, the other tries to detect it. The competition drives both to improve.

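Batch Normalization: Technique that normalizes layer inputs to zero mean and unit variance using statistics from each training batch, then applies a learnable scale and shift. It stabilizes training; the original paper attributed this to reducing internal covariate shift.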
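Batch normalization for a single feature can be sketched as below, assuming one scalar per example. In a convolutional layer the same statistics are computed per channel over the batch and all spatial positions, and at inference time running averages replace batch statistics; `gamma` and `beta` stand in for the learned scale and shift.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a batch, then scale by gamma and shift by beta.

    Uses the biased (population) batch variance, as during training.
    """
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    inv_std = 1.0 / math.sqrt(var + eps)  # eps avoids division by zero
    return [gamma * (v - mean) * inv_std + beta for v in values]
```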

Convolutional Neural Network (CNN): Neural network architecture using convolutional layers designed for processing grid-structured data like images. Learns hierarchical spatial features.

Discriminator: Component of GAN that tries to distinguish between real data from training set and fake data generated by generator. Acts as a binary classifier.

Epoch: One complete pass through the entire training dataset. DCGAN training commonly runs for 50-200 epochs, though the right number depends on dataset size and batch size.
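Epoch counts and iteration counts describe the same training budget at different scales. This small sketch converts between them, assuming one iteration means one optimizer step on one batch. With the 60,000-image Fashion-MNIST training split and a batch size of 128, one epoch is 469 iterations, so the 10,000-50,000 iteration range quoted in Key Takeaways corresponds to roughly 22-107 epochs.

```python
import math


def iterations_per_epoch(dataset_size, batch_size, drop_last=False):
    """Optimizer steps per pass over the data (a partial last batch counts unless dropped)."""
    if drop_last:
        return dataset_size // batch_size
    return math.ceil(dataset_size / batch_size)


def total_iterations(dataset_size, batch_size, epochs, drop_last=False):
    """Total optimizer steps for a given number of epochs."""
    return epochs * iterations_per_epoch(dataset_size, batch_size, drop_last)


def epochs_for(target_iterations, dataset_size, batch_size, drop_last=False):
    """Smallest number of epochs that reaches at least target_iterations steps."""
    per_epoch = iterations_per_epoch(dataset_size, batch_size, drop_last)
    return math.ceil(target_iterations / per_epoch)
```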

Fréchet Inception Distance (FID): Metric measuring similarity between distribution of generated images and real images using Inception v3 features. Lower scores indicate better quality.
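FID fits a Gaussian to each set of Inception v3 features and measures the Fréchet distance between them: FID = ||mu_r - mu_g||^2 + Tr(Sigma_r + Sigma_g - 2 (Sigma_r Sigma_g)^(1/2)). Standard implementations use the full covariance of 2048-dimensional Inception pool features and a matrix square root (for example `scipy.linalg.sqrtm`). The sketch below simplifies by assuming diagonal covariances, where the trace term reduces to a per-dimension sum; it illustrates the formula and is not a substitute for a reference FID implementation.

```python
import math


def mean_and_var(features):
    """Per-dimension mean and population variance of a list of feature vectors."""
    n, dim = len(features), len(features[0])
    mu = [sum(f[d] for f in features) / n for d in range(dim)]
    var = [sum((f[d] - mu[d]) ** 2 for f in features) / n for d in range(dim)]
    return mu, var


def fid_diagonal(mu_real, var_real, mu_gen, var_gen):
    """Frechet distance between two Gaussians with diagonal covariances."""
    if not (len(mu_real) == len(var_real) == len(mu_gen) == len(var_gen)):
        raise ValueError("all statistics must have the same dimension")
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_real, mu_gen))
    # For diagonal covariances, (Sigma_r Sigma_g)^(1/2) is the elementwise sqrt.
    cov_term = sum(vr + vg - 2.0 * math.sqrt(vr * vg) for vr, vg in zip(var_real, var_gen))
    return mean_term + cov_term
```

Identical statistics give 0; shifting the means or changing the spread both raise the score, which is why lower FID means generated features sit closer to real ones.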

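Fully Connected Layer: Neural network layer where every neuron connects to every neuron in the previous layer. Also called a "dense layer." DCGAN eliminates fully connected hidden layers; only the generator's first layer (a matrix multiplication that projects the noise vector before it is reshaped) and the discriminator's final single sigmoid output remain dense-like.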

Generator: Component of GAN that creates synthetic data from random noise. In DCGANs, uses transposed convolutions to upsample noise into images.
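The DCGAN guidelines can be written down as a layer table and checked mechanically. This sketch describes a 64×64 RGB model: the generator's channel widths follow the paper's LSUN generator diagram (1024, 512, 256, 128, then 3 output channels), while the discriminator widths, the layer-kind names, and the `check_dcgan_rules` helper are assumptions made for illustration.

```python
# Each entry: (layer kind, output channels, uses batch norm, activation).
GENERATOR = [
    ("project_and_reshape", 1024, True, "relu"),  # z -> 4x4x1024
    ("conv_transpose", 512, True, "relu"),        # -> 8x8
    ("conv_transpose", 256, True, "relu"),        # -> 16x16
    ("conv_transpose", 128, True, "relu"),        # -> 32x32
    ("conv_transpose", 3, False, "tanh"),         # -> 64x64x3, no batch norm
]

# Assumed widths that mirror the generator.
DISCRIMINATOR = [
    ("conv_stride2", 128, False, "leaky_relu"),   # no batch norm on the input layer
    ("conv_stride2", 256, True, "leaky_relu"),
    ("conv_stride2", 512, True, "leaky_relu"),
    ("conv_stride2", 1024, True, "leaky_relu"),
    ("flatten_to_output", 1, False, "sigmoid"),   # single real/fake probability
]

FORBIDDEN_KINDS = {"max_pool", "avg_pool", "dense_hidden"}


def check_dcgan_rules(generator, discriminator):
    """Return human-readable violations of the DCGAN guidelines (empty if none)."""
    problems = []
    if generator[-1][2]:
        problems.append("generator output layer should not use batch norm")
    if generator[-1][3] != "tanh":
        problems.append("generator output should use tanh")
    if any(act != "relu" for _, _, _, act in generator[:-1]):
        problems.append("generator hidden layers should use ReLU")
    if discriminator[0][2]:
        problems.append("discriminator input layer should not use batch norm")
    if any(act != "leaky_relu" for _, _, _, act in discriminator[:-1]):
        problems.append("discriminator hidden layers should use LeakyReLU")
    if discriminator[-1][3] != "sigmoid":
        problems.append("discriminator output should use sigmoid")
    if any(kind in FORBIDDEN_KINDS for kind, _, _, _ in generator + discriminator):
        problems.append("replace pooling and fully connected hidden layers with strided convolutions")
    return problems
```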

Gradient: Vector of partial derivatives indicating direction and rate of steepest increase for a function. Neural networks optimize by following gradients.

Gradient Descent: Optimization algorithm that iteratively adjusts neural network parameters in direction opposite to gradient, minimizing loss function.
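A one-variable example shows the update rule behind gradient descent. DCGAN itself trains with Adam (learning rate 0.0002, β1 = 0.5), an adaptive variant; this sketch uses the plain rule x <- x - lr * grad(x) on an assumed toy function.

```python
def gradient_descent(grad, x0, lr=0.1, steps=100):
    """Repeatedly step against the gradient: x <- x - lr * grad(x)."""
    x = x0
    for _ in range(steps):
        x = x - lr * grad(x)
    return x


# Toy objective f(x) = (x - 3)^2 with gradient 2 * (x - 3); its minimum is x = 3.
x_min = gradient_descent(lambda x: 2.0 * (x - 3.0), x0=0.0)
print(round(x_min, 6))  # 3.0
```

Each step shrinks the distance to the minimum by a factor of 0.8 here; a learning rate that is too large (above 1.0 for this function) would make the iterates diverge instead.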

Hyperparameter: Configuration variable set before training begins (learning rate, batch size, number of layers). Not learned from data; must be manually specified or tuned.

Inception Score (IS): Metric evaluating both quality and diversity of generated images using Inception v3 network. Higher scores indicate better performance.
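The Inception Score is IS = exp(E_x[KL(p(y|x) || p(y))]), where p(y|x) is Inception v3's class prediction for a generated image and p(y) is the average prediction over all generated images; confident, varied predictions give a high score. The sketch below takes the class-probability vectors as given (running the Inception network is out of scope), and many implementations also average the score over several splits of the samples.

```python
import math


def inception_score(class_probs, eps=1e-12):
    """IS = exp(mean_i KL(p(y|x_i) || p(y))), with p(y) the batch-average prediction.

    class_probs: list of per-image probability vectors, each summing to 1.
    """
    n, k = len(class_probs), len(class_probs[0])
    marginal = [sum(p[c] for p in class_probs) / n for c in range(k)]
    mean_kl = 0.0
    for p in class_probs:
        # Terms with p_c = 0 contribute nothing (0 * log 0 is taken as 0).
        kl = sum(
            pc * (math.log(pc + eps) - math.log(mc + eps))
            for pc, mc in zip(p, marginal)
            if pc > 0
        )
        mean_kl += kl / n
    return math.exp(mean_kl)
```

Identical predictions for every image give the minimum score of 1; perfectly confident predictions spread evenly over k classes give the maximum of k.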

Latent Space: Lower-dimensional representation where generator's input noise vectors reside. Points close in latent space produce similar generated images.
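The DCGAN paper probed its latent space by walking between points and checking that the generated images change smoothly. A minimal sketch of linear interpolation between two latent vectors follows; feeding each vector to a trained generator is left out, and some practitioners prefer spherical interpolation for Gaussian latents.

```python
def lerp(z_a, z_b, t):
    """Linear interpolation between latent vectors: (1 - t) * z_a + t * z_b."""
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]


def interpolation_path(z_a, z_b, steps):
    """Evenly spaced latent vectors from z_a to z_b, endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [lerp(z_a, z_b, i / (steps - 1)) for i in range(steps)]
```

Sudden jumps between neighbouring images along such a path can hint that the generator has memorized samples rather than learned a smooth representation.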

LeakyReLU: Activation function allowing a small gradient for negative inputs (slope typically 0.01-0.2), preventing "dead neurons." Used in DCGAN discriminators with a slope of 0.2.

Loss Function: Mathematical function measuring difference between model predictions and desired outputs. Training aims to minimize this.

Minimax Game: Game theory concept where one player maximizes a payoff while the other minimizes it. In a GAN, the discriminator maximizes the shared objective and the generator minimizes it.
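For GANs the value function is V(D, G) = E_x[log D(x)] + E_z[log(1 - D(G(z)))], which the discriminator maximizes and the generator minimizes. The sketch below estimates it from discriminator probabilities on one batch, passed in as plain lists for illustration. It also shows the non-saturating generator loss -E_z[log D(G(z))], which the original GAN paper suggested because it gives stronger gradients early in training (the PyTorch DCGAN tutorial uses this form).

```python
import math

EPS = 1e-12  # guards against log(0)


def value_function(d_real, d_fake):
    """Batch estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))]."""
    real_term = sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def discriminator_loss(d_real, d_fake):
    """D maximizes V, i.e. minimizes -V (binary cross-entropy with labels 1 and 0)."""
    return -value_function(d_real, d_fake)


def generator_loss_saturating(d_fake):
    """Literal minimax generator objective: minimize E[log(1 - D(G(z)))]."""
    return sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)


def generator_loss_non_saturating(d_fake):
    """Practical alternative: minimize -E[log D(G(z))]."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)
```

At the theoretical equilibrium the discriminator outputs 0.5 everywhere, so V = -log 4 and the discriminator loss is 2 log 2 (about 1.386).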

Mode Collapse: Failure case where generator produces limited variety of outputs, ignoring parts of data distribution. Generator finds a few outputs that fool discriminator and stops exploring.
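A crude way to monitor for mode collapse is to compare the diversity of a generated batch with that of a real batch. This sketch uses mean pairwise Euclidean distance between flattened samples; the 0.5 threshold and the function names are hypothetical, and pixel distance is only a rough proxy (distances in a pretrained feature space are usually more informative).

```python
import itertools
import math


def mean_pairwise_distance(samples):
    """Average Euclidean distance over all pairs of flattened samples."""
    pairs = list(itertools.combinations(samples, 2))
    if not pairs:
        raise ValueError("need at least two samples")
    return sum(math.dist(a, b) for a, b in pairs) / len(pairs)


def collapse_warning(gen_samples, real_samples, ratio=0.5):
    """Flag a generated batch whose diversity falls below ratio x that of real data."""
    return mean_pairwise_distance(gen_samples) < ratio * mean_pairwise_distance(real_samples)
```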

Overfitting: Phenomenon where model learns training data too well, including noise and peculiarities, resulting in poor generalization to new data.

Perceptual Loss: Loss function based on high-level features extracted by pre-trained networks (like VGG) rather than pixel-wise differences. Produces more perceptually realistic images.

ReLU (Rectified Linear Unit): Activation function outputting input if positive, zero otherwise: f(x) = max(0, x). Used in DCGAN generators.

Stride: Step size with which a convolutional filter moves across its input. In a standard convolution, larger strides produce smaller outputs; in a transposed convolution, they produce larger ones. DCGAN uses stride 2 for downsampling (discriminator) and upsampling (generator).
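The stride-2 size changes can be checked with the standard output-size formulas for convolution and transposed convolution (dilation 1). The sketch assumes the kernel size 4, stride 2, padding 1 configuration used in the PyTorch DCGAN tutorial, plus a first generator layer with kernel 4, stride 1, padding 0 that turns a 1×1 latent input into a 4×4 feature map.

```python
def conv_out(size, kernel=4, stride=2, padding=1):
    """Output size of a standard convolution: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def conv_transpose_out(size, kernel=4, stride=2, padding=1, output_padding=0):
    """Output size of a transposed convolution: (n - 1) * s - 2p + k + output_padding."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def trace(size, fn, layers):
    """Apply an output-size function `layers` times and record each spatial size."""
    sizes = [size]
    for _ in range(layers):
        size = fn(size)
        sizes.append(size)
    return sizes


# Discriminator: stride-2 convolutions halve a 64x64 input down to 4x4.
print(trace(64, conv_out, 4))               # [64, 32, 16, 8, 4]
# Generator: project 1x1 to 4x4 (k=4, s=1, p=0), then double back up to 64x64.
first = conv_transpose_out(1, kernel=4, stride=1, padding=0)
print(trace(first, conv_transpose_out, 4))  # [4, 8, 16, 32, 64]
```

With kernel 4, stride 2, and padding 1, each layer exactly halves or doubles the spatial size, which is why this configuration is a convenient default for power-of-two image sizes.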

Strided Convolution: Convolutional operation with stride > 1, reducing spatial dimensions. Replaces pooling layers in DCGAN discriminator.

Synthetic Data: Artificially generated data that mimics statistical properties of real data. DCGANs create synthetic images for data augmentation.