What Is A Vector Database? The Complete 2026 Guide to Modern AI Data Storage

- Muiz As-Siddeeqi

- 4 days ago

Every time you ask ChatGPT a question about your company's documents, search for similar products on Amazon, or get eerily accurate Netflix recommendations, there's often an invisible technology working behind the scenes. It's not magic; it's a vector database, and it's quietly revolutionizing how AI systems understand and retrieve information. In 2024, this technology powered a USD 2.2 billion market that is projected to grow at 21.9% annually (Global Market Insights, 2024-12-01). Yet most people have never heard of it. That changes today.

TL;DR

Vector databases store data as mathematical vectors (arrays of numbers) rather than traditional rows and columns, enabling AI systems to find similar information based on meaning, not just exact matches

The market grew from USD 1.66 billion in 2023 to USD 2.2 billion in 2024, projected to reach USD 7-10 billion by 2030-2032 depending on the analysis (Grand View Research, 2024; Verified Market Research, 2025-03-05)

51% of enterprise AI implementations now use Retrieval Augmented Generation (RAG), up from 31% the year before, and most RAG systems retrieve their context from a vector database (MarketsandMarkets, 2025)

Real companies see dramatic results: VIPSHOP reduced recommendation query times to below 30ms using Milvus; HumanSignal revolutionized healthcare data labeling with vector search (Zilliz Learn, 2024-01-09)

HNSW (Hierarchical Navigable Small World) indexing enables roughly logarithmic search time, making it possible to search billions of vectors in milliseconds, far faster than an exhaustive scan

Choose based on your needs: Pinecone for managed simplicity, Milvus for massive scale, Qdrant for cost-effectiveness, Weaviate for hybrid search, or ChromaDB for rapid prototyping

A vector database is a specialized data storage system that stores information as high-dimensional vectors—numerical representations that capture the meaning and context of data like text, images, or audio. Unlike traditional databases that find exact matches, vector databases use mathematical similarity measures (like cosine similarity) to find semantically related information, making them essential for AI applications including recommendation engines, semantic search, and large language model systems.

What Is A Vector Database? The Foundation

Core Definition: A vector database is a purpose-built data storage and retrieval system optimized for high-dimensional vectors. These vectors are numerical representations—typically arrays of 100 to 4,096 numbers—that encode the semantic meaning of complex data like text, images, audio, or video.

Traditional databases excel at exact matching. If you search for "red shoes" in a SQL database, you get products with that exact phrase. Vector databases work differently. They understand that "crimson footwear" and "scarlet sneakers" mean roughly the same thing. They compute mathematical distances between vectors to find similar items, even when the words differ completely.

This capability matters because most valuable data is unstructured. IDC's Global DataSphere Forecast estimated that the world created 64.2 zettabytes of data in 2020 and expected about 180 zettabytes by 2025, and about 80% of all data is unstructured (Verified Market Research, 2025-03-05). Photos, emails, videos, audio recordings, and documents don't fit neatly into rows and columns. Vector databases solve this by converting everything into a common mathematical format that machines can compare.

The transformation process uses embedding models: neural networks trained to convert data into vectors. When you feed text into OpenAI's text-embedding-3-small model, it returns a 1,536-dimensional vector by default (text-embedding-3-large returns 3,072 dimensions). Individual dimensions are rarely interpretable on their own, but together they encode aspects of meaning such as topic, sentiment, formality, and countless other subtle features. Similar concepts produce similar vectors. The vectors for "dog" and "puppy" point in nearly the same mathematical direction, while "dog" and "skyscraper" point in very different directions.

The Economic Impact

The numbers tell the growth story. Multiple market research firms confirm explosive expansion:

Global Market Insights reported the market reached USD 2.2 billion in 2024, growing at 21.9% CAGR toward 2034 (Global Market Insights, 2024-12-01)

Grand View Research estimated USD 1.66 billion in 2023, projecting USD 7.34 billion by 2030 at 23.7% CAGR (Grand View Research, 2024)

MarketsandMarkets forecasts growth from USD 2.65 billion in 2025 to USD 8.95 billion by 2030 at 27.5% CAGR (MarketsandMarkets, 2025)

Verified Market Research projects USD 2.2 billion in 2024 reaching USD 10.4 billion by 2032 at 21.7% CAGR (Verified Market Research, 2025-03-05)
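The growth rates in these forecasts follow the standard compound annual growth rate formula, CAGR = (end value / start value)^(1 / years) - 1. As a quick sanity check, here is a minimal Python sketch that applies it to the Grand View Research and MarketsandMarkets figures quoted above. It only checks that each forecast is internally consistent, not whether the forecast itself is right.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# Grand View Research: USD 1.66B (2023) -> USD 7.34B (2030), 7 years
print(f"Grand View Research: {cagr(1.66, 7.34, 7):.1%}")
# MarketsandMarkets: USD 2.65B (2025) -> USD 8.95B (2030), 5 years
print(f"MarketsandMarkets:   {cagr(2.65, 8.95, 5):.1%}")
```

Run as-is, it should print about 23.7% and 27.6%. Those values match the reported 23.7% and 27.5%, and the small gap on the second figure is plausibly due to rounding in the published numbers.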

North America dominates with 36.6% to 41.2% market share, driven by early AI adoption in tech companies (MarketsandMarkets, 2025; Technavio, 2025-12-12). But retail and e-commerce sectors show the fastest growth at 33.8% CAGR, as companies race to personalize customer experiences (Grand View Research, 2024).

The Math Behind the Miracle: How Vectors Work

Understanding vectors requires no advanced mathematics, just a different way of thinking about data.

What Is a Vector?

A vector is simply a list of numbers. The vector [0.2, 0.8, -0.3] is a 3-dimensional vector. Most AI applications use 384 to 1,536 dimensions, but some reach 4,096 or higher. Each number represents a feature or characteristic extracted by the embedding model.

Think of it like describing a person. You might use numbers for height (1.75 meters), weight (70 kg), age (32 years), income level (55,000), education years (16), and hundreds of other features. Together, these numbers create a mathematical fingerprint. Two people with similar vectors across all dimensions are likely to be similar people in meaningful ways.

Measuring Similarity

Vector databases use distance metrics to compare vectors. The three most common are:

Euclidean Distance: The straight-line distance between two points. If vectors are coordinates in space, Euclidean distance measures how far apart they are. Lower values mean more similar.

Cosine Similarity: Measures the angle between vectors, ignoring magnitude. Two vectors pointing in the same direction (small angle) are similar even if one is much longer. This works well for text where length matters less than direction.

Dot Product: Multiplies corresponding elements and sums them. Higher values indicate more similarity. Fast to compute but sensitive to vector length.

Different applications prefer different metrics, and the best choice usually matches the metric the embedding model was trained with. Text search typically uses cosine similarity because document length shouldn't affect relevance, while many image-search systems use Euclidean distance.
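To make the three metrics concrete, here is a minimal plain-Python sketch. The three small vectors are made up for illustration: `longer` points in the same direction as `short` but is twice as long, and `other` is orthogonal to both.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two points; lower means more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dot_product(a, b):
    """Sum of element-wise products; higher means more similar, but grows with length."""
    return sum(x * y for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Cosine of the angle between a and b; 1.0 means the same direction."""
    return dot_product(a, b) / (math.sqrt(dot_product(a, a)) * math.sqrt(dot_product(b, b)))


short = [1.0, 2.0, 0.0]
longer = [2.0, 4.0, 0.0]  # same direction as `short`, twice the length
other = [0.0, 0.0, 3.0]   # orthogonal to `short`

print(cosine_similarity(short, longer))   # same direction: 1.0 up to rounding
print(euclidean_distance(short, longer))  # yet the points are sqrt(5) apart
print(dot_product(short, longer), dot_product(short, other))
```

The example shows why the choice matters: cosine similarity treats `short` and `longer` as identical, while Euclidean distance does not. If every vector is normalized to unit length, the dot product equals cosine similarity, which is why many systems normalize embeddings once at ingestion and then use the cheaper dot product.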

The Dimensionality Problem

Here's the challenge: searching through millions or billions of high-dimensional vectors takes time. Computing the distance from a query vector to every stored vector requires billions of calculations. For a database with 100 million 1,536-dimensional vectors, a single exact search requires 153.6 billion floating-point operations.

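At a deliberately pessimistic 1 millisecond per vector comparison, one query against 100 million vectors would take 100,000 seconds, or about 27.8 hours. Even hardware sustaining 100 billion floating-point operations per second would need roughly 1.5 seconds per query, far too slow for real-time applications serving many users. The solution? Approximate nearest neighbor (ANN) search and specialized indexing structures.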
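For reference, exact search is easy to write; the problem is only its cost. The sketch below is a minimal exhaustive ("flat") top-k search in plain Python, with random vectors standing in for real embeddings. Every query scores every stored vector, so the work grows with the number of vectors times their dimensionality, the same product behind the 100 million × 1,536 example.

```python
import heapq
import math
import random


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def exact_top_k(query, vectors, k):
    """Exhaustive search: score every stored vector and keep the k best.

    Cost is proportional to len(vectors) * dimensions, which is exactly what
    ANN indexes such as HNSW and IVF are designed to avoid.
    """
    scored = ((cosine(query, v), i) for i, v in enumerate(vectors))
    return heapq.nlargest(k, scored)  # list of (similarity, index), best first


random.seed(0)
dim = 64
vectors = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(1_000)]
query = [x + random.gauss(0, 0.1) for x in vectors[42]]  # a noisy copy of vector 42

for score, idx in exact_top_k(query, vectors, k=3):
    print(idx, round(score, 3))
```

Exact search like this is still useful in practice as the ground truth for measuring how much recall an ANN index gives up.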

From Idea to Industry: The History of Vector Databases

Vector similarity search isn't new, but vector databases as a product category emerged only recently.

Early Foundations (1990s-2010s)

The theoretical groundwork began in the 1990s with locality-sensitive hashing (LSH). Researchers Indyk and Motwani introduced LSH in 1998 as a way to find approximate nearest neighbors in high-dimensional spaces faster than exhaustive search (Survey of Vector Database Management Systems, 2024-02).

Throughout the 2000s and early 2010s, academic work continued on tree-based indexes (like k-d trees and ball trees) and graph-based methods. Spotify released Annoy (Approximate Nearest Neighbors Oh Yeah) as an open-source library optimized for read-heavy workloads. Facebook (now Meta) developed FAISS (Facebook AI Similarity Search) in C++ with Python bindings, focusing on GPU acceleration for massive-scale vector search.

These were libraries, not databases. They lacked durability, concurrent writes, distributed architectures, and other database features. Developers had to build infrastructure around them.

The Explosion (2020-2024)

Two forces converged around 2020: better embedding models and the rise of large language models (LLMs). Transformer architectures like BERT produced higher-quality embeddings. Then OpenAI released ChatGPT in late 2022, demonstrating LLMs' potential—and their limitations.

LLMs trained on static datasets couldn't access current information or private company data. Retrieval Augmented Generation (RAG) emerged as the solution: retrieve relevant documents from a vector database, then feed them to the LLM for context-aware generation. RAG became the killer application for vector databases.

Venture capital flooded in. Pinecone raised $138 million by 2023. Weaviate, Qdrant, Chroma, and others launched. Established database vendors scrambled to add vector capabilities—PostgreSQL via pgvector, MongoDB Atlas Vector Search, Oracle Database 23ai with AI Vector Search (Oracle, 2024-09), Elasticsearch with vector fields, Redis with vector similarity search.

By 2024, over 40 specialized vector database platforms competed for market share (arXiv, 2025-02-28). But the hype also triggered skepticism. A VentureBeat analysis in November 2025 noted that 95% of organizations investing in generative AI saw zero measurable returns, and that pure vector search alone often fell short without hybrid architectures (VentureBeat, 2025-11-16).

Current State (2025-2026)

The market has matured. The consensus in 2025: vector databases are essential infrastructure, but not silver bullets. Hybrid approaches combining vector search with keyword search (BM25), graph relationships (GraphRAG), and metadata filtering deliver better results than vectors alone.

Oracle introduced GPU-accelerated vector search in partnership with NVIDIA (Oracle Blog, 2024). Salesforce announced Data Cloud Vector Database in June 2024, enabling businesses to unify unstructured customer data (Grand View Research, 2024). MongoDB expanded vector search features across Community Edition and Enterprise Server in September 2025 (Polaris Market Research, 2024).

The technology has moved from "shiny object" to "critical building block" in enterprise AI stacks.

How Vector Databases Actually Work

Vector databases share a common architecture despite implementation differences. Understanding the core components clarifies how they deliver fast similarity search.

Core Components

1. Vector Storage Engine

The storage layer persists vectors efficiently. Unlike relational databases storing structured rows, vector storage optimizes for dense numerical arrays. Some systems store vectors in memory for speed (Redis); others use disk-based storage with clever caching (Milvus, Qdrant). Storage decisions affect latency, throughput, and cost.

Compression techniques like quantization reduce memory footprint. Product Quantization (PQ) divides vectors into chunks, approximating each chunk with a smaller representation. Scalar quantization converts 32-bit floats to 8-bit integers. These methods reduce memory by 4x to 32x with acceptable accuracy loss.
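Scalar quantization is simple enough to sketch in a few lines. This example assumes symmetric per-vector scaling onto signed 8-bit codes, which is only one of several schemes; production systems often calibrate value ranges per dimension or per data segment instead.

```python
import struct


def quantize_int8(vector):
    """Map float values linearly onto signed 8-bit codes in [-127, 127].

    Returns the codes plus the per-vector scale needed to decode them.
    """
    max_abs = max(abs(x) for x in vector) or 1.0  # avoid dividing by zero
    scale = max_abs / 127.0
    codes = [round(x / scale) for x in vector]
    return codes, scale


def dequantize_int8(codes, scale):
    """Approximate reconstruction of the original floats."""
    return [c * scale for c in codes]


vector = [0.12, -0.98, 0.45, 0.003, -0.27, 0.66]
codes, scale = quantize_int8(vector)
restored = dequantize_int8(codes, scale)

float32_bytes = len(struct.pack(f"{len(vector)}f", *vector))  # 4 bytes per value
int8_bytes = len(struct.pack(f"{len(codes)}b", *codes))        # 1 byte per value
print(codes)
print(f"{float32_bytes} bytes -> {int8_bytes} bytes")
print(max(abs(a - b) for a, b in zip(vector, restored)))  # worst-case rounding error
```

The 4x saving comes directly from storing one byte instead of four per dimension, and the reconstruction error per value is at most half of `scale`, which is the "acceptable accuracy loss" being traded for memory.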

2. Indexing Subsystem

Raw vector storage enables exact search but not fast search. Indexes structure vectors to skip irrelevant candidates. The indexing subsystem builds and maintains these structures.

Popular index types include:

HNSW (Hierarchical Navigable Small World): Graph-based, multi-layer structure for logarithmic search

IVF (Inverted File): Cluster-based, partitions vectors into regions

LSH (Locality-Sensitive Hashing): Hash-based, maps similar vectors to same buckets

Tree-based: k-d trees, ball trees, or VP trees for lower-dimensional data

Index construction happens at data ingestion. Some indexes (like HNSW) require significant build time but deliver excellent query performance. Others (like LSH) build quickly with acceptable but lower recall.

3. Query Processing Pipeline

When a query arrives, the system:

Converts the query into a vector using the same embedding model

Consults the index to identify candidate vectors

Computes exact distances to candidates

Returns the top-k most similar results

Optionally applies metadata filters or reranking

Advanced systems support hybrid queries: combine vector similarity with structured filters ("find documents about AI published after 2023"). This requires coordinating vector search with traditional predicate evaluation.
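Here is a minimal sketch of such a filtered query over a tiny in-memory collection. The records, their 3-dimensional vectors, and the `filtered_search` helper are hypothetical. The filter is applied before ranking (pre-filtering); real databases typically integrate the filter into index traversal rather than scanning a list.

```python
import math

# Hypothetical toy collection: each record has an embedding plus metadata.
documents = [
    {"id": "a", "vector": [0.9, 0.1, 0.0], "topic": "AI", "year": 2024},
    {"id": "b", "vector": [0.8, 0.2, 0.1], "topic": "AI", "year": 2021},
    {"id": "c", "vector": [0.1, 0.9, 0.2], "topic": "cooking", "year": 2024},
    {"id": "d", "vector": [0.7, 0.3, 0.0], "topic": "AI", "year": 2025},
]


def cosine(a, b):
    return sum(x * y for x, y in zip(a, b)) / (math.hypot(*a) * math.hypot(*b))


def filtered_search(query_vector, predicate, k=2):
    """Pre-filter on metadata, then rank the survivors by cosine similarity."""
    candidates = [d for d in documents if predicate(d)]
    candidates.sort(key=lambda d: cosine(query_vector, d["vector"]), reverse=True)
    return [d["id"] for d in candidates[:k]]


# "Find documents about AI published after 2023"
query_vector = [1.0, 0.0, 0.0]  # stand-in for the embedded query text
print(filtered_search(query_vector, lambda d: d["topic"] == "AI" and d["year"] > 2023))
```

Without the filter, document "b" would rank second; with it, "d" takes its place. Filtering after the vector search instead (post-filtering) is simpler but can return fewer than k results when many top matches fail the predicate.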

4. Client SDKs and APIs

Vector databases expose functionality through REST APIs, gRPC interfaces, or language-specific SDKs (Python, JavaScript, Go, Rust, Java). These abstractions hide complexity, letting developers focus on application logic rather than vector mathematics.

Modern databases also integrate with AI frameworks. LangChain, LlamaIndex, and Haystack provide high-level interfaces to multiple vector databases, enabling developers to switch providers with minimal code changes.

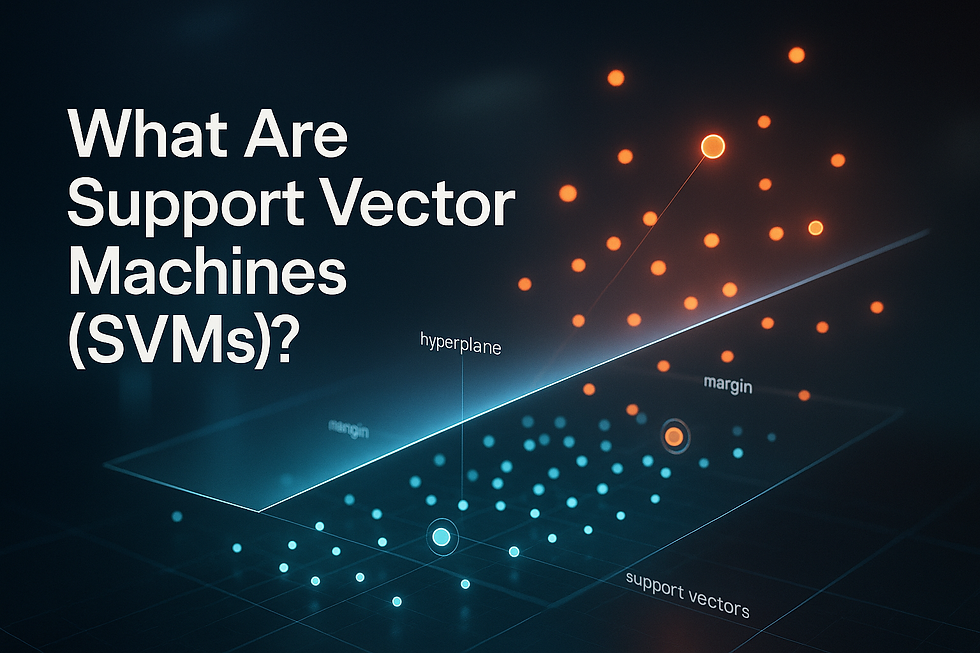

HNSW and Other Indexing Algorithms

HNSW has become the default indexing algorithm in most vector databases. Understanding why requires examining how it works and what it optimizes for.

HNSW: Hierarchical Navigable Small World

HNSW combines two concepts: skip lists and navigable small-world graphs.

Skip Lists Foundation

Skip lists are multi-layer linked lists. The bottom layer contains all elements in sorted order. Each higher layer keeps every element of the layer below with a fixed probability (commonly 1/2), creating sparser subsequences. A search starts at the top layer, moves horizontally until the next step would overshoot the target, then drops down a layer. This gives expected O(log n) search time.

Navigable Small Worlds

An NSW graph connects each vector to several neighbors. These connections include both short-range links (to very similar vectors) and long-range links (to more distant ones). Greedy search starts at an entry point and repeatedly jumps to the neighbor closest to the query. In a well-constructed NSW, this reaches approximate nearest neighbors quickly.

Hierarchical Layers

HNSW builds multiple layers of NSW graphs stacked vertically. The top layer is sparsest with longest links; the bottom layer is densest with shortest links. Every vector in a higher layer also exists in all layers below.
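How high a vector reaches is decided at random when it is inserted. The original HNSW paper draws the top layer as level = ⌊-ln(U) · mL⌋, with U uniform on (0, 1] and mL commonly set to 1/ln(M). The sketch below follows that formula; individual databases may differ in the details.

```python
import math
import random
from collections import Counter


def random_level(m, rng):
    """Draw the top layer for a new node: floor(-ln(U) * mL) with mL = 1/ln(M)."""
    m_l = 1.0 / math.log(m)
    u = 1.0 - rng.random()  # in (0, 1], so log(u) is always defined
    return int(-math.log(u) * m_l)


rng = random.Random(7)
levels = Counter(random_level(16, rng) for _ in range(100_000))
for level in sorted(levels):
    # A node drawn at level L also appears in every layer below it,
    # so layer L holds every node whose drawn level is >= L.
    print(level, levels[level])
```

With M = 16, roughly 1 in 16 vectors reaches layer 1 and roughly 1 in 256 reaches layer 2. That exponential thinning is what keeps the upper layers sparse.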

When searching:

Enter at the top layer's designated entry point

Greedily traverse to the closest neighbor repeatedly

When no neighbor is closer, drop to the layer below

Continue until reaching the bottom layer

Return the k-nearest neighbors found

This structure achieves logarithmic search complexity: O(log n) with proper tuning. The top layers let queries "jump over" huge swaths of irrelevant data.
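The search procedure can be sketched in plain Python. This is a simplified illustration, not a full HNSW implementation. The layers are hypothetical hand-built chains over 64 points on a number line, whereas real HNSW builds its graph incrementally from random levels using neighbor-selection heuristics. Upper layers use plain greedy moves, and layer 0 uses a best-first beam search whose width plays the role of the `ef` search parameter.

```python
import heapq
import math


def greedy_closest(query, entry, graph, points):
    """Upper-layer step: keep moving to a neighbor that is closer to the query."""
    current, improved = entry, True
    while improved:
        improved = False
        for neighbor in graph.get(current, []):
            if math.dist(points[neighbor], query) < math.dist(points[current], query):
                current, improved = neighbor, True
                break
    return current


def beam_search(query, entry, graph, points, ef):
    """Layer-0 step: best-first search that keeps the ef closest nodes seen so far."""
    d_entry = math.dist(points[entry], query)
    visited = {entry}
    candidates = [(d_entry, entry)]  # min-heap: closest unexpanded node first
    results = [(-d_entry, entry)]    # max-heap via negation: worst result on top
    while candidates:
        d, node = heapq.heappop(candidates)
        if d > -results[0][0]:       # nothing left that could improve the results
            break
        for neighbor in graph.get(node, []):
            if neighbor in visited:
                continue
            visited.add(neighbor)
            d_n = math.dist(points[neighbor], query)
            if len(results) < ef or d_n < -results[0][0]:
                heapq.heappush(candidates, (d_n, neighbor))
                heapq.heappush(results, (-d_n, neighbor))
                if len(results) > ef:
                    heapq.heappop(results)  # drop the current worst result
    return sorted((-neg_d, n) for neg_d, n in results)


def hnsw_search(query, layers, entry, points, k, ef):
    """Greedy descent through the upper layers, then a beam search on layer 0."""
    for graph in layers[:-1]:  # layers are ordered from sparsest (top) to layer 0
        entry = greedy_closest(query, entry, graph, points)
    return [n for _, n in beam_search(query, entry, layers[-1], points, max(ef, k))[:k]]


def chain(ids):
    """Hypothetical toy layer: link each id to its neighbors in the list."""
    graph = {i: [] for i in ids}
    for a, b in zip(ids, ids[1:]):
        graph[a].append(b)
        graph[b].append(a)
    return graph


# 64 points on a number line; every 4th point reaches layer 1, every 16th layer 2.
points = {i: (float(i),) for i in range(64)}
layers = [chain(list(range(0, 64, 16))), chain(list(range(0, 64, 4))), chain(list(range(64)))]
print(hnsw_search((37.3,), layers, entry=0, points=points, k=3, ef=8))
```

For the query 37.3, the search hops 0 → 16 → 32 on the top layer, 32 → 36 on the middle layer, and only then explores individual points, returning the nearest three: 37, 38, and 36.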

HNSW Parameters

Three key parameters tune HNSW performance:

M: Maximum number of connections per node in each layer. Higher M improves recall but increases memory and build time. Typical values: 16-48.

efConstruction: Size of dynamic candidate list during index construction. Higher values improve index quality but slow insertion. Typical values: 100-500.

ef: Size of dynamic candidate list during search. Higher values improve recall but slow queries. Adjusted at query time, not construction.

According to Weaviate documentation, HNSW indexes scale logarithmically, but memory requirements grow with the number of vectors and connections (Weaviate Docs, 2024). As a reference point, 1 million 384-dimensional float32 vectors occupy about 1.5 GB on their own (1,000,000 × 384 × 4 bytes), and the graph links for M=16 add overhead on top of that.
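A rough memory estimate can be worked out from the parameters. The sketch below is a lower bound under stated assumptions modeled on hnswlib-style layouts, not on any specific database. It assumes uncompressed float32 vectors, 4-byte neighbor ids, up to 2·M links per node on layer 0 and M on upper layers, and about N / (M - 1) upper-layer node copies in total (which follows from drawing levels with mL = 1/ln(M)). It ignores metadata, per-node headers, and allocator overhead.

```python
def hnsw_memory_estimate(num_vectors, dims, m, bytes_per_value=4, bytes_per_link=4):
    """Rough lower-bound memory estimate for an HNSW index, in gigabytes.

    Assumptions (hnswlib-style layout, not any specific database):
    - vectors stored uncompressed (float32 by default);
    - layer 0 keeps up to 2*M neighbor ids per node, upper layers up to M;
    - about N / (M - 1) node copies live in the upper layers in total;
    - metadata, headers, and allocator overhead are ignored.
    """
    vector_bytes = num_vectors * dims * bytes_per_value
    layer0_links = num_vectors * 2 * m * bytes_per_link
    upper_links = (num_vectors / (m - 1)) * m * bytes_per_link
    return {
        "vectors_gb": vector_bytes / 1e9,
        "graph_gb": (layer0_links + upper_links) / 1e9,
        "total_gb": (vector_bytes + layer0_links + upper_links) / 1e9,
    }


print(hnsw_memory_estimate(1_000_000, 384, m=16))
```

Under these assumptions the raw vectors dominate (about 1.5 GB of roughly 1.7 GB), which is why quantization is the usual lever for shrinking HNSW memory. Real deployments typically need more once metadata and runtime overhead are counted.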

Alternative Indexing Methods

IVF (Inverted File Flat)

IVF first clusters the dataset using k-means or similar algorithms. Each cluster gets a centroid. At query time, the system compares the query vector to cluster centroids, identifies the nearest few clusters, and exhaustively searches only those clusters. This trades exhaustive global search for exhaustive local search within clusters.

IVF works well for very large datasets where even HNSW becomes memory-intensive. The downside: lower recall if clusters poorly capture the data distribution, and sensitivity to the number of clusters chosen.
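The two IVF stages can be sketched in plain Python. The parameter names `nlist` (number of clusters) and `nprobe` (clusters searched per query) borrow FAISS's terminology. Clustering here is plain Lloyd's k-means on random data, whereas real systems train on a sample with heavily optimized code.

```python
import math
import random


def nearest(point, centroids):
    return min(range(len(centroids)), key=lambda c: math.dist(point, centroids[c]))


def kmeans(points, k, iterations, rng):
    """Plain Lloyd's k-means; empty clusters keep their previous centroid."""
    centroids = rng.sample(points, k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        centroids = [
            [sum(col) / len(cl) for col in zip(*cl)] if cl else centroids[i]
            for i, cl in enumerate(clusters)
        ]
    return centroids


class IVFIndex:
    def __init__(self, vectors, nlist, rng):
        self.vectors = vectors
        self.centroids = kmeans(vectors, nlist, iterations=10, rng=rng)
        # Inverted lists: centroid id -> ids of the vectors assigned to it.
        self.lists = {c: [] for c in range(nlist)}
        for i, v in enumerate(vectors):
            self.lists[nearest(v, self.centroids)].append(i)

    def search(self, query, k, nprobe):
        # 1) Compare the query with every centroid and keep the nprobe closest.
        probe = sorted(range(len(self.centroids)),
                       key=lambda c: math.dist(query, self.centroids[c]))[:nprobe]
        # 2) Exhaustive search, but only inside the probed clusters.
        candidates = [i for c in probe for i in self.lists[c]]
        candidates.sort(key=lambda i: math.dist(query, self.vectors[i]))
        return candidates[:k]


rng = random.Random(1)
data = [[rng.uniform(-1, 1) for _ in range(8)] for _ in range(2_000)]
index = IVFIndex(data, nlist=20, rng=rng)
query = data[123]
print(index.search(query, k=5, nprobe=3))
```

With `nprobe=3`, each query scans only about 3/20 of the data. Raising `nprobe` toward `nlist` improves recall at the cost of speed, and at `nprobe == nlist` the search degenerates into exact search.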

LSH (Locality-Sensitive Hashing)

LSH uses hash functions that map similar vectors to the same buckets with high probability. Multiple hash tables with different hash functions increase recall. LSH is memory-efficient and supports streaming data well, but recall often lags HNSW.

LSH suits extremely high-dimensional sparse vectors where other methods struggle. Text processing with bag-of-words representations benefits from LSH.
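The bucketing idea can be sketched with random-hyperplane LSH, a classic family for cosine similarity. This is an illustrative choice: for sparse bag-of-words text, MinHash (which targets Jaccard similarity) is another common option. The table count, bit count, and sample vectors below are arbitrary.

```python
import random
from collections import defaultdict


class HyperplaneLSH:
    """Random-hyperplane LSH for cosine similarity.

    In each of `num_tables` tables, bit j of a vector's signature records which
    side of random hyperplane j the vector falls on. Vectors separated by a small
    angle tend to share signatures, so they land in the same buckets.
    """

    def __init__(self, dims, num_bits, num_tables, seed=0):
        rng = random.Random(seed)
        self.planes = [
            [[rng.gauss(0, 1) for _ in range(dims)] for _ in range(num_bits)]
            for _ in range(num_tables)
        ]
        self.tables = [defaultdict(list) for _ in range(num_tables)]

    @staticmethod
    def _signature(vector, planes):
        return tuple(sum(p * x for p, x in zip(plane, vector)) >= 0 for plane in planes)

    def add(self, item_id, vector):
        for planes, table in zip(self.planes, self.tables):
            table[self._signature(vector, planes)].append(item_id)

    def candidates(self, vector):
        """Union of the buckets the query lands in; exact re-ranking comes next."""
        found = set()
        for planes, table in zip(self.planes, self.tables):
            found.update(table.get(self._signature(vector, planes), []))
        return found


lsh = HyperplaneLSH(dims=3, num_bits=4, num_tables=3, seed=42)
lsh.add("doc-1", [1.0, 0.9, 0.0])
lsh.add("doc-2", [-1.0, 0.2, 0.5])
print(lsh.candidates([2.0, 1.8, 0.0]))  # same direction as doc-1
```

More bits per table make buckets more selective, while more tables give a near neighbor more chances to collide with the query. Tuning both is how LSH trades recall against memory.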

Product Quantization with IVF

Combining IVF with PQ (Product Quantization) creates memory-efficient indexes for billion-scale datasets. IVF provides coarse filtering; PQ compresses vectors within clusters. FAISS popularized this approach for large-scale image search.
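The PQ half of that combination can be sketched compactly. To keep it short, this version samples each codebook from the data instead of training it with k-means as standard PQ does, and it omits the IVF stage. The sizes (16 dimensions, 4 sub-spaces, 32 codewords) are arbitrary. Distances are computed asymmetrically: the query stays exact, stored vectors are represented only by their codes, and each query builds one small lookup table per sub-space.

```python
import math
import random


def split(vector, m):
    """Cut a vector into m equal-length sub-vectors."""
    step = len(vector) // m
    return [vector[i * step:(i + 1) * step] for i in range(m)]


def train_codebooks(vectors, m, ksub, rng):
    """One codebook of ksub codewords per sub-space.

    Simplification: codewords are sampled sub-vectors; standard PQ runs k-means here.
    """
    return [rng.sample([split(v, m)[j] for v in vectors], ksub) for j in range(m)]


def encode(vector, books):
    """Replace each sub-vector by the id of its nearest codeword (1 byte when ksub <= 256)."""
    return bytes(
        min(range(len(book)), key=lambda c: math.dist(sub, book[c]))
        for sub, book in zip(split(vector, len(books)), books)
    )


def distance_tables(query, books):
    """Once per query: squared distances from each query sub-vector to every codeword."""
    return [[math.dist(sub, cw) ** 2 for cw in book]
            for sub, book in zip(split(query, len(books)), books)]


def adc(code, tables):
    """Asymmetric distance: one table lookup per sub-space, summed."""
    return sum(tables[j][c] for j, c in enumerate(code))


rng = random.Random(3)
dims, m, ksub = 16, 4, 32
data = [[rng.gauss(0, 1) for _ in range(dims)] for _ in range(500)]
books = train_codebooks(data, m, ksub, rng)
codes = [encode(v, books) for v in data]

print(f"float32: {dims * 4} bytes per vector -> PQ code: {len(codes[0])} bytes")
query = data[10]
tables = distance_tables(query, books)
best = min(range(len(codes)), key=lambda i: adc(codes[i], tables))
print("closest by PQ distance:", best)
```

Each 64-byte vector shrinks to a 4-byte code, and scoring a stored vector costs only four table lookups. This is why IVF+PQ can keep billion-scale collections in memory, at the price of approximate distances.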

Vector Databases vs Traditional Databases

Vector databases don't replace traditional databases. They complement them. Understanding the differences clarifies when to use each.

| Feature | Traditional (SQL/NoSQL) | Vector Database |
| --- | --- | --- |
| Data Model | Structured rows/documents | High-dimensional vectors |
| Query Type | Exact match, range, joins | Similarity search, ANN |
| Primary Index | B-trees, hash indexes | HNSW, IVF, LSH |
| Query Language | SQL, MongoDB query language | Vector similarity APIs |
| Typical Data | Structured (names, numbers, dates) | Embeddings from text, images, audio |
| Search Paradigm | "Find X WHERE attribute = value" | "Find items similar to this vector" |
| Optimization Goal | ACID compliance, transaction speed | Low-latency similarity search, recall |
| Scale Bottleneck | Complex joins, large transactions | High dimensionality, index memory |

When to Use Traditional Databases:

Storing structured records (user accounts, orders, inventory)

Enforcing referential integrity

ACID transactions across multiple tables

Exact matches, aggregations, and analytics

When to Use Vector Databases:

Semantic search over unstructured data

Recommendation engines based on user behavior embeddings

RAG systems for LLMs

Image/video similarity search

Fraud detection using behavior pattern vectors

Hybrid Architectures

Most real systems use both. A common pattern:

Store metadata in PostgreSQL (user IDs, timestamps, categories)

Store embeddings in Pinecone or Qdrant

Perform vector search to find candidates

Join results with PostgreSQL for full record details

Filter on metadata before or after vector search
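Here is a minimal sketch of that pattern using only Python's standard library. SQLite stands in for PostgreSQL, a plain dict stands in for Pinecone or Qdrant, and the products, their made-up 3-dimensional embeddings, and the `search_products` helper are hypothetical.

```python
import math
import sqlite3

# Relational side: metadata lives in a SQL table (SQLite stands in for PostgreSQL).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, category TEXT, price REAL)")
db.executemany(
    "INSERT INTO products VALUES (?, ?, ?, ?)",
    [(1, "Crimson running shoe", "footwear", 89.0),
     (2, "Scarlet sneaker", "footwear", 65.0),
     (3, "Blue rain jacket", "outerwear", 120.0)],
)

# Vector side: id -> embedding (a dict stands in for the vector database).
embeddings = {1: [0.9, 0.1, 0.0], 2: [0.85, 0.2, 0.05], 3: [0.1, 0.2, 0.95]}


def vector_search(query, k):
    """Return the ids of the k stored vectors with the highest cosine similarity."""
    def cosine(a, b):
        return sum(x * y for x, y in zip(a, b)) / (math.hypot(*a) * math.hypot(*b))
    return sorted(embeddings, key=lambda i: cosine(query, embeddings[i]), reverse=True)[:k]


def search_products(query_vector, k, max_price):
    ids = vector_search(query_vector, k)              # 1) candidates by similarity
    placeholders = ",".join("?" * len(ids))
    rows = db.execute(                                # 2) join with full records
        f"SELECT id, name, price FROM products WHERE id IN ({placeholders}) AND price <= ?",
        (*ids, max_price),                            # 3) metadata filter after search
    ).fetchall()
    rank = {pid: pos for pos, pid in enumerate(ids)}
    return sorted(rows, key=lambda row: rank[row[0]])  # keep similarity order


query_vector = [1.0, 0.15, 0.0]  # stand-in for an embedded "red shoes" query
print(search_products(query_vector, k=2, max_price=100))
```

Filtering after the vector search keeps the two systems decoupled, but it can return fewer than k results: with `max_price=70`, only the sneaker survives. Pushing the filter into the vector search avoids that at the cost of tighter coupling.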

Some databases now bridge both worlds. PostgreSQL with pgvector extension handles vectors and relational data in one system. MongoDB Atlas Vector Search combines document storage with vector indexes. This simplifies architecture but may sacrifice some performance at massive scale compared to purpose-built vector databases.

Real-World Applications Transforming Industries

Vector databases power applications most people use daily without realizing it. Here's where they're making the biggest impact.

1. Recommendation Engines

Netflix suggests shows you'll probably enjoy. Amazon recommends products similar to ones you've browsed. Spotify creates personalized playlists. Behind these features: vector databases storing user preference embeddings and item embeddings. A simple similarity search finds items close to your taste profile.
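One simple way to build a "taste profile" is to average the embeddings of items a user liked, then rank unseen items by similarity to that average. This is an illustrative assumption, not how any of the named companies necessarily do it; production recommenders often learn user embeddings directly. The titles and vectors below are made up.

```python
import math

# Hypothetical item embeddings; real ones would come from a trained model.
items = {
    "space-documentary": [0.9, 0.1, 0.0],
    "sci-fi-thriller": [0.8, 0.3, 0.1],
    "baking-show": [0.0, 0.2, 0.9],
    "astronaut-drama": [0.85, 0.2, 0.05],
}


def cosine(a, b):
    return sum(x * y for x, y in zip(a, b)) / (math.hypot(*a) * math.hypot(*b))


def recommend(watched, k):
    """Average the watched items into a taste vector, then rank unseen items."""
    dims = len(next(iter(items.values())))
    taste = [sum(items[w][d] for w in watched) / len(watched) for d in range(dims)]
    unseen = [name for name in items if name not in watched]
    return sorted(unseen, key=lambda n: cosine(taste, items[n]), reverse=True)[:k]


print(recommend(["space-documentary", "sci-fi-thriller"], k=2))
```

At scale, the final ranking step is exactly the nearest-neighbor query a vector database accelerates: the taste vector is the query, and the catalog embeddings are the stored vectors.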

E-commerce companies use visual similarity search: upload a photo, find similar products. Pinterest Lens lets users photograph objects to find matching items. These systems convert images to vectors, then search for nearby vectors in the catalog.

2. Retrieval Augmented Generation (RAG)

RAG has become the dominant enterprise AI use case. The 2024 State of Generative AI in the Enterprise report by Menlo Ventures found that RAG powers 51% of enterprise AI implementations, up from 31% a year earlier (MarketsandMarkets, 2025).

RAG workflow:

User submits a question

System converts question to a vector

Vector database retrieves relevant documents

Documents get fed to LLM as context

LLM generates an answer grounded in retrieved documents

This reduces, though does not eliminate, LLM hallucination by anchoring responses in real documents. Companies use RAG for internal knowledge bases, customer support chatbots, legal document analysis, and technical documentation search.
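The five-step workflow can be sketched end to end in plain Python, with two loud simplifications. First, `toy_embed` is a hypothetical stand-in for an embedding model: a hashed bag of words that rewards shared words, not shared meaning. Second, the final LLM call is left out; the sketch stops at the prompt that would be sent. A plain list stands in for the vector database.

```python
import math
import re
import zlib
from collections import Counter

DIMS = 1024


def toy_embed(text):
    """Hypothetical stand-in for an embedding model: a hashed bag of words."""
    vec = [0.0] * DIMS
    for word, count in Counter(re.findall(r"[a-z]+", text.lower())).items():
        vec[zlib.crc32(word.encode()) % DIMS] += count
    return vec


def cosine(a, b):
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0


knowledge_base = [
    "Refunds are issued within 14 days of a returned order.",
    "Our office is closed on public holidays.",
    "Premium support is available by phone for enterprise plans.",
]
# Ingestion: embed every document once.
index = [(doc, toy_embed(doc)) for doc in knowledge_base]


def retrieve(question, k=1):
    query_vector = toy_embed(question)                 # steps 1-2: embed the question
    ranked = sorted(index, key=lambda pair: cosine(query_vector, pair[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]              # step 3: top-k documents


def build_prompt(question, k=1):
    context = "\n".join(f"- {doc}" for doc in retrieve(question, k))
    # Step 4: retrieved documents become context. Step 5 (not shown) sends this
    # prompt to an LLM, which generates an answer grounded in the context.
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_prompt("How many days until refunds are issued?"))
```

Swapping `toy_embed` for a real embedding model is what lets retrieval match paraphrases rather than just shared words. Swapping the list for a vector database is what lets it scale past a few thousand documents.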

3. Semantic Search

Google doesn't just match keywords anymore—it understands intent. Vector databases enable this semantic understanding. When you search "how to fix a leaky faucet," the system retrieves documents about "repairing dripping taps" even though the words differ.

Enterprises deploy semantic search on internal wikis, support articles, and document repositories. Employees find relevant information faster because search understands concepts, not just keywords.

4. Fraud Detection and Anomaly Detection

Financial institutions represent transactions as vectors encoding features like amount, time, location, merchant type, and customer history. Normal transactions cluster together in vector space. Anomalous transactions fall far from clusters, triggering alerts.

Credit card companies use this to detect fraud in real time. A transaction that's similar to known fraud patterns but different from the cardholder's normal behavior gets flagged for verification.
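The "far from normal behavior" half of that check can be sketched with a k-nearest-neighbor distance score: the average distance from a new transaction to the cardholder's closest past transactions. The features, their scaling, and the threshold below are all hypothetical. Real systems use many more features and tune thresholds on labeled data, and comparing against known fraud patterns would be a second, separate similarity search.

```python
import math


def knn_anomaly_score(transaction, history, k=3):
    """Mean distance to the k closest past transactions; larger means more unusual."""
    distances = sorted(math.dist(transaction, past) for past in history)
    return sum(distances[:k]) / k


# Hypothetical pre-scaled features: [amount, hour of day, distance from home],
# each already normalized to roughly the 0-1 range.
history = [
    [0.10, 0.45, 0.02], [0.12, 0.50, 0.01], [0.08, 0.40, 0.03],
    [0.15, 0.55, 0.02], [0.11, 0.48, 0.05],
]
typical = [0.11, 0.47, 0.02]
unusual = [0.95, 0.12, 0.90]  # large amount, around 3 a.m., far from home

THRESHOLD = 0.2  # hypothetical cut-off
for name, tx in [("typical", typical), ("unusual", unusual)]:
    score = knn_anomaly_score(tx, history)
    print(name, round(score, 3), "FLAG" if score > THRESHOLD else "ok")
```

Feature scaling matters here: without it, a raw dollar amount would swamp the other features in the Euclidean distance.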

5. Image and Video Search

Reverse image search tools (Google Lens, TinEye) convert images to vectors, so they can find images visually similar to a query image even when the images share no metadata or tags.

Video platforms use this for duplicate detection and content recommendation. YouTube's recommendation engine analyzes video embeddings, not just titles and descriptions. Security companies use facial recognition by comparing face embeddings against databases of known individuals.

6. Healthcare and Life Sciences

Medical imaging systems use vector search to find similar cases. Radiologists upload an X-ray or MRI; the system retrieves similar images from historical cases with diagnoses. This assists diagnosis and reveals patterns doctors might miss.

Drug discovery teams represent molecular structures as vectors. Similarity search identifies compounds with similar properties, accelerating the search for therapeutic candidates.

Case Studies: Vector Databases in Action

Real companies implementing vector databases provide proof of concept beyond theoretical benefits. Here are three documented examples.

Case Study 1: HumanSignal – Healthcare Data Labeling

Company: HumanSignal (formerly Heartex)

Founded: 2019

Product: Label Studio, an open-source data labeling platform

Scale: 200,000+ users, 250 million data items labeled

Challenge: Healthcare organizations struggle to efficiently manage and analyze medical data for AI applications. Labeling medical images, patient records, and clinical notes requires domain experts. Finding relevant unlabeled data for specific tasks wastes time.

Solution: HumanSignal developed a Data Discovery feature within Label Studio Enterprise using Milvus, Zilliz's open-source vector database. The system converted medical data into vector embeddings, enabling semantic search.

Leveraging Milvus's support for multiple indexing algorithms (HNSW and IVF_SQ8), they optimized for both speed and efficiency. Deployment on AWS Elastic Kubernetes Service (EKS) ensured scalability and reliability (Zilliz Learn, 2024-01-09).

Results: The Data Discovery feature revolutionized healthcare data labeling. Clinicians could now search semantically for similar cases, images, or records. Instead of manually browsing thousands of unlabeled files, they queried by content and meaning. This streamlined the entire annotation workflow, reducing time-to-model for medical AI applications.

Case Study 2: VIPSHOP – E-Commerce Recommendations

Company: VIPSHOP

Industry: E-commerce (China)

Challenge: Scale and speed for product recommendation system

Background: VIPSHOP operates a major e-commerce platform. Their recommendation system suggests products to millions of users based on browsing history, purchase patterns, and product attributes. The original system used Elasticsearch for similarity search but struggled with performance as the catalog and user base grew.

Solution: After extensive research, VIPSHOP adopted Milvus to handle billions of vector embeddings. They transformed product features (images, descriptions, categories) into vector representations. Customer behavior also became vectors encoding preferences.

The system architecture leveraged Milvus's distributed deployment and multi-language SDKs for seamless integration. Data update pipelines synchronized vector data in real time (Zilliz Learn, 2024-01-09).

Results: Query response times plummeted to below 30 milliseconds. This enabled real-time personalization as users browsed. The performance improvement at scale demonstrated Milvus's capability to handle massive e-commerce workloads efficiently. VIPSHOP successfully transformed its recommendation engine into a competitive advantage.

Case Study 3: Home Depot – Search Engine Accuracy

Company: Home Depot

Industry: Home improvement retail

Application: Website search engine

Challenge: Customers searching Home Depot's website often used broad or ambiguous queries. Traditional keyword matching returned irrelevant results, frustrating users and reducing conversions.

Solution: Home Depot implemented vector search techniques powered by machine learning. Instead of exact keyword matches, the system inferred user intent from queries and product embeddings. The vector database stored embeddings for millions of products across thousands of categories (MyScale Blog, 2024).

Results: Search accuracy and usability improved significantly. Customers found relevant products faster, even when using non-standard terminology. For example, searching "thing to fix leaky pipe" now returns appropriate plumbing supplies, whereas keyword search would fail on "thing to fix." The improved search translated directly into better user experience and increased sales.

Popular Vector Database Solutions

Dozens of vector databases compete for market share. Here are the most widely adopted solutions, with their strengths and trade-offs.

Pinecone

Type: Fully managed, serverless

Launched: 2019

Best For: Teams wanting zero infrastructure management

Pinecone pioneered the fully managed vector database category. No servers to provision, no indexes to tune manually—just an API. It abstracts complexity behind a simple interface optimized for developers who want to focus on applications, not infrastructure.

Strengths:

Automatic scaling without manual intervention

Real-time indexing and querying

High availability with multi-region support

Excellent documentation and onboarding

Limitations:

Highest pricing among major providers

Less control over infrastructure and tuning

Serverless model may not suit all cost structures

Pricing: Starts with a free tier (1 index, limited vectors); paid plans scale based on pod type, storage, and queries. Enterprise pricing available for larger deployments (Liveblocks Blog, 2025-09-15).

Weaviate

Type: Open-source with managed cloud option

Launched: 2019

Best For: Hybrid search combining vectors, keywords, and graph relationships

Weaviate differentiates itself by combining vector search with a knowledge graph. Data objects connect through semantic relationships, enabling queries that blend similarity search with graph traversal. It also supports built-in vectorization modules, reducing the need for external embedding services.

Strengths:

GraphQL API familiar to many developers

Modular architecture with pluggable components

Strong hybrid search capabilities

Active open-source community

Limitations:

Higher memory requirements at very large scale (100M+ vectors)

Complex queries combining graphs and vectors add latency

Shorter trial period (14 days) for managed service

Pricing: Managed cloud starts at USD 25/month; open-source self-hosting available free with operational costs (Firecrawl Blog, 2025-10-09).

Qdrant

Type: Open-source with managed cloud option

Launched: 2021

Best For: Cost-conscious teams needing powerful filtering

Qdrant (pronounced "quadrant") offers a Rust-based implementation emphasizing performance and production readiness. It excels at combining vector search with complex metadata filters, and it persists updates through a write-ahead log for durability.

Strengths:

Best free tier: 1 GB forever, no credit card required

Sophisticated payload filtering integrated into search

RESTful and gRPC APIs

Compact memory footprint

Limitations:

Smaller ecosystem and community than Pinecone or Milvus

Complex filters on high-cardinality fields may degrade performance

Pricing: Free 1 GB tier; paid plans start around USD 25/month based on storage and compute (Firecrawl Blog, 2025-10-09; Liveblocks Blog, 2025-09-15).

Milvus (Zilliz Cloud)

Type: Open-source; Zilliz Cloud offers managed hosting

Launched: 2019

Best For: Enterprise-scale deployments with billions of vectors

Milvus is built for massive scale. It supports distributed architectures, multiple indexing algorithms (HNSW, IVF, DiskANN, and others), and GPU acceleration. Organizations with data engineering expertise and huge datasets choose Milvus for control and performance.

Strengths:

Proven at billion-vector scale

Supports widest variety of indexing methods

Kubernetes-native architecture

Strong open-source community and ecosystem (35,000+ GitHub stars)

Limitations:

Requires operational expertise for self-hosting

More complex setup than managed alternatives

Resource-intensive at scale

Pricing: Open-source is free; Zilliz Cloud (managed) offers serverless and dedicated options, starting around USD 89/month for typical workloads (Liveblocks Blog, 2025-09-15).

ChromaDB

Type: Open-source, embedded

Launched: 2022

Best For: Rapid prototyping, learning, small-scale projects (<10M vectors)

Chroma emphasizes simplicity. It embeds directly into Python applications with a small, easy-to-learn API, which makes it well suited to getting started quickly or building MVPs without infrastructure overhead.

Strengths:

Easiest to learn and deploy

Embedded mode requires no separate server

Great for experimentation and education

Active development and community (6,000+ GitHub stars)

Limitations:

Not designed for production scale beyond ~10 million vectors

Limited filtering and query capabilities compared to alternatives

Teams typically migrate to Qdrant, Pinecone, or Milvus for production

Pricing: Free and open-source (Firecrawl Blog, 2025-10-09).

PostgreSQL with pgvector

Type: Extension for existing PostgreSQL databases

Launched: pgvector 2021

Best For: Teams already using PostgreSQL, wanting unified data storage

pgvector adds vector capabilities to PostgreSQL. Store vectors alongside relational data, query them in the same transaction, and avoid managing separate infrastructure. Companion extensions such as Timescale's pgvectorscale aim to improve performance at scale.

Strengths:

Unified database for structured and vector data

Leverages existing PostgreSQL expertise and tooling

ACID compliance and mature ecosystem

Cost-effective for moderate scale

Limitations:

Performance limitations beyond 50-100 million vectors

Slower than purpose-built vector databases at massive scale

Limited indexing options compared to specialized solutions

Pricing: PostgreSQL and pgvector are open-source; hosting costs depend on infrastructure (Firecrawl Blog, 2025-10-09).

Market Dynamics

VectorDBBench and independent testing show performance differences narrow at moderate scale. Below 50 million vectors, most databases perform similarly for typical RAG workloads. Above 100 million vectors, purpose-built systems (Milvus, Pinecone, Qdrant) show clearer advantages.

The market is consolidating. Established database vendors (Oracle, MongoDB, Elasticsearch, Redis) added vector features, commoditizing basic functionality. Pure-play vector database startups must differentiate on performance, ease of use, hybrid capabilities, or specific domain expertise. A VentureBeat analysis in November 2025 noted the challenge: distinguishing one vector database from another grows harder as features converge (VentureBeat, 2025-11-16).

Pros and Cons of Vector Databases

Advantages

1. Semantic Understanding

Find information based on meaning, not just keywords. "How do I repair a dripping faucet?" matches "fixing leaky taps" without sharing words.
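Here is a minimal sketch of what "matching by meaning" looks like under the hood, assuming the embeddings already exist. The three-dimensional vectors are made-up stand-ins (real text embeddings have hundreds or thousands of dimensions), and `cosine_similarity` and `top_k` are illustrative helpers, not any database's API. The code scores every stored vector against the query, which is exact search. Vector databases replace this linear scan with an ANN index.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query: list[float], docs: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Exact (brute-force) search: score every stored vector, keep the k most similar."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical toy "embeddings"; real ones come from an embedding model.
docs = {
    "fixing leaky taps": [0.9, 0.1, 0.0],
    "unclogging a drain": [0.7, 0.3, 0.1],
    "baking sourdough bread": [0.0, 0.2, 0.9],
}
query = [0.85, 0.15, 0.05]  # stands in for "How do I repair a dripping faucet?"
print(top_k(query, docs))
```

The plumbing documents score close to 1.0 and the bread recipe scores near 0. No keyword overlap is involved, only the direction of the vectors.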

2. Unstructured Data Handling

Process images, audio, video, and text in a unified framework. Everything converts to vectors, enabling cross-modal search (find images similar to a text description).

3. Real-Time Personalization

Recommendation systems update instantly as user behavior changes. No need to retrain models or rebuild indexes overnight.

4. Scalability

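Modern indexing algorithms scale sublinearly. HNSW search cost grows roughly logarithmically with dataset size, and IVF scans only the few clusters nearest the query. With proper infrastructure, billion-vector databases deliver millisecond latency.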

5. AI Application Enablement

RAG systems, chatbots, semantic search, and other AI applications become practical. Vector databases are infrastructure for the AI age.

Disadvantages

1. Memory Requirements

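High-dimensional vectors consume significant memory. HNSW indexes keep both the vectors and the connection graph in RAM. Stored as 32-bit floats, 100 million 1,536-dimensional vectors take about 614 GB (100,000,000 × 1,536 × 4 bytes) before graph links and metadata are counted. Even with 8-bit scalar quantization, the raw vectors still need about 154 GB.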
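The arithmetic is easy to reproduce. This back-of-the-envelope helper is hypothetical, not a vendor sizing tool. It assumes float32 (4-byte) or int8 (1-byte) vector storage and counts only 2·M four-byte neighbor IDs per vector on HNSW's bottom layer, a common setting. Upper layers, metadata, and allocator overhead are ignored, so real deployments need more.

```python
def estimate_memory_gb(num_vectors: int, dims: int, bytes_per_dim: int = 4,
                       hnsw_m: int = 16, bytes_per_link: int = 4) -> dict[str, float]:
    """Rough RAM estimate for an in-memory HNSW index (simplified assumptions).

    bytes_per_dim: 4 for float32 vectors, 1 for int8 scalar-quantized vectors.
    Graph cost: 2*M neighbor IDs per vector on the bottom layer only.
    """
    vector_bytes = num_vectors * dims * bytes_per_dim
    graph_bytes = num_vectors * 2 * hnsw_m * bytes_per_link
    return {
        "vectors_gb": vector_bytes / 1e9,
        "graph_gb": graph_bytes / 1e9,
        "total_gb": (vector_bytes + graph_bytes) / 1e9,
    }

print(estimate_memory_gb(100_000_000, 1536))                   # float32 vectors
print(estimate_memory_gb(100_000_000, 1536, bytes_per_dim=1))  # int8 vectors
```

With M = 16, the raw float32 vectors dominate (614.4 GB) while the bottom-layer links add about 12.8 GB. Switching to 8-bit codes brings the vectors down to 153.6 GB.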

2. Index Build Time

Building indexes for billion-vector datasets takes hours or days. HNSW construction is CPU-intensive. This complicates initial deployment and large updates.

3. Approximate Results

ANN algorithms trade accuracy for speed. Recall (percentage of true nearest neighbors found) might be 95-98%. Some applications require exact results.

4. Complexity

Deploying and tuning vector databases requires new expertise. Choosing embedding models, configuring index parameters, and optimizing hybrid search adds operational burden.

5. Cost

Managed services charge premiums for convenience. Self-hosting requires significant infrastructure. Costs can exceed traditional databases for equivalent data volumes due to memory needs.

6. Evolving Standards

The field is young. Best practices, APIs, and tools are still maturing. Vendor lock-in risks exist as companies build on proprietary features.

Myths vs Facts

Myth 1: Vector Databases Replace Traditional Databases

Reality: Vector databases complement traditional databases, not replace them. Most applications need both. Store structured records in PostgreSQL; store embeddings in a vector database. They solve different problems.

Myth 2: Vector Search Alone Is Sufficient

Reality: Pure vector search often falls short. Hybrid approaches combining vector similarity, keyword matching (BM25), metadata filtering, and graph relationships deliver better results. Microsoft open-sourced GraphRAG in mid-2024. Benchmarks from Lettria, cited on Amazon's AI blog, showed that combining vectors with knowledge graphs boosted answer correctness from ~50% to 80%+ (VentureBeat, 2025-11-16).

Myth 3: All Vector Databases Perform Equally

Reality: Performance varies significantly based on scale, data dimensionality, query patterns, and index configuration. HNSW excels for high recall; IVF for memory-constrained environments; LSH for sparse data. Benchmark your specific workload before choosing.

Myth 4: Higher Dimensions Always Mean Better Accuracy

Reality: Returns diminish as dimensions grow. OpenAI reported that its text-embedding-3-large model, shortened to 256 dimensions, still outperforms the older text-embedding-ada-002 at its full 1,536 dimensions on the MTEB benchmark. The quality of the embedding model matters more than raw dimensionality. Very high dimensions also increase memory costs and search times without commensurate accuracy gains.

Myth 5: Vector Databases Are Only For AI Companies

Reality: Any company with unstructured data benefits. Retailers (product recommendations), healthcare (medical imaging), finance (fraud detection), media (content discovery), and many other industries deploy vector databases to solve practical business problems.

Myth 6: Expensive Managed Services Are Always Best

Reality: Cost-benefit depends on scale and team expertise. For small teams or prototypes, managed services (Pinecone) save time. For massive scale with strong ops teams, self-hosted open-source solutions (Milvus, Qdrant) offer better economics. The right choice depends on your context.

Implementation Checklist

Planning to implement a vector database? Follow this checklist to avoid common pitfalls.

1. Define Use Case and Requirements

[ ] Identify the core problem: semantic search, recommendations, RAG, or something else?

[ ] Estimate scale: millions or billions of vectors?

[ ] Determine latency requirements: <100ms, <1s, or batch processing acceptable?

[ ] Assess refresh rate: real-time updates or periodic batch?

2. Choose Embedding Model

[ ] Select embedding model based on data type (text, images, multimodal)

[ ] Typical text models: OpenAI text-embedding-3, Sentence-BERT, Cohere Embed

[ ] Typical image models: CLIP, ResNet embeddings, vision transformers

[ ] Test multiple models with your data to compare quality

3. Select Vector Database

[ ] Consider managed vs. self-hosted based on ops capability

[ ] Evaluate free tiers for prototyping (Qdrant, Pinecone, ChromaDB)

[ ] Match database strengths to your needs (hybrid search, scale, ease of use)

[ ] Check integration with existing tech stack (Python, Node.js, frameworks)

4. Design Data Pipeline

[ ] Build ingestion pipeline to convert data to vectors

[ ] Plan metadata storage (store alongside vectors or separately?)

[ ] Implement error handling and retry logic

[ ] Consider batch vs. streaming ingestion

5. Configure Indexing

[ ] Choose index type (HNSW for most use cases, IVF for extreme scale)

[ ] Tune parameters (M and efConstruction for HNSW; cluster count for IVF)

[ ] Enable compression if memory is constrained (quantization reduces footprint)

[ ] Plan for index rebuild or incremental updates

6. Implement Hybrid Search

[ ] Combine vector search with keyword search (BM25) for better recall

[ ] Add metadata filtering for faceted search

[ ] Use reranking to improve result quality

[ ] Test different weighting between vector and keyword scores

7. Build Security and Compliance

[ ] Implement access controls and authentication

[ ] Enable encryption at rest and in transit

[ ] Ensure compliance with data regulations (GDPR, HIPAA, etc.)

[ ] Plan audit logs for queries and data access

8. Monitor and Optimize

[ ] Track query latency, throughput, and recall

[ ] Monitor memory usage and disk I/O

[ ] Set up alerts for performance degradation

[ ] A/B test parameter changes on real traffic

9. Plan for Scale

[ ] Estimate growth in vector count over next 12-24 months

[ ] Test performance at 2x and 10x current scale

[ ] Plan for horizontal scaling or sharding if needed

[ ] Budget for infrastructure costs at scale

10. Document and Train Team

[ ] Create runbooks for common operations

[ ] Document embedding model versions and update procedures

[ ] Train team on vector database concepts and tooling

[ ] Establish processes for index rebuilding and data migration

Performance and Scalability Considerations

Achieving excellent performance at scale requires understanding trade-offs and optimization techniques.

Recall vs. Latency Trade-Off

ANN search trades accuracy (recall) for speed. Recall is the percentage of true nearest neighbors returned. 100% recall means exact search. 95% recall means you find 95 out of 100 true neighbors.
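Recall is straightforward to measure once you have ground truth. The following is a minimal sketch. It assumes you have already computed the exact top-k IDs for a sample of queries with brute-force search, and the approximate top-k IDs from your index. Both function names are illustrative.

```python
def recall_at_k(approx_ids: list[int], exact_ids: list[int]) -> float:
    """Fraction of the true k nearest neighbors that the ANN search returned."""
    if not exact_ids:
        raise ValueError("exact_ids must be non-empty")
    return len(set(approx_ids) & set(exact_ids)) / len(exact_ids)

def mean_recall(approx: list[list[int]], exact: list[list[int]]) -> float:
    """Average recall@k over a batch of queries (one ID list per query)."""
    return sum(recall_at_k(a, e) for a, e in zip(approx, exact)) / len(exact)

# One query: the index found 3 of the 4 true neighbors.
print(recall_at_k([3, 7, 9, 12], [3, 7, 9, 15]))  # 0.75
```

Averaging over a few hundred representative queries gives a far more stable number than spot checks.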

Higher recall requires larger candidate sets, more distance calculations, and longer query times. The trick: find the minimum recall acceptable for your application, then optimize for speed within that constraint.

For RAG applications, 90-95% recall often suffices. Missing a few relevant documents rarely breaks responses. For recommendation engines, 85% recall may be acceptable if speed enables real-time personalization. For fraud detection, higher recall (98%+) matters because false negatives cost money.

Index Parameter Tuning

HNSW exposes three main parameters. Increasing M (connections per node) improves recall but consumes more memory. Increasing efConstruction during the build improves index quality but extends build time. Increasing ef at query time improves recall but slows queries.

Start with common values (M=16, efConstruction=200, ef=100; library defaults vary) and adjust based on benchmarks. If recall is too low, increase ef first, because it is cheap and needs no rebuild. If recall is still insufficient, rebuild with a higher M or efConstruction.
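The query-time parameter ef is easiest to understand as the width of a beam search. The sketch below is a simplified, single-layer version of the greedy search HNSW runs on each graph layer. Real HNSW adds the upper layers and a smarter neighbor-selection heuristic. Neither the k-NN graph construction nor the function names come from any library. The graph is built by brute force only to keep the example self-contained.

```python
import heapq
import math

def build_knn_graph(points, k):
    """Undirected k-NN graph built by brute force (O(n^2); toy use only)."""
    graph = {i: set() for i in range(len(points))}
    for i, p in enumerate(points):
        nearest = sorted((j for j in range(len(points)) if j != i),
                         key=lambda j: math.dist(p, points[j]))[:k]
        for j in nearest:
            graph[i].add(j)
            graph[j].add(i)
    return graph

def beam_search(query, points, graph, entry, ef, k):
    """Best-first search that keeps at most ef results (HNSW-style layer search)."""
    d0 = math.dist(query, points[entry])
    visited = {entry}
    candidates = [(d0, entry)]   # min-heap: closest unexplored node first
    results = [(-d0, entry)]     # max-heap via negation: worst kept result on top
    while candidates:
        dist, node = heapq.heappop(candidates)
        if dist > -results[0][0] and len(results) >= ef:
            break                # no remaining candidate can improve the results
        for nb in graph[node]:
            if nb in visited:
                continue
            visited.add(nb)
            d = math.dist(query, points[nb])
            if len(results) < ef or d < -results[0][0]:
                heapq.heappush(candidates, (d, nb))
                heapq.heappush(results, (-d, nb))
                if len(results) > ef:
                    heapq.heappop(results)  # evict the current worst result
    best = sorted((-neg_d, idx) for neg_d, idx in results)
    return [idx for _, idx in best[:k]]

# Toy data: 20 points on a line, entry point far from the query.
points = [[float(i)] for i in range(20)]
graph = build_knn_graph(points, 2)
print(beam_search([7.2], points, graph, entry=0, ef=4, k=3))
```

A larger ef keeps more candidates alive, so the search is less likely to stop at a local optimum but computes more distances. That is the recall/latency trade-off described above.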

Memory Optimization

Vector databases are memory-hungry. Strategies to reduce footprint:

1. Quantization

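Compress vectors from 32-bit floats to 8-bit integers (scalar quantization), or split each vector into sub-vectors and replace each one with the ID of its nearest codebook centroid (product quantization). Depending on the method and settings, memory drops 4x to 32x, typically with 1-5% recall loss.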
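The following is a minimal sketch of 8-bit scalar quantization. It assumes per-dimension min/max calibration over a sample of vectors, which is one common variant. Databases differ in how they pick the range, for example by ignoring outliers. Each dimension maps to one of 256 levels, so storage drops from four bytes to one per dimension. The helper names are hypothetical.

```python
def fit_scalar_quantizer(vectors):
    """Per-dimension min/max over a calibration sample."""
    dims = len(vectors[0])
    lo = [min(v[d] for v in vectors) for d in range(dims)]
    hi = [max(v[d] for v in vectors) for d in range(dims)]
    return lo, hi

def quantize(vec, lo, hi):
    """float -> one uint8 code (0..255) per dimension."""
    codes = []
    for x, a, b in zip(vec, lo, hi):
        scale = (b - a) or 1.0              # guard against constant dimensions
        level = round((x - a) / scale * 255)
        codes.append(min(255, max(0, level)))  # clamp values outside the calibrated range
    return bytes(codes)

def dequantize(codes, lo, hi):
    """uint8 codes -> approximate floats (error at most half a step per dimension)."""
    return [a + c / 255 * ((b - a) or 1.0) for c, a, b in zip(codes, lo, hi)]

sample = [[0.0, -1.0], [1.0, 1.0], [0.5, 0.0]]
lo, hi = fit_scalar_quantizer(sample)
code = quantize([0.5, 0.0], lo, hi)
print(list(code), dequantize(code, lo, hi))
```

Distances computed on the dequantized (or directly on the integer) values are slightly off, which is where the small recall loss comes from. Many systems re-score the top candidates with the original floats to recover most of it.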

2. Disk-Based Indexes

Some systems (Milvus DiskANN, Qdrant on-disk storage) keep indexes on SSD, loading hot data into memory. Slower but supports larger datasets on limited memory.

3. Dimensionality Reduction

Use PCA or random projection to reduce vector dimensions before storage. Trade-off: information loss and potential accuracy degradation.
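Random projection is the simpler of the two to sketch. The version below is a Gaussian random projection in the Johnson-Lindenstrauss style. Each output coordinate is a random linear combination of the inputs, scaled by 1/sqrt(out_dims) so squared lengths are preserved in expectation. The dimensions, seed, and helper names are assumptions for illustration.

```python
import math
import random

def random_projection_matrix(in_dims, out_dims, seed=0):
    """out_dims x in_dims matrix of N(0, 1) entries scaled by 1/sqrt(out_dims)."""
    rng = random.Random(seed)
    scale = 1.0 / math.sqrt(out_dims)
    return [[rng.gauss(0.0, 1.0) * scale for _ in range(in_dims)]
            for _ in range(out_dims)]

def project(vec, matrix):
    """Reduce dimensionality with a matrix-vector product."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]

R = random_projection_matrix(in_dims=64, out_dims=16, seed=42)
v = [float(i % 5) for i in range(64)]
print(len(project(v, R)))  # 16
```

The matrix must be stored and reused. Queries have to be projected with exactly the same matrix as the stored vectors, or distances between them become meaningless.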

Distributed Architectures

Billion-vector datasets exceed single-machine capacity. Distributed architectures shard data across nodes. Strategies include:

Sharding by ID: Partition vectors by a hash of their ID. Simple, but it doesn't preserve locality, so every query must be sent to every shard and the partial results merged.

Sharding by Cluster: Partition based on vector clustering. Query routes to relevant shards only. Better efficiency but complex to maintain.

Replication: Duplicate data across nodes for fault tolerance and read scaling. Increases storage costs but improves availability.
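Sharding by ID implies a scatter-gather query path. A hash of the ID says nothing about where a vector sits in the embedding space, so the coordinator must ask every shard for its local top-k and then merge the results. Below is a toy, in-process sketch. The `ShardedIndex` class is hypothetical. Real systems run shards on separate nodes and use ANN indexes rather than the exact scan shown here.

```python
import hashlib
import heapq
import math

class ShardedIndex:
    """Toy hash-sharded store: each shard runs exact search, a coordinator merges."""

    def __init__(self, num_shards: int):
        self.shards = [dict() for _ in range(num_shards)]

    def _shard_for(self, vec_id: str) -> int:
        # Stable hash; Python's built-in hash() of str is randomized per process.
        digest = hashlib.sha256(vec_id.encode()).digest()
        return int.from_bytes(digest[:8], "big") % len(self.shards)

    def upsert(self, vec_id: str, vector: list[float]) -> None:
        self.shards[self._shard_for(vec_id)][vec_id] = vector

    def search(self, query: list[float], k: int) -> list[tuple[float, str]]:
        partial = []
        for shard in self.shards:  # scatter: every shard is queried
            local = heapq.nsmallest(k, ((math.dist(query, v), i) for i, v in shard.items()))
            partial.extend(local)
        return heapq.nsmallest(k, partial)  # gather: merge local top-k lists

idx = ShardedIndex(num_shards=3)
for i in range(10):
    idx.upsert(f"p{i}", [float(i)])
print(idx.search([4.1], k=2))
```

Asking each shard for k results is enough. Any vector in the global top-k has fewer than k better vectors overall, so it must also appear in its own shard's local top-k.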

Milvus, Weaviate, and Qdrant all support distributed deployments. Configuration complexity rises with scale, requiring DevOps expertise.

GPU Acceleration

GPUs excel at parallel vector operations. Oracle and NVIDIA collaborated to accelerate vector embedding generation and HNSW index creation using NVIDIA GPUs and the cuVS library (Oracle Blog, 2024). Redis and FAISS also support GPU-accelerated search.

GPU acceleration makes sense for:

Extremely large datasets (billions of vectors)

High query throughput (thousands of QPS)

Real-time embedding generation

For moderate scale (millions of vectors, hundreds of QPS), CPU-only systems often suffice.

The Future: GraphRAG and Beyond

The vector database space is evolving rapidly. Five trends define the near future.

1. GraphRAG Adoption

GraphRAG combines vector databases with knowledge graphs. Vectors capture semantic meaning; graphs encode relationships between entities. Together, they address limitations of pure vector search.

Microsoft open-sourced GraphRAG mid-2024, accelerating adoption. Amazon's AI blog cited benchmarks from Lettria showing GraphRAG boosted answer correctness from ~50% to 80%+ across finance, healthcare, industry, and law datasets (VentureBeat, 2025-11-16).

The FalkorDB blog reports that when schema precision matters, GraphRAG can outperform vector retrieval by ~3.4x on certain benchmarks. GraphRAG-Bench (released May 2025) provides rigorous evaluation across reasoning tasks and multi-hop queries (VentureBeat, 2025-11-16).

Expect more databases to integrate graph capabilities. Weaviate already supports semantic relationships. Neo4j and other graph databases are adding vector search. Hybrid systems will become standard.

2. Multimodal and Temporal Extensions

Early vector databases focused on text. The next wave handles images, audio, and video simultaneously. Multimodal embeddings (like OpenAI's CLIP) unify text and images in one vector space, enabling queries like "find product images matching this description."

Temporal awareness is emerging. T-GRAG (Temporal GraphRAG) extends GraphRAG with time dimensions, enabling queries over evolving knowledge: "What was the CEO's stance on AI in Q1 2024 vs. Q3 2024?" (VentureBeat, 2025-11-16).

3. Hybrid Search as Default

Pure vector search is fading. Hybrid approaches combining:

Vector similarity (semantic understanding)

BM25 keyword search (exact term matching)

Metadata filtering (faceted search by date, category, author)

Reranking (cross-encoder models refine results)

Adaptive RAG goes a step further, dynamically choosing a retrieval strategy based on the query type. Research in 2024-2025 showed that adaptive methods outperform single-strategy approaches (arXiv, 2025-05-28).
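One simple, widely used way to merge a vector ranking with a BM25 ranking is Reciprocal Rank Fusion (RRF). RRF scores each document by summing 1/(k + rank) across the ranked lists. This technique is shown for illustration and is not necessarily what any particular database or Adaptive RAG system uses. The constant k = 60 comes from the original RRF paper, while the optional `weights` parameter and the document IDs are assumptions.

```python
from collections import defaultdict
from typing import Optional

def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60,
                           weights: Optional[list[float]] = None) -> list[tuple[str, float]]:
    """Fuse several ranked ID lists (best first) into one ranking.

    score(d) = sum_i weight_i / (k + rank_i(d)), ranks starting at 1.
    A document missing from a list gets no contribution from that list.
    """
    if weights is None:
        weights = [1.0] * len(rankings)
    scores = defaultdict(float)
    for ranking, w in zip(rankings, weights):
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += w / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

vector_hits = ["doc_a", "doc_b", "doc_c"]  # from vector similarity search
bm25_hits = ["doc_c", "doc_a", "doc_d"]    # from keyword (BM25) search
print(reciprocal_rank_fusion([vector_hits, bm25_hits]))
```

Because RRF uses ranks rather than raw scores, cosine similarities and BM25 scores never need to be put on a common scale. The weights give a simple knob for testing different balances between vector and keyword results, and a reranker can then reorder the fused top results.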

Qdrant proposed BM42 (an improved BM25 variant), though it later acknowledged errors in the implementation. The incident highlights the industry's focus on better hybrid methods. RAGFlow, open-sourced in April 2024, made hybrid search and semantic chunking core design principles, achieving 26,000+ GitHub stars by year-end (RAGFlow Blog, 2024-12-24).

4. Commoditization and Consolidation

As major database vendors add vector features, pure-play vector database startups face commoditization. MongoDB Atlas Vector Search, Oracle Database 23ai AI Vector Search, PostgreSQL pgvector, Elasticsearch vectors—all deliver basic functionality sufficient for many use cases.

Specialized vector databases must differentiate on:

Performance at extreme scale (billions of vectors)

Hybrid capabilities (combining vectors, graphs, keywords seamlessly)

Developer experience (easy onboarding, great docs, active community)

Managed services (zero-ops for teams without infrastructure expertise)

Market consolidation is likely. Expect acquisitions as larger players absorb innovative startups. The survivors will be those solving specific pain points better than general-purpose databases.

5. Standardization Efforts

No unified query language or API exists across vector databases yet. Developers face vendor lock-in. Frameworks like LangChain and LlamaIndex provide abstraction layers, but native APIs differ significantly.

Efforts toward standardization are emerging. Open-source benchmarks (VectorDBBench, ANN-Benchmarks) create common evaluation frameworks. If the community coalesces around shared standards, interoperability improves and vendor lock-in decreases.

FAQ

1. What is a vector database in simple terms?

A vector database stores data as arrays of numbers (vectors) that represent the meaning of text, images, or other content. It quickly finds similar items by calculating mathematical distances between vectors, enabling AI applications like smart search and recommendations.

2. How is a vector database different from a traditional database?

Traditional databases find exact matches using keywords or IDs. Vector databases find similar items based on meaning, even when words differ. Traditional databases use tables and SQL; vector databases use high-dimensional vectors and similarity search algorithms.

3. What are embeddings?

Embeddings are vector representations of data created by neural networks. An embedding model converts text, images, or audio into fixed-length arrays of numbers that capture semantic meaning. Similar content produces similar embeddings.

4. Do I need a vector database for my AI application?

You need a vector database if your application involves similarity search, semantic search, recommendations, or RAG systems. If you're only doing structured queries on exact fields, a traditional database suffices.

5. Which vector database should I choose?

For managed simplicity: Pinecone. For open-source flexibility: Qdrant or Milvus. For hybrid search: Weaviate. For rapid prototyping: ChromaDB. For integrating with PostgreSQL: pgvector. The best choice depends on your scale, budget, and team expertise.

6. How much does a vector database cost?

Open-source solutions are free but require infrastructure costs (servers, memory, storage). Managed services range from free tiers (a 1 GB free cluster on Qdrant; a limited free tier on Pinecone) to hundreds or thousands of dollars monthly for larger deployments. Typical production deployments cost USD 100-500/month at moderate scale.

7. Can vector databases handle billions of vectors?

Yes. Milvus, Pinecone, and Qdrant have proven deployments at billion-vector scale. Performance depends on hardware, indexing algorithms, and architectural design. Expect significant memory requirements (hundreds of GB to TBs).

8. What is HNSW indexing?

HNSW (Hierarchical Navigable Small World) is a graph-based indexing algorithm that structures vectors in multiple layers. It achieves logarithmic search time by navigating from coarse top layers to fine bottom layers, dramatically speeding up similarity search.

9. What is RAG and why does it need vector databases?

RAG (Retrieval Augmented Generation) enhances large language models by retrieving relevant documents before generating responses. Vector databases enable fast semantic search to find those relevant documents, grounding LLM outputs in real information and reducing hallucinations.

10. Is vector search always approximate?

Most production systems use approximate nearest neighbor (ANN) search for speed. ANN algorithms achieve 90-99% recall (finding most true neighbors) with orders-of-magnitude speedup. Exact search is possible but impractically slow for large datasets.

11. What industries use vector databases most?

Technology companies dominate adoption, followed by retail/e-commerce (recommendations), finance (fraud detection), healthcare (medical imaging), media (content discovery), and legal (document search). Any industry with unstructured data benefits.

12. How do I measure vector database performance?

Key metrics: query latency (milliseconds per query), throughput (queries per second), recall (percentage of true neighbors found), memory usage, and index build time. Benchmark with your actual data and query patterns using tools like VectorDBBench.

13. Can I use vector databases with SQL databases?

Yes. Common pattern: store metadata in PostgreSQL, embeddings in a vector database. Query both and join results. Alternatively, use PostgreSQL with pgvector extension to unify storage (works well up to ~50 million vectors).

14. What is the difference between vector search and semantic search?

Semantic search finds results based on meaning, not keywords. Vector search is the technical implementation: convert queries and documents to vectors, then find nearest neighbors. Semantic search is the user-facing capability; vector search is the underlying mechanism.

15. Do vector databases require GPUs?

No. Most vector databases run efficiently on CPUs. GPUs accelerate specific workloads (embedding generation, very large-scale search) but aren't required for typical deployments. CPU-only systems handle millions of vectors with sub-100ms latency.

16. How often should I update vectors in my database?

Depends on use case. E-commerce catalogs update daily or hourly. News search updates continuously. Corporate knowledge bases might update weekly. Balance freshness needs against index rebuild costs.

17. What is quantization and should I use it?

Quantization compresses vectors by reducing numerical precision (e.g., 32-bit floats to 8-bit integers). It reduces memory by 4x+ with minimal accuracy loss (1-5% recall). Use it when memory is constrained or cost is critical.

18. Can vector databases handle multi-tenancy?

Yes. Most support multi-tenancy through namespaces, collections, or partitions. Ensure proper isolation and access controls. Pinecone, Qdrant, and Weaviate all offer multi-tenant features for SaaS applications.

19. What happens when my embedding model changes?

Changing embedding models requires re-embedding all data and rebuilding indexes. This is time-consuming at scale. Plan for model updates in your architecture. Some teams maintain multiple indexes with different models temporarily during transitions.

20. Is GraphRAG better than regular RAG?

GraphRAG combines vector search with knowledge graphs for better handling of relationships and multi-hop reasoning. Benchmarks from Lettria, cited on Amazon's AI blog, show answer correctness rising from about 50% to over 80% on complex queries. However, GraphRAG is more complex to implement. Regular RAG suffices for simpler use cases.

Key Takeaways

Vector databases are specialized systems that store high-dimensional vectors representing the semantic meaning of text, images, audio, or other data, enabling fast similarity search across billions of items.

The market is exploding, growing from USD 1.66-2.2 billion in 2023-2024 to projected USD 7-10 billion by 2030-2032 at 21-27% CAGR, driven by AI adoption and generative AI applications.

HNSW indexing dominates modern vector databases because it achieves logarithmic search complexity, enabling millisecond queries on billion-vector datasets by structuring data in hierarchical graph layers.

RAG systems depend on vector databases to ground large language models in real documents, with 51% of enterprise AI implementations now using RAG (up from 31% a year earlier).

Real companies achieve measurable results: VIPSHOP cut query times to under 30ms; HumanSignal transformed healthcare data labeling; Home Depot dramatically improved search accuracy—all using vector databases.

Hybrid architectures outperform pure vector search by combining vector similarity, keyword matching (BM25), metadata filtering, and increasingly, knowledge graph relationships (GraphRAG).

Choose databases based on needs: Pinecone for managed simplicity, Milvus for enterprise scale, Qdrant for budget-consciousness, Weaviate for hybrid search, ChromaDB for prototyping, or pgvector for PostgreSQL integration.

Trade-offs exist between recall, latency, and memory. Approximate search achieves 90-99% recall with huge speed gains. Quantization reduces memory 4-32x with minimal accuracy loss. Tune parameters for your specific workload.

GraphRAG and multimodal search define the future. Benchmarks show GraphRAG lifting answer correctness from about 50% to over 80% compared with pure vector search on complex reasoning tasks.

The technology is maturing rapidly, shifting from "shiny object" in 2022-2023 to "critical infrastructure" in 2025-2026 as best practices emerge and major database vendors integrate vector capabilities.

Actionable Next Steps

Ready to implement vector databases? Follow this roadmap:

Start small with a prototype

Use ChromaDB or Qdrant's free tier to experiment. Build a simple semantic search over 1,000-10,000 documents from your domain. This proves the concept with minimal investment.

Choose an embedding model

For text, try OpenAI text-embedding-3-small (1,536 dimensions) or Sentence-BERT. For images, or for searching images with text, use CLIP embeddings. Test multiple models on your data to compare quality.

Benchmark with your data

Don't trust vendor benchmarks alone. Load your actual data and queries into 2-3 candidate databases. Measure latency, recall, and resource usage under realistic conditions.

Design for hybrid search

Combine vector similarity with keyword search (BM25) and metadata filters. Implement reranking for top results. Hybrid approaches consistently outperform single-method systems.

Plan for scale

Estimate vector count growth over 24 months. Test at 2x and 10x current scale. Budget for memory, storage, and compute. Determine if self-hosted or managed service fits your economics.

Implement monitoring and alerts

Track query latency, throughput, recall, memory usage, and error rates. Set alerts for anomalies. Establish baselines before production launch.

Build reusable pipelines

Create ingestion pipelines that convert data to vectors, handle errors, and log metadata. Design for incremental updates, not just batch loads. Document your embedding model versions and dependencies.

Secure from day one

Implement authentication, authorization, and encryption. Plan for multi-tenancy if building SaaS. Ensure compliance with relevant regulations (GDPR, HIPAA, etc.).

Educate your team

Share resources on vector databases, embedding models, and similarity search. Run workshops to align understanding. Create internal docs and runbooks.

Join the community

Follow vector database GitHub repositories, Discord channels, and forums. The field evolves quickly. Community engagement keeps you updated on best practices, new features, and troubleshooting.

Glossary

ANN (Approximate Nearest Neighbor): Algorithms that find vectors similar to a query without checking every vector, trading perfect accuracy for speed.

BM25: A keyword-based ranking algorithm used in hybrid search to complement vector similarity with exact term matching.

Cosine Similarity: A similarity measure equal to the cosine of the angle between two vectors; higher values indicate greater similarity.

Embedding: A fixed-length vector representation of data (text, image, audio) created by a neural network.

Embedding Model: A neural network that converts raw data into vector embeddings (examples: OpenAI text-embedding-3, CLIP, Sentence-BERT).

Euclidean Distance: The straight-line distance between two points in space; lower values indicate greater similarity.

GraphRAG: A retrieval augmented generation approach combining vector search with knowledge graphs for better relational understanding.

HNSW (Hierarchical Navigable Small World): A graph-based indexing algorithm structuring vectors in layers to enable fast logarithmic search.

Hybrid Search: Combining multiple retrieval methods (vector similarity, keyword search, metadata filters) for better results than any single method.

Index: A data structure organizing vectors to enable fast similarity search without exhaustive comparison.

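IVF (Inverted File index): A cluster-based indexing method that partitions vectors into clusters and searches only the clusters nearest the query. IVF-Flat, a common variant, stores full, uncompressed vectors in each cluster.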

LSH (Locality-Sensitive Hashing): A hashing technique mapping similar vectors to the same buckets for fast approximate search.

Metadata Filter: Structured conditions applied during search (example: "find similar documents published after 2023").

Product Quantization (PQ): A compression technique dividing vectors into chunks and approximating each chunk, reducing memory usage.

Query Vector: The vector representation of a search query used to find similar items in the database.

Quantization: Compressing vectors by reducing numerical precision (32-bit to 8-bit) to save memory.

RAG (Retrieval Augmented Generation): An AI technique retrieving relevant documents from a vector database before generating LLM responses.

Recall: The percentage of true nearest neighbors found by an ANN algorithm; 95% recall means finding 95 out of 100 true neighbors.

Reranking: A post-retrieval step using more sophisticated models (like cross-encoders) to refine result quality.

Semantic Search: Finding information based on meaning and context, not just keyword matches.

Similarity Search: The core operation in vector databases—finding vectors most similar to a query vector.

Vector: An array of numbers representing data in a high-dimensional space (example: [0.1, -0.5, 0.3, ..., 0.8]).

Vector Database: A specialized database system optimized for storing, indexing, and searching high-dimensional vectors.

Vector Dimension: The length of a vector (number of elements); typical values range from 384 to 4,096.

Sources & References

Market Research & Statistics

Global Market Insights. (2024, December 1). Vector Database Market Size & Share, Forecasts 2025-2034. Retrieved from https://www.gminsights.com/industry-analysis/vector-database-market

MarketsandMarkets. (2025, February). Vector Database Market Size, Growth Drivers, Opportunities [Latest]. Retrieved from https://www.marketsandmarkets.com/Market-Reports/vector-database-market-112683895.html

Grand View Research. (2024). Vector Database Market Size, Share & Trends Report, 2030. Retrieved from https://www.grandviewresearch.com/industry-analysis/vector-database-market-report

Verified Market Research. (2025, March 5). Vector Database Market Size, Share, Trends, Growth & Forecast. Retrieved from https://www.verifiedmarketresearch.com/product/vector-database-market/

The Business Research Company. (2024). Vector Database Market Report 2025. Retrieved from https://www.researchandmarkets.com/reports/5948613/vector-database-market-report

Polaris Market Research and Consulting. (2024). Vector Database Market Size Worth $10,409.89 Million By 2032 | CAGR: 21.7%. Retrieved from https://www.polarismarketresearch.com/industry-analysis/vector-database-market

Technavio. (2025, December 12). Vector Database Market Growth Analysis - Size and Forecast 2025-2029. Retrieved from https://www.technavio.com/report/vector-database-market-industry-analysis

Market Research Future. (2025, May 9). Vector Database Market Size, Growth | Trends Report - 2034. Retrieved from https://www.marketresearchfuture.com/reports/vector-database-market-32105

Technical Documentation & Research Papers

Jie, J., et al. (2024, February). Survey of vector database management systems. VLDB Journal. Retrieved from https://dbgroup.cs.tsinghua.edu.cn/ligl/papers/vldbj2024-vectordb.pdf

Wang, C., et al. (2025, February 28). Towards Reliable Vector Database Management Systems: A Software Testing Roadmap for 2030. arXiv. Retrieved from https://arxiv.org/html/2502.20812v1

Wikipedia. Hierarchical navigable small world (article on the algorithm introduced by Y. Malkov & D. Yashunin). Retrieved January 22, 2026 from https://en.wikipedia.org/wiki/Hierarchical_navigable_small_world

Weaviate. (2024). Vector Indexing. Weaviate Documentation. Retrieved from https://docs.weaviate.io/weaviate/concepts/vector-index

Supabase. (2026, January 14). HNSW indexes. Supabase Docs. Retrieved from https://supabase.com/docs/guides/ai/vector-indexes/hnsw-indexes

Case Studies & Use Cases

Zilliz Learn. (2024, January 9). Building Scalable AI with Vector Databases: A 2024 Strategy. Retrieved from https://zilliz.com/learn/Building-Scalable-AI-with-Vector-Databases-A-2024-Strategy

Oracle Blog. (2024). Accelerating AI Vector Search in Oracle Database 23ai with NVIDIA GPUs. Retrieved from https://blogs.oracle.com/database/accelerating-ai-vector-search-in-oracle-database-23ai-with-nvidia-gpus

MyScale Blog. (2024). 5 Vector Database Use Cases for Real-World Applications. Retrieved from https://www.myscale.com/blog/innovative-real-world-applications-vector-databases/

RAG & AI Applications

Business & Information Systems Engineering, Springer. (2025, June 1). Retrieval-Augmented Generation (RAG). Retrieved from https://link.springer.com/article/10.1007/s12599-025-00945-3

AWS. (2026, January 14). What is RAG? - Retrieval-Augmented Generation AI Explained. Retrieved from https://aws.amazon.com/what-is/retrieval-augmented-generation/

Data Nucleus. (2025, September 24). RAG in 2025: The enterprise guide to retrieval augmented generation, Graph RAG and agentic AI. Retrieved from https://datanucleus.dev/rag-and-agentic-ai/what-is-rag-enterprise-guide-2025

arXiv. (2025, July 25). A Systematic Review of Key Retrieval-Augmented Generation (RAG) Systems: Progress, Gaps, and Future Directions. Retrieved from https://arxiv.org/html/2507.18910v1

Morphik Blog. (2025, July 9). RAG in 2025: 7 Proven Strategies to Deploy Retrieval-Augmented Generation at Scale. Retrieved from https://www.morphik.ai/blog/retrieval-augmented-generation-strategies

arXiv. (2025, May 28). Retrieval-Augmented Generation: A Comprehensive Survey of Architectures, Enhancements, and Robustness Frontiers. Retrieved from https://arxiv.org/html/2506.00054v1

VentureBeat. (2025, November 16). From shiny object to sober reality: The vector database story, two years later. Retrieved from https://venturebeat.com/ai/from-shiny-object-to-sober-reality-the-vector-database-story-two-years-later

RAGFlow Blog. (2024, December 24). The Rise and Evolution of RAG in 2024 A Year in Review. Retrieved from https://ragflow.io/blog/the-rise-and-evolution-of-rag-in-2024-a-year-in-review

Vector Database Comparisons

Firecrawl Blog. (2025, October 9). Best Vector Databases in 2025: A Complete Comparison Guide. Retrieved from https://www.firecrawl.dev/blog/best-vector-databases-2025

Liveblocks Blog. (2025, September 15). What's the best vector database for building AI products? Retrieved from https://liveblocks.io/blog/whats-the-best-vector-database-for-building-ai-products

Digital One Agency. (2025, August 18). Best Vector Database For RAG In 2025 (Pinecone Vs Weaviate Vs Qdrant Vs Milvus Vs Chroma). Retrieved from https://digitaloneagency.com.au/best-vector-database-for-rag-in-2025-pinecone-vs-weaviate-vs-qdrant-vs-milvus-vs-chroma/

LiquidMetal AI. (2025, April 9). Vector Database Comparison: Pinecone vs Weaviate vs Qdrant vs FAISS vs Milvus vs Chroma (2025). Retrieved from https://liquidmetal.ai/casesAndBlogs/vector-comparison/

DataCamp. (2025, January 18). The 7 Best Vector Databases in 2026. Retrieved from https://www.datacamp.com/blog/the-top-5-vector-databases

HNSW Algorithm Explanations

Medium (Will Tai). (2024, March 2). HNSW indexing in Vector Databases: Simple explanation and code. Retrieved from https://medium.com/@wtaisen/hnsw-indexing-in-vector-databases-simple-explanation-and-code-3ef59d9c1920

Pinecone. Hierarchical Navigable Small Worlds (HNSW). Retrieved from https://www.pinecone.io/learn/series/faiss/hnsw/

Oracle Blog. (2024). Using HNSW Vector Indexes in AI Vector Search. Retrieved from https://blogs.oracle.com/database/using-hnsw-vector-indexes-in-ai-vector-search

Zilliz Learn. (2024, July 17). Understanding Hierarchical Navigable Small Worlds (HNSW). Retrieved from https://zilliz.com/learn/hierarchical-navigable-small-worlds-HNSW

Milvus Blog. Understand HNSW for Vector Search. Retrieved from https://milvus.io/blog/understand-hierarchical-navigable-small-worlds-hnsw-for-vector-search.md

Redis Blog. (2025, October 31). How hierarchical navigable small world (HNSW) algorithms can improve search. Retrieved from https://redis.io/blog/how-hnsw-algorithms-can-improve-search/

TigerData. (2024, August 13). Vector Database Basics: HNSW. Retrieved from https://www.tigerdata.com/blog/vector-database-basics-hnsw
