What is Automated Machine Learning (AutoML)

- Muiz As-Siddeeqi

- Oct 11, 2025


Picture this: A biology researcher with zero coding experience builds a machine-learning model that predicts disease markers in hours instead of months. A small retailer cuts inventory waste in half using AI predictions they created themselves. A payment company stops millions in fraud daily with models that evolve in real-time. This isn't science fiction—it's happening right now, powered by Automated Machine Learning. AutoML is demolishing the walls that once kept artificial intelligence locked inside tech giants and elite research labs, and it's reshaping how we solve problems across every industry imaginable.

TL;DR

AutoML automates the entire machine learning pipeline, from data preprocessing to model deployment, making AI accessible to non-experts

The market is exploding: Growing from $4.5 billion in 2024 to a projected $231.54 billion by 2034 at 48.3% annual growth (Market.us, 2025)

Real results: PayPal improved fraud detection accuracy from 89% to 94.7%; Lenovo boosted sales prediction accuracy by 7.5%; MIT researchers cut model development time from months to hours

Major platforms include Google Cloud AutoML, H2O Driverless AI, DataRobot, and open-source tools like TPOT and Auto-sklearn

Key challenges remain around model interpretability, high costs for enterprise solutions, and the risk of overfitting without expert oversight

Leading adopters: Financial services (the largest share, 31% of 2024 demand), healthcare (the fastest growing, at a 44.88% CAGR), retail, manufacturing, and government

Automated Machine Learning (AutoML) is the process of automating end-to-end machine learning tasks including data preprocessing, feature engineering, model selection, and hyperparameter tuning. It enables people without deep data science expertise to build high-quality predictive models, democratizing access to artificial intelligence and dramatically reducing the time and cost of deploying AI solutions.


Understanding AutoML: The Basics

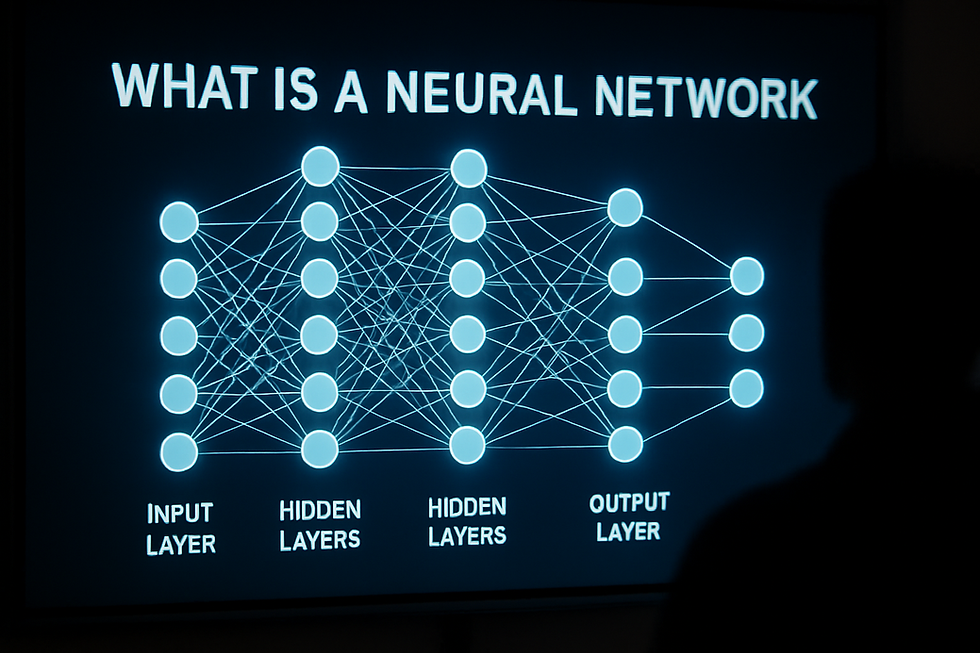

Automated Machine Learning represents a fundamental shift in how we build artificial intelligence systems. At its core, AutoML addresses a simple but powerful question: What if creating sophisticated AI models didn't require years of specialized training?

What AutoML Actually Does

AutoML automates the traditionally manual, time-consuming parts of machine learning. According to a 2022 Google course on machine learning foundations, cited by MIT News (2023), more than 80% of a typical ML project's time goes to data formatting and preparation alone. AutoML tackles this head-on by automating:

Data Preprocessing: Cleaning messy real-world data, handling missing values, detecting outliers, and transforming raw inputs into usable formats.

Feature Engineering: Identifying which data attributes matter most, creating new features from existing ones, and automatically selecting the most predictive variables.

Model Selection: Testing dozens or hundreds of different algorithm types—from decision trees to neural networks—to find what works best for your specific problem.

Hyperparameter Tuning: Fine-tuning the countless configuration settings that determine how models learn, a task that traditionally required extensive trial and error.

Model Validation: Automatically splitting data, testing models rigorously, and ensuring predictions will work on new, unseen data.

Why AutoML Matters Now

The demand for machine learning solutions has exploded, but the supply of qualified data scientists hasn't kept pace. As of 2024, the AutoML market reached $1.64 billion, and it is projected to grow to $10.93 billion by 2029, a compound annual growth rate of 46.8% over 2025-2029 (The Business Research Company, 2025).

This shortage of expertise creates a critical bottleneck. Organizations generate massive amounts of data but lack the human resources to transform it into actionable insights. AutoML bridges this gap by enabling domain experts—people who deeply understand their fields but lack coding skills—to harness AI's power directly.

The Democratization Effect

Before AutoML, building a machine learning model required assembling a team of specialists: data engineers to prepare the data, data scientists to build models, ML engineers to deploy them, and DevOps teams to maintain them. This process could take months and cost hundreds of thousands of dollars.

AutoML compresses this timeline dramatically. Work that once took a team of experts weeks or months can now be accomplished in days or even hours. Consensus Corporation, for example, reduced deployment time from 3-4 weeks to just 8 hours using AutoML (AIMultiple, 2025).

How AutoML Works: The Technical Pipeline

Understanding AutoML's inner workings helps you appreciate both its power and its limitations.

Step 1: Data Ingestion and Analysis

AutoML platforms start by analyzing your raw data. They automatically:

Detect data types (numerical, categorical, text, dates)

Identify the target variable you want to predict

Assess data quality and flag issues

Generate initial statistics and visualizations

For instance, H2O Driverless AI can import datasets and immediately analyze structure, suggesting the best preliminary models based on what it finds in your data (H2O.ai, 2025).
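To make the profiling step concrete, here is a minimal, library-free sketch of automatic type detection. It makes several assumptions:

- Raw values arrive as strings in a list of dictionaries.
- The names `infer_type` and `profile` are hypothetical.
- The rule "few distinct values means categorical" is an illustrative threshold.

Platforms such as H2O Driverless AI use their own, far richer heuristics.

```python
from datetime import date

MISSING = ("", None)  # values treated as missing (an assumption for this sketch)

def _all_parse(values, parser):
    """Return True if every value can be parsed by `parser`."""
    try:
        for v in values:
            parser(v)
        return True
    except ValueError:
        return False

def infer_type(values):
    """Heuristically classify a column of raw string values."""
    present = [v for v in values if v not in MISSING]
    if not present:
        return "empty"
    if _all_parse(present, float):
        return "numerical"
    if _all_parse(present, date.fromisoformat):
        return "date"
    # Few distinct values relative to the row count -> treat as categorical.
    if len(set(present)) <= max(2, len(present) // 2):
        return "categorical"
    return "text"

def profile(rows):
    """Report an inferred type and the missing-value fraction for every column."""
    report = {}
    for col in rows[0]:
        values = [row.get(col) for row in rows]
        missing = sum(v in MISSING for v in values)
        report[col] = {"type": infer_type(values), "missing_frac": missing / len(values)}
    return report
```

A summary like this is what an AutoML tool would consult before choosing which preprocessing steps to apply.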

Step 2: Automated Data Preprocessing

This crucial phase handles the grunt work:

Missing Value Imputation: Intelligently filling in gaps using statistical methods

Outlier Detection: Identifying and handling extreme values that could skew results

Data Transformation: Scaling numerical features, encoding categorical variables, and normalizing distributions

Data Splitting: Creating training, validation, and test sets to ensure robust model evaluation
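The preprocessing operations above can be sketched with Python's standard library alone. This is an illustration, not any platform's implementation. Each specific technique is a choice made here for clarity:

- median imputation,
- Tukey-fence (IQR) outlier clipping with the conventional k = 1.5,
- z-score scaling,
- one-hot encoding,
- a seeded 70/15/15 split.

```python
import random
import statistics

def impute_median(column):
    """Replace None entries with the median of the observed values."""
    fill = statistics.median(x for x in column if x is not None)
    return [fill if x is None else x for x in column]

def clip_outliers(column, k=1.5):
    """Clip values outside the Tukey fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(column, n=4, method="inclusive")
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    return [min(max(x, lo), hi) for x in column]

def standardize(column):
    """Rescale to zero mean and unit (population) standard deviation."""
    mu = statistics.mean(column)
    sd = statistics.pstdev(column) or 1.0  # avoid dividing by zero on constant columns
    return [(x - mu) / sd for x in column]

def one_hot(column):
    """Encode a categorical column as 0/1 indicator vectors."""
    categories = sorted(set(column))
    return [[int(x == c) for c in categories] for x in column], categories

def train_val_test_split(rows, train=0.7, val=0.15, seed=0):
    """Shuffle once with a fixed seed, then cut into three disjoint sets."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_train = round(len(rows) * train)
    n_val = round(len(rows) * val)
    return rows[:n_train], rows[n_train:n_train + n_val], rows[n_train + n_val:]
```

Quartile-based fences are used here instead of a mean and standard deviation rule because a single extreme value inflates the standard deviation and can hide itself.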

Step 3: Feature Engineering Magic

This is where AutoML truly shines. Traditional feature engineering requires deep domain knowledge and creativity. AutoML automates this through:

Automatic Feature Generation: Creating polynomial features, interaction terms, and aggregate statistics

Feature Selection: Using algorithms to identify which features actually improve predictions

Dimensionality Reduction: Compressing high-dimensional data while preserving information

H2O Driverless AI, for example, automates the entire feature engineering process, detecting relevant features, finding interactions, handling missing values, deriving new features, and comparing their importance (H2O.ai, 2025).
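A toy version of automatic feature generation and selection follows. It assumes purely numeric inputs and generates squared and pairwise interaction terms. It then ranks every feature by its absolute Pearson correlation with the target. Real systems typically use more robust selection criteria, such as model-based importance, so treat the scoring rule here as a simplifying assumption. The function names are hypothetical.

```python
import itertools
import math

def generate_features(rows, names):
    """Append squared terms and pairwise interaction terms to each numeric row."""
    new_names = (list(names)
                 + [f"{a}^2" for a in names]
                 + [f"{a}*{b}" for a, b in itertools.combinations(names, 2)])
    new_rows = []
    for row in rows:
        squares = [x * x for x in row]
        pairs = [x * y for x, y in itertools.combinations(row, 2)]
        new_rows.append(list(row) + squares + pairs)
    return new_rows, new_names

def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 for constant inputs."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)

def select_top_k(rows, names, target, k):
    """Keep the k features most correlated (in absolute value) with the target."""
    columns = zip(*rows)
    scored = sorted(((abs(pearson(col, target)), name)
                     for col, name in zip(columns, names)), reverse=True)
    return [name for _, name in scored[:k]]
```

Suppose the target is the product of two inputs on a small grid. The generated `x1*x2` interaction then ranks first, and it is exactly the kind of feature a human would otherwise have to think of by hand.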

Step 4: Model Search and Selection

Here's where different AutoML platforms diverge in their approaches:

Genetic Programming Approach: TPOT, developed in 2015 by Dr. Randal Olson, uses evolutionary algorithms that "breed" machine learning pipelines, combining different algorithms and seeing which combinations perform best over multiple generations (AutoML.info, 2023).

Bayesian Optimization: Auto-sklearn uses Bayesian methods to intelligently navigate the space of possible models, learning from previous attempts to make smarter choices about which models to try next (Springer, 2024).

Neural Architecture Search (NAS): Google Cloud AutoML employs NAS for deep learning tasks, automatically designing neural network architectures optimized for your specific data (Google Cloud, 2025).
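Whatever search strategy a platform uses, the core loop is the same: fit candidate models, score them on held-out data, and keep the best. The sketch below shows that loop with three hypothetical one-feature candidates: a mean baseline, least-squares linear regression, and k-nearest neighbours.

It deliberately omits the clever part. Genetic programming, Bayesian optimization, and NAS are different ways of deciding which candidates to try next.

```python
def fit_mean(xs, ys):
    """Baseline: always predict the training mean."""
    m = sum(ys) / len(ys)
    return lambda x: m

def fit_linear(xs, ys):
    """Ordinary least squares for y = a*x + b with a single feature."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    b = my - a * mx
    return lambda x: a * x + b

def fit_knn(xs, ys, k=3):
    """k-nearest-neighbour regression: average the k closest training targets."""
    pairs = list(zip(xs, ys))
    def predict(x):
        nearest = sorted(pairs, key=lambda p: abs(p[0] - x))[:k]
        return sum(y for _, y in nearest) / len(nearest)
    return predict

CANDIDATES = {"mean": fit_mean, "linear": fit_linear, "knn": fit_knn}

def mse(model, xs, ys):
    """Mean squared error of `model` on (xs, ys)."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)

def select_model(train, val):
    """Fit every candidate on train, score on validation, return the best name."""
    scores = {name: mse(fit(*train), *val) for name, fit in CANDIDATES.items()}
    best = min(scores, key=scores.get)
    return best, scores
```

On noise-free data generated from y = 2x + 1, the linear candidate wins with essentially zero validation error.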

Step 5: Hyperparameter Optimization

Most machine learning algorithms expose many tunable hyperparameters, sometimes dozens. AutoML systematically explores this vast configuration space using techniques like:

Grid search for exhaustive testing

Random search for efficient sampling

Bayesian optimization for intelligent exploration

Evolutionary strategies for complex landscapes
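Grid search and random search differ only in how configurations are proposed. In this sketch, `toy_loss` is a hypothetical stand-in for "train a model with these hyperparameters and return its validation loss". It is built so that its minimum sits at a learning rate of 0.01 and a depth of 6.

Sampling the learning rate on a log scale is a common convention, assumed here. Bayesian optimization and evolutionary strategies would replace the blind sampler in `random_search` with one that learns from earlier trials; that part is not shown.

```python
import itertools
import math
import random

def toy_loss(params):
    """Hypothetical validation loss, minimised at lr=0.01, depth=6."""
    return (math.log10(params["lr"]) + 2) ** 2 + (params["depth"] - 6) ** 2 / 10

def grid_search(objective, grid):
    """Try every combination of the listed values; return (best_score, best_params)."""
    best = None
    for values in itertools.product(*grid.values()):
        params = dict(zip(grid, values))
        score = objective(params)
        if best is None or score < best[0]:
            best = (score, params)
    return best

def random_search(objective, space, n_trials, seed=0):
    """Draw n_trials configurations from per-parameter samplers; keep the best."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {name: sample(rng) for name, sample in space.items()}
        score = objective(params)
        if best is None or score < best[0]:
            best = (score, params)
    return best

grid = {"lr": [0.001, 0.01, 0.1], "depth": [2, 4, 6, 8]}  # 12 combinations
space = {
    "lr": lambda rng: 10 ** rng.uniform(-4, 0),  # log-uniform over [1e-4, 1]
    "depth": lambda rng: rng.randint(2, 10),
}
print(grid_search(toy_loss, grid))
print(random_search(toy_loss, space, n_trials=12))  # same budget as the grid
```

Grid search only ever finds the best point on its grid. Random search can land between grid points, which tends to matter when only a few hyperparameters really affect the result.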

Step 6: Model Ensemble and Validation

Advanced AutoML platforms don't stop at finding one good model—they combine multiple models to achieve even better results. This ensembling approach often outperforms any single model.

Rigorous validation ensures models will perform well on real-world data through:

Cross-validation across multiple data splits

Holdout testing on completely unseen data

Performance metrics tailored to your specific problem
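Cross-validation and ensembling can each be sketched in a few lines. The functions below are illustrative and their names are hypothetical:

- `k_fold_indices` assumes the data is already shuffled.
- `cross_val_mse` averages validation error over the folds.
- `average_ensemble` combines regressors by simple averaging. This is one of the simplest ensembling schemes; stacking and weighted blending are common alternatives.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) pairs for k contiguous, non-overlapping folds."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, val
        start += size

def cross_val_mse(fit, xs, ys, k=5):
    """Average validation MSE of `fit` across k folds."""
    errors = []
    for tr, va in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in tr], [ys[i] for i in tr])
        errors.append(sum((model(xs[i]) - ys[i]) ** 2 for i in va) / len(va))
    return sum(errors) / len(errors)

def average_ensemble(models):
    """Combine regressors by averaging their predictions."""
    return lambda x: sum(m(x) for m in models) / len(models)
```

Averaging helps most when models err in different directions. For example, one model that overshoots by one unit and another that undershoots by one unit average out to the exact target.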

Step 7: Deployment and Monitoring

Modern AutoML platforms handle the final mile:

Generating production-ready code in Python, Java, or other languages

Creating APIs for easy integration

Providing monitoring dashboards to track model performance over time

Enabling model retraining as new data arrives
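For monitoring, one widely used drift statistic is the Population Stability Index (PSI). It compares how a feature's values fall into bins at training time with how they fall in production. The sketch assumes three things:

- hand-picked bin edges,
- a small epsilon to avoid log(0),
- the common rule-of-thumb retraining threshold of 0.2.

None of these values come from a specific platform.

```python
import bisect
import math

def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a baseline sample and a live sample.

    `edges` are ascending interior bin boundaries; `eps` avoids log(0)
    when a bin is empty in one of the samples.
    """
    def bin_shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def needs_retraining(baseline, live, edges, threshold=0.2):
    """Flag retraining when drift exceeds a rule-of-thumb threshold (assumed 0.2)."""
    return psi(baseline, live, edges) > threshold
```

Identical distributions give a PSI of exactly zero. A shifted production distribution pushes it well past the threshold and would trigger the retraining step described above.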

The Evolution of AutoML: From 2014 to Today

The Pre-AutoML Era

Before 2014, machine learning practitioners tackled individual automation problems—hyperparameter optimization, algorithm selection—but no unified framework existed. Building ML models remained a manual, expert-driven process.

2014: The Birth of AutoML

The term "AutoML" as a comprehensive discipline emerged in 2014 at the first dedicated AutoML workshop at ICML (International Conference on Machine Learning). This marked a shift from isolated automation efforts to a holistic vision of end-to-end machine learning automation (Springer, 2023).

2015-2016: The Open-Source Wave

TPOT (2015): Dr. Randal Olson released TPOT, one of the first AutoML systems, using genetic programming to optimize entire ML pipelines. His 2016 papers won best paper awards at both EvoStar and GECCO computer science conferences (AutoML.info, 2023).

Auto-sklearn (2015): Matthias Feurer and colleagues introduced Auto-sklearn, bringing Bayesian optimization and meta-learning to the AutoML toolkit. This system could learn from previous experiments on other datasets to make smarter initial choices (Springer, 2024).

2017-2019: Commercial Platforms Emerge

Commercial vendors, from tech giants to specialized startups, recognized AutoML's potential:

Google Cloud AutoML (2018): Made AutoML accessible through a user-friendly interface, particularly strong for vision and language tasks

H2O Driverless AI: Brought enterprise-grade AutoML to market, focusing on interpretability and feature engineering

DataRobot: Positioned itself as a comprehensive end-to-end platform for business users

2020-2023: Specialization and Refinement

AutoML began addressing specific domains and challenges:

2023 - BioAutoMATED: MIT researchers released an open-source AutoML platform specifically designed for biological sequences, reducing model development time from months to hours (MIT News, 2023). Published in Cell Systems on June 21, 2023, it integrated multiple AutoML tools optimized for DNA, RNA, peptide, and glycan data.

Healthcare and medical imaging applications exploded

Financial services adopted AutoML for fraud detection and risk modeling

Government agencies began automating benefits adjudication

2024-2025: The Maturation Phase

By 2024, AutoML had evolved from experimental technology to mission-critical infrastructure. Key developments include:

Explainability Focus: Platforms now integrate SHAP (Shapley Additive Explanations) and LIME (Local Interpretable Model-agnostic Explanations) to make model decisions transparent

Federated Learning: AutoML platforms enabling privacy-preserving collaborative model training

Edge Deployment: Optimizing models for deployment on mobile and IoT devices

Hybrid Cloud Solutions: Flexible deployment across on-premises and cloud infrastructure

The number of AutoML publications quintupled from 2014 to 2021, reflecting the field's explosive growth (Springer, 2023).

Major AutoML Platforms and Tools

Enterprise Commercial Platforms

DataRobot

Strengths: Comprehensive end-to-end platform with strong enterprise features. Forrester Research named DataRobot a Leader in their AI/ML platforms analysis, praising its "rise from a niche automated machine learning (AutoML) player to a full-lifecycle AI platform" (eWEEK, 2022).

Key Features:

Automated model documentation and governance

MLOps capabilities for deployment and monitoring

Collaboration tools for teams

Support for open-source modeling techniques from R, Python, Spark, H2O, XGBoost

Pricing: Subscription-based with annual fees typically ranging from $50,000 to over $200,000 for medium-sized organizations depending on user count, data volume, and feature scope (DS Stream, 2025).

Best For: Large enterprises requiring comprehensive AI lifecycle management with strong governance and compliance needs.

H2O Driverless AI

Strengths: Industry-leading AutoML capabilities with a focus on interpretability and feature engineering. Recognized as a Visionary in the 2025 Gartner Magic Quadrant for Cloud AI Developer Services (H2O.ai, 2025).

Key Features:

Automated feature engineering using proprietary algorithms

Built-in model interpretability with detailed explanations

GPU acceleration for faster training

Support for time series forecasting

Pricing: Premium subscription model with enterprise licenses reaching approximately $390,000 for three years, excluding infrastructure costs (DS Stream, 2025). The open-source H2O-3 offers a free alternative with fewer features.

Best For: Organizations prioritizing model interpretability and sophisticated feature engineering, particularly in regulated industries like finance and healthcare.

Real-World Impact: PayPal reported that their fraud detection model efficiency increased from 89% to 94.7% through adoption of H2O.ai's AutoML tool (Knowledge-Sourcing, 2025). Commonwealth Bank of Australia trained 900 analysts to use H2O.ai, with their Chief Data & Analytics Officer stating they're "making millions of decisions every day 100% better" (H2O.ai, 2025).

Google Cloud AutoML

Strengths: Exceptional ease of use with a no-code interface. Builds on more than a decade of Google research and is particularly strong for vision, language, and forecasting tasks.

Key Features:

Minimal technical expertise required

Human labeling service for data annotation

Integration with Google Cloud ecosystem (BigQuery, Vertex AI)

State-of-the-art neural architecture search for custom models

Pricing: Pay-as-you-go model based on training time and predictions. Generally more accessible for small to medium deployments.

Best For: Organizations wanting quick results without extensive data science teams, particularly for vision and NLP applications.

Open-Source Tools

TPOT (Tree-based Pipeline Optimization Tool)

History: One of the first AutoML tools, developed by Dr. Randal Olson in 2015. Won multiple best paper awards at major conferences (AutoML.info, 2023).

Approach: Uses genetic programming to evolve machine learning pipelines over multiple generations. Built on scikit-learn.

Strengths:

Completely free and open-source

Exports Python code for the discovered pipeline

Easy to use for beginners

Active community support

Limitations:

Can be time-consuming for large datasets

Results may vary between runs due to stochastic nature

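Search space is limited to the scikit-learn-compatible operators in its configuration. The "tree-based" in its name refers to how pipelines are represented during evolution, not to a restriction to tree-based algorithms.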

Auto-sklearn

Approach: Uses Bayesian optimization combined with meta-learning, learning from previous experiments on other datasets to make smarter initial choices.

Strengths:

Built on scikit-learn, offering flexibility

Strong customization options

Meta-learning accelerates optimization

Best For: Data scientists who want more control over the AutoML process while still benefiting from automation.
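Auto-sklearn's meta-learning idea can be illustrated as a nearest-neighbour lookup. Describe each dataset by a few meta-features, then start the search from configurations that worked on the most similar past datasets.

Everything here is hypothetical: the three meta-features, the `PAST_RUNS` records, and the function names. Auto-sklearn itself computes many more meta-features and uses the retrieved configurations to warm-start its Bayesian optimizer.

```python
import math

def meta_features(n_rows, n_features, minority_frac):
    """A tiny, hypothetical meta-feature vector describing a dataset."""
    return (math.log10(n_rows), math.log10(n_features), minority_frac)

# Hypothetical record of past experiments: meta-features -> best config found there.
PAST_RUNS = [
    (meta_features(1_000, 10, 0.5), {"model": "random_forest", "n_estimators": 200}),
    (meta_features(100_000, 50, 0.02), {"model": "gradient_boosting", "learning_rate": 0.05}),
    (meta_features(500, 2_000, 0.4), {"model": "linear_svm", "C": 1.0}),
]

def warm_start_configs(new_meta, k=2):
    """Return the best configs of the k past datasets closest in meta-feature space."""
    ranked = sorted(PAST_RUNS, key=lambda run: math.dist(run[0], new_meta))
    return [config for _, config in ranked[:k]]
```

Consider a new, large, heavily imbalanced dataset such as `meta_features(80_000, 40, 0.03)`. Its first suggestion is the gradient-boosting configuration from the most similar past run, so the search skips configurations that are unlikely to suit it.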

AutoKeras

Focus: Specializes in neural architecture search for deep learning models.

Strengths:

Automatically designs neural network architectures

Built on Keras/TensorFlow

Strong for image, text, and structured data

Comparison Table

| Platform | Type | Pricing | Best For | Interpretability | Ease of Use |
|---|---|---|---|---|---|
| DataRobot | Commercial | $50K-$200K+/year | Enterprise governance | High | Medium |
| H2O Driverless AI | Commercial | ~$390K/3 years | Feature engineering | Very High | Medium |
| Google Cloud AutoML | Commercial | Pay-per-use | Quick prototyping | Medium | Very High |
| TPOT | Open-source | Free | Learning AutoML | Low | High |
| Auto-sklearn | Open-source | Free | Customization | Medium | Medium |
| AutoKeras | Open-source | Free | Deep learning | Low | High |

Real-World Case Studies

Case Study 1: PayPal's Fraud Detection Transformation

Company: PayPal

Challenge: Protecting buyers and sellers from fraud across billions of transactions

Solution: H2O.ai AutoML

Date: Ongoing since pre-2023

Background: PayPal offers extensive purchase protection guarantees, promising to reimburse buyers for the full purchase price plus shipping if they don't receive items. This protection, while customer-friendly, creates opportunities for fraud through buyer-seller collusion.

Implementation: PayPal's teams of data scientists, financial analysts, and intelligence agencies collaborate to understand fraud perpetrator behavior and build robust predictive models. They adopted H2O.ai's AutoML platform to accelerate model development and improve accuracy.

Results:

Fraud detection efficiency jumped from 89% to 94.7% (Knowledge-Sourcing, 2025)

6X speed improvement when using H2O4GPU with Driverless AI, according to Venkatesh Ramanathan, Senior Data Scientist at PayPal (H2O.ai, 2025)

Model development time reduced from several months to a few days with Driverless AI

Impact: At PayPal's transaction volumes, gains of this size can translate into millions of dollars in prevented fraud and better protection for both buyers and sellers in PayPal's ecosystem.

Source: H2O.ai case studies and Knowledge-Sourcing market report, 2025

Case Study 2: MIT BioAutoMATED for Biology Research

Institution: Massachusetts Institute of Technology

Researchers: Jim Collins, Jacqueline Valeri, Luis Soenksen, and team

Publication: Cell Systems, June 21, 2023

Tool: BioAutoMATED (Open-source)

Challenge: Building machine learning models for biological research required months of work by both biologists and ML experts. The design choices underlying ML models presented major barriers for biologists wanting to incorporate AI into their research.

Solution: BioAutoMATED, an end-to-end AutoML framework optimized for biological sequences (nucleic acids, peptides, glycans). The system integrates three open-source AutoML tools—AutoKeras, DeepSwarm, and TPOT—into a unified framework with automatic data preprocessing, architecture selection, and model deployment.

Key Innovation: BioAutoMATED handles biological sequences explicitly, something traditional AutoML tools don't do well. It automatically provides techniques for analyzing, interpreting, and designing biological sequences.

Results:

Reduced model development time from months to hours (MIT News, July 6, 2023)

Achieved state-of-the-art performance in predicting the translation efficiency of ribosome-binding site (RBS) sequences in under 30 minutes, with only 10 lines of user code

Successfully predicted gene regulation, peptide-drug interactions, and glycan annotation

Enabled design of optimized synthetic biology components

Practical Applications:

Predicting translation efficiency for E. coli ribosome-binding sites

Analyzing antibody-drug binding affinities

Glycan structure annotation

Accessibility: Open-source code publicly available on GitHub, designed to be easy to run even for non-ML experts.

Broader Impact: "What we would love to see is for people to take our code, improve it, and collaborate with larger communities to make it a tool for all," said Luis Soenksen, co-first author (MIT News, 2023).

Source: MIT News (July 6, 2023), Cell Systems journal, PubMed (June 21, 2023)

Case Study 3: Lenovo Sales Forecasting Improvement

Company: Lenovo

Application: Sales prediction and forecasting

Solution: AutoML software from the DataRobot ecosystem

Date: Pre-2025

Challenge: Accurately predicting product sales to optimize inventory management and reduce costs.

Implementation: Lenovo adopted AutoML tools to build predictive models for sales forecasting across their product lines.

Results:

Sales prediction model accuracy increased by 7.5% after AutoML adoption (Knowledge-Sourcing, 2025)

Impact: Improved forecasting directly reduces inventory carrying costs, minimizes stockouts, and optimizes supply chain efficiency across Lenovo's global operations.

Source: Knowledge-Sourcing AutoML Market Report, 2025

Case Study 4: California Design Den Inventory Optimization

Company: California Design Den (Bedding solutions provider)

Challenge: High inventory carryover costs

Solution: Google AutoML

Date: Pre-2025

Results:

Lowered inventory carryover by approximately 50% using Google's AutoML tool (Knowledge-Sourcing, 2025)

Impact: Dramatic reduction in capital tied up in excess inventory, improved cash flow, and better alignment of stock levels with actual demand.

Source: Knowledge-Sourcing AutoML Market Report, 2025

Case Study 5: Insurance Fraud Detection

Industry: Insurance

Challenge: Detecting fraudulent claims in workers' compensation

Solution: H2O AutoML

Context: The insurance industry estimates claims fraud amounts to $80 billion annually in the United States alone (H2O.ai, 2025)

Traditional Approach: Professional claims examiners manually selected suspicious claims for analysis, pulling information from multiple systems—a time-consuming process contributing to case backlogs.

Implementation: A global insurance company consolidated data from multiple sources into a Hadoop data store and deployed H2O for automated fraud detection.

Key Benefits:

Models export as Plain Old Java Objects (POJOs) for easy production deployment

Can run anywhere Java runs—transaction systems, case management, authorization systems

Fraud models are highly complex, and H2O's approach dramatically reduces programming time

Industry Impact: Insurers expect savings of $80-160 billion by 2032 as automated models mine structured and unstructured data for suspicious claims (Mordor Intelligence, 2025).

Source: H2O.ai case studies, Mordor Intelligence market report (July 2025)

Case Study 6: Trupanion Customer Churn Prediction

Company: Trupanion (Pet insurance)

Application: Customer churn prediction

Solution: AutoML platform

Results:

Successfully identifies two-thirds of customers who will churn before they actually churn (AIMultiple, August 2025)

Impact: Enables proactive retention efforts, targeting at-risk customers with interventions before losing them, significantly improving customer lifetime value.

Source: AIMultiple AutoML case studies compilation, August 12, 2025

Market Landscape and Growth Trends

Explosive Market Growth

The AutoML market is experiencing unprecedented expansion. Multiple research firms project rapid growth, although their estimates differ widely in absolute size:

Market.us (March 2025):

2024: $4.5 billion

2034 projection: $231.54 billion

CAGR: 48.30% over 2025-2034

The Business Research Company (2025):

2024: $1.64 billion

2025: $2.35 billion

2029 projection: $10.93 billion

CAGR: 46.8% over 2025-2029

Knowledge-Sourcing (2025):

2025: $1.933 billion

2030 projection: $11.306 billion

CAGR: 42.37%

Global Market Insights (April 2024):

2023: $1.4 billion

2032 projection: Over $10 billion

CAGR: 30% over 2024-2032

While projections vary based on methodology, all point to the same conclusion: AutoML is one of the fastest-growing segments in enterprise software.
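The projections above can be sanity-checked with the standard compound-growth formula, end = start × (1 + r)^years. This short sketch uses figures quoted in this section. It checks only that each firm's numbers are internally consistent, not whether the forecasts are right.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1

def project(start_value, rate, years):
    """Value after compounding `start_value` at `rate` for `years` years."""
    return start_value * (1 + rate) ** years

# Market.us: $4.5B (2024) at 48.3% per year for 10 years
print(round(project(4.5, 0.483, 10), 1))  # prints 231.5 (billion, i.e. 2034)
# Knowledge-Sourcing: $1.933B (2025) -> $11.306B (2030)
print(round(cagr(1.933, 11.306, 5) * 100, 1))  # prints 42.4 (percent per year)
```

Both firms' figures are consistent with their stated growth rates. The large gap between them comes from different starting estimates of the 2024-2025 market size and different horizons.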

Geographic Distribution

North America Dominance: In 2024, North America captured 46.4% of the global market share, generating $2.08 billion in revenue (Market.us, 2025). The United States alone accounted for $1.67 billion, with a CAGR of 47.1%.

Factors driving North American leadership:

Rapid technological advancement

Widespread AI adoption across industries

Concentration of major AutoML vendors

Strong venture capital ecosystem (200+ AutoML funding rounds in 2024)

Asia-Pacific Growth: Projected to register a 45.97% CAGR between 2025 and 2030, the fastest growth rate globally (Mordor Intelligence, July 2025).

Key drivers:

National AI strategies deployed by governments

Japan's AI economy projected to grow from $4.5 billion to $7.3 billion by 2027

China leads in patent publications for 37 of 44 critical AI technologies

Southeast Asian manufacturers adopting AutoML for yield optimization

Europe: Germany held approximately a 23% share of the AutoML market in 2023 (P&S Market Research, 2025). The German government adopted an ambitious national AI strategy in 2018, committing to spend over $3.33 billion (€3 billion) on AI by 2025.

Emerging Markets: Latin America, Middle East, and Africa remain in nascent adoption stages but show promise as infrastructure and investment grow.

Market Segmentation

By Offering (Market.us, 2025):

Solutions: 68.8% of market share

Services: 31.2%

By Enterprise Size (Market.us, 2025):

Large enterprises: 74.5% of market share

SMEs: 25.5%

By Deployment (Market.us, 2025):

Cloud-based: 60.2% of market share

On-premises: 39.8%

By Application (Market.us, 2025):

Data processing: 34.6% of market share

Feature engineering: Significant portion

Model selection and validation: Growing segment

What's Driving Growth?

1. Data Science Talent Shortage

The demand for data scientists massively outstrips supply. Organizations generate more data than ever but lack experts to analyze it. AutoML addresses this critical bottleneck by enabling non-experts to build effective models.

Global Market Insights (April 2024) identified the "shortage of skilled data scientists and machine learning engineers" as a primary growth driver, enabling organizations to leverage ML effectively without relying on limited expert talent.

2. Rising Demand for Fraud Detection

Financial institutions are rapidly moving from static rule-based systems to AutoML-powered fraud detection that learns from real-time transaction flows.

Allianz Insurance plc detected $95.2 million (£77.4 million) in claims fraud in 2023, up from $86.96 million (£70.7 million) in 2022 (The Business Research Company, February 2024). This increasing fraud drives demand for advanced detection solutions.

3. Cloud Computing Integration

The synergy between AutoML and cloud platforms provides scalable computing resources essential for running complex models. In 2022, the worldwide public cloud services market surpassed $500 billion, growing 22.9% year-over-year (P&S Market Research, 2025).

4. AI Democratization Push

Organizations across all sectors recognize that AI competitive advantages require broad adoption, not just elite data science teams. AutoML makes this democratization technically feasible.

5. Cost Efficiency Imperative

Building ML models traditionally requires expensive specialists and lengthy timelines. AutoML dramatically reduces both cost and time, delivering faster ROI.

Investment Trends

Venture investors closed more than 200 AutoML-related funding rounds in 2024, feeding a vibrant startup pipeline that accelerates product innovation (Mordor Intelligence, July 2025).

Major tech companies continue heavy investment:

Oracle's cloud infrastructure revenue rose 52% in fiscal 2025 as regulated industries moved workloads to FedRAMP-compliant regions

Google, Microsoft, Amazon all expanding AutoML offerings

Specialized vertical solutions emerging for healthcare, finance, manufacturing

Industries Transformed by AutoML

Financial Services (31% of 2024 Demand)

Primary Applications:

Fraud detection and prevention

Credit risk scoring

Algorithmic trading

Anti-money laundering (AML)

Customer churn prediction

Loan default prediction

Why It Works: Financial services generate massive structured transaction data ideal for ML. Regulatory requirements for model explainability align well with modern AutoML interpretability features.

Real Impact: Financial institutions are moving from static rule sets to AutoML-based fraud systems that learn from real-time transaction flows, cutting false positives and improving recovery rates. Insurers expect savings of $80-160 billion by 2032 (Mordor Intelligence, July 2025).

Federal agencies projected $247 billion in improper payments in fiscal year 2022, with cumulative federal improper payment estimates totaling about $2.4 trillion since fiscal 2003 (P&S Market Research, 2025).

Healthcare (44.88% CAGR - Fastest Growing)

Primary Applications:

Clinical decision support

Medical imaging analysis and triage

Patient flow optimization

Disease prediction and risk stratification

Drug discovery

Genomics analysis

Why It's Growing: Healthcare generates enormous amounts of data but faces critical shortages of AI expertise. AutoML's explainability modules can help satisfy medical device regulators and hospital ethics boards.

Regulatory Fit: The FDA and other regulatory bodies increasingly expect transparency about how AI models used in medical applications reach their outputs. Modern AutoML platforms with built-in interpretability features (SHAP, LIME) help address these expectations.

Real Examples:

University of North Carolina researchers used Google Cloud AutoML Tables to build screening tools for Early Childhood Caries, winning multiple graduate student research awards (Google for Education, 2025)

Dental applications have shown 95.4% precision in dental implant classification and 92% accuracy in paranasal sinus disease detection (PMC, 2025)

Retail and E-Commerce

Primary Applications:

Demand forecasting

Customer segmentation

Personalized product recommendations

Dynamic pricing optimization

Inventory management

Customer lifetime value prediction

Impact: AutoML personalization engines can lift conversion rates by double-digit percentages. California Design Den reduced inventory carryover by approximately 50% using Google AutoML (Knowledge-Sourcing, 2025).

Manufacturing

Primary Applications:

Predictive maintenance

Quality control on production lines

Yield optimization

Supply chain forecasting

Defect detection

Adoption Driver: Southeast Asian manufacturers adopt AutoML for yield optimization to offset rising labor costs and supply-chain volatility (Mordor Intelligence, July 2025).

Government and Public Sector

Primary Applications:

Benefits adjudication automation

Fraud detection in welfare programs

Resource allocation optimization

Citizen service prediction

Example: Brazil's social security institute aims to process 55% of welfare claims via AI by 2025 (Mordor Intelligence, July 2025).

Energy and Utilities

Primary Applications:

Load pattern modeling for smart grids

Predictive maintenance for infrastructure

Demand forecasting

Renewable energy optimization

Telecommunications

Primary Applications:

Network optimization

Customer churn prediction

Predictive maintenance

Fraud detection

Benefits of AutoML

1. Dramatic Time Savings

Traditional Approach: Building a production ML model typically takes 3-6 months with a team of specialists.

AutoML Approach: Same model ready in days or hours.

Real Examples:

Consensus Corporation: Reduced deployment time from 3-4 weeks to 8 hours

MIT BioAutoMATED: Cut model development from months to under 30 minutes for some applications

PayPal: New model development time went from several months to a few days

2. Cost Reduction

Direct Savings:

Reduced need for expensive data science specialists

Faster time-to-value means quicker ROI

Lower infrastructure costs through optimized model selection

Real Impact: IFFCO-Tokio uses H2O AI Cloud to save over $1 million annually by transforming fraud prediction processes (H2O.ai, 2025).

3. Accessibility for Non-Experts

Domain experts can now build models directly without writing code:

Biologists can create genomics models

Business analysts can build forecasting tools

Healthcare providers can develop diagnostic aids

This democratization allows people with deep domain knowledge to apply ML to problems they understand best.

4. Improved Accuracy

AutoML can match or outperform manually designed models because:

It tests more algorithm combinations than humans have time for

It optimizes hyperparameters more thoroughly

It reduces reliance on individual practitioners' habits and preferences in model selection

It uses ensemble methods to combine multiple models

Examples:

PayPal: Accuracy improved from 89% to 94.7%

Lenovo: Sales prediction accuracy increased by 7.5%

Trupanion: Identifies two-thirds of churning customers before they leave

5. Consistency and Reproducibility

AutoML reduces subjective decision-making and manual errors, producing:

Reproducible results across different runs

Standardized modeling approaches

Documented model development processes

Audit trails for compliance
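Run-to-run reproducibility largely comes down to controlling randomness. The sketch below uses a hypothetical search space and a stand-in `toy_score` function in place of real model training; the point is that the search draws from its own seeded random generator, so two runs with the same seed produce an identical trial log that can also serve as an audit record.

```python
import random

# Hypothetical search space; a real platform would define its own.
SEARCH_SPACE = {
    "learning_rate": [0.001, 0.01, 0.1],
    "max_depth": [3, 5, 7, 9],
    "n_estimators": [100, 200, 400],
}

def toy_score(config):
    # Stand-in for "train a model with this config and return its validation score".
    return 1.0 - abs(config["learning_rate"] - 0.01) - abs(config["max_depth"] - 7) / 100

def random_search(seed, n_trials=10):
    rng = random.Random(seed)  # private, seeded generator: no hidden global state
    trials = []
    for _ in range(n_trials):
        config = {name: rng.choice(values) for name, values in SEARCH_SPACE.items()}
        trials.append((toy_score(config), config))
    best = max(trials, key=lambda t: t[0])
    return best, trials

best_a, log_a = random_search(seed=42)
best_b, log_b = random_search(seed=42)
print("identical runs:", log_a == log_b)  # same seed -> same trials, same winner
```

Storing the seed and the trial log alongside the final model is a simple way to let an auditor replay exactly how it was chosen.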

6. Continuous Improvement

Modern AutoML platforms enable:

Automated model retraining as new data arrives

Performance monitoring and drift detection

A/B testing of model versions

Seamless model updates without manual intervention
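Drift detection usually means comparing the distribution of incoming data with the data the model was trained on. One widely used statistic for this is the Population Stability Index (PSI); the sketch below is an illustrative implementation, not necessarily what any given platform computes. Bins are equal-width over the training range, and the 0.1 / 0.25 cut-offs mentioned in the comments are a common rule of thumb rather than a standard.

```python
import math
import random

def psi(expected, actual, n_bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant feature

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            i = int((v - lo) / width)
            counts[min(max(i, 0), n_bins - 1)] += 1  # clamp out-of-range values into edge bins
        return [max(c / len(values), eps) for c in counts]  # eps avoids log(0)

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

rng = random.Random(7)
training = [rng.gauss(0, 1) for _ in range(5000)]
same = [rng.gauss(0, 1) for _ in range(5000)]       # production data, unchanged
shifted = [rng.gauss(1.0, 1) for _ in range(5000)]  # production data, mean has moved

# Rule of thumb: < 0.1 stable, 0.1-0.25 worth watching, > 0.25 significant drift.
print(f"PSI, same distribution:    {psi(training, same):.3f}")
print(f"PSI, shifted distribution: {psi(training, shifted):.3f}")
```

A platform that retrains automatically would run a check like this per feature on a schedule and trigger retraining (or an alert) when the index crosses the chosen threshold.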

7. Reduced Barriers to AI Adoption

Small and medium enterprises can now leverage AI without building large data science teams, leveling the playing field with tech giants.

Limitations and Challenges

1. Interpretability and Black Box Concerns

The Challenge: AutoML-generated models can be complex and difficult to understand, creating "black box AI" where the model's decision-making process is opaque (IBM, April 2025).

Why It Matters:

Regulated industries require explainable models

Medical professionals need to understand diagnostic recommendations

Financial institutions must justify credit decisions

Bias detection requires understanding feature importance

Current Solutions:

SHAP (Shapley Additive Explanations) integration

LIME (Local Interpretable Model-agnostic Explanations)

Feature importance visualizations

Automated model documentation

Remaining Gap: While interpretability tools help, they don't fully replicate the deep understanding a human expert develops when manually building models.
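To make "feature importance" concrete, here is a sketch of permutation importance, a simpler model-agnostic technique than SHAP or LIME: shuffle one feature column and measure how much accuracy drops. The fitted model and the data below are hypothetical; the model only looks at feature 0, so shuffling feature 1 should cost nothing.

```python
import random

def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)

def permutation_importance(model, rows, labels, n_repeats=5, seed=0):
    """Importance of feature j = average drop in accuracy after shuffling column j."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the label
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

def model(row):
    # Hypothetical fitted model: uses feature 0 only; feature 1 is ignored.
    return int(row[0] > 0.5)

rng = random.Random(1)
rows = [(rng.random(), rng.random()) for _ in range(1000)]
labels = [int(r[0] > 0.5) for r in rows]
print(permutation_importance(model, rows, labels))  # large for feature 0, zero for feature 1
```

Because it only needs predictions, the same function works on any model an AutoML run produces, which is why rankings like this are a common first step before more detailed SHAP or LIME explanations.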

2. High Costs for Enterprise Solutions

Commercial Platform Pricing:

DataRobot: $50,000-$200,000+ annually

H2O Driverless AI: ~$390,000 for three-year enterprise license

These costs can be prohibitive for small and medium-sized businesses, potentially limiting AutoML adoption to large enterprises with substantial AI budgets.

Consideration: Organizations must weigh upfront costs against the expense of hiring and maintaining data science teams, which can easily exceed AutoML platform costs.

3. Limited Customization

The Trade-Off: Automation sacrifices flexibility for convenience. In niche cases requiring highly customized models, AutoML solutions may struggle to deliver appropriate results (IBM, April 2025).

When Manual Approaches Win:

Novel research requiring cutting-edge techniques not yet in AutoML platforms

Highly specialized domains with unique requirements

Cases where domain-specific architectures are known to work best

4. Data Quality Dependency

Fundamental Limitation: "An AI model is as strong as its training data" (IBM, April 2025). AutoML cannot overcome poor data quality, bias in training sets, or inappropriate problem framing.

Common Issues:

Missing values beyond what imputation can reasonably handle

Insufficient data volume for reliable model training

Biased historical data perpetuating unfair outcomes

Data drift making models obsolete

Solution: Establishing data governance frameworks and unified data quality standards early helps ensure cleaner, more reliable datasets for modeling (AIMultiple, August 2025).

5. Risk of Overfitting

Without expert oversight, AutoML tools may create models that perform brilliantly on training data but fail on real-world inputs (IBM, April 2025).

Why It Happens: Exhaustive search over many models and parameters can find patterns in training data that don't generalize.

Mitigation: Proper validation procedures, holdout testing, and monitoring of deployed performance help catch overfitting before it causes problems.
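The risk is easy to demonstrate. In the sketch below the labels are pure noise and every candidate "model" is a hypothetical guesser with no real signal, yet simply picking the best of 500 candidates on a small validation set produces an impressive-looking score. Only the untouched holdout set reveals that the winner is no better than a coin flip.

```python
import random

def make_guesser(seed):
    """A candidate 'model' with no real signal: a fixed pseudo-random guess per sample."""
    def predict(sample_id):
        return random.Random(seed * 1_000_003 + sample_id).random() < 0.5
    return predict

def accuracy(model, ids, labels):
    return sum(model(i) == labels[i] for i in ids) / len(ids)

rng = random.Random(42)
labels = [rng.random() < 0.5 for _ in range(2040)]   # labels are pure noise
valid_ids, test_ids = range(0, 40), range(40, 2040)  # small validation set, large holdout set

candidates = [make_guesser(seed) for seed in range(1, 501)]  # 500 "configurations"
scores = [accuracy(m, valid_ids, labels) for m in candidates]
best = max(range(len(candidates)), key=scores.__getitem__)

valid_acc = scores[best]
test_acc = accuracy(candidates[best], test_ids, labels)
print(f"best validation accuracy: {valid_acc:.2f}")  # well above 0.5, purely by luck
print(f"same model on holdout:    {test_acc:.2f}")   # back to roughly 0.5
```

The wider the search, the larger this selection effect becomes, which is why the final score should always come from data the search never touched.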

6. Computational Resource Requirements

The Reality: Training multiple models with extensive hyperparameter search is computationally expensive.

Cost Considerations:

Cloud computing fees can add up quickly

GPU requirements for deep learning tasks

Time costs for extensive searches

Balancing Act: Users must balance search thoroughness against computational budgets and time constraints.
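Most AutoML tools expose this balance as a budget on trials, wall-clock time, or both. The sketch below is a hypothetical random search that stops when either budget runs out; the one-parameter objective (best `alpha` at 0.3) stands in for an expensive training run.

```python
import random
import time

def budgeted_search(objective, sample_config, max_trials=50, max_seconds=1.0, seed=0):
    """Random search that stops at whichever budget (trials or seconds) runs out first."""
    rng = random.Random(seed)
    deadline = time.monotonic() + max_seconds
    best_score, best_config, n_trials = float("-inf"), None, 0
    while n_trials < max_trials and time.monotonic() < deadline:
        config = sample_config(rng)
        score = objective(config)  # in practice: train and validate a model (the expensive part)
        n_trials += 1
        if score > best_score:
            best_score, best_config = score, config
    return best_score, best_config, n_trials

def sample(rng):
    return {"alpha": rng.uniform(0.0, 1.0)}

def objective(cfg):
    # Hypothetical objective: peaks when alpha is 0.3.
    return -(cfg["alpha"] - 0.3) ** 2

for trials in (5, 50, 500):
    score, cfg, used = budgeted_search(objective, sample, max_trials=trials)
    print(f"{used:>3} trials -> alpha={cfg['alpha']:.3f}, score={score:.5f}")
```

With a fixed seed each larger budget replays the smaller one's trials and then keeps going, so extra compute can only help here; the open question in practice is whether the improvement is worth the cost.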

7. Need for Human Oversight

Despite automation, experts remain necessary for:

Defining the right problem to solve

Ensuring models are applied correctly and ethically

Monitoring for data privacy violations

Detecting and addressing bias in model predictions

Understanding business context and constraints

Key Insight: "The bulk of work a data scientist does is not modeling, but rather data collection, domain understanding, figuring out how to design a good experiment, and what features can be most useful" (Neptune.ai, August 2023).

AutoML handles modeling but cannot replace domain expertise and strategic thinking.

8. Integration Challenges

Legacy System Compatibility: Older systems frequently face compatibility issues with modern AutoML platforms and API integration difficulties (AIMultiple, August 2025).

Solution: Conduct thorough technical assessments before selecting a platform, and plan system upgrades or middleware where needed.

9. Resistance to Change

Cultural Barriers: Traditional analysts may view AutoML skeptically. Limited understanding of AutoML tools among staff can slow adoption (AIMultiple, August 2025).

Solution: Run comprehensive training programs that present AutoML as a complementary tool, which fosters acceptance and empowers teams.

10. Evolving Regulatory Landscape

As AutoML becomes more prevalent in high-stakes domains, regulatory requirements are tightening:

EU AI Act requiring enhanced observability and compliance templates

Brazil's Senate passed a national AI law in March 2025 defining transparency, accountability, and oversight agency remit (Mordor Intelligence, July 2025)

Increasing disclosure standards from finance regulators

Platforms must continuously adapt to meet new compliance requirements.

AutoML vs Traditional Machine Learning

Development Timeline

Aspect | Traditional ML | AutoML |
--- | --- | --- |
Data preprocessing | Days to weeks (manual) | Hours (automated) |
Feature engineering | Weeks (requires expertise) | Hours (automated exploration) |
Model selection | Days (test a handful of options) | Hours (test dozens to hundreds) |
Hyperparameter tuning | Days to weeks (manual search) | Hours (systematic optimization) |
Total time | 3-6 months | Days to weeks |

Team Requirements

Traditional ML:

Data engineers (data preparation)

Data scientists (model building)

ML engineers (deployment)

DevOps (infrastructure)

Domain experts (guidance)

AutoML:

Domain experts (problem definition)

1-2 technical staff (platform management)

Optional: ML expert for oversight

Cost Structure

Traditional ML:

High personnel costs ($100K-$200K+ per specialist annually)

Extended project timelines delay ROI

Ongoing maintenance requires specialist retention

AutoML:

Platform licensing fees (roughly $50K-$200K+ per year for enterprise platforms)

Reduced personnel requirements

Faster time-to-value

Often lower total cost of ownership

Control and Flexibility

Traditional ML:

✅ Complete control over every decision

✅ Can implement cutting-edge techniques

✅ Deep customization possible

❌ Time-consuming and error-prone

❌ Requires specialized expertise

AutoML:

✅ Consistent, reproducible results

✅ Tests more options thoroughly

✅ Accessible to non-experts

❌ Limited to platform capabilities

❌ Less control over specific choices

Performance

Traditional ML:

Can achieve optimal performance with expert tuning

Performance depends heavily on practitioner skill

Risk of human error and bias in model selection

AutoML:

Often matches or exceeds manually designed models

Consistent quality across projects

Systematic exploration reduces risk of missing good solutions

May struggle with highly specialized or novel problems

When to Choose Which

Choose Traditional ML When:

You need cutting-edge techniques not yet in AutoML platforms

The problem requires highly specialized domain knowledge in model architecture

You have time and expertise for custom solution development

Research novelty is more important than rapid deployment

Choose AutoML When:

You need fast results with limited data science resources

The problem fits standard ML tasks (classification, regression, forecasting)

Consistency and reproducibility are critical

Multiple stakeholders need to build models

Budget or timeline constraints are tight

The Hybrid Approach

Many organizations use both:

AutoML for rapid prototyping: Quickly test if ML can solve the problem

Traditional ML for refinement: Expert-led customization of promising approaches

AutoML for production pipelines: Standardized, maintainable deployment

Myths vs Facts

Myth 1: AutoML Will Replace Data Scientists

Fact: AutoML augments data scientists rather than replacing them. Data scientists are freed from repetitive tasks to focus on higher-value work like problem formulation, domain understanding, and strategic decision-making.

"There's a sentiment that AutoML could leave a lot of Data Scientists jobless. Will it? Short answer – Nope. Even if AutoML solutions become 10x better, it will not make Machine Learning specialists of any trade irrelevant" (Neptune.ai, August 2023).

The bulk of a data scientist's work—data collection, domain understanding, experiment design, feature selection—requires human judgment that AutoML cannot replicate.

Myth 2: AutoML Always Produces Better Models

Fact: AutoML often produces excellent models but not always superior to expert-crafted solutions. For highly specialized domains or novel problems requiring cutting-edge techniques, manual approaches may still win.

BioAutoMATED's antibody-drug interaction models, while highly predictive, didn't match the performance of a manually tuned model comprising six different custom architectures (Cell Systems, June 2023).

Myth 3: AutoML Requires No Technical Knowledge

Fact: While AutoML reduces technical barriers, users still need understanding of:

What problem they're solving and why

Basic data quality assessment

How to interpret results and limitations

When model predictions should be trusted

Non-technical users can use AutoML, but some technical guidance improves results dramatically.

Myth 4: AutoML Is Only for Beginners

Fact: Expert data scientists increasingly use AutoML to accelerate workflows, establish baselines, and handle routine modeling tasks, freeing time for complex challenges requiring human creativity.

DataRobot users "appreciate the company's rise from a niche automated machine learning (AutoML) player to a full-lifecycle AI platform" serving "extended AI teams" (eWEEK, 2022).

Myth 5: All AutoML Tools Are Basically the Same

Fact: AutoML platforms differ dramatically in approach (genetic programming vs Bayesian optimization vs NAS), features, interpretability, customization, and target use cases.

TPOT uses genetic programming to evolve entire pipelines

Auto-sklearn uses Bayesian optimization with meta-learning

Google Cloud AutoML uses neural architecture search

H2O Driverless AI emphasizes feature engineering and interpretability
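To give a feel for the evolutionary approach, here is a toy genetic algorithm over a flat hyperparameter dictionary. It is deliberately simpler than TPOT, which evolves whole pipelines represented as trees, and the `SPACE` dictionary and `fitness` function are hypothetical stand-ins for a real search space and cross-validated score. Each generation keeps the fitter half (elitism) and refills the population by crossover plus mutation.

```python
import random

# Hypothetical search space.
SPACE = {
    "max_depth": list(range(1, 11)),
    "min_samples_leaf": [1, 2, 4, 8, 16],
    "n_estimators": [50, 100, 200, 400],
}

def fitness(cfg):
    # Stand-in for cross-validated accuracy; peaks at depth 6, leaf 4, 200 trees.
    return (1.0 - abs(cfg["max_depth"] - 6) * 0.03
            - abs(cfg["min_samples_leaf"] - 4) * 0.01
            - abs(cfg["n_estimators"] - 200) / 10_000)

def mutate(cfg, rng):
    child = dict(cfg)
    key = rng.choice(list(SPACE))
    child[key] = rng.choice(SPACE[key])  # re-draw one hyperparameter at random
    return child

def crossover(a, b, rng):
    # Each hyperparameter is inherited from one parent or the other.
    return {k: (a if rng.random() < 0.5 else b)[k] for k in SPACE}

def evolve(generations=15, pop_size=12, seed=0):
    rng = random.Random(seed)
    population = [{k: rng.choice(v) for k, v in SPACE.items()} for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[: pop_size // 2]  # selection: keep the fitter half
        children = [mutate(crossover(*rng.sample(parents, 2), rng), rng)
                    for _ in range(pop_size - len(parents))]
        population = parents + children
    population.sort(key=fitness, reverse=True)
    return population[0], history

best, history = evolve()
print(best, round(fitness(best), 4))
```

Because the best parents always survive, the best score per generation never decreases; Bayesian optimization would instead fit a model of `fitness` and use it to choose each next configuration.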

Myth 6: AutoML Models Are Black Boxes

Fact: While older AutoML systems lacked interpretability, modern platforms integrate explainability tools like SHAP and LIME, automatically generating feature importance rankings, partial dependence plots, and decision explanations.

However, interpretability challenges remain, especially for deep learning models with many layers.

Myth 7: AutoML Works on Any Data

Fact: AutoML still requires:

Sufficient data volume (typically hundreds to thousands of examples minimum)

Reasonable data quality, without excessive missing values

Appropriate problem framing

Meaningful features or ability to create them

"Garbage in, garbage out" applies to AutoML just as to traditional ML.

Myth 8: AutoML Is Too Expensive for Small Businesses

Fact: While enterprise platforms are pricey, free open-source alternatives exist:

TPOT (completely free)

Auto-sklearn (free)

H2O-3 (free open-source version)

AutoKeras (free)

Plus, cloud AutoML services offer pay-as-you-go pricing accessible to smaller budgets.

Myth 9: AutoML Deployment Is Automatic

Fact: While AutoML automates model building, deployment still requires:

Integration with existing systems

Monitoring for performance drift

Retraining schedules

Governance and compliance processes

Modern AutoML platforms include deployment tools, but production operation requires ongoing management.

Implementation Checklist

Phase 1: Assessment and Preparation

Define the Problem

[ ] Clearly articulate what you want to predict or classify

[ ] Establish success metrics (accuracy threshold, business impact)

[ ] Identify stakeholders and their requirements

[ ] Confirm ML is appropriate for this problem

Assess Data Readiness

[ ] Identify available data sources

[ ] Evaluate data volume (hundreds to thousands of examples minimum)

[ ] Check data quality (missing values, errors, consistency)

[ ] Verify you have permission to use the data

[ ] Ensure data is representative of real-world scenarios
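Several of these checks are easy to automate before any AutoML run. The sketch below works on a plain list of dictionaries with `None` marking missing values; the thresholds (500 rows, 20% missing, 95% majority class) and the sample churn dataset are hypothetical and should be set for your own problem.

```python
def readiness_report(rows, target, min_rows=500, max_missing=0.2, max_majority=0.95):
    """Return a list of human-readable data-readiness warnings (None means missing)."""
    if not rows:
        return ["dataset is empty"]
    issues = []
    if len(rows) < min_rows:
        issues.append(f"only {len(rows)} rows (threshold: {min_rows})")
    for col in sorted({key for row in rows for key in row}):
        missing = sum(row.get(col) is None for row in rows) / len(rows)
        if missing > max_missing:
            issues.append(f"column '{col}' is {missing:.0%} missing")
    labels = [row[target] for row in rows if row.get(target) is not None]
    if labels:
        top_share = max(labels.count(v) for v in set(labels)) / len(labels)
        if top_share > max_majority:
            issues.append(f"target '{target}' is {top_share:.0%} a single class")
    else:
        issues.append(f"target '{target}' has no labels")
    return issues

# Hypothetical churn dataset: 300 customers, income missing for every third one.
rows = [
    {"tenure": i % 48, "income": None if i % 3 == 0 else 52_000, "churned": int(i % 10 == 0)}
    for i in range(300)
]
for issue in readiness_report(rows, target="churned"):
    print("-", issue)
# - only 300 rows (threshold: 500)
# - column 'income' is 33% missing
```

Checks like permission to use the data or representativeness still need a human; a script only catches the mechanical problems.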

Establish Budget and Timeline

[ ] Define project timeline (weeks, months, quarter)

[ ] Allocate budget for platform costs or personnel

[ ] Plan for computational resources (cloud credits, hardware)

[ ] Identify required team members and their availability

Phase 2: Platform Selection

Evaluate Requirements

[ ] Determine if you need no-code, low-code, or full programming access

[ ] Assess interpretability requirements (regulated industry?)

[ ] Define deployment needs (cloud, on-premises, edge)

[ ] Consider integration requirements with existing systems

Compare Options

[ ] Test 2-3 platforms with small pilot projects

[ ] Evaluate ease of use for your team's skill level

[ ] Review interpretability and explainability features

[ ] Compare pricing models and total cost of ownership

[ ] Check community support and documentation quality

Make Decision

[ ] Document selection criteria and decision rationale

[ ] Secure necessary approvals and budget allocation

[ ] Set up initial platform access and accounts

Phase 3: Initial Implementation

Data Preparation

[ ] Consolidate data from multiple sources if needed

[ ] Clean obvious data quality issues

[ ] Create training/validation/test splits

[ ] Upload data to chosen platform

[ ] Verify data import and preview look correct
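Most platforms can create the splits for you, but if you prepare them yourself, a seeded shuffle keeps them reproducible. A minimal sketch with illustrative 70/15/15 proportions:

```python
import random

def train_valid_test_split(rows, valid_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle a copy of the rows with a fixed seed, then cut it into three disjoint parts."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_frac)
    n_valid = round(len(shuffled) * valid_frac)
    test = shuffled[:n_test]
    valid = shuffled[n_test:n_test + n_valid]
    train = shuffled[n_test + n_valid:]
    return train, valid, test

train, valid, test = train_valid_test_split(range(1000))
print(len(train), len(valid), len(test))  # 700 150 150
```

Random shuffling assumes rows are independent. For time series, split by time instead (train on the past, validate and test on later periods), or the model will be evaluated on data from its own future.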

Configure First Model

[ ] Define target variable and features

[ ] Set appropriate optimization metric

[ ] Configure time/resource budgets

[ ] Select relevant algorithm families if platform allows

[ ] Launch initial model training

Evaluate Results

[ ] Review performance metrics on test set

[ ] Examine feature importance rankings

[ ] Check for signs of overfitting

Phase 4: Refinement and Validation

Model Improvement

[ ] Address identified data quality issues

[ ] Engineer additional features if needed

[ ] Adjust optimization metrics if initial choice wasn't ideal

[ ] Increase search budget for better results

[ ] Test ensemble methods if available

Validation Testing

[ ] Test on completely new, unseen data if possible

[ ] Validate with domain experts reviewing predictions

[ ] Check performance across different subgroups

[ ] Assess potential biases in predictions

[ ] Document model limitations and failure modes
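Checking performance across subgroups simply means computing the same metric separately for each group. In the hypothetical example below, overall accuracy is 62.5%, which hides the fact that the model is perfect for group A and mostly wrong for group B.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each subgroup label."""
    correct, total = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}

# Hypothetical labels, predictions and group membership.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 1, 1, 1, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

by_group = accuracy_by_group(y_true, y_pred, groups)
gap = max(by_group.values()) - min(by_group.values())
print(by_group)  # {'A': 1.0, 'B': 0.25}
print(gap)       # 0.75
```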

Interpretability Review

[ ] Generate and review feature importance explanations

[ ] Create SHAP or LIME explanations for key predictions

[ ] Prepare model documentation for stakeholders

[ ] Verify explanations align with domain knowledge

Phase 5: Deployment

Prepare for Production

[ ] Export model in required format (API, code, container)

[ ] Set up deployment infrastructure

[ ] Configure monitoring and alerting

[ ] Establish model retraining schedule

[ ] Create rollback procedures

Integration Testing

[ ] Test API endpoints or integration points

[ ] Verify latency meets requirements

[ ] Validate predictions in production environment

[ ] Confirm monitoring is capturing necessary metrics

Go-Live

[ ] Deploy to production following change management procedures

[ ] Monitor closely during initial period

[ ] Gather user feedback

[ ] Document any issues and resolutions

Phase 6: Ongoing Operations

Monitoring

[ ] Track prediction accuracy over time

[ ] Monitor for data drift

[ ] Watch for unexpected patterns

[ ] Review error cases regularly
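Tracking accuracy over time can be as simple as a rolling window over recent labelled predictions. The class below is a hypothetical sketch, not any platform's API; the window size and alert threshold are illustrative.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Tracks accuracy over the last `window` labelled predictions and flags drops."""

    def __init__(self, window=100, alert_below=0.85):
        self.outcomes = deque(maxlen=window)  # oldest outcomes fall off automatically
        self.alert_below = alert_below

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    @property
    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def should_alert(self):
        # Only alert once the window is full, so a few early errors don't trigger it.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy < self.alert_below

monitor = RollingAccuracyMonitor(window=50, alert_below=0.8)
for _ in range(50):
    monitor.record(prediction=1, actual=1)  # a healthy period
print(monitor.accuracy, monitor.should_alert())  # 1.0 False
for _ in range(20):
    monitor.record(prediction=1, actual=0)  # conditions change; errors pile up
print(monitor.accuracy, monitor.should_alert())  # 0.6 True
```

In production the alert would feed your alerting system and, depending on policy, trigger the retraining step in the maintenance checklist.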

Maintenance

[ ] Retrain models on schedule or when drift detected

[ ] Update features as new data sources become available

[ ] Refine based on feedback and business changes

[ ] Archive model versions for audit trail

Governance

[ ] Maintain documentation of model decisions

[ ] Conduct bias audits periodically

[ ] Ensure compliance with relevant regulations

[ ] Review and update ethical guidelines

Future Outlook

Near-Term Developments (2025-2027)

Enhanced Explainability: Growing regulatory requirements will drive AutoML platforms to integrate more sophisticated interpretability tools beyond current SHAP and LIME implementations. Expect native support for counterfactual explanations, causal inference tools, and domain-specific explanation frameworks.

Federated Learning Integration: AutoML platforms will increasingly support federated learning, allowing organizations to collaboratively train models while keeping sensitive data on-premises—critical for healthcare, finance, and government applications.

Edge AI Optimization: AutoML will automatically optimize models for deployment on mobile devices and IoT hardware, balancing accuracy against memory, compute, and energy constraints.

Multimodal AutoML: Current platforms primarily handle structured data, images, or text separately. Next-generation tools will seamlessly combine multiple data types in single models—simultaneously processing transaction records, customer service transcripts, and identity photos.

Medium-Term Evolution (2027-2030)

Level 6 Autonomy: Current AutoML represents Level 3-4 autonomy (task-specific automation). Research points toward Level 6 systems that can interactively recommend prediction tasks, automatically determine if ML is appropriate, and handle entire data science workflows with minimal human input (ResearchGate, May 2020).

Causal AutoML: Moving beyond correlation to automatically discover causal relationships in data, enabling more robust predictions and actionable insights for interventions.

AutoML for Small Data: While current AutoML requires substantial datasets, advances in transfer learning, few-shot learning, and synthetic data generation will make AutoML effective with limited examples—crucial for rare diseases, emerging products, and specialized domains.

Automated Bias Detection and Mitigation: As AI ethics becomes increasingly important, AutoML platforms will incorporate automatic bias detection across protected classes and suggest debiasing strategies during model development.

Long-Term Vision (Beyond 2030)

Natural Language Problem Definition: Instead of configuring models through interfaces, users will describe problems in natural language: "I want to predict which customers will churn in the next 90 days and why, focusing on factors we can influence."

Automated Experiment Design: AutoML systems will not just build models but design data collection strategies, A/B tests, and intervention experiments to answer business questions optimally.

Self-Optimizing Production Systems: Deployed models will continuously learn from new data, detect drift, retrain themselves, and deploy updates without human intervention—while maintaining safety guardrails and human oversight for critical decisions.

Domain-Specialized AutoML: While general-purpose platforms dominate today, specialized AutoML tools optimized for specific domains (legal contract analysis, protein folding, financial portfolio optimization) will emerge, incorporating deep domain knowledge.

Market Trajectory

Multiple projections point to AutoML becoming a fundamental component of enterprise software, integrated into:

Business intelligence platforms

Customer relationship management systems

Supply chain management tools

Healthcare electronic medical records

Financial services platforms

Rather than standalone tools, AutoML will be embedded intelligence powering decision support across software ecosystems.

Persistent Challenges

The Expertise Paradox: As AutoML makes basic ML more accessible, demand for expert data scientists who understand when and how to use it will increase, not decrease. The field will bifurcate between automated routine tasks and complex strategic work requiring human creativity.

Governance at Scale: As thousands of models are automatically built and deployed, organizations will struggle with:

Auditing which models are in production where

Ensuring compliance across diverse regulatory frameworks

Managing model versions and dependencies

Coordinating retraining across interconnected systems

Energy and Compute Costs: Extensive automated search requires significant computational resources. Unless efficiency dramatically improves, environmental and cost concerns may constrain AutoML's most ambitious applications.

Despite challenges, the trajectory is clear: AutoML will transition from emerging technology to essential infrastructure, fundamentally changing who can build AI systems and how quickly they can be deployed.

FAQ

What is AutoML in simple terms?

AutoML (Automated Machine Learning) is software that automatically builds AI prediction models for you. Instead of hiring data scientists to write code for months, you upload your data, tell the system what you want to predict, and it handles data preparation, testing different algorithms, and creating the best model—often in hours instead of months.

How much does AutoML cost?

Costs vary dramatically: Free open-source options (TPOT, Auto-sklearn, AutoKeras) exist with no licensing fees. Cloud services like Google Cloud AutoML use pay-as-you-go pricing starting from a few dollars. Enterprise commercial platforms cost far more: DataRobot runs roughly $50,000-$200,000+ per year, and an H2O Driverless AI enterprise license costs about $390,000 over three years. Total cost depends on platform choice, data volume, and computational requirements.

Can AutoML replace data scientists?

No. AutoML handles repetitive modeling tasks but cannot replace the strategic thinking, domain expertise, and creative problem-solving that data scientists provide. Research shows over 80% of ML work involves understanding the problem, collecting the right data, and designing experiments—tasks requiring human judgment. AutoML makes data scientists more productive by automating routine work so they can focus on higher-value activities.

What industries benefit most from AutoML?

Financial services (fraud detection, credit scoring), healthcare (diagnostic imaging, clinical decision support), retail (demand forecasting, personalization), manufacturing (predictive maintenance, quality control), and government (fraud prevention, resource allocation) see the biggest impacts. Any industry with substantial data and prediction problems can benefit.

How accurate are AutoML models?

AutoML often matches or exceeds manually designed models because it systematically tests more options than humans have time for. Examples: PayPal improved fraud detection from 89% to 94.7% accuracy, Lenovo increased sales prediction accuracy by 7.5%, dental implant classification achieved 95.4% precision. However, accuracy depends on data quality, problem complexity, and domain appropriateness.

What are the main limitations of AutoML?

Key limitations include interpretability challenges (black box models), high costs for enterprise platforms (roughly $50K-$200K+ per year, or about $390K for a three-year license), limited customization compared to manual approaches, dependency on data quality, risk of overfitting without expert oversight, and computational expense. AutoML also cannot define the right problem to solve or replace domain expertise.

How long does it take to build a model with AutoML?

Model building time varies by problem complexity and platform configuration, typically ranging from 30 minutes to several hours. Some simple models train in minutes, while complex deep learning tasks may take hours or days. This represents a massive time savings compared to traditional approaches requiring weeks or months.

Do I need coding skills to use AutoML?

It depends on the platform. No-code AutoML tools like Google Cloud AutoML require minimal technical skills—you upload data through a web interface and click buttons. Low-code platforms like H2O may require basic understanding of data concepts but minimal coding. Open-source tools like TPOT require Python programming skills. Choose based on your team's capabilities.

What's the difference between AutoML and traditional machine learning?

Traditional ML requires manually writing code for every step: data cleaning, feature engineering, selecting algorithms, tuning settings, validating results. AutoML automates these steps. Traditional ML offers more control and customization but takes longer and requires specialized expertise. AutoML is faster and more accessible but less flexible for highly specialized problems.

Can AutoML work with small datasets?

AutoML typically performs best with hundreds to thousands of training examples. With very small datasets (dozens of examples), AutoML may struggle or overfit. However, some platforms are developing transfer learning and few-shot learning capabilities to work with limited data. The required data volume depends on problem complexity—simpler problems need less data.

How do I choose the right AutoML platform?

Consider:

(1) Your team's technical skill level (no-code vs code-required)

(2) Interpretability requirements (regulated industries need explainable models)

(3) Deployment needs (cloud vs on-premises)

(4) Budget constraints

(5) Integration with existing systems

(6) Support and documentation quality.

Test 2-3 platforms with pilot projects before committing to ensure they meet your specific needs.

Is AutoML suitable for deep learning?

Yes, some AutoML platforms excel at deep learning through Neural Architecture Search (NAS). Google Cloud AutoML, AutoKeras, and others can automatically design neural network architectures for image, text, and structured data. However, deep learning typically requires more data and computational resources than simpler algorithms.

What data formats does AutoML support?

Most AutoML platforms handle: Structured tabular data (CSV, Excel, database tables), images (JPEG, PNG), text data, and time series. Some specialized platforms support biological sequences (DNA, RNA, proteins), audio, video, and graph data. Data format support varies by platform—check specific tool documentation.

How does AutoML handle missing data?

AutoML platforms automatically detect missing values and apply appropriate imputation strategies: mean/median imputation for numerical features, mode imputation for categorical features, predictive imputation using other features, or marking missingness as informative. The specific strategy depends on the platform and data characteristics.
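Two of those strategies, median/mode imputation plus a "was missing" flag, are sketched below in plain Python. The column names and rows are hypothetical. Note that the fill values should be learned from training data only and then reused unchanged on validation, test and production data, which is why the function returns them.

```python
from statistics import median, mode

def impute(rows, numeric, categorical):
    """Fill None values: median for numeric columns, mode for categorical ones,
    and add a <column>_missing flag so the model can learn from missingness itself."""
    fill = {}
    for col in numeric:
        fill[col] = median(r[col] for r in rows if r[col] is not None)
    for col in categorical:
        fill[col] = mode(r[col] for r in rows if r[col] is not None)
    out = []
    for r in rows:
        new = dict(r)
        for col in numeric + categorical:
            new[f"{col}_missing"] = r[col] is None
            if r[col] is None:
                new[col] = fill[col]
        out.append(new)
    return out, fill

rows = [
    {"age": 30, "plan": "basic"},
    {"age": None, "plan": "pro"},
    {"age": 50, "plan": None},
    {"age": 40, "plan": "basic"},
]
filled, fill_values = impute(rows, numeric=["age"], categorical=["plan"])
print(fill_values)  # {'age': 40, 'plan': 'basic'}
print(filled[1])    # {'age': 40, 'plan': 'pro', 'age_missing': True, 'plan_missing': False}
```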

Can AutoML detect bias in data?

Modern AutoML platforms increasingly include bias detection features that identify disparate performance across demographic groups, flag imbalanced representations in training data, and generate fairness metrics. However, fully addressing bias requires human judgment about what constitutes fairness in specific contexts. AutoML assists bias detection but doesn't automatically solve ethical challenges.
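Two simple fairness metrics illustrate what such tools report: the gap in positive-prediction ("selection") rates between groups, and the ratio of the lowest to the highest rate. A commonly cited heuristic, the "four-fifths rule", flags ratios below 0.8. The predictions and groups below are hypothetical, and whether either metric is the right notion of fairness is exactly the human judgment the paragraph above refers to.

```python
def selection_rates(y_pred, groups):
    """Share of positive predictions (e.g. 'approve') within each group."""
    rates = {}
    for g in sorted(set(groups)):
        preds = [p for p, gg in zip(y_pred, groups) if gg == g]
        rates[g] = sum(preds) / len(preds)
    return rates

# Hypothetical approval decisions for two groups of five applicants.
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A"] * 5 + ["B"] * 5

rates = selection_rates(y_pred, groups)
parity_gap = max(rates.values()) - min(rates.values())    # demographic parity difference
impact_ratio = min(rates.values()) / max(rates.values())  # below 0.8 is a common red flag
print(rates)  # {'A': 0.8, 'B': 0.2}
print(f"gap={parity_gap:.2f}, ratio={impact_ratio:.2f}")  # gap=0.60, ratio=0.25
```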

What happens after AutoML creates a model?

Most platforms provide multiple outputs: (1) Trained model exported as code (Python, Java) or API endpoint, (2) Deployment instructions, (3) Model documentation and explanations, (4) Performance metrics and validation results. You then integrate the model into your application, set up monitoring, and establish retraining schedules to maintain accuracy as data evolves.

Does AutoML work for time series forecasting?

Yes, many AutoML platforms include specialized time series capabilities that handle seasonality, trends, and temporal dependencies. Google Vertex AI AutoML Forecasting, H2O Driverless AI, and others offer dedicated time series modules. They automatically detect patterns like weekly cycles, holidays, and long-term trends to generate accurate forecasts.
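One simple way to detect a weekly cycle is autocorrelation: the lag at which the series best matches a shifted copy of itself. The sketch below applies it to hypothetical daily sales with a weekend spike and then uses the detected period for a seasonal-naive forecast (repeat the value from one period earlier). Dedicated forecasting modules use far richer models; this only shows the underlying idea, and the function names are illustrative.

```python
def autocorrelation(series, lag):
    n = len(series)
    mean = sum(series) / n
    dev = [x - mean for x in series]
    denom = sum(d * d for d in dev)
    return sum(dev[t] * dev[t + lag] for t in range(n - lag)) / denom

def detect_period(series, max_lag=14):
    """Return the lag (in steps) with the strongest autocorrelation."""
    return max(range(2, max_lag + 1), key=lambda lag: autocorrelation(series, lag))

def seasonal_naive_forecast(series, period, horizon):
    """Forecast each future step as the value observed one period earlier."""
    history = list(series)
    for _ in range(horizon):
        history.append(history[-period])
    return history[len(series):]

week = [100, 102, 98, 101, 99, 140, 150]  # hypothetical daily sales, weekend spike
sales = week * 20                         # 20 weeks of history
period = detect_period(sales)
print(period)                                       # 7
print(seasonal_naive_forecast(sales, period, 7))    # [100, 102, 98, 101, 99, 140, 150]
```

A seasonal-naive forecast is also a useful baseline: any model an AutoML run produces should beat it before being trusted.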

How secure is AutoML?

Security depends on the deployment choice: cloud-based AutoML stores data on provider servers (Google, AWS, etc.) under the provider's security measures, while on-premises or air-gapped deployments keep data entirely within your own infrastructure. Most enterprise platforms offer compliance certifications (SOC 2, HIPAA, FedRAMP) for regulated industries. Review specific platform security features and choose deployment models matching your requirements.

Key Takeaways

AutoML democratizes AI by automating the complex, time-consuming parts of machine learning, enabling non-experts to build sophisticated predictive models without writing code or needing deep technical knowledge.

The market is experiencing explosive growth: from $1.64 billion in 2024, projections range from $10.93 billion by 2029 to $231.54 billion by 2034 (depending on the source), with annual growth rates of 42-48%.

Real results validate the technology: PayPal improved fraud detection accuracy from 89% to 94.7%, Lenovo boosted sales forecasting by 7.5%, and MIT researchers cut biology model development time from months to hours.

Multiple platform options exist ranging from free open-source tools (TPOT, Auto-sklearn) to enterprise solutions (DataRobot, H2O Driverless AI) to cloud services (Google Cloud AutoML), each with different strengths, costs, and use cases.

AutoML augments rather than replaces data scientists, handling repetitive modeling tasks while experts focus on problem definition, domain understanding, and strategic decision-making—tasks requiring human creativity.

Key benefits include dramatic time savings (months to days), cost reduction, accessibility for domain experts, improved accuracy through systematic search, and consistent reproducible results.

Significant challenges remain around model interpretability (black box concerns), high enterprise platform costs (roughly $50K-$200K+ per year, or about $390K for a three-year license), limited customization, data quality dependency, and need for expert oversight.

Industries adopting fastest include financial services (31% of 2024 demand), healthcare (44.88% growth rate), retail, manufacturing, and government—any sector with substantial data and prediction problems.

Geographic leadership: North America dominates with 46.4% market share ($2.08B in 2024), but Asia-Pacific shows fastest growth (45.97% CAGR through 2030) driven by national AI strategies.

Future trajectory points to enhanced explainability, federated learning integration, edge AI optimization, and evolution toward Level 6 autonomous systems that interactively recommend tasks and handle entire workflows with minimal human input.

Actionable Next Steps

Assess Your Use Case: Identify a specific prediction problem in your organization where ML could add value. Start with problems that have clear metrics (reducing fraud, improving forecast accuracy, cutting costs) rather than vague goals.

Evaluate Your Data Readiness: Before choosing a platform, audit your data: Do you have at least hundreds of labeled examples? Is data quality reasonable? Can you access all relevant data sources? Address major data issues before starting with AutoML.

Start with Free Trials: Test 2-3 AutoML platforms with small pilot projects before committing budget. Most commercial platforms offer free trials. Open-source tools like TPOT and Auto-sklearn are always free to test.

Build Internal Knowledge: Invest in training for 1-2 team members who will champion AutoML adoption. Even no-code platforms benefit from users who understand basic ML concepts and limitations.

Begin with Low-Risk Projects: Choose initial projects that can be completed in under six months with clear business value. Avoid mission-critical applications until you've gained experience. Colin Priest at DataRobot notes projects over a year are "almost certainly doomed for failure."

Establish Data Governance: Before scaling AutoML, implement data quality standards, privacy protections, and bias monitoring frameworks. Problems in these areas compound as you deploy more models.

Plan for Deployment: Building models is only half the challenge. Before starting, plan how models will integrate with existing systems, who will monitor performance, and how you'll retrain as data evolves.

Create Cross-Functional Teams: Include domain experts, IT staff, and business stakeholders from the start. One major reason for abandoned projects is that IT teams aren't informed early enough in the lifecycle.

Budget Realistically: Factor in not just platform costs but computational resources (cloud credits or hardware), personnel time, and ongoing maintenance. Compare total cost of ownership against hiring specialized data science staff.

Measure and Iterate: Define success metrics upfront (accuracy targets, cost savings, time reduction). Measure actual results against goals and iterate based on learnings. Celebrate wins to build organizational momentum for AI adoption.
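The data-readiness audit described above can be partly scripted before any platform is chosen. What follows is a minimal, stdlib-only Python sketch, assuming records arrive as a list of dicts with a label field. The function name audit_data, the example field names, and both thresholds (200 labeled rows, 20% missing values per column) are hypothetical choices for illustration, not figures from this article or from any AutoML tool.

```python
from collections import Counter


def audit_data(rows, label_key, min_labeled=200, max_missing_rate=0.2):
    """Summarise basic readiness signals for a list of record dicts.

    min_labeled and max_missing_rate are illustrative thresholds, not standards.
    A value of None counts as missing; a row without label_key counts as unlabeled.
    """
    if not rows:
        return {"labeled": 0, "class_counts": {}, "missing_rates": {}, "issues": ["no rows to audit"]}

    labeled = [r for r in rows if r.get(label_key) is not None]
    feature_cols = sorted({key for r in rows for key in r if key != label_key})
    # Fraction of rows where each feature is absent or None.
    missing_rates = {
        col: sum(1 for r in rows if r.get(col) is None) / len(rows) for col in feature_cols
    }
    class_counts = Counter(r[label_key] for r in labeled)

    issues = []
    if len(labeled) < min_labeled:
        issues.append(f"only {len(labeled)} labeled rows (want at least {min_labeled})")
    for col, rate in missing_rates.items():
        if rate > max_missing_rate:
            issues.append(f"column {col!r} is {rate:.0%} missing")
    if len(class_counts) < 2:
        issues.append("fewer than two distinct labels")
    return {
        "labeled": len(labeled),
        "class_counts": dict(class_counts),
        "missing_rates": missing_rates,
        "issues": issues,
    }


if __name__ == "__main__":
    # Hypothetical fraud-style records, for illustration only.
    sample = [
        {"amount": 12.5, "country": "US", "is_fraud": 0},
        {"amount": None, "country": "DE", "is_fraud": 1},
        {"amount": 80.0, "country": None, "is_fraud": None},
    ]
    for issue in audit_data(sample, "is_fraud")["issues"]:
        print("-", issue)
```

A script like this only checks volume, missingness, and label variety; judging whether the values themselves are accurate still needs someone who knows the domain.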

Glossary

AutoML (Automated Machine Learning): The process of automating the end-to-end application of machine learning to real-world problems, including data preprocessing, feature engineering, model selection, and hyperparameter tuning.

Bias: Systematic errors in model predictions that unfairly discriminate against certain groups, or statistical tendencies that lead to inaccurate estimates.

Bayesian Optimization: A technique for finding the best hyperparameters by building a probability model of the objective function and using it to select promising configurations to test.

Black Box Model: A machine learning model whose internal decision-making process is difficult or impossible for humans to understand or explain.

Classification: A type of prediction problem where the goal is to assign input data to predefined categories (e.g., spam vs. not spam, disease present vs. absent).

Cross-Validation: A technique for assessing model performance by splitting data into multiple subsets, training on some and testing on others, then rotating which subsets are used for each purpose.

Data Preprocessing: The process of cleaning and transforming raw data into a format suitable for machine learning, including handling missing values, outliers, and encoding categorical variables.

Deployment: The process of putting a trained machine learning model into production where it can make predictions on new, real-world data.

Ensemble: A machine learning technique that combines predictions from multiple models to achieve better performance than any single model.

Feature Engineering: The process of creating new input variables (features) from raw data, or selecting which existing features to use, to improve model performance.

Feature Selection: Techniques for identifying which input variables are most predictive and should be included in a model, while removing irrelevant or redundant features.

Genetic Programming: An evolutionary algorithm that evolves computer programs (or ML pipelines) by mimicking biological evolution through selection, crossover, and mutation operations.

Hyperparameter: Configuration settings that control how a machine learning algorithm learns, which must be set before training begins (e.g., learning rate, tree depth, regularization strength).

Hyperparameter Tuning: The process of systematically testing different hyperparameter configurations to find settings that produce the best-performing model.

Interpretability: The degree to which a human can understand why a machine learning model made a particular prediction or decision.

LIME (Local Interpretable Model-agnostic Explanations): A technique that explains individual predictions by approximating the complex model locally with a simpler, interpretable model.

Meta-Learning: Using knowledge from previous machine learning experiments on other datasets to make smarter initial choices when tackling new problems.

Model Selection: The process of choosing which machine learning algorithm or architecture to use for a specific problem from among many options.

Neural Architecture Search (NAS): Automatically designing neural network architectures by systematically exploring different layer configurations, connections, and operations.

Overfitting: When a model performs very well on training data but poorly on new, unseen data because it learned patterns specific to the training set rather than general patterns.

Pipeline: A sequence of data processing steps (preprocessing, feature engineering, model training) that transforms raw data into predictions.

Regression: A type of prediction problem where the goal is to predict continuous numerical values (e.g., house prices, temperature, sales volume).

SHAP (Shapley Additive Explanations): A method for explaining model predictions by calculating how much each feature contributed to a particular prediction, based on game theory concepts.

Supervised Learning: Machine learning where the algorithm learns from labeled examples (input-output pairs) to make predictions on new, unlabeled data.

Time Series Forecasting: Predicting future values based on historical sequences of data points collected over time (e.g., sales, weather, stock prices).

Transfer Learning: Using knowledge from a model trained on one task to improve performance on a related but different task, often with less data.

Validation Set: Data held aside from training to evaluate model performance during development and make decisions about hyperparameters or model selection.
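Several of the glossary terms above (model selection, hyperparameter tuning, cross-validation) describe one loop that AutoML tools run for you. The following is a minimal, stdlib-only Python sketch of that loop, assuming a tiny synthetic two-feature dataset and a hypothetical search space of two simple classifiers. Every name in it (fit_knn, fit_centroid, auto_select, SEARCH_SPACE) is illustrative and not the API of any AutoML library. It uses exhaustive grid search for simplicity; Auto-sklearn instead relies on Bayesian optimization and TPOT on genetic programming to decide which configurations to try.

```python
import random
from statistics import mean


def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def fit_knn(train, k):
    """k-nearest-neighbours classifier; k is its only hyperparameter."""
    def predict(x):
        nearest = sorted(train, key=lambda row: sq_dist(row[0], x))[:k]
        labels = [label for _, label in nearest]
        return max(set(labels), key=labels.count)  # majority vote
    return predict


def fit_centroid(train):
    """Nearest-centroid classifier; it has no hyperparameters."""
    groups = {}
    for features, label in train:
        groups.setdefault(label, []).append(features)
    centroids = {label: [mean(col) for col in zip(*rows)] for label, rows in groups.items()}

    def predict(x):
        return min(centroids, key=lambda label: sq_dist(centroids[label], x))
    return predict


# Hypothetical search space: (model family, hyperparameter settings) pairs.
FITTERS = {"knn": fit_knn, "nearest_centroid": fit_centroid}
SEARCH_SPACE = [("knn", {"k": k}) for k in (1, 3, 5, 7)] + [("nearest_centroid", {})]


def cross_val_accuracy(data, name, params, folds=5):
    """Mean accuracy over k folds: train on k-1 folds, test on the held-out one."""
    scores = []
    for i in range(folds):
        test = data[i::folds]
        train = [row for j, row in enumerate(data) if j % folds != i]
        predict = FITTERS[name](train, **params)
        scores.append(mean(1.0 if predict(x) == y else 0.0 for x, y in test))
    return mean(scores)


def auto_select(data, folds=5):
    """Grid search: score every configuration and keep the best (first wins ties)."""
    best = None
    for name, params in SEARCH_SPACE:
        score = cross_val_accuracy(data, name, params, folds)
        if best is None or score > best[2]:
            best = (name, params, score)
    return best


def make_toy_data(n=60, seed=0):
    """Two Gaussian blobs in 2-D, labelled 0 and 1, shuffled reproducibly."""
    rng = random.Random(seed)
    data = []
    for i in range(n):
        label = i % 2
        center = 0.0 if label == 0 else 3.0
        data.append(([rng.gauss(center, 0.5), rng.gauss(center, 0.5)], label))
    rng.shuffle(data)
    return data


if __name__ == "__main__":
    name, params, score = auto_select(make_toy_data())
    print(f"best: {name} {params}, cross-validated accuracy {score:.3f}")
```

Replacing the exhaustive loop in auto_select with a smarter proposal strategy, and widening SEARCH_SPACE to include preprocessing and feature-engineering steps, is broadly what the platforms discussed in this article do at much larger scale.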

Sources & References

Market.us. (March 2025). "Automated Machine Learning Market Size | CAGR at 48.30%". https://market.us/report/automated-machine-learning-market/

The Business Research Company. (2025). "Automated Machine Learning (AutoML) Market Report 2025". https://www.researchandmarkets.com/reports/5896115/automated-machine-learning-automl-market-report

Knowledge-Sourcing. (2025). "Automated Machine Learning (AUTOML) Market Size". https://www.knowledge-sourcing.com/report/automated-machine-learning-automl-market

Business Wire. (July 19, 2024). "Global Automated Machine Learning (AutoML) Business Analysis Report 2024: Market to Grow by Almost $10 Billion to 2030 - AutoML is Becoming a Key Tool for Enterprises - ResearchAndMarkets.com". https://www.businesswire.com/news/home/20240719084267/en/

Global Market Insights. (April 1, 2024). "Automated Machine Learning (AutoML) Market Size". https://www.gminsights.com/industry-analysis/automated-machine-learning-market

Mordor Intelligence. (July 7, 2025). "Automated Machine Learning Market Size, Trends, Share & Growth Analysis 2030". https://www.mordorintelligence.com/industry-reports/automated-machine-learning-market

P&S Market Research. (2025). "Automated Machine Learning (AutoML) Market Report". https://www.psmarketresearch.com/market-analysis/automated-machine-learning-market

DS Stream. (2025). "Comparison of AI Data Analytics Platforms: DataRobot vs H2O.ai vs Google AutoML". https://www.dsstream.com/post/comparison-of-ai-data-analytics-platforms-datarobot-vs-h2o-ai-vs-google-automl

eWEEK. (November 29, 2022). "DataRobot vs. H2O.ai: Top AI Cloud Platforms". https://www.eweek.com/big-data-and-analytics/datarobot-vs-h2oai/

H2O.ai. (2025). "H2O Driverless AI". https://h2o.ai/platform/ai-cloud/make/h2o-driverless-ai/

H2O.ai. (2025). "Driving Away Fraudsters at Paypal". https://h2o.ai/case-studies/driving-away-fraudsters-at-paypal/

H2O.ai. (2023). "Building a Fraud Detection Model with H2O AI Cloud". https://h2o.ai/blog/2023/building-a-fraud-detection-model-with-h2o-ai-cloud/

MIT News. (July 6, 2023). "MIT scientists build a system that can generate AI models for biology research". https://news.mit.edu/2023/bioautomated-open-source-machine-learning-platform-for-research-labs-0706

Cell Systems. Valeri, J.A., Soenksen, L.R., et al. (June 21, 2023). "BioAutoMATED: An end-to-end automated machine learning tool for explanation and design of biological sequences". https://www.sciencedirect.com/science/article/pii/S2405471223001515

PubMed. (June 21, 2023). "BioAutoMATED: An end-to-end automated machine learning tool for explanation and design of biological sequences". https://pubmed.ncbi.nlm.nih.gov/37348466/

Harvard-MIT HST. (July 12, 2023). "MIT scientists build a system that can generate AI models for biology research". https://hst.mit.edu/news-events/mit-scientists-build-system-can-generate-ai-models-biology-research

AutoML.info. (June 11, 2023). "TPOT - AutoML". http://automl.info/tpot/

Springer. Hutter, F., Kotthoff, L., & Vanschoren, J. (April 18, 2024). "Automated machine learning: past, present and future". Artificial Intelligence Review. https://link.springer.com/article/10.1007/s10462-024-10726-1

Springer. Gil, Y., et al. (August 8, 2023). "Eight years of AutoML: categorisation, review and trends". Knowledge and Information Systems. https://link.springer.com/article/10.1007/s10115-023-01935-1

AIMultiple. (August 12, 2025). "22 AutoML Case Studies: Applications and Results". https://research.aimultiple.com/automl-case-studies/

Neptune.ai. (August 4, 2023). "AutoML Solutions: What I Like and Don't Like About AutoML as a Data Scientist". https://neptune.ai/blog/automl-solutions

IBM. (April 17, 2025). "What Is AutoML?". https://www.ibm.com/think/topics/automl

ResearchGate. Karmaker, S.K., et al. (May 8, 2020). "AutoML to Date and Beyond: Challenges and Opportunities". https://www.researchgate.net/publication/341252268_AutoML_to_Date_and_Beyond_Challenges_and_Opportunities

PMC (PubMed Central). (2025). "Automated Machine Learning in Dentistry: A Narrative Review". https://pmc.ncbi.nlm.nih.gov/articles/PMC11817062/

Wikipedia. (June 30, 2025). "Automated machine learning". https://en.wikipedia.org/wiki/Automated_machine_learning

Google Cloud. (2025). "AutoML Solutions - Train models without ML expertise". https://cloud.google.com/automl
