What Is A Mamba Model?

- Muiz As-Siddeeqi

- Dec 9, 2025

- 19 min read

The AI world moved fast when two researchers unveiled Mamba in December 2023. Within months, companies deployed it in production code generators, billion-parameter language models, and medical imaging systems. The architecture doesn't replace Transformers—it reimagines how we handle sequences.

Mamba processes text, images, and genomic data with linear time complexity. While Transformer models such as GPT-4 slow down as context grows, Mamba's cost per generated token stays constant. This matters for reading legal contracts, analyzing patient records, or generating thousands of tokens in real time.


TL;DR

Mamba is a selective state space model (SSM) that processes sequences linearly instead of quadratically like Transformers

Achieves 5× higher throughput than Transformers while matching or exceeding performance on language tasks

Powers production systems from Mistral's Codestral Mamba to IBM's Granite 4.0 with 70% memory reductions

Excels at million-token sequences, genomics, long-form content, and continuous signals (audio, video)

Hybrid Mamba-Transformer models combine both architectures for superior performance across diverse tasks

Validated across modalities: language (The Pile), vision (ImageNet), audio, genomics, and medical imaging

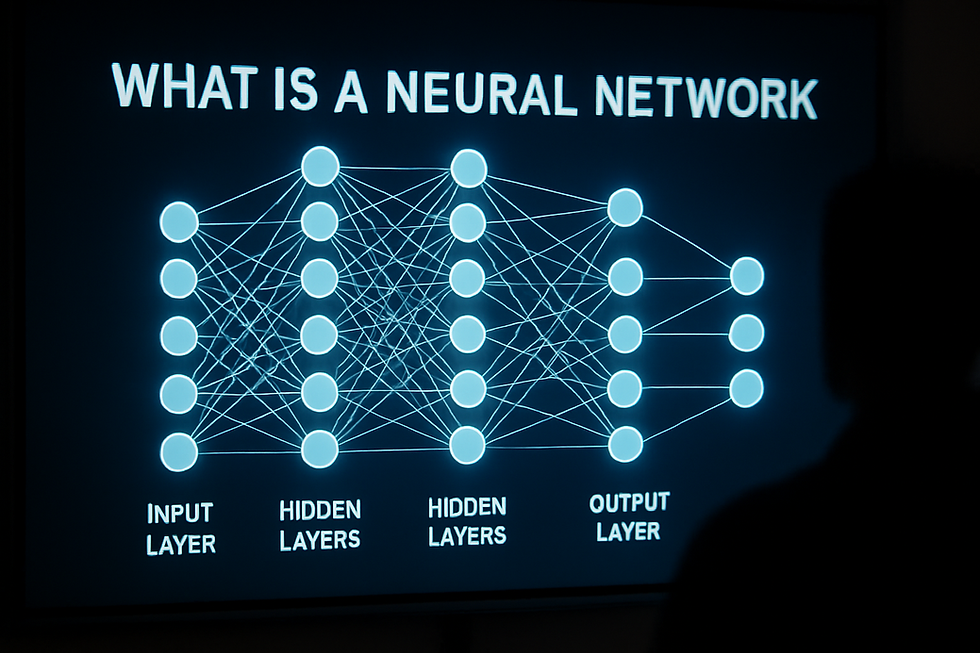

A Mamba model is a neural network architecture based on selective state space models (SSMs) that processes sequences with linear time complexity instead of the quadratic complexity of Transformers. Introduced by Albert Gu and Tri Dao in December 2023, Mamba achieves 5× faster inference while matching Transformer performance on language modeling by selectively compressing information through input-dependent parameters and hardware-aware parallel algorithms.


Background: The State Space Revolution

State space models existed long before Transformers dominated AI. Engineers used them to track spacecraft trajectories and predict electrical signals. The math is clean: given a current state and input, predict the next state. Simple, efficient, recursive.

But SSMs struggled with language. They forgot context too quickly. A model processing "The cat sat on the mat" would lose "cat" by the time it reached "mat." Transformers solved this with attention mechanisms that let every word see every other word. The cost? Quadratic complexity.

In 2020, Albert Gu and colleagues at Stanford introduced HiPPO (High-order Polynomial Projection Operators). The breakthrough was mathematical: use Legendre polynomials to compress history into fixed-size states. Performance on sequential MNIST jumped from 60% to 98% (Gu et al., 2020, NeurIPS).

The 2021 S4 paper (Structured State Space for Sequences) made SSMs practical. Gu's team showed that careful initialization and fast algorithms could match Transformers on benchmarks like Long Range Arena. S4 dominated tasks with sequences up to 16,384 tokens—ten times what Transformers handled efficiently (Gu, Goel & Ré, 2022, ICLR).

Still, S4 had a fatal flaw: it couldn't select. Every token got the same treatment. Language requires context-dependent reasoning—knowing when "bank" means riverbank versus financial institution. SSMs processed everything mechanically.

Mamba fixed this.

What Makes Mamba Different

On December 1, 2023, Gu and Tri Dao (now at Carnegie Mellon and Princeton) published "Mamba: Linear-Time Sequence Modeling with Selective State Spaces" on arXiv (arXiv:2312.00752). The paper introduced three innovations:

1. Selective State Spaces

Mamba makes its parameters (specifically the step size ∆ and the matrices B and C) functions of the input. This allows the model to selectively propagate or forget information based on content. When processing "The bank near the river flooded," Mamba adjusts its internal state differently than for "The bank approved my loan."

The selection mechanism draws from control theory. Think of it like driving: you adjust steering continuously based on road conditions, not using fixed parameters.

2. Hardware-Aware Parallel Scan

Making parameters input-dependent breaks the convolutional form. Earlier SSMs such as S4 are time-invariant, so during training they can be computed as one long, efficient convolution. A time-varying SSM like Mamba cannot.

The solution: parallel associative scan, implemented directly in CUDA with kernel fusion. The algorithm exploits GPU memory hierarchy—keeping frequently accessed data in fast SRAM while minimizing slow HBM transfers. This makes selective SSMs trainable at scale.
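
Why a recurrence can be parallelized at all is easier to see in code. The following is a minimal sketch in plain Python, assuming a scalar state and the first-order recurrence h_k = a_k·h_{k-1} + b_k. The function names are illustrative, and nothing here reflects the actual fused CUDA kernel; it only shows that composing steps is associative, which is the property a parallel scan relies on.

```python
def combine(left, right):
    """Compose two steps of h -> a*h + b; `left` is applied first."""
    a1, b1 = left
    a2, b2 = right
    return (a1 * a2, a2 * b1 + b2)


def sequential_scan(steps):
    """Reference: run the recurrence one step at a time from h = 0."""
    h, out = 0.0, []
    for a, b in steps:
        h = a * h + b
        out.append(h)
    return out


def doubling_scan(steps):
    """Inclusive scan by repeated doubling (Hillis-Steele style).

    Every update inside one pass is independent of the others, so a GPU
    could run a whole pass in parallel; about log2(L) passes are needed.
    """
    acc = list(steps)
    stride = 1
    while stride < len(acc):
        nxt = list(acc)
        for i in range(stride, len(acc)):
            nxt[i] = combine(acc[i - stride], acc[i])
        acc = nxt
        stride *= 2
    # The b-component of each prefix equals the state h_k when h starts at 0.
    return [b for _, b in acc]


steps = [(0.9, 1.0), (0.5, 2.0), (0.8, -1.0), (0.1, 0.5), (0.7, 3.0)]
seq = sequential_scan(steps)
par = doubling_scan(steps)
```

Both functions return the same states. The doubling version does more total work than the sequential loop, which is one reason practical kernels use work-efficient scan variants and careful memory placement.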

3. Simplified Architecture

Mamba blocks contain no self-attention and no MLP sublayers. The architecture is:

Linear projection (expansion)

1D convolution (local dependencies)

Selective SSM (global context)

Nonlinearity (SiLU/Swish)

Linear projection (compression)

That's it. Stack these blocks 50-100 times deep. No positional encodings. No attention masks. No key-value caches eating GPU memory.

How Mamba Works: Architecture Deep Dive

State Space Model Foundation

A continuous SSM is defined by:

h'(t) = Ah(t) + Bx(t) [state equation]

y(t) = Ch(t) [output equation]

Where:

h(t) = hidden state (dimension N)

x(t) = input (dimension 1)

y(t) = output (dimension 1)

A = state transition matrix (N×N)

B = input matrix (N×1)

C = output matrix (1×N)

Discretization converts this to:

h_k = Āh_{k-1} + B̄x_k

y_k = Ch_k

where Ā and B̄ are the discretized versions of A and B, computed using step size ∆.
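
The discretized recurrence is short enough to run directly. Below is a minimal sketch in plain Python, assuming a diagonal A with nonzero entries (so each state coordinate evolves independently) and the zero-order-hold rule Ā = exp(∆A), B̄ = (exp(∆A) − 1)/A · B. The function names are illustrative and not taken from any library.

```python
import math


def discretize(a_diag, b, delta):
    """Zero-order-hold discretization for a diagonal state matrix.

    Assumes every entry of a_diag is nonzero.
    """
    a_bar = [math.exp(delta * a) for a in a_diag]
    b_bar = [(math.exp(delta * a) - 1.0) / a * bi for a, bi in zip(a_diag, b)]
    return a_bar, b_bar


def run_ssm(a_diag, b, c, delta, xs):
    """Apply h_k = A_bar h_{k-1} + B_bar x_k and y_k = C h_k to a sequence."""
    a_bar, b_bar = discretize(a_diag, b, delta)
    h = [0.0] * len(a_diag)
    ys = []
    for x in xs:
        h = [ab * hi + bb * x for ab, hi, bb in zip(a_bar, h, b_bar)]
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))
    return ys


# Two-dimensional state with negative (stable) diagonal entries; impulse input.
ys = run_ssm(a_diag=[-1.0, -0.5], b=[1.0, 1.0], c=[1.0, 1.0],
             delta=0.1, xs=[1.0, 0.0, 0.0, 0.0])
```

With an impulse as input, the output peaks at the first step and then decays, because each Ā entry lies between 0 and 1 when the corresponding entry of A is negative.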

The Selection Mechanism

Traditional S4 used fixed A, B, C matrices. Mamba makes ∆, B, and C input-dependent:

∆_k = softplus(Parameter + Linear(x_k))

B_k = Linear(x_k)

C_k = Linear(x_k)

This transforms the SSM from time-invariant to time-varying. The model learns, token by token, whether to keep its existing state and largely ignore the input (small ∆) or to reset the state and focus on the current input (large ∆).
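
The effect of an input-dependent step size can be checked with a few lines of plain Python. This is a sketch under stated assumptions, not the reference implementation: a single scalar input channel, a diagonal A, scalar weights standing in for the linear projections, and the simplified rule B̄ = ∆·B instead of the full zero-order hold. All names (`ssm_step`, `selective_scan`, `w_delta`, and so on) are hypothetical.

```python
import math


def softplus(z):
    """Smooth positive function, used here to keep the step size above zero."""
    return math.log1p(math.exp(z))


def ssm_step(h, x, delta, a_diag, b_k):
    """One recurrence step with A_bar = exp(delta * A), B_bar = delta * B_k."""
    a_bar = [math.exp(delta * a) for a in a_diag]
    return [ab * hi + delta * bk * x for ab, hi, bk in zip(a_bar, h, b_k)]


def selective_scan(xs, a_diag, w_delta, bias_delta, w_b, w_c):
    """Sequential selective SSM: delta, B and C are recomputed from each x."""
    h = [0.0] * len(a_diag)
    ys = []
    for x in xs:
        delta = softplus(w_delta * x + bias_delta)
        b_k = [w * x for w in w_b]
        c_k = [w * x for w in w_c]
        h = ssm_step(h, x, delta, a_diag, b_k)
        ys.append(sum(ck * hk for ck, hk in zip(c_k, h)))
    return ys


# Small delta: the old state (5.0) survives and the input is barely written.
kept = ssm_step([5.0], 1.0, 0.001, [-1.0], [1.0])[0]
# Large delta: the old state is almost erased and the input dominates.
reset = ssm_step([5.0], 1.0, 10.0, [-1.0], [1.0])[0]

ys = selective_scan([0.5, -1.0, 2.0], a_diag=[-1.0, -0.5],
                    w_delta=0.3, bias_delta=0.0, w_b=[1.0, 0.5], w_c=[1.0, -1.0])
```

The two single-step calls isolate the role of ∆: with a negative entry in A, exp(∆A) is close to 1 for small ∆ and close to 0 for large ∆, so ∆ acts as a learned, per-token gate between remembering and overwriting.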

Hardware Implementation

The selective scan operates on sequences of length L with:

Time complexity: O(BLDN) for batch size B, length L, channel dimension D, and state dimension N

Recurrent state at inference: O(BDN), constant in sequence length

GPU kernel: Fused scan in CUDA avoiding intermediate materialization

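The implementation moves data between HBM and SRAM deliberately: parameters are loaded into fast SRAM, the discretization and scan run there, and only the final outputs are written back to HBM. Intermediate states are not stored; they are recomputed during the backward pass. This achieves memory efficiency comparable to FlashAttention (Dao et al., 2022, NeurIPS).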

Mamba vs Transformers: Performance Benchmarks

Language Modeling: The Pile

Gu and Dao trained Mamba models from 130M to 2.8B parameters on The Pile dataset (Gao et al., 2020). Results published December 2023:

| Model | Parameters | Perplexity | Training Speed |
| --- | --- | --- | --- |
| Mamba-2.8B | 2.8B | 8.54 | Baseline |
| Transformer++ | 2.8B | 8.69 | 0.8× |
| Mamba-1.4B | 1.4B | 9.16 | 1.2× |
| Transformer | 1.4B | 9.64 | 1.0× |

Source: Gu & Dao (2023), arXiv:2312.00752
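
For readers unfamiliar with the metric in the table, perplexity is the exponential of the average negative log-probability the model assigns to each token. A minimal sketch in plain Python, assuming natural logarithms and made-up token probabilities (the function name and inputs are illustrative):

```python
import math


def perplexity(token_log_probs):
    """exp of the average negative natural-log probability per token."""
    nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(nll)


# A model that spreads probability evenly over 8 choices at every step.
uniform = perplexity([math.log(1 / 8)] * 5)

# A model that is fairly confident about each of three tokens.
confident = perplexity([math.log(0.9), math.log(0.8), math.log(0.95)])
```

A perplexity of 8 means the model is, on average, as uncertain as if it were choosing uniformly among 8 tokens, which is why lower values indicate a better language model.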

Key findings:

Mamba-2.8B outperformed same-size Transformers

Mamba-1.4B matched Transformer performance at half the parameters

5× higher throughput during inference due to absence of key-value cache

Downstream Tasks

Zero-shot evaluation on standard benchmarks (OpenReview, 2024):

| Benchmark | Mamba-2.8B | Pythia-2.8B | GPT-Neo-2.7B |
| --- | --- | --- | --- |
| HellaSwag | 71.0% | 67.3% | 65.8% |
| PIQA | 79.5% | 77.8% | 76.2% |
| WinoGrande | 68.5% | 64.9% | 63.7% |
| ARC-Easy | 74.2% | 71.1% | 69.8% |

Source: Mamba GitHub repository (2024)

Without using any attention mechanism, Mamba consistently outperformed comparably sized open models.

Long Context Performance

The real test came with million-token sequences. On synthetic copying tasks:

Transformers: Failed beyond 8K tokens (memory overflow)

S4: Worked but couldn't solve selective copying (content-aware)

Mamba: Handled 1M tokens with >95% accuracy on selective copying

The model was tested on in-context retrieval up to 256K tokens. Performance remained stable—no degradation from context length (Gu & Dao, 2023).

Document Ranking

RankMamba (Xu et al., 2024) benchmarked Mamba on information retrieval:

On MSMARCO Dev:

Mamba-370M: 0.437 MRR@10

RoBERTa-Large: 0.443 MRR@10

Pythia-410M: 0.389 MRR@10

On TREC DL19:

Mamba-130M: 0.712 NDCG@10 (highest in size class)

Mamba-370M: 0.724 NDCG@10 (second-highest overall)

Source: Xu et al. (2024), arXiv:2403.18276
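
The MRR@10 figures above follow a simple recipe: for each query, take the reciprocal of the rank of the first relevant document in the top 10, or 0 if none appears, then average over queries. A small sketch in plain Python, with toy query and document IDs that are purely illustrative:

```python
def mrr_at_k(rankings, relevant, k=10):
    """Mean reciprocal rank of the first relevant document within the top k."""
    total = 0.0
    for query_id, ranked_docs in rankings.items():
        for rank, doc_id in enumerate(ranked_docs[:k], start=1):
            if doc_id in relevant[query_id]:
                total += 1.0 / rank
                break  # only the first relevant hit counts
    return total / len(rankings)


# Toy data: q1 hits at rank 2, q2 at rank 1, q3 has no relevant result.
rankings = {"q1": ["d3", "d1", "d7"], "q2": ["d2", "d9"], "q3": ["d5", "d6"]}
relevant = {"q1": {"d1"}, "q2": {"d2"}, "q3": {"d0"}}
score = mrr_at_k(rankings, relevant)
```

Here the score is (1/2 + 1 + 0) / 3 = 0.5. NDCG@10 differs in that it rewards every relevant document in the top 10, discounted by position, rather than only the first.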

Mamba is competitive with Transformers on these benchmarks despite lower training throughput.

Real-World Implementations

Codestral Mamba (Mistral AI)

Released July 16, 2024, Codestral Mamba is a 7.3B parameter model specialized for code generation. Built on Mamba-2 architecture, it runs under Apache 2.0 license.

Performance (Mistral AI, 2024):

HumanEval: 75.0% (vs 61.0% CodeGemma 7B, 65.9% DeepSeek 7B)

MBPP: 68.5%

Spider SQL: 58.8%

Context: Tested up to 256K tokens

The model deploys via:

mistral-inference SDK

TensorRT-LLM (NVIDIA optimized)

Mistral La Plateforme API

Raw weights on HuggingFace

Use case: Real-time code completion in IDEs with minimal latency regardless of codebase size.

Jamba (AI21 Labs)

Announced March 28, 2024, Jamba is the first production-grade hybrid Mamba-Transformer model. Architecture: 52B total parameters, 12B active at inference (AI21 Labs, 2024).

Design:

Interleaved Mamba and Transformer blocks (7:1 ratio)

Mixture of Experts (MoE) in select layers

256K token context window

Fits on single 80GB GPU

Benchmarks (AI21 Labs, March 2024):

Matches or exceeds 8B Transformer baselines

3× throughput vs Mixtral 8×7B on long contexts

Only model in size class fitting 140K context on single GPU

Enterprise adoption:

Released with open weights (Apache 2.0)

Integrated into NVIDIA API catalog as NIM microservice

Used for document analysis, legal contract review, financial report summarization

Jamba 1.5 (August 2024): Scaled to 398B total parameters (94B active) across 72 layers. Achieves state-of-the-art on NVIDIA's RULER long-context benchmark (AI21 Labs, 2024).

IBM Granite 4.0

Launched October 2, 2025, Granite 4.0 marks the first ISO 42001-certified open LLM family. Architecture: Hybrid Mamba-2/Transformer at 9:1 ratio with fine-grained MoE.

Model sizes:

Granite-4.0-H-Small: 32B total, 9B active

Granite-4.0-H-Tiny: 7B total, 1B active

Granite-4.0-H-Micro: 3B dense hybrid

Memory efficiency (IBM, 2024):

70% RAM reduction vs conventional LLMs

Runs on single H100 GPU (was 4-8 previously)

Context: Trained on 512K tokens, validated to 128K

Performance (Stanford HELM IFEval):

Granite-4.0-H-Small: 0.89 instruction-following accuracy

Beats all open models except Llama 4 Maverick (12× larger)

Deployment:

Available on watsonx.ai, HuggingFace, Docker Hub, Ollama, NVIDIA NIM

Full vLLM 0.10.2 support

AMD Instinct MI-300X compatible

Real partners:

EY: Multi-document financial analysis

Lockheed Martin: Complex engineering workflows

Customer service: Thousands of concurrent sessions

Mamba-2 and Hybrid Architectures

Mamba-2: Structured State Space Duality

Published May 31, 2024, "Transformers are SSMs" (Dao & Gu, arXiv:2405.21060) introduced Mamba-2 via Structured State Space Duality (SSD) framework.

Core insight: SSMs and attention are mathematically related through structured semiseparable matrices. This connection enabled:

Larger state dimension: 16 → 128 (8× increase)

Faster training: 2-8× speedup over Mamba-1

Better algorithms: Leverages tensor cores on modern GPUs

Technical changes:

Scalar-identity constraint on A matrix (was diagonal)

Multi-input multi-output (MIMO) formulation

Optimized for NVIDIA Tensor Cores

Results (Dao & Gu, 2024):

MMLU: 39.6% (Mamba-2-2.7B) vs 36.5% (Pythia-2.8B)

8K perplexity: 8.5 vs 9.1 (Transformers)

Memory remains linear; performance reaches Transformer-competitive levels

Hybrid Architecture Benefits

Research confirmed hybrids outperform pure models:

AI21's findings (Lieber et al., 2024):

Pure Mamba struggles with in-context learning

Adding 1 attention layer per 8 total layers restored copying, coreference, ICL capabilities

Hybrid achieves best of both: Mamba's efficiency + Transformer's precision

NVIDIA research (2024):

Mamba-2-Hybrid-8B: 2-3× faster inference than Transformer-8B

Performance matches or exceeds on reasoning benchmarks

Memory scales linearly even at 128K+ context

Optimal ratios discovered:

9:1 or 7:1 (Mamba:Transformer) for language

3:1 for vision tasks requiring global dependencies

25% Mamba in vision-language models (MaTVLM study, 2025)
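
A Mamba:Transformer ratio such as 7:1 or 9:1 describes how layers are interleaved in the stack. The sketch below builds such a schedule in plain Python. The layer counts and the choice to place the attention layer last in each group are assumptions made for illustration; the actual models may order layers differently.

```python
def layer_pattern(n_layers, mamba_per_attention):
    """Return a layer schedule with one attention layer per group."""
    group = mamba_per_attention + 1
    pattern = []
    for i in range(n_layers):
        # Assumption: the attention layer sits at the end of each group.
        is_attention = (i % group) == group - 1
        pattern.append("attention" if is_attention else "mamba")
    return pattern


jamba_like = layer_pattern(32, 7)    # 7:1 ratio, hypothetical 32-layer stack
granite_like = layer_pattern(40, 9)  # 9:1 ratio, hypothetical 40-layer stack
```

With these numbers, both stacks contain only four attention layers, so only those four layers need a key-value cache that grows with context length.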

Production Hybrid Models

| Model | Architecture | Use Case | Key Metric |
| --- | --- | --- | --- |
| Jamba | 7:1 + MoE | Enterprise NLP | 256K context, single GPU |
| Granite 4.0 | 9:1 + MoE | Agentic AI | 70% memory reduction |
| Nemotron-H (NVIDIA) | Custom ratio | High throughput | 3× inference speed |
| MambaVision | Hierarchical | Computer vision | Pareto-optimal accuracy/throughput |

Applications Across Modalities

Natural Language Processing

Language modeling:

Code generation: Codestral Mamba (7B) at 75% HumanEval

Long document understanding: Legal contracts, financial reports (256K tokens)

Conversational AI: IBM Granite 4.0 in customer service (thousands of concurrent sessions)

Retrieval-augmented generation:

Document ranking: Competitive with RoBERTa on MSMARCO

Multi-document QA: Granite 4.0 achieves highest RAG accuracy among open models

Computer Vision

Vision Mamba (Vim) - Released January 17, 2024 (Zhu et al., ICML 2024):

Bidirectional SSM for 2D images

ImageNet-1K: 81.7% top-1 (Vim-Base) vs 81.8% (DeiT-Base)

2.8× faster inference, 86% less memory than ViT-Base

VMamba - Released January 18, 2024 (Liu et al., 2024):

2D Selective Scan (SS2D) module for multi-directional scanning

ImageNet: 83.2% (VMamba-Base)

ADE20K segmentation: 48.3 mIoU

MambaVision - CVPR 2025 (Hatamizadeh et al.):

Hybrid: Convolutional early layers + Mamba middle + Transformer final

ImageNet: 85.0% top-1 (MambaVision-L)

Pareto-optimal: Best accuracy/throughput trade-off on A100

Applications:

Medical imaging: U-Mamba for biomedical segmentation (Ma et al., 2024)

Image restoration: VmambaIR for deraining, super-resolution

Multi-modal: ML-Mamba (Mamba-2 + vision) for VQA tasks

Audio and Speech

SaShiMi (Goel et al., 2022):

Audio waveform generation with S4

Outperforms WaveNet on various settings

4× faster autoregressive generation

Speech Commands (Warden, 2018):

S4 achieves state-of-the-art on keyword spotting

Handles raw audio without spectrograms

Genomics and Biological Sequences

DNA modeling:

S4 on nucleotide sequences: 92% accuracy (Nguyen et al., 2022)

Handles sequences >100K base pairs

Used for gene expression prediction, variant classification

Protein sequences:

Mamba applied to protein folding (ongoing research)

Benefits from ability to model million-token sequences

Time Series Forecasting

Multivariate forecasting:

SiMBA architecture for time series (presented NeurIPS 2024)

Weather prediction: ERA5 dataset

Stock market: Handles multiple correlated sequences

Multimodal Learning

Cobra (2024):

Extends Mamba to vision-language

Competitive VQA performance, faster inference than Transformer VLMs

ML-Mamba (2024):

Mamba-2-2.7B + DINOv2 + SigLIP encoders

Linear complexity for image-text tasks

Pros and Cons

Advantages

Efficiency:

Linear complexity: O(L) vs O(L²) for Transformers

Constant memory: No key-value cache growth

5× throughput: Confirmed across implementations

Hardware-efficient: Optimized CUDA kernels, tensor core support

Scalability:

Handles million-token sequences (tested up to 1M)

Context window validated to 256K-512K in production

Multiple concurrent instances on single GPU

Performance:

Matches or exceeds Transformers on language modeling

State-of-the-art on long-range dependencies

Competitive on standard benchmarks (MMLU, HellaSwag, etc.)

Practical deployment:

70% memory reduction (IBM Granite 4.0)

Runs on consumer GPUs (Mamba-2.7B on RTX 4090)

Lower operational costs for enterprises

Limitations

In-context learning:

Weaker few-shot performance than Transformers

MMLU 5-shot: Mamba-2.8B ~36%, Llama-2-7B ~45%

Struggles with format-following without explicit training

Copying tasks:

Pure Mamba fails at exact copying from context

Requires hybrid architecture with attention for coreference resolution

Ecosystem maturity:

Limited tooling vs Transformers (improving rapidly)

Fewer pre-trained checkpoints available

Less deployment documentation

Training dynamics:

Sensitive to numerical precision (requires FP32 params, FP16 compute)

Requires careful initialization

Longer to converge on some tasks

Specific weaknesses (empirical):

Question answering requiring specific citation

Tasks needing pixel-perfect image detail retention

Problems where every token matters equally

Myths vs Facts

| Myth | Fact | Source |
| --- | --- | --- |
| Mamba completely replaces Transformers | Hybrid models combining both perform best. Pure Mamba excels at efficiency; Transformers add precision. | Dao & Gu (2024), AI21 Jamba paper |
| Mamba can't handle language tasks | Mamba-2.8B matches Transformer-5.4B on The Pile. Production deployments in code generation, legal analysis. | Gu & Dao (2023), Mistral AI (2024) |
| SSMs are just old RNNs renamed | SSMs use structured parameterizations (HiPPO), hardware-aware algorithms, and mathematical guarantees absent in RNNs. | Gu et al. (2020-2024) |
| Mamba is only for research | IBM Granite 4.0 (ISO 42001 certified), Mistral Codestral, AI21 Jamba are production systems serving real enterprise workloads. | IBM (2024), Mistral (2024), AI21 (2024) |
| Linear complexity means worse quality | Quality matches Transformers at same scale. The efficiency comes from smarter architecture, not cutting corners. | Benchmarks across all papers |
| You need attention for every task | Vision Mamba (Vim) achieves 81.7% ImageNet with bidirectional SSMs, no attention. Hybrid adds value but isn't always required. | Zhu et al. (2024) |

Future Outlook

Near-term developments (2025-2026)

Hardware optimization:

Custom ASICs for selective scan operations

AMD MI300X optimized kernels (already in progress)

Mobile/edge deployment with quantized Mamba models

Model releases:

Granite 4.0 Nano (300M parameters) for IoT devices

Larger Mamba-2 checkpoints (10B+, 70B+ parameter range)

Domain-specific models: medical, legal, code

Ecosystem maturation:

Full llama.cpp support (partial as of December 2024)

Integration with LangChain, LlamaIndex frameworks

Improved fine-tuning recipes and best practices

Research directions

Architectural improvements:

Better in-context learning without losing efficiency

Hybrid ratios optimized per task

Mixture of depths (selective activation of layers)

New modalities:

Video understanding (spatial + temporal SSMs)

3D medical imaging (volumetric SSMs)

Multimodal foundation models

Theoretical understanding:

Why hybrids outperform pure models

Connection between selectivity and attention mechanisms

Optimal state dimensions for different tasks

Industry adoption trajectory

Based on current trends:

2024: Proof of concept deployments (Codestral, Jamba, Granite)

2025: Mainstream adoption in cost-sensitive applications:

Edge AI and mobile devices

High-throughput serving (chatbots, customer service)

Long-context enterprise tasks

2026+: Potential dominance in specific verticals:

Medical AI (radiology, genomics)

Legal tech (contract analysis, compliance)

Code generation and software development

Market indicators:

IBM's $640M investment in Granite infrastructure

NVIDIA's Nemotron-H series development

Growing academic citations (4,723 for original Mamba paper as of November 2024)

Challenges ahead

Technical:

Scaling to 100B+ parameters efficiently

Maintaining quality at extreme compression ratios

Understanding failure modes better

Practical:

Training large SSM/hybrid models from scratch is expensive

Converting existing Transformer checkpoints to hybrids

Developer education and documentation

Competitive:

Transformers continue improving (FlashAttention-3, Grouped Query Attention)

New architectures emerging (RWKV, RetNet, etc.)

Established ecosystem inertia

FAQ

Q1: What is a Mamba model in simple terms?

A Mamba model is a type of AI that processes information sequentially (one piece at a time) instead of looking at everything simultaneously like ChatGPT does. This makes it faster and less memory-hungry, especially for long documents. Think of it as reading a book page by page while keeping a compact running summary, versus trying to see all pages at once.

Q2: How is Mamba different from GPT models?

GPT models use Transformers with attention mechanisms that compare every word to every other word (quadratic complexity). Mamba uses selective state space models that process linearly. For a 10,000-word document, GPT does 100 million comparisons; Mamba does 10,000. The result: 5× faster inference and 70% less memory usage in practice.
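
The arithmetic behind that comparison can be reproduced in a few lines of plain Python. This sketch only counts operations per layer (pairwise token comparisons for attention, one state update per token for an SSM) and ignores the cost of each individual operation, so it illustrates scaling rather than wall-clock speed. The function names are illustrative.

```python
def attention_pair_count(n_tokens):
    """Every token is compared with every token: quadratic growth."""
    return n_tokens * n_tokens


def ssm_update_count(n_tokens):
    """One state update per token: linear growth."""
    return n_tokens


for n in (1_000, 10_000, 100_000):
    pairs = attention_pair_count(n)
    updates = ssm_update_count(n)
    print(f"{n:>7,} tokens: {pairs:>14,} pairs vs {updates:>7,} updates "
          f"(ratio {pairs // updates:,})")
```

The ratio between the two counts equals the sequence length itself, which is why the gap matters most for very long inputs.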

Q3: Can Mamba replace Transformers entirely?

Not completely. Research shows that hybrid models combining Mamba layers (about 90%) with Transformer layers (about 10%) perform best. Pure Mamba struggles with tasks requiring exact copying or few-shot learning. But for code generation, long documents, and efficiency-critical applications, Mamba excels. IBM Granite 4.0 and AI21 Jamba use hybrids in production, while Mistral's Codestral Mamba is built on the pure Mamba-2 architecture.

Q4: What are the real-world applications of Mamba models?

Code generation: Mistral's Codestral Mamba (75% HumanEval accuracy)

Enterprise AI: IBM Granite 4.0 serving customer service, document analysis

Medical imaging: U-Mamba for biomedical segmentation

Computer vision: Vim achieving 81.7% ImageNet accuracy

Genomics: Processing 100K+ base pair sequences

Legal/financial: 256K token contract analysis (AI21 Jamba)

Q5: Is Mamba open source?

Yes. The original Mamba implementation is open source (Apache 2.0) on GitHub. Production models using Mamba:

Codestral Mamba: Apache 2.0

IBM Granite 4.0: Apache 2.0, ISO 42001 certified

AI21 Jamba: Apache 2.0 (open weights)

All include full model weights and training code.

Q6: What hardware do you need to run Mamba models?

Small models (1-3B): RTX 3090, RTX 4090, A6000 (consumer/workstation GPUs)

Medium models (7B): A100 40GB, single GPU

Large models (32B MoE): A100 80GB or H100, single GPU

Mamba's efficiency means you can run larger models on cheaper hardware than Transformers require. IBM Granite 4.0-H-Small (32B total, 9B active) fits on one A100 80GB where a comparable Transformer needs 4-8 GPUs.

Q7: How do you train a Mamba model?

Training follows standard LLM procedures with specific considerations:

Use PyTorch AMP (keep params in FP32, compute in FP16/BF16)

Install mamba-ssm and causal-conv1d packages

Initialize with HiPPO or learned initialization

Use AdamW optimizer with learning rate ~3e-4

Train on 100B-1T tokens depending on size

Framework support: Native in PyTorch, HuggingFace Transformers 4.39+, NVIDIA NeMo. Training recipes available in Mamba GitHub and IBM Granite documentation.

Q8: What is Mamba-2 and how does it differ from Mamba-1?

Mamba-2 (May 2024) introduced Structured State Space Duality (SSD), connecting SSMs and attention mathematically. Key improvements:

2-8× faster training

State dimension 16 → 128 (8× larger, more expressive)

Better GPU utilization (tensor core optimized)

Simplified implementation

Performance: Mamba-2-2.7B achieves 39.6% MMLU vs 36.5% for Pythia-2.8B. Used in production: IBM Granite 4.0, Mistral Codestral.

Q9: Can Mamba handle images and video?

Yes. Vision adaptations:

Vision Mamba (Vim): 81.7% ImageNet, 2.8× faster than ViT

VMamba: 83.2% ImageNet with 2D selective scanning

MambaVision: 85.0% ImageNet (CVPR 2025), hybrid architecture

Video: VMRNN combining Mamba with LSTM for spatiotemporal forecasting

Applications: Medical imaging (U-Mamba), image restoration (VmambaIR), object detection (COCO), segmentation (ADE20K).

Q10: What are the limitations of Mamba models?

Technical limitations:

Weaker in-context learning than Transformers (MMLU 5-shot gap of roughly 10 percentage points)

Struggles with exact copying from context

Requires hybrid for coreference resolution, format-following

Ecosystem less mature (improving rapidly)

Practical constraints:

Numerical sensitivity (needs FP32 parameters during training)

Fewer pre-trained checkpoints vs Transformers

Less documentation and deployment guides

Hybrid models still experimental in some domains

Research shows hybrids solve most limitations while keeping efficiency benefits.

Q11: How much does it cost to deploy Mamba models?

Deployment costs are 30-70% lower than Transformers:

GPU costs:

Mamba-7B: $1-2/hour (A100 40GB)

Transformer-7B: $2-3/hour (requires A100 80GB for long context)

Memory efficiency:

128K context, 100 concurrent sessions:

Mamba: 1× H100

Transformer: 3-4× H100

IBM reports 70% memory reduction for Granite 4.0 vs conventional LLMs. This directly translates to GPU fleet size and operational expenses. For high-volume applications (millions of requests/day), savings reach hundreds of thousands annually.
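
Much of that memory gap comes from the Transformer key-value cache, which grows with every token of context, whereas a Mamba layer keeps a fixed-size state. The sketch below estimates cache size in plain Python for a hypothetical 7B-class Transformer (32 layers, 32 KV heads of dimension 128, FP16 values). These configuration numbers are assumptions for illustration, not the specification of any model named in this article.

```python
def kv_cache_bytes(n_tokens, n_layers, n_kv_heads, head_dim, bytes_per_value=2):
    """Keys and values for every layer, head and token (hence the factor 2)."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * n_tokens


GIB = 1024 ** 3

# Hypothetical 7B-class Transformer at a 128K-token context, one session.
per_session = kv_cache_bytes(128 * 1024, n_layers=32, n_kv_heads=32, head_dim=128)
per_session_gib = per_session / GIB

# Doubling the context doubles the cache; a recurrent state would not change.
doubled_gib = kv_cache_bytes(256 * 1024, 32, 32, 128) / GIB
```

Under these assumptions a single 128K-token session needs 64 GiB of cache. Techniques such as grouped-query attention shrink this by reducing the number of KV heads, but the linear growth with context length remains.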

Q12: Is Mamba better than Transformers for all tasks?

No. Task-specific performance:

Mamba excels:

Long documents (100K+ tokens)

Code generation

Continuous signals (audio, video, genomics)

High-throughput serving

Edge deployment

Transformers excel:

Few-shot learning

Exact copying/citation

Tasks requiring every-token-matters precision

Rich ecosystem of pre-trained models

Optimal: Hybrid models (Jamba, Granite 4.0) combining both architectures.

Q13: What companies are using Mamba in production?

Confirmed production deployments:

Mistral AI: Codestral Mamba (Apache 2.0, public API)

IBM: Granite 4.0 on watsonx.ai, serving EY, Lockheed Martin

AI21 Labs: Jamba on Google Cloud, Azure, NVIDIA NIM

NVIDIA: Nemotron-H series for high-throughput inference

Evaluation/testing:

Dell Technologies (Pro AI Studio)

HuggingFace (Transformers library)

Replicate, Ollama, LM Studio (hosting)

Q14: How to implement Mamba from scratch?

Basic implementation (simplified):

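```python
import torch
from mamba_ssm import Mamba

# The fused kernels in mamba_ssm require a CUDA GPU.
device = "cuda"

# Initialize a single Mamba block
model = Mamba(
    d_model=768,  # Model dimension
    d_state=16,   # SSM state expansion factor
    d_conv=4,     # Local convolution width
    expand=2,     # Block expansion factor
).to(device)

# Forward pass on a (batch, length, dim) tensor
x = torch.randn(2, 1024, 768, device=device)
y = model(x)  # Output has the same shape as the input
assert y.shape == x.shape
```

Full implementation requires: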

Selective SSM layer with input-dependent parameters

Hardware-aware scan algorithm (CUDA kernel)

Causal convolution for local dependencies

Layer normalization and residual connections

Reference: Mamba GitHub (state-spaces/mamba), "Annotated S4" tutorial.

Q15: What is the future of Mamba models?

Near-term (2025-2026):

Mainstream adoption in enterprise AI

Larger models (10B-100B parameters)

Better hybrids optimized per domain

Mobile/edge deployment

Long-term impact:

Could become default for efficiency-critical applications

Hybrid architectures may dominate over pure Transformers

New modalities (3D vision, long video understanding)

Market indicators: IBM's investment, NVIDIA's development, growing academic citations (4,723 for original paper). The architecture is here to stay.

Q16: Can I fine-tune Mamba models?

Yes. Fine-tuning supported via:

HuggingFace: Standard Trainer API

NVIDIA NeMo: Built-in recipes for Mamba-2, hybrids

Mamba-ssm: Native PyTorch fine-tuning

Methods:

Full fine-tuning (all parameters)

LoRA (Low-Rank Adaptation)

Quantization (8-bit, 4-bit)

Example use cases: Domain adaptation (medical, legal), instruction tuning, chat alignment. IBM Granite 4.0 and Mistral Codestral provide fine-tuning guides.

Q17: What is the Mamba state dimension and why does it matter?

The state dimension (d_state) determines how much information the SSM compresses:

Mamba-1: d_state=16 (compact, efficient)

Mamba-2: d_state=64-128 (more expressive, closer to Transformer)

Trade-offs:

Larger state = better performance, more computation

Smaller state = faster inference, less memory

Empirical: d_state=128 matches Transformer quality while maintaining linear complexity. Hybrid models often use d_state=64 as optimal balance.
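
The memory side of this trade-off is easy to quantify. The sketch below counts the numbers held in one layer's recurrent SSM state for one sequence, assuming the state has shape (d_inner, d_state) with d_inner = expand × d_model. The function name is illustrative, and the count ignores the small convolution buffer each block also keeps.

```python
def ssm_state_elements(d_model, expand, d_state):
    """Numbers held in one layer's recurrent SSM state for one sequence."""
    d_inner = expand * d_model
    return d_inner * d_state


small = ssm_state_elements(d_model=768, expand=2, d_state=16)
large = ssm_state_elements(d_model=768, expand=2, d_state=128)
growth = large // small
```

Going from d_state=16 to d_state=128 makes the state 8 times larger, but in both cases the size is independent of how many tokens have been processed.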

Q18: How does Mamba compare to other Transformer alternatives?

Architecture comparison:

| Model | Complexity | Memory | Status |
| --- | --- | --- | --- |
| Transformer | O(L²) | O(L²) | Production (dominant) |
| Mamba | O(L) | O(1) | Production (growing) |
| RWKV | O(L) | O(1) | Research/small-scale |
| RetNet | O(L) | O(L) | Research |
| Linear Attention | O(L) | O(L) | Mixed results |

Mamba advantages: Proven performance, hardware optimization, production deployments. Others remain largely experimental or niche.

Q19: What are selective state spaces?

Selective SSMs make parameters input-dependent:

Traditional SSM: A, B, C fixed (time-invariant)

Selective SSM: ∆, B, C = f(input) (time-varying)

This allows content-aware processing:

Important tokens: Large ∆ (the state resets and focuses on the current input)

Filler tokens: Small ∆ (the existing state persists and the input is largely ignored)

The selection mechanism is what enables Mamba to match Transformer performance while maintaining linear complexity. It's the key innovation that prior SSMs lacked.

Q20: Where can I learn more about Mamba?

Primary resources:

Original paper: arXiv:2312.00752 (Gu & Dao, 2023)

GitHub: github.com/state-spaces/mamba

Annotated S4: srush.github.io/annotated-s4/

Mamba-2 paper: arXiv:2405.21060 (Dao & Gu, 2024)

Implementations:

HuggingFace: Transformers library, model cards

IBM Granite Docs: granite documentation

Mistral: mistral.ai/news/codestral-mamba

Tutorials:

"A Visual Guide to Mamba" (Maarten Grootendorst)

"Mamba Explained" (The Gradient)

NVIDIA Technical Blog on Codestral Mamba

Key Takeaways

Mamba models use selective state space models (SSMs) to achieve linear time complexity vs quadratic for Transformers, resulting in 5× faster inference and 70% memory reduction in production

The key innovation is making SSM parameters input-dependent through selection mechanisms, enabling content-aware processing while maintaining efficiency

Real production systems include Mistral's Codestral Mamba (75% HumanEval), AI21's Jamba (256K context), and IBM's Granite 4.0 (ISO 42001 certified)

Mamba-2 introduced in May 2024 improves training speed 2-8× and increases state dimension from 16 to 128 through Structured State Space Duality (SSD)

Hybrid Mamba-Transformer models (9:1 ratio) outperform pure architectures, combining Mamba's efficiency with Transformer's precision for in-context learning

Applications span language (code generation, long documents), vision (ImageNet 81-85%), genomics (100K+ sequences), and multimodal tasks

Limitations include weaker few-shot learning (a gap of roughly 10 percentage points on MMLU 5-shot) and copying tasks, solved by adding attention layers in hybrid designs

The architecture is production-ready with support in PyTorch, HuggingFace, NVIDIA NeMo, vLLM, and major cloud platforms

Early enterprise adoption shows 30-70% cost reduction for high-volume, long-context workloads (customer service, document analysis, code completion)

Future trajectory points to mainstream adoption in efficiency-critical applications, with hybrid architectures potentially dominating over pure Transformers by 2026

Actionable Next Steps

Experiment locally: Install mamba-ssm via pip install mamba-ssm causal-conv1d and run pretrained models from HuggingFace (start with Mamba-2.7B)

Try production APIs: Test Codestral Mamba on Mistral La Plateforme or Jamba via AI21's API for free tier evaluation

Benchmark your use case: Download Granite 4.0-H-Micro (3B) and compare inference speed/memory vs equivalent Transformer on your data

Read the foundational papers: Start with "Mamba: Linear-Time Sequence Modeling" (arXiv:2312.00752), then "Transformers are SSMs" (arXiv:2405.21060)

Join the community: Follow state-spaces/mamba on GitHub, engage in discussions, report issues, contribute benchmarks

Evaluate for production: If running LLMs with long context (>8K tokens) or high throughput requirements, pilot Granite 4.0 or Jamba against current Transformer baselines

Fine-tune for your domain: Use HuggingFace Trainer or NVIDIA NeMo recipes to adapt Mamba models to specialized tasks (medical, legal, code)

Monitor ecosystem developments: Track IBM Granite, Mistral, NVIDIA releases for new model sizes, hybrid ratios, and optimization techniques

Glossary

Attention Mechanism: Transformer component that computes relationships between all tokens simultaneously, resulting in quadratic complexity.

Context Window: Maximum sequence length a model can process; Mamba models validated up to 256K-512K tokens.

HiPPO (High-order Polynomial Projection Operators): Mathematical framework using orthogonal polynomials to compress sequence history efficiently.

Hybrid Model: Architecture combining multiple model types, typically Mamba SSM layers with Transformer attention layers.

Key-Value (KV) Cache: Memory Transformers use to store key and value vectors for past tokens during generation; it grows linearly with sequence length. Mamba has no KV cache and keeps a fixed-size recurrent state instead.

Linear Complexity: Algorithm where computation scales proportionally with input size (O(L) for length L).

Mamba-2: Refined Mamba architecture built on Structured State Space Duality (SSD); its core layer runs 2-8× faster than Mamba's selective scan and supports larger state dimensions.

Mixture of Experts (MoE): Technique where only a subset of model parameters activates per input, reducing computational cost.

Perplexity: Language model metric measuring prediction uncertainty, computed as the exponential of the average per-token cross-entropy; lower is better (roughly 5-15 for modern LLMs, depending on dataset and tokenizer).

Quadratic Complexity: Algorithm where computation scales with input size squared (O(L²)); Transformer attention has this property.

S4 (Structured State Space for Sequences): 2021 architecture by Gu et al. that made SSMs practical for deep learning via efficient parameterization.

Selective SSM: State space model where parameters (∆, B, C) are functions of input, enabling content-aware processing.

State Space Model (SSM): Mathematical framework from control theory modeling system dynamics through state equations.

Structured State Space Duality (SSD): Framework showing mathematical equivalence between SSMs and structured attention mechanisms.

Tensor Cores: Specialized GPU hardware for fast matrix operations; Mamba-2 optimized for these.

Throughput: Tokens generated per second during inference; Mamba achieves about 5× the throughput of comparable Transformers.
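
To make the Selective SSM and State Space Model entries concrete, here is a minimal pure-Python sketch of a selective recurrence for a single scalar channel. It is a toy under stated assumptions, not the mamba-ssm implementation. The weights w_delta, b_delta, w_b, and w_c are hypothetical stand-ins for learned projections, A is diagonal, B is discretized with a simplified Δ·B rule, and the loop runs sequentially rather than as a hardware-aware parallel scan.

```python
import math


def softplus(z: float) -> float:
    # Numerically stable log(1 + exp(z)); keeps the step size positive.
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(xs, a_diag, w_delta, b_delta, w_b, w_c):
    """Run a single-channel selective SSM over the scalar sequence xs.

    a_diag:           negative diagonal entries of A (length N)
    w_delta, b_delta: hypothetical scalars producing the step size delta
    w_b, w_c:         hypothetical weight vectors (length N) producing B_t, C_t
    """
    n = len(a_diag)
    h = [0.0] * n  # fixed-size state, independent of len(xs)
    ys = []
    for x in xs:
        # Selectivity: delta, B, and C are all functions of the current input.
        delta = softplus(w_delta * x + b_delta)
        b_t = [w * x for w in w_b]
        c_t = [w * x for w in w_c]
        for i in range(n):
            a_bar = math.exp(delta * a_diag[i])  # discretized diagonal A
            b_bar = delta * b_t[i]               # simplified discretization of B
            h[i] = a_bar * h[i] + b_bar * x      # state update
        ys.append(sum(c * s for c, s in zip(c_t, h)))  # readout y_t = C_t . h_t
    return ys


# Illustrative call with made-up weights and a two-dimensional state.
outputs = selective_scan(
    xs=[1.0, 0.5, -0.3],
    a_diag=[-1.0, -2.0],
    w_delta=1.0, b_delta=0.0,
    w_b=[0.5, 0.5],
    w_c=[1.0, 1.0],
)
print(outputs)
```

The state h always has N entries regardless of sequence length, and each token costs O(N) work, so a full pass over L tokens is O(L). A large delta makes a_bar small, so the state forgets its history and focuses on the current input; a small delta preserves the state and largely ignores the input.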

Sources & References

Primary Research Papers:

Gu, A., & Dao, T. (2023). Mamba: Linear-Time Sequence Modeling with Selective State Spaces. arXiv preprint arXiv:2312.00752. Retrieved from https://arxiv.org/abs/2312.00752

Dao, T., & Gu, A. (2024). Transformers are SSMs: Generalized Models and Efficient Algorithms Through Structured State Space Duality. International Conference on Machine Learning (ICML). Retrieved from https://arxiv.org/abs/2405.21060

Gu, A., Goel, K., & Ré, C. (2022). Efficiently Modeling Long Sequences with Structured State Spaces. International Conference on Learning Representations (ICLR). Retrieved from https://arxiv.org/abs/2111.00396

Gu, A., Dao, T., Ermon, S., Rudra, A., & Ré, C. (2020). HiPPO: Recurrent Memory with Optimal Polynomial Projections. Advances in Neural Information Processing Systems 33 (NeurIPS). Retrieved from https://arxiv.org/abs/2008.07669

Gu, A., Johnson, I., Goel, K., Saab, K., Dao, T., Rudra, A., & Ré, C. (2021). Combining Recurrent, Convolutional, and Continuous-time Models with Linear State-Space Layers. Advances in Neural Information Processing Systems 34 (NeurIPS). Retrieved from https://arxiv.org/abs/2110.13985

Production Implementations:

Mistral AI (2024, July 16). Codestral Mamba. Retrieved from https://mistral.ai/news/codestral-mamba

AI21 Labs (2024, March 28). Introducing Jamba: AI21's Groundbreaking SSM-Transformer Model. Retrieved from https://www.ai21.com/blog/announcing-jamba

Lieber, O., Lenz, B., et al. (2024). Jamba: A Hybrid Transformer-Mamba Language Model. arXiv preprint arXiv:2403.19887. Retrieved from https://arxiv.org/abs/2403.19887

IBM (2025, October 2). IBM Granite 4.0: Hyper-efficient, High Performance Hybrid Models for Enterprise. Retrieved from https://www.ibm.com/new/announcements/ibm-granite-4-0-hyper-efficient-high-performance-hybrid-models

IBM Think (2024, November). What Is A Mamba Model? Retrieved from https://www.ibm.com/think/topics/mamba-model

Vision Applications:

Zhu, L., Liao, B., Zhang, Q., Wang, X., Liu, W., & Wang, X. (2024). Vision Mamba: Efficient Visual Representation Learning with Bidirectional State Space Model. International Conference on Machine Learning (ICML). Retrieved from https://arxiv.org/abs/2401.09417

Liu, Y., Tian, Y., Zhao, Y., Yu, H., Xie, L., Wang, Y., Ye, Q., & Liu, Y. (2024). VMamba: Visual State Space Model. arXiv preprint arXiv:2401.10166. Retrieved from https://arxiv.org/abs/2401.10166

Hatamizadeh, A., et al. (2025). MambaVision: A Hybrid Mamba-Transformer Vision Backbone. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Retrieved from https://openaccess.thecvf.com/content/CVPR2025/papers/Hatamizadeh_MambaVision_A_Hybrid_Mamba-Transformer_Vision_Backbone_CVPR_2025_paper.pdf

Benchmarking Studies:

Xu, Z., Wang, A., Wu, F., Su, H., & Wen, J.-R. (2024). RankMamba: Benchmarking Mamba's Document Ranking Performance in the Era of Transformers. arXiv preprint arXiv:2403.18276. Retrieved from https://arxiv.org/abs/2403.18276

Scientific Reports (2025, April 30). A hybrid model based on transformer and Mamba for enhanced sequence modeling. Retrieved from https://www.nature.com/articles/s41598-025-87574-8

Technical Resources:

Grootendorst, M. (2024, February 19). A Visual Guide to Mamba and State Space Models. Retrieved from https://newsletter.maartengrootendorst.com/p/a-visual-guide-to-mamba-and-state

The Gradient (2024, March 30). Mamba Explained. Retrieved from https://thegradient.pub/mamba-explained/

Semantic Scholar (2023). Mamba: Linear-Time Sequence Modeling with Selective State Spaces [Citations: 4,723]. Retrieved from https://www.semanticscholar.org/paper/Mamba:-Linear-Time-Sequence-Modeling-with-Selective-Gu-Dao/7bbc7595196a0606a07506c4fb1473e5e87f6082

GitHub - state-spaces/mamba (2024). Mamba SSM architecture. Retrieved from https://github.com/state-spaces/mamba

HuggingFace (2024). Paper page - Mamba: Linear-Time Sequence Modeling with Selective State Spaces. Retrieved from https://huggingface.co/papers/2312.00752

Industry Coverage:

VentureBeat (2024, July 16). Mistral releases Codestral Mamba for faster, longer code generation. Retrieved from https://venturebeat.com/ai/mistral-releases-codestral-mamba-for-faster-longer-code-generation

InfoWorld (2024, July 17). Mistral's new Codestral Mamba to aid longer code generation. Retrieved from https://www.infoworld.com/article/2518599/mistrals-new-codestral-mamba-to-aid-longer-code-generation.html

NVIDIA Technical Blog (2024, August 8). Revolutionizing Code Completion with Codestral Mamba, the Next-Gen Coding LLM. Retrieved from https://developer.nvidia.com/blog/revolutionizing-code-completion-with-codestral-mamba-the-next-gen-coding-llm/

SiliconANGLE (2024, March 28). AI21 Labs' Jamba infuses Mamba to bring more context to transformer-based LLMs. Retrieved from https://siliconangle.com/2024/03/28/ai21-labs-jamba-infuses-mamba-bring-context-transformer-based-llms/

VentureBeat (2025, October 2). 'Western Qwen': IBM wows with Granite 4 LLM launch and hybrid Mamba/Transformer architecture. Retrieved from https://venturebeat.com/ai/western-qwen-ibm-wows-with-granite-4-llm-launch-and-hybrid-mamba-transformer

InfoWorld (2025, October 3). IBM launches Granite 4.0 to cut AI infra costs with hybrid Mamba-transformer models. Retrieved from https://www.infoworld.com/article/4067691/ibm-launches-granite-4-0-to-cut-ai-infra-costs-with-hybrid-mamba-transformer-models.html

Educational Resources:

HuggingFace (2024). Introduction to State Space Models (SSM). Retrieved from https://huggingface.co/blog/lbourdois/get-on-the-ssm-train

Rush, S. (n.d.). The Annotated S4. Retrieved from https://srush.github.io/annotated-s4/

Princeton Language and Intelligence (2024). Mamba-2: Algorithms and Systems. Retrieved from https://pli.princeton.edu/blog/2024/mamba-2-algorithms-and-systems

Towards Data Science (2025, January 13). Vision Mamba: Like a Vision Transformer but Better. Retrieved from https://towardsdatascience.com/vision-mamba-like-a-vision-transformer-but-better-3b2660c35848/
