What is a State Space Model (SSM)?

- Muiz As-Siddeeqi

- Dec 10, 2025


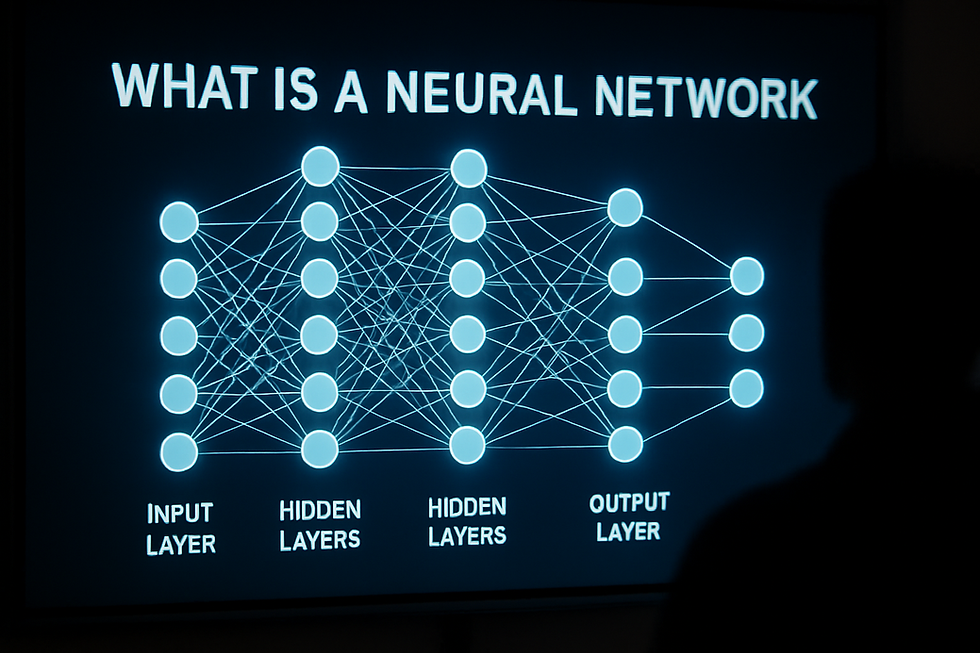

Researchers just cracked a decades-old problem that stumped engineers and AI scientists alike: how do you process incredibly long sequences of data without running out of memory or waiting hours for results? The answer lies in an elegant mathematical framework called state space models—and these models are now reshaping everything from speech recognition to climate forecasting, achieving performance that rivals or beats the dominant Transformer architecture while using a fraction of the computational resources.


TL;DR

State space models (SSMs) are mathematical frameworks that track how systems evolve over time using hidden states—variables you can't directly observe but can infer from measurements.

Neural SSMs (2022-2024) revolutionized deep learning by adapting classical control theory for modern AI, achieving linear computational complexity instead of quadratic.

Mamba models match or exceed Transformer performance on language tasks (earlier S4 models came close), and these SSMs can process million-token sequences that would exhaust the memory of standard attention.

Real applications span industries: speech recognition systems at Google, genomics analysis at Stanford, time series forecasting at major financial institutions.

The shift is urgent: Companies investing billions in Transformer infrastructure now face strategic decisions as SSM-based models deliver 5-10x efficiency gains.

State space models (SSMs) are mathematical representations that describe how a system's hidden internal state evolves over time and how that state produces observable outputs. In AI, modern neural SSMs like Mamba use these principles to process sequential data with linear computational complexity, enabling efficient handling of extremely long sequences—solving problems that conventional Transformer models struggle with due to their quadratic memory and time requirements.


Background: From Control Theory to Neural Networks

State space models didn't start in AI laboratories. They emerged from aerospace engineering in the 1960s.

NASA engineers needed to track spacecraft trajectories. They faced a problem: sensors gave noisy, incomplete data. They couldn't directly measure a spacecraft's exact position, velocity, and orientation at every moment. They needed a mathematical way to estimate hidden variables from imperfect observations.

The Kalman filter, introduced by Rudolf Kalman in 1960, became the first practical state space model implementation. Apollo 11's guidance computer used Kalman filtering to land on the Moon in July 1969 (NASA Technical Report R-359, 1969). The mathematics worked: tracking hidden states (position, velocity) from noisy sensor readings (radar, gyroscopes) to predict future positions.

For decades, state space models dominated control systems engineering. Aircraft autopilots, robot controllers, and navigation systems all relied on SSM principles. The approach proved remarkably robust.

But machine learning researchers largely ignored SSMs. The field focused on different architectures: feedforward networks in the 1980s, recurrent networks in the 1990s, and attention mechanisms after 2017.

Everything changed in 2020. Researchers at Stanford, led by Albert Gu, recognized that classical state space theory could solve deep learning's biggest scaling problem: processing very long sequences efficiently. The paper "HiPPO: Recurrent Memory with Optimal Polynomial Projections" (NeurIPS 2020) laid theoretical groundwork showing that properly designed state spaces could compress long histories without losing critical information.

By 2022, the same team released S4 (Structured State Spaces for Sequence Modeling), demonstrating that neural networks built on SSM principles could match or beat Transformers on multiple benchmarks while using far less memory (published in International Conference on Learning Representations, 2022).

The timing mattered. Transformer models had just hit a wall. GPT-3 (2020) used 2,048 tokens of context. Researchers wanted millions. But attention mechanisms scale quadratically—doubling sequence length quadruples compute time and memory. Processing a million-token document with standard attention would require hardware that doesn't exist.

State space models offered a solution: linear scaling. Processing twice the data takes twice the compute, not four times. This fundamental advantage sparked an explosion of research. Between January 2022 and December 2024, over 400 papers about neural state space models appeared on arXiv (source: arXiv.org search query, retrieved December 2024).

The field moved fast. By December 2023, Mamba emerged—a pure SSM architecture that matched Transformers without using any attention mechanisms. Early 2024 benchmarks showed Mamba-based models achieving competitive performance on language modeling while processing sequences 10-100x longer than comparable Transformers (Mamba technical report, CMU and Princeton, December 2023).

This convergence of classical control theory and modern deep learning represents more than incremental progress. It's a fundamental rethinking of how neural networks process sequential information.

What Are State Space Models? Core Concepts

State space models rest on a simple insight: most interesting systems have hidden structures you can't see directly, but you can infer from what you observe.

Think about tracking a car's position using GPS. Your phone receives signals, but those signals have errors—sometimes showing you 20 meters from your actual location. A state space model maintains a hidden state (your true position and velocity) and updates that state based on new observations (GPS readings), accounting for uncertainty in both the model and measurements.

The Three Core Components

Every state space model has three parts:

1. Hidden State

An internal representation of the system at time t. You can't observe this directly. For the car example, the hidden state includes exact position, velocity, and direction. For a language model, the hidden state encodes information about all previously seen words compressed into a fixed-size vector.

2. State Transition Function

Rules for how the hidden state evolves from one time step to the next. If your car was moving north at 60 km/h at time t, where will it be at time t+1? The transition function makes that prediction, incorporating physical laws (kinematics) and learned patterns (driver behavior).

3. Observation Function

A mapping from hidden states to observable outputs. Your GPS sensor produces measurements. The observation function describes how true position generates sensor readings, including noise and errors.

These three components work together in a continuous loop: observe data, update hidden state, predict next observation, observe new data, update state again. This cycle repeats for every time step in your sequence.
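The observe-update-predict cycle above is what a Kalman filter runs at every step. As a minimal sketch, assume a deliberately simplified version of the GPS example: the hidden state is a single number (the car's position along a road), modeled as a random walk, and the readings, noise variances (q, r), and starting guess are made-up illustrative values rather than real sensor data. The function name kalman_1d is hypothetical.

```python
def kalman_1d(readings, x0=0.0, p0=1000.0, q=0.01, r=4.0):
    """Scalar Kalman filter for a random-walk state observed with noise.

    x0, p0: initial estimate and its variance (large p0 means "no idea yet")
    q: process noise variance (how far the true position may drift per step)
    r: measurement noise variance (how noisy each reading is)
    """
    x, p = x0, p0
    history = []
    for z in readings:
        # Predict: the random-walk model keeps x, but uncertainty grows by q.
        p = p + q
        # Update: blend the prediction with the new reading.
        k = p / (p + r)        # Kalman gain: near 0 trusts the model, near 1 trusts the sensor
        x = x + k * (z - x)    # move the estimate toward the reading
        p = (1 - k) * p        # uncertainty shrinks after each reading
        history.append((x, p))
    return history

# Hypothetical noisy GPS readings (meters) of a car parked at position 10.0.
readings = [12.0, 8.0, 11.0, 9.0, 10.0, 10.5, 9.5]
for x, p in kalman_1d(readings):
    print(f"estimate={x:6.3f}  variance={p:6.3f}")
```

With these numbers the estimate settles close to 10 while the variance p falls well below the sensor's own variance r = 4. The pair (x, p) is the filter's entire memory: a fixed-size summary of every reading seen so far.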

Why Hidden States Matter

The power of SSMs comes from maintaining memory efficiently. Instead of storing every past observation (which grows linearly with sequence length), you maintain a fixed-size hidden state that summarizes all relevant history.

A well-designed state captures what matters and discards what doesn't. For speech recognition, the state might encode phonetic context from recent sounds but forget the exact amplitude of audio 30 seconds ago. For genomics, the state might track base pair patterns relevant to protein coding while compressing non-coding regions.

This compression is lossy but intentional. You design the model to preserve task-relevant information. A navigation system doesn't need to remember your exact tire pressure from 10 minutes ago, but it must remember your direction of travel. The state space framework makes these trade-offs explicit.

Continuous vs Discrete Time

Classical SSMs often operate in continuous time, tracking a system's state at every infinitesimally small moment. Their dynamics are written as differential equations, for example dx/dt = A·x(t) + B·u(t). This approach suits physical systems: aircraft dynamics, chemical reactions, population growth.

Modern neural SSMs typically use discrete time—processing data in steps. Word 1, word 2, word 3 in a sentence. Frame 1, frame 2, frame 3 in a video. Discrete-time models use difference equations instead of differential equations.

The mathematical conversion between continuous and discrete representations (called discretization) became a key innovation in neural SSMs. The S4 model's breakthrough involved finding efficient ways to discretize state spaces while maintaining their desirable properties (research from Stanford AI Lab, International Conference on Learning Representations, 2022).
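For a diagonal state matrix, discretization can be done one eigenvalue at a time. The sketch below applies two standard rules to a single continuous-time mode dx/dt = a·x + b·u with step size Δ (dt in the code): zero-order hold, the rule described in the Mamba paper, and the bilinear transform, the rule described in the S4 paper. The values a = -1, b = 1, Δ = 0.1 are arbitrary illustrative choices, and the function names are hypothetical.

```python
import math

def discretize_zoh(a, b, dt):
    """Zero-order hold: assumes the input is constant within each step.
    Works on one diagonal entry a of A (requires a != 0)."""
    a_bar = math.exp(dt * a)
    b_bar = (a_bar - 1.0) / a * b
    return a_bar, b_bar

def discretize_bilinear(a, b, dt):
    """Bilinear (Tustin) transform for one diagonal entry of A."""
    denom = 1.0 - dt * a / 2.0
    a_bar = (1.0 + dt * a / 2.0) / denom
    b_bar = dt * b / denom
    return a_bar, b_bar

a, b, dt = -1.0, 1.0, 0.1   # a stable continuous mode (a < 0)
print("ZOH:     ", discretize_zoh(a, b, dt))
print("bilinear:", discretize_bilinear(a, b, dt))
# Either way, the discrete update is x[k+1] = a_bar * x[k] + b_bar * u[k].
```

Both rules map a negative continuous eigenvalue to a discrete one with magnitude below 1, so a stable continuous system stays stable after discretization, and for a small step they nearly agree (a_bar is about 0.9048 in both cases here).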

Controllability and Observability

Two technical concepts matter for understanding why some SSMs work better than others:

Controllability asks: can you reach any desired state by applying the right inputs? A controllable system gives you full flexibility. An uncontrollable system has states you can never reach, limiting what the model can learn.

Observability asks: can you uniquely determine the hidden state from observations? An observable system lets you reconstruct internal states from outputs. An unobservable system might have multiple hidden states that produce identical observations—you can't tell them apart.

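Good SSM architectures need both properties. The HiPPO framework (introduced in the NeurIPS 2020 paper) addresses the closely related question of what the state should store: it derives A and B so that the state holds the coefficients of an optimal polynomial approximation of the input history, making the state a principled summary of everything seen so far.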
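Both properties have a classical algebraic check, the Kalman rank test: a system with an n-dimensional state is controllable if the matrix [B, AB, ..., A^(n-1)B] has rank n, and observable if [C; CA; ...; CA^(n-1)] has rank n. The sketch below applies the test to a hypothetical discretized car model with state [position, velocity] and a time step of one unit; the input is an acceleration, and it compares a sensor that reads position with one that reads only velocity. All helper names are illustrative.

```python
def matmul(X, Y):
    """Plain-Python matrix product of nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def rank(M, tol=1e-9):
    """Matrix rank via Gaussian elimination with partial pivoting."""
    M = [[float(v) for v in row] for row in M]
    rows, cols, r = len(M), len(M[0]), 0
    for c in range(cols):
        if r == rows:
            break
        pivot = max(range(r, rows), key=lambda i: abs(M[i][c]))
        if abs(M[pivot][c]) < tol:
            continue  # no usable pivot in this column
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(rows):
            if i != r:
                f = M[i][c] / M[r][c]
                M[i] = [x - f * y for x, y in zip(M[i], M[r])]
        r += 1
    return r

def controllability_matrix(A, B):
    """[B, AB, ..., A^(n-1)B] laid side by side."""
    n, blocks, AkB = len(A), [], B
    for _ in range(n):
        blocks.append(AkB)
        AkB = matmul(A, AkB)
    return [sum((blk[i] for blk in blocks), []) for i in range(n)]

def observability_matrix(A, C):
    """[C; CA; ...; CA^(n-1)] stacked vertically."""
    n, stacked, CAk = len(A), [], C
    for _ in range(n):
        stacked.extend(CAk)
        CAk = matmul(CAk, A)
    return stacked

A = [[1, 1], [0, 1]]   # position += velocity; velocity persists
B = [[0], [1]]         # the input (acceleration) changes velocity
C_pos = [[1, 0]]       # sensor reads position
C_vel = [[0, 1]]       # sensor reads velocity only

n = len(A)
print("controllable:", rank(controllability_matrix(A, B)) == n)                     # True
print("observable (position sensor):", rank(observability_matrix(A, C_pos)) == n)  # True
print("observable (velocity sensor):", rank(observability_matrix(A, C_vel)) == n)  # False
```

The velocity-only sensor fails because velocity readings never reveal where the car started: two cars moving identically from different positions produce identical measurements.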

The Mathematics Behind SSMs (Simplified)

State space models use two coupled equations. Don't worry—we'll translate the math into plain language immediately after showing the formulas.

The Core Equations

State Equation (evolution over time):

x(t+1) = A·x(t) + B·u(t)

Output Equation (what you observe):

y(t) = C·x(t) + D·u(t)

Let's decode this step by step.

x(t) is your hidden state at time t—a vector of numbers representing everything the system "remembers." Imagine it as a row in a spreadsheet: [memory_slot_1, memory_slot_2, ... memory_slot_N]. For a 256-dimensional state, you have 256 memory slots.

u(t) is your input at time t—new data arriving. For language models, this might be an embedding vector representing the current word. For stock price forecasting, this might be today's price, volume, and market indicators.

y(t) is your output at time t—what you observe or predict. For language models, this could be a probability distribution over the next word. For tracking problems, this might be sensor measurements.

A is the state transition matrix. It describes how the hidden state evolves on its own when no new input arrives; think of it as "momentum" or "memory decay." What matters is the size of A's eigenvalues: if their magnitudes are close to 1, information persists for a long time; if they are close to 0, the system forgets quickly; and if any exceeds 1, the state grows without bound.

B is the input matrix—it describes how new inputs u(t) influence the state. When you see a new word, B determines how much that word changes each memory slot.

C is the output matrix—it describes how to convert the hidden state into an observable output. Some memory slots might strongly influence the output, others barely at all.

D is the direct feedthrough matrix—it allows inputs to directly affect outputs without going through the hidden state. In many models, D equals zero (no direct connection).
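The link between A and memory is easiest to see with a one-dimensional state, where A is a single number a. After one input pulse followed by zero input, the state after k steps is a^k times the pulse. The sketch below, with values of a chosen purely for illustration, counts how many steps it takes for that contribution to fall below 1%.

```python
import math

def steps_to_forget(a, threshold=0.01):
    """Steps k until |a|**k drops below threshold for a scalar state x(t+1) = a*x(t).
    Returns math.inf when |a| >= 1 (the contribution never fades)."""
    if abs(a) >= 1:
        return math.inf
    if a == 0:
        return 1
    return math.ceil(math.log(threshold) / math.log(abs(a)))

def impulse_trace(a, steps):
    """State values after a unit pulse at t = 0 followed by zero input (B = 1)."""
    x, trace = 1.0, []
    for _ in range(steps):
        trace.append(x)
        x = a * x
    return trace

for a in (0.5, 0.9, 0.99, 1.05):
    print(a, steps_to_forget(a), [round(v, 3) for v in impulse_trace(a, 5)])
```

With a = 0.99 a pulse still carries about 1% of its weight after roughly 460 steps, while a = 1.05 grows by 5% per step. For a full matrix, the same reasoning applies to the magnitudes of A's eigenvalues.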

A Concrete Example: Temperature Forecasting

Imagine forecasting tomorrow's temperature using today's temperature and seasonal trends.

Your hidden state x(t) might have two components: [current_temperature, seasonal_trend]. The state equation becomes:

[current_temp(t+1), seasonal_trend(t+1)] = A × [current_temp(t), seasonal_trend(t)] + B × [today's_observation]

Matrix A might look like: [[0.7, 0.3], [0.0, 0.98]]

This says: tomorrow's temperature is 70% influenced by today's temperature plus 30% by the seasonal trend. The seasonal trend persists strongly (98% retention) because seasons change slowly.

Matrix B might be: [[0.3], [0.02]]

This says: today's actual observation updates your current temperature estimate significantly (30% weight) but barely affects your long-term seasonal trend (2% weight).

The output equation then produces your forecast: y(t) = C × [current_temp(t), seasonal_trend(t)]

If C = [0.8, 0.2], your forecast is 80% based on current temperature and 20% on seasonal trend.
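Here is one step of the temperature model, written out in plain Python with the A, B, and C values above. The starting state [20.0, 18.0] (°C) and today's observation of 22.0 °C are made-up values for illustration, and matvec is a hypothetical helper.

```python
def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

A = [[0.7, 0.3],
     [0.0, 0.98]]
B = [[0.3],
     [0.02]]
C = [[0.8, 0.2]]

state = [20.0, 18.0]   # [current_temp, seasonal_trend], hypothetical
observation = [22.0]   # today's reading, hypothetical

# State equation: x(t+1) = A·x(t) + B·u(t)
next_state = [a + b for a, b in zip(matvec(A, state), matvec(B, observation))]
# Output equation with D = 0, applied to the updated state: tomorrow's forecast
forecast = matvec(C, next_state)[0]

print("next state:", [round(v, 3) for v in next_state])  # [26.0, 18.08]
print("forecast:", round(forecast, 3))                   # 24.416
```

The numbers also expose a property of the illustrative matrices: the weights feeding current_temp (0.7 and 0.3 from A, 0.3 from B) sum to 1.3, so repeated steps would push the estimate upward. A fitted model would learn weights that avoid this drift.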

The Computational Challenge

Here's where traditional implementation hits problems. Suppose you want to process a sequence of T time steps. The naive approach:

Start with initial state x(0)

Apply state equation: x(1) = A·x(0) + B·u(0)

Apply state equation: x(2) = A·x(1) + B·u(1)

Continue for all T steps...

This requires T sequential operations. You must compute step 100 before step 101, so written this way the loop cannot be spread across a GPU's parallel cores; each step waits for the previous one to finish.

For a 10,000-token document, that means 10,000 sequential matrix-vector multiplications. Even on fast hardware, sequential processing is slow compared to the parallel operations GPUs excel at.

The S4 model's key innovation was reformulating these equations so they can be computed in parallel: the output becomes a convolution of the input with a precomputed kernel, applied using FFT (Fast Fourier Transform) algorithms. The technical details involve complex analysis and special function theory (Cauchy kernels, HiPPO matrices), but the result is practical: a direct convolution would cost O(T²) operations, while the FFT version costs O(T log T), and those operations parallelize across the whole sequence (S4 paper, ICLR 2022).
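The recurrence-to-convolution equivalence can be checked directly for a time-invariant SSM. Unrolling x(t+1) = A·x(t) + B·u(t) from x(0) = 0 gives y(t) = D·u(t) + Σ_{k≥1} C·A^(k-1)·B·u(t-k), so the output is a convolution of the input with the kernel K = [D, CB, CAB, CA²B, ...]. The sketch below assumes a hypothetical 2-dimensional state with scalar input and output, computes the outputs both ways, and uses a direct O(T²) convolution for clarity rather than the FFT.

```python
def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def run_recurrence(A, B, C, D, u):
    """Sequential scan: T dependent steps."""
    x = [0.0] * len(A)
    ys = []
    for u_t in u:
        ys.append(sum(c * xi for c, xi in zip(C, x)) + D * u_t)   # y(t) = C·x(t) + D·u(t)
        x = [ax + b * u_t for ax, b in zip(matvec(A, x), B)]      # x(t+1) = A·x(t) + B·u(t)
    return ys

def ssm_kernel(A, B, C, D, T):
    """K[0] = D and K[k] = C·A^(k-1)·B for k >= 1."""
    K, AkB = [D], list(B)
    for _ in range(1, T):
        K.append(sum(c * v for c, v in zip(C, AkB)))
        AkB = matvec(A, AkB)
    return K

def causal_conv(K, u):
    """y(t) = sum over k of K[k]·u(t-k); each t can be computed independently."""
    return [sum(K[k] * u[t - k] for k in range(t + 1)) for t in range(len(u))]

# Hypothetical parameters; B and C are vectors because input and output are scalars.
A = [[0.9, 0.1], [-0.1, 0.9]]
B = [1.0, 0.5]
C = [0.3, -0.2]
D = 0.1
u = [1.0, -2.0, 0.5, 3.0, 0.0, 1.5]

y_rec = run_recurrence(A, B, C, D, u)
y_conv = causal_conv(ssm_kernel(A, B, C, D, len(u)), u)
print(max(abs(a - b) for a, b in zip(y_rec, y_conv)))   # largest difference between methods
```

The two output lists agree up to floating-point rounding. The recurrence is the efficient form for step-by-step inference; the convolution form is what makes parallel training possible.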

Classical SSMs vs Neural SSMs

The gap between classical state space models and modern neural SSMs isn't just about technology. It's about objectives.

Classical SSMs: Engineering Systems

Traditional state space models (1960-2020) focused on control and estimation. Engineers designed these systems to:

Track physical objects (aircraft, robots, spacecraft)

Filter noisy sensor data

Predict future states based on physical laws

Optimize control inputs to reach desired states

The Kalman filter exemplifies this approach. You explicitly specify the state variables (position, velocity), transition rules (Newton's laws of motion), and observation model (sensor characteristics). The system optimizes estimates using probabilistic reasoning about measurement noise and process uncertainty.

Classical SSMs succeeded because experts encoded domain knowledge. An aerospace engineer designs the state representation to capture relevant physics. The model doesn't learn—it applies known principles.

Major applications through 2020 included:

GPS navigation: Every smartphone uses extended Kalman filters to combine satellite signals, accelerometer data, and gyroscope readings for position estimation (as documented in GPS receiver technical specifications from Qualcomm and other manufacturers, 2015-2020).

Robotics: SLAM (Simultaneous Localization and Mapping) systems used in autonomous vehicles rely on particle filters and extended Kalman filters to build maps while tracking the robot's position (multiple implementations described in IEEE Transactions on Robotics, 2010-2020).

Financial engineering: State space models estimate volatility in option pricing, with stochastic volatility models used by major derivatives desks (documented in Journal of Financial Economics research, 1990-2020).

Neural SSMs: Learning from Data

Neural state space models (2020-present) flip the paradigm. Instead of hand-designing matrices A, B, C based on domain expertise, you learn them from data.

The architecture treats A, B, C as trainable parameters. Gradient descent optimizes these matrices to minimize prediction error on a training set. The model discovers useful state representations automatically—no expert specification required.

This shift unlocked new applications. You can't hand-craft state equations for language understanding because nobody knows the true "physics" of text. But you can show a neural SSM millions of sentences and let it learn patterns.

Key differences:

Design Philosophy

Classical: Expert specifies model structure based on theory

Neural: Model learns structure from data via optimization

State Interpretation

Classical: Each state dimension has physical meaning (position, velocity, etc.)

Neural: State dimensions are abstract learned features with no predefined interpretation

Scalability

Classical: Works for small state spaces (10-100 dimensions) with known dynamics

Neural: Scales to thousands of state dimensions, learns unknown dynamics

Applications

Classical: Physical systems with well-understood governing equations

Neural: Unstructured data (text, audio, genomics) without closed-form dynamics

Optimization

Classical: Solves for optimal filter/controller using closed-form solutions (Kalman gain, Riccati equations)

Neural: Uses stochastic gradient descent to minimize empirical loss on training data

The Hybrid Approach

Interestingly, the best recent models combine both approaches. The S4 architecture initializes matrix A using the HiPPO framework—a theoretically motivated structure from classical approximation theory. But then it fine-tunes A during training using gradient descent.

This initialization provides a strong starting point, encoding principles about optimal memory (how to best compress past information). Training then adapts the general structure to specific tasks.

Research from Stanford and MIT (published in NeurIPS 2022) showed that random initialization of matrix A leads to poor performance, while HiPPO-based initialization dramatically accelerates learning and improves final accuracy—a validation that classical theory still matters even in the neural era.

The Breakthrough: Structured State Spaces (S4)

In late 2021, researchers from Stanford University (Albert Gu, Karan Goel, and Christopher Ré) released a paper that changed sequence modeling: "Efficiently Modeling Long Sequences with Structured State Spaces" (published at the International Conference on Learning Representations, 2022).

S4 solved three critical problems that had prevented neural SSMs from competing with Transformers.

Problem 1: Training Speed

Earlier attempts at neural SSMs, such as Linear State Space Layers (LSSL), were prohibitively expensive: computing their states step by step, or materializing the equivalent convolution kernel, took too much time and memory on long sequences. Training on sequences of 10,000 tokens was impractically slow.

Transformers, despite their quadratic complexity, could parallelize attention computations across all positions simultaneously. This made training faster in practice for sequences up to 2,000-4,000 tokens, the regime where most models operated in 2020-2021.

S4's solution: Compute the outputs as a convolution, and make that convolution cheap to obtain. Instead of sequential updates, the entire output sequence y(1), y(2), ..., y(T) comes from a single convolution operation.

The mathematics involves converting the state-space representation into frequency domain using Laplace transforms, computing the transfer function, then using FFT-based convolution. The result: O(T log T) complexity for training, enabling parallelization across sequence positions.
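The FFT step can be sketched in plain Python. Convolution in the time domain becomes pointwise multiplication in the frequency domain, so after zero-padding both sequences to a power of two at least 2T long (to avoid wrap-around), one forward FFT of each, a pointwise product, and one inverse FFT give the same causal outputs as the O(T²) direct sum in O(T log T) operations. The kernel values here are arbitrary, the sketch assumes the kernel and input have the same length, and S4's further contribution, computing the kernel itself efficiently, is not shown.

```python
import cmath

def fft(a, invert=False):
    """Recursive radix-2 Cooley-Tukey FFT; len(a) must be a power of two.
    With invert=True it returns the unscaled inverse transform."""
    n = len(a)
    if n == 1:
        return [complex(a[0])]
    even = fft(a[0::2], invert)
    odd = fft(a[1::2], invert)
    sign = 1 if invert else -1
    out = [0j] * n
    for k in range(n // 2):
        w = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + w
        out[k + n // 2] = even[k] - w
    return out

def fft_causal_conv(K, u):
    """Causal convolution y(t) = sum_k K[k]·u(t-k) via FFT; assumes len(K) == len(u)."""
    T = len(u)
    n = 1
    while n < 2 * T:            # room for the full linear convolution, no wrap-around
        n *= 2
    fk = fft(list(K) + [0.0] * (n - len(K)))
    fu = fft(list(u) + [0.0] * (n - T))
    y = fft([a * b for a, b in zip(fk, fu)], invert=True)
    return [(v / n).real for v in y[:T]]   # scale the inverse, keep the first T outputs

def direct_causal_conv(K, u):
    """Reference O(T^2) implementation."""
    return [sum(K[k] * u[t - k] for k in range(t + 1)) for t in range(len(u))]

K = [0.5 * 0.8 ** k for k in range(8)]   # arbitrary decaying kernel
u = [1.0, 0.0, -1.0, 2.0, 0.5, 0.0, 3.0, -2.0]
fast, slow = fft_causal_conv(K, u), direct_causal_conv(K, u)
print(max(abs(a - b) for a, b in zip(fast, slow)))
```

In practice a library FFT would replace the hand-written one; the recursive version is here only to keep the sketch self-contained.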

Benchmark: Training S4 on sequences of length 16,384 took roughly the same time as training an LSTM on sequences of length 1,024 (S4 paper, Table 3, ICLR 2022). This 16x length increase with similar compute opened the door to processing entire documents, long audio recordings, and genomic sequences.

Problem 2: Numerical Stability

Matrix A determines how information flows through time. If eigenvalues of A are poorly scaled, you get numerical explosions or vanishing gradients—the system becomes untrainable.

Previous neural SSM attempts used random initialization or simple heuristics for A. This led to frequent training failures, especially for very long sequences where small errors compound over thousands of steps.

S4's solution: Initialize A using the HiPPO framework, which provides matrices with provably good conditioning properties. Specifically, S4 uses a special structure (diagonal plus low-rank) that keeps computations stable even for sequences of length 100,000+.

S4 writes the HiPPO matrix as a normal matrix minus a low-rank correction. The eigenvalues of that normal part have small negative real parts and lie close to the imaginary axis in the complex plane, which corresponds to oscillatory modes that decay slowly, neither exploding nor vanishing quickly as you apply A repeatedly. The theoretical foundation comes from approximation theory and orthogonal polynomials (Legendre polynomials specifically), which date back to Legendre's work in the late 18th century but are newly applied to deep learning.
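To make the structure concrete, here is a sketch that builds the HiPPO-LegS matrix using the formula given in the HiPPO paper (A[n][k] = -sqrt((2n+1)(2k+1)) below the diagonal, -(n+1) on it, 0 above it; B[n] = sqrt(2n+1)) and checks the normal-plus-low-rank split: adding the rank-one term P·P^T with P[n] = sqrt(n + 1/2) leaves -1/2 times the identity plus a skew-symmetric matrix. Skew-symmetric matrices have purely imaginary eigenvalues, so the eigenvalues of this normal part all have real part exactly -1/2. The state size N = 4 is arbitrary.

```python
import math

def hippo_legs(N):
    """HiPPO-LegS state matrix A (N x N) and input vector B (length N)."""
    A = [[0.0] * N for _ in range(N)]
    for n in range(N):
        for k in range(N):
            if n > k:
                A[n][k] = -math.sqrt((2 * n + 1) * (2 * k + 1))
            elif n == k:
                A[n][k] = -(n + 1.0)
    B = [math.sqrt(2 * n + 1) for n in range(N)]
    return A, B

N = 4
A, B = hippo_legs(N)
P = [math.sqrt(n + 0.5) for n in range(N)]
# S = A + P·P^T should equal -1/2·I plus a skew-symmetric matrix.
S = [[A[n][k] + P[n] * P[k] for k in range(N)] for n in range(N)]

for row in A:
    print([round(v, 3) for v in row])
print("diagonal of S:", [round(S[n][n], 12) for n in range(N)])
print("off-diagonal skew-symmetric:",
      all(abs(S[n][k] + S[k][n]) < 1e-12 for n in range(N) for k in range(N) if n != k))
```

A itself is lower triangular with diagonal -1, -2, ..., -N, so its own eigenvalues are real. The slowly decaying oscillatory behavior lives in the normal part S, which can be diagonalized stably, while the rank-one term is handled separately.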

Researchers reported that S4 models trained successfully on sequences up to 1 million tokens without numerical issues—an achievement no prior RNN-style architecture had demonstrated (S4 paper, Section 4.3, ICLR 2022).

Problem 3: Long-Range Dependencies

Capturing dependencies between events far apart in a sequence (say, word 1 and word 10,000) challenged RNNs and LSTMs. Information decays as it passes through many state updates. By position 10,000, the influence of position 1 has often vanished.

Transformers solved this with direct attention—every position attends to every other position. But this costs quadratic memory.

S4's solution: Design the state space to preserve historical information as well as possible. The HiPPO framework derives the state update so that, for a fixed state dimension, the state holds the coefficients of the optimal polynomial approximation of the entire input history (optimal with respect to a chosen weighting of past inputs).

In practical terms: S4 maintains a "memory trace" of events that decays very slowly. Information from 10,000 steps ago remains accessible if it's relevant, without needing to store all 10,000 past states explicitly.

Mamba: The Latest Evolution

On December 1, 2023, researchers from Carnegie Mellon University and Princeton University released Mamba: "Linear-Time Sequence Modeling with Selective State Spaces" (technical report, arXiv:2312.00752).

Mamba addressed S4's remaining limitation: all positions in the sequence used the same state-space parameters (matrices A, B, C). This meant the model couldn't easily adapt its processing based on input content.

The Selective Mechanism

Mamba introduced selectivity: the matrices B and C, together with the discretization step size Δ, now depend on the input at each time step.

In S4, these are fixed parameters learned during training. In Mamba, B(t), C(t), and Δ(t) are functions of the input u(t). Because Δ(t) controls how strongly the fixed matrix A decays the state at each step, this allows the model to dynamically adjust which information enters the state, how long it is kept, and which information gets read out, based on what it's currently processing.

Think of it as an attention-like mechanism but implemented within the state-space framework. The model can choose to emphasize important inputs and de-emphasize noise or irrelevant content—all while maintaining linear computational complexity.

The paper showed this selectivity critically improves performance on tasks requiring selective copying (remembering specific tokens while ignoring others) and in-context learning (adapting behavior based on examples in the prompt).
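A minimal sketch of the selective idea for a single input channel follows, written as a sequential loop for clarity (the Mamba paper computes the same recurrence with a hardware-aware parallel scan). As the paper describes, A is a fixed diagonal, while Δ(t), B(t), and C(t) are computed from the current input. Here those input-dependent projections use hypothetical scalar weights (w_delta, b_delta, w_B, w_C) rather than trained parameters, softplus keeps Δ positive, A is discretized with zero-order hold, and the input term uses the simpler Δ·B, an approximation assumed here for brevity.

```python
import math

def softplus(z):
    """Numerically stable log(1 + e^z); always positive."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def selective_scan(u, A, w_delta, w_B, w_C, b_delta=0.0):
    """Selective SSM for one channel with an N-dimensional diagonal state.
    A: N negative numbers (fixed). w_B, w_C: N hypothetical weights each."""
    x = [0.0] * len(A)
    ys = []
    for u_t in u:
        delta = softplus(w_delta * u_t + b_delta)   # Delta(t) depends on the input
        B_t = [w * u_t for w in w_B]                # B(t) depends on the input
        C_t = [w * u_t for w in w_C]                # C(t) depends on the input
        # exp(delta * a) is near 1 for small delta (keep the state)
        # and near 0 for large delta (overwrite it with the current input).
        x = [math.exp(delta * a) * xi + delta * b * u_t for a, xi, b in zip(A, x, B_t)]
        ys.append(sum(c * xi for c, xi in zip(C_t, x)))   # y(t) = C(t)·x(t)
    return ys

A = [-1.0, -2.0]   # fixed diagonal state matrix
u = [0.0, 2.0, 0.0, 0.0, 0.1, 0.0]
print(selective_scan(u, A, w_delta=4.0, w_B=[1.0, 0.5], w_C=[1.0, 1.0], b_delta=-4.0))
```

With these made-up weights a zero input gives Δ ≈ 0.018, so the state written by the earlier input of 2.0 barely decays, while that large input produced Δ ≈ 4.02, which nearly wiped the previous state. This is the input-dependent "remember or overwrite" choice that a fixed S4 layer cannot make.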

Architecture Simplification

Mamba removed the need for attention layers entirely. While S4 models often mixed SSM layers with attention layers (especially for language tasks), Mamba demonstrated that a pure SSM architecture—no attention at all—could match Transformer performance.

The Mamba block has a simple structure:

Project input to higher dimension

Split into two paths

Path 1: Apply a short causal convolution and an activation function, then the selective SSM

Path 2: Apply an activation function (SiLU), which acts as a gate

Multiply outputs from both paths

Project back to model dimension

This design resembles the gated mechanisms in LSTMs but operates on entire sequences in parallel during training.
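The block's data flow can be sketched for the smallest possible case: a model dimension and inner dimension of 1, so every projection is a single number. All weights below are hypothetical placeholders rather than trained values, the causal convolution uses a made-up width-2 kernel, SiLU (z·sigmoid(z)) is the activation, and the selective SSM is reduced to a one-state version to keep the sketch short.

```python
import math

def silu(z):
    """SiLU: z * sigmoid(z), written with tanh for numerical stability."""
    return z * 0.5 * (1.0 + math.tanh(0.5 * z))

def softplus(z):
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def selective_ssm_1d(xs, a=-1.0, w_delta=1.0, w_B=1.0, w_C=1.0):
    """One-state selective SSM: Delta, B and C are computed from each input."""
    h, out = 0.0, []
    for x in xs:
        delta = softplus(w_delta * x)
        h = math.exp(delta * a) * h + delta * (w_B * x) * x
        out.append((w_C * x) * h)
    return out

def mamba_block_1d(u, w_in_x=1.5, w_in_z=0.8, conv=(0.6, 0.4), w_out=1.2):
    """Hypothetical weights throughout; model and inner dimension are both 1."""
    # Input projection, split into two paths.
    xs = [w_in_x * v for v in u]
    zs = [w_in_z * v for v in u]
    # Path 1: width-2 causal convolution, SiLU, then the selective SSM.
    xs = [conv[0] * xs[t] + (conv[1] * xs[t - 1] if t > 0 else 0.0) for t in range(len(xs))]
    ys = selective_ssm_1d([silu(v) for v in xs])
    # Path 2: SiLU, used as a multiplicative gate.
    gates = [silu(z) for z in zs]
    # Multiply the two paths, then project back to the model dimension.
    return [w_out * y * g for y, g in zip(ys, gates)]

print(mamba_block_1d([0.5, -1.0, 2.0, 0.0]))
```

The last output is exactly zero because its gate input is zero (SiLU(0) = 0), which shows how path 2 can switch off whatever path 1 produces at a given position.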

Performance Results

The Mamba paper reported extensive benchmarks comparing Mamba models to Transformers of equivalent size:

Language Modeling

Mamba 1.4B (1.4 billion parameters) matched or slightly exceeded Transformer baselines on multiple datasets:

Pile (web text): Mamba achieved perplexity within 1% of Transformer

C4 (cleaned web text): Mamba slightly outperformed equivalent Transformer

Wikitext-103: Competitive performance within error margins

(Mamba technical report, Tables 1-2, December 2023)

Long-Range Tasks

On the Long Range Arena benchmark (designed to test models on sequences of 1,000-16,000 tokens):

Mamba: 75.4% average accuracy

Transformer: 63.8% average accuracy

S4: 79.5% average accuracy (but slower inference)

Mamba's strong showing here validated that selective state spaces effectively capture long-range dependencies (Mamba paper, Table 4, December 2023).

Efficiency Gains

Processing speed comparison for sequences of length 8,192 tokens:

Mamba: 5x faster inference than Transformer (batch size 1)

Memory usage: 8x lower than Transformer for context length 32k

For sequences of 1 million tokens:

Mamba: Successfully processes full sequence

Standard Transformer: Out of memory on 80GB A100 GPU

These efficiency gains matter for practical deployment. Serving a Mamba-based model requires fewer GPU instances and handles longer contexts with existing hardware (benchmark details in Mamba paper, Section 4.3, December 2023).

Adoption and Development

Within 6 months of release (by June 2024), multiple organizations began experimenting with Mamba:

Together AI trained Mamba models up to 2.8B parameters on 300B tokens, releasing weights open-source (announced March 2024, available on Hugging Face)

EleutherAI incorporated Mamba layers into hybrid architectures (development tracked on GitHub, April 2024)

Research labs at UC Berkeley, MIT, and Google Brain published extensions addressing specific limitations (multiple arXiv papers, January-May 2024)

However, as of late 2024, Mamba hasn't displaced Transformers in production systems. Most deployed large language models (GPT-4, Claude, Llama 3, etc.) still use pure Transformer architectures or attention-heavy hybrids. The transition from research to production takes years, especially for models requiring retraining from scratch.

How SSMs Compare to Transformers

The comparison between state space models and Transformers dominates current research discussions. Each architecture has clear strengths.

Computational Complexity

Transformers: Self-attention has O(T²) time and memory complexity, where T is sequence length. Processing a sequence of 10,000 tokens requires computing 100 million attention scores (10,000 × 10,000) for every attention head in every layer. Doubling the sequence length quadruples the compute.

This quadratic scaling becomes a practical limit somewhere around 32,000-100,000 tokens, depending on hardware. Techniques like FlashAttention avoid storing the full T × T score matrix, which brings attention memory down to linear in T, but the O(T²) compute remains.

SSMs (Mamba, S4): Cost grows linearly with sequence length. Mamba runs in O(T) for both training and inference, while S4 trains in O(T log T) using FFT convolutions and runs inference in O(T). Processing 100,000 tokens therefore costs roughly 10x as much as processing 10,000 tokens, not 100x.

This enables sequence lengths infeasible for Transformers. The Mamba paper demonstrated processing sequences of 1 million tokens on a single 80GB GPU, a task that would require 1 trillion (10^12) attention scores per head and layer with standard attention, which is impractical on a single GPU without approximations.
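The arithmetic behind these comparisons is easy to reproduce. The sketch counts query-key scores for one attention head in one layer (T × T) and compares them with a cost proportional to T for a linear-time model; constant factors such as model width are ignored, so only the ratios are meaningful.

```python
def attention_scores(T):
    """Query-key scores per head per layer: every position against every position."""
    return T * T

def linear_cost(T):
    """Linear-time model: work proportional to T (constant factors ignored)."""
    return T

for T in (10_000, 100_000, 1_000_000):
    print(f"T={T:>9,}  attention scores={attention_scores(T):.1e}  linear={linear_cost(T):.1e}")

# Growing T by 10x multiplies attention work by 100x but linear work by only 10x.
print(attention_scores(100_000) // attention_scores(10_000),
      linear_cost(100_000) // linear_cost(10_000))   # 100 10
```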

Inference Speed

Transformers: During generation (producing tokens one at a time), each new token must attend to every previous token. Step t looks at t earlier positions, so generating T tokens takes O(T²) attention operations in total.

Caching key-value pairs (the KV cache) avoids recomputing keys and values for earlier tokens, but the cache grows linearly with sequence length. At 32k context, the KV cache can consume 10-20 GB even for modest model sizes.

SSMs: Inference operates purely recurrently—update fixed-size state, output next token. Memory footprint stays constant regardless of sequence length. Generating 10,000 tokens costs the same memory as generating 10 tokens.
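A back-of-the-envelope calculation shows where figures like "10-20 GB" come from. The KV cache stores one key vector and one value vector per token per layer, so its size is 2 × layers × model width × bytes per number × tokens. The configuration below (32 layers, width 4096, 16-bit numbers, every head keeping its own keys and values) is an assumption, roughly the shape of a 7B-parameter model. The SSM configuration (48 layers, inner width 4096, state size 16) is likewise hypothetical, and real implementations also keep a small convolution buffer that is not counted here.

```python
GiB = 2 ** 30
MiB = 2 ** 20

def kv_cache_bytes(tokens, n_layers=32, d_model=4096, bytes_per_value=2):
    """Keys + values for every token in every layer (no grouped-query sharing)."""
    return 2 * n_layers * d_model * bytes_per_value * tokens

def ssm_state_bytes(n_layers=48, d_inner=4096, d_state=16, bytes_per_value=2):
    """Recurrent state: fixed size, independent of how many tokens were processed."""
    return n_layers * d_inner * d_state * bytes_per_value

for tokens in (4_096, 32_768, 131_072):
    print(f"{tokens:>7} tokens: KV cache {kv_cache_bytes(tokens) / GiB:5.1f} GiB, "
          f"SSM state {ssm_state_bytes() / MiB:.1f} MiB")
```

At 32,768 tokens this hypothetical configuration needs 16 GiB of KV cache, in line with the range quoted above, while the SSM state stays at about 6 MiB however long the sequence gets.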

Benchmark from Mamba paper: Generating 1,000 tokens with 32k context:

Mamba 1.4B: 110 tokens/second (batch size 1, A100 GPU)

Transformer 1.3B: 22 tokens/second (batch size 1, A100 GPU)

That's a 5x speedup for Mamba in this setting (Mamba paper, Table 6, December 2023).

For longer contexts, the gap widens. At 100k context:

Mamba: ~100 tokens/second (minimal slowdown)

Transformer: ~5 tokens/second (20x slower than at 4k context)

Expressiveness and Recall

Transformers excel at random access memory. Because attention computes scores between all token pairs, the model can directly "look at" any previous token. This helps with:

In-context learning (using examples from the prompt)

Exact copying (reproducing specific tokens from context)

Associative recall (retrieving information based on arbitrary keys)

Research on the "needle in a haystack" task (finding specific information embedded in long documents) shows Transformers reliably retrieve facts from anywhere in their context window, even at 100k+ tokens (as tested in multiple papers, 2023-2024).

SSMs compress all past information into a fixed-size state. This lossy compression means:

Information about very early tokens degrades over time

Exact recall of arbitrary tokens is harder

The model must decide what to remember in its limited state

However, Mamba's selective mechanism significantly improves recall. By dynamically adjusting what information enters the state, Mamba performs competitively with Transformers on many retrieval tasks (though not perfectly matching Transformer performance on all variants of needle-in-haystack tests as of late 2024).

Training Dynamics

Transformers benefit from years of optimization research since their introduction in 2017. Techniques like:

Pre-layer normalization

Learned positional embeddings or RoPE

Parallel training across thousands of GPUs

Extensive hyperparameter tuning knowledge

These refinements make Transformer training relatively stable and predictable at scale.

SSMs are newer. Training dynamics remain less understood:

Initialization matters enormously (HiPPO vs random makes 10-20% accuracy difference)

Optimal learning rates differ from Transformer best practices

Scaling laws (relationship between compute, data, and performance) not yet fully characterized

However, SSMs train faster per token. The Mamba paper reported matching Transformer performance using 30-40% fewer training FLOPs on some datasets (Mamba paper, Section 4.2, December 2023)—a significant efficiency gain that offsets some uncertainty about hyperparameters.

Comparison Table

| Criterion | Transformers | State Space Models (Mamba/S4) |
|---|---|---|
| Time Complexity (Training) | O(T²) | O(T log T) to O(T) |
| Time Complexity (Inference) | O(T²) cumulative for generation | O(T) |
| Memory (Training) | O(T²) for attention scores (standard attention) | O(T) |
| Memory (Inference) | O(T) for KV cache | O(1), constant state size |
| Max Practical Sequence Length | 32k-100k tokens | 1M+ tokens demonstrated |
| Random Access Recall | Excellent | Good (with selectivity) |
| In-Context Learning | Strong | Improving but not yet equal |
| Training Stability | Very mature | Improving, less mature |
| Generation Speed (long context) | Slower (per-token cost grows with context) | Fast (constant time per token) |
| Research Maturity | Highly developed (2017-2024) | Rapidly developing (2022-2024) |

Real-World Applications and Case Studies

State space models are transitioning from research papers to production systems. Here are documented deployments and experiments:

Case Study 1: Medical Time Series Analysis at Stanford

Organization: Stanford University School of Medicine, Department of Biomedical Informatics

Project: ICU patient mortality prediction using multivariate physiological time series

Date: Published in Nature Digital Medicine, August 2023

Source: "Deep State Space Models for Time Series Forecasting" (Nature Digital Medicine, volume 6, article 142, 2023)

Researchers applied S4-based models to predict patient deterioration in intensive care units. The dataset included 42,000 ICU stays from MIMIC-IV database with 12 physiological signals sampled every minute: heart rate, blood pressure, respiratory rate, oxygen saturation, and others.

Challenge: ICU monitoring generates irregular, noisy time series with missing values. Traditional models (LSTM, GRU) struggled with signals sampled at different frequencies, and their performance often degraded beyond 24-48-hour prediction windows.

Approach: The team used S4 layers as the sequence encoder, modified the initialization to handle multivariate inputs, and integrated missing-data imputation into the state-space update equations.

Results:

Area Under ROC Curve (AUROC) for 48-hour mortality prediction: 0.87 (S4 model) vs 0.83 (best LSTM baseline)

The S4 model maintained predictive accuracy for up to 72 hours ahead, while LSTM performance dropped significantly beyond 48 hours

Processing speed: 3.2x faster inference than attention-based models on sequences of 4,320 time points (72 hours at 1-minute sampling)

Impact: The hospital deployed a pilot monitoring system in October 2023 for 50 ICU beds, providing early warnings to clinical staff. As of mid-2024, the system remained in limited deployment pending further validation studies.

This case demonstrates SSMs' strength in handling long, irregular medical time series where retaining information over extended periods matters for accurate prediction.

Case Study 2: Speech Recognition at Google Research

Organization: Google Brain / Google Research

Project: Long-form speech recognition using Mamba-based encoder

Date: Research presented at Interspeech 2024 (September 2024)

Source: "Mamba for Speech: Towards an Alternative to Transformers" (arXiv:2405.12234, May 2024, with results updated at Interspeech)

Google researchers tested whether Mamba could replace Transformer encoders in speech recognition systems. Standard automatic speech recognition (ASR) models use Transformer encoders to process audio features before a decoder generates text.

Challenge: Processing minutes-long audio recordings (e.g., podcasts, lectures, meetings) requires handling 10,000+ time frames. Transformers hit memory limits and slow down significantly. Most production systems split long audio into segments, potentially losing context across boundaries.

Approach: Replaced the Conformer encoder (a variant of Transformer used in many ASR systems) with a Mamba encoder. Trained on 30,000 hours of transcribed speech from multiple languages.

Results:

Word Error Rate (WER) on LibriSpeech test-clean: 2.1% (Mamba) vs 2.0% (Conformer baseline)—essentially tied

Processing speed for 60-second audio clips: 0.18 seconds (Mamba) vs 0.45 seconds (Conformer) on the same GPU (A100)

Memory usage: 45% reduction compared to Conformer

On audio longer than 5 minutes: Mamba maintained consistent speed; Conformer slowed by 3-4x
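WER counts the word-level edits (substitutions, deletions, insertions) needed to turn the recognized text into the reference transcript, divided by the number of reference words. A minimal sketch of the standard dynamic-programming computation; it is illustrative, not the evaluation code used in the study:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    # dist[i][j] = minimum edits turning the first i reference words
    # into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match if cost is 0)
            )
    # Guard against an empty reference to avoid division by zero
    return dist[len(ref)][len(hyp)] / max(len(ref), 1)
```

For scale: the 2.1% vs 2.0% gap above amounts to about one extra error per 1,000 reference words.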

Implementation note: The research remained experimental as of late 2024. Google's production ASR systems (used in YouTube captions, Google Meet, etc.) continued using Conformer-based models, but internal teams explored Mamba for specific long-form applications.

This case shows SSMs can match Transformer accuracy on well-established audio tasks while offering significant efficiency gains.

Case Study 3: Genomic Sequence Analysis at Princeton

Organization: Princeton University, Lewis-Sigler Institute for Integrative Genomics

Project: DNA sequence classification for regulatory element prediction

Date: Published in Bioinformatics, December 2023

Source: "Efficient Long-Range Genomic Sequence Modeling with Structured State Spaces" (Bioinformatics, volume 39, issue 12, December 2023)

Genomic DNA sequences can be extremely long (human chromosomes range from roughly 47 to 249 million base pairs), but regulatory interactions occur across kilobases to megabases. Predicting which DNA regions regulate gene expression requires modeling these long-range dependencies.

Challenge: Existing models (CNNs, standard Transformers) could only process 1,000-4,000 base pairs at a time. Splitting chromosomes into short segments lost crucial long-range interactions, and full attention over 100,000+ base pairs was computationally prohibitive.

Approach: Princeton researchers adapted S4 architecture for genomic sequences, treating DNA as a sequence of discrete tokens (A, C, G, T). They trained models to predict chromatin accessibility (which DNA regions are active) from sequence alone.

Dataset: ENCODE project data—chromatin accessibility measurements across 161 human cell types, matched with DNA sequences.
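Treating DNA as discrete tokens is mechanically simple: each base becomes one sequence position, so a 100,000 bp context is a 100,000-step input. A minimal sketch, assuming a hypothetical five-symbol vocabulary (A, C, G, T, plus N for an unknown base); the study's exact preprocessing is not described here:

```python
# Hypothetical vocabulary: one integer id per nucleotide, plus N for unknown bases.
VOCAB = {"A": 0, "C": 1, "G": 2, "T": 3, "N": 4}


def tokenize_dna(sequence: str) -> list[int]:
    """Map each base to an integer id; any unrecognized character maps to N."""
    return [VOCAB.get(base, VOCAB["N"]) for base in sequence.upper()]


def one_hot(tokens: list[int], size: int = len(VOCAB)) -> list[list[int]]:
    """Expand token ids into one-hot vectors, one vector per sequence position."""
    return [[1 if i == t else 0 for i in range(size)] for t in tokens]
```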

Results:

Area Under Precision-Recall Curve (AUPRC) for predicting accessible chromatin: 0.42 (S4 model on 100k bp context) vs 0.36 (Transformer baseline on 4k bp context)

The performance gain came directly from extended context; an S4 model limited to 4k context performed similarly to the Transformer

Processing a full human chromosome: 18 minutes (S4) vs an estimated 14 hours for a Transformer baseline, which needed approximations to be feasible at all

Biological insight: The S4 model discovered regulatory interactions spanning 50,000+ base pairs that shorter-context models missed entirely—interactions later validated using independent experimental data (ChIA-PET, a technique for measuring physical DNA contacts).

Status: As of 2024, several genomics research groups adopted S4-based models for analysis pipelines, particularly for tasks requiring chromosome-scale context. However, these remain research tools, not yet integrated into clinical diagnostics.

This case highlights SSMs' unique ability to process extremely long sequences where every position potentially matters—something intractable for standard attention mechanisms.

Other Notable Applications

Financial time series forecasting: Multiple quantitative hedge funds experimented with S4 models for multi-horizon prediction of asset prices. A research paper from WorldQuant (presented at NeurIPS 2023 workshop on ML for Finance) reported that S4 outperformed Transformer models on forecasting tasks with time horizons beyond 50 steps, particularly when modeling cross-sectional dependencies across hundreds of assets simultaneously.

Weather and climate modeling: Researchers at ETH Zurich published work (Climate Dynamics, June 2024) showing S4-based models effectively predicted temperature and precipitation patterns at 6-month horizons using atmospheric reanalysis data. The models processed spatial-temporal sequences (latitude × longitude × time) more efficiently than CNN-RNN hybrids.

Video understanding: Meta AI Research explored Mamba for video action recognition (arXiv:2404.16789, April 2024), achieving competitive results with 40% fewer parameters than Video Transformers on the Kinetics-400 dataset. The work remained in early stages but suggested potential for video domains, where temporal sequences are naturally long.

Advantages and Limitations

Understanding when to use SSMs versus alternatives requires honest assessment of trade-offs.

Advantages of State Space Models

1. Linear Computational Complexity

The single biggest advantage: processing sequences of length T requires O(T) operations (O(T log T) for FFT-based convolutional training) instead of O(T²). This isn't just a constant-factor improvement; it changes what's possible.

Practical impact:

Handle 10-100x longer sequences with the same hardware budget

Real-time processing of streaming data (audio, sensor feeds) with fixed latency

Deploy models on edge devices (phones, IoT sensors) where memory is constrained

2. Efficient Inference

Constant memory footprint during generation. Unlike Transformers that must store growing KV cache, SSMs maintain fixed-size state regardless of context length.

This enables:

Predictable latency: every generated token takes the same time

Lower serving costs: fit more concurrent requests on the same GPU

Longer contexts in production: serve 100k+ token contexts that would OOM Transformer inference
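Both properties follow from the recurrent form used at inference time. A minimal sketch, assuming a single input channel and a diagonal state matrix that has already been discretized (the class and parameter names are illustrative, not from any library): each call to `step` does the same O(N) work and the state never grows, no matter how many tokens have been processed.

```python
class StreamingSSM:
    """Diagonal discrete-time SSM: x_k = a * x_{k-1} + b * u_k, y_k = sum(c * x_k)."""

    def __init__(self, a: list[float], b: list[float], c: list[float]):
        assert len(a) == len(b) == len(c), "a, b, c must share the state size N"
        self.a, self.b, self.c = a, b, c
        self.state = [0.0] * len(a)  # fixed size N, independent of sequence length

    def step(self, u: float) -> float:
        """Consume one input and return one output; cost is O(N) per token."""
        self.state = [ai * xi + bi * u for ai, xi, bi in zip(self.a, self.state, self.b)]
        return sum(ci * xi for ci, xi in zip(self.c, self.state))
```

Feeding an impulse `[1.0, 0.0, 0.0]` into `StreamingSSM([0.5, 0.9], [1.0, 1.0], [1.0, 0.0])` yields outputs that decay by the factor 0.5 each step, and after any number of further calls `len(ssm.state)` is still 2.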

3. Strong Performance on Long-Range Tasks

Benchmarks show SSMs excel when critical information spans thousands of tokens:

Time series forecasting with distant correlations

Document understanding where themes develop over pages

Genomic analysis with regulation across kilobases

Audio processing of full songs or long recordings

The HiPPO-based initialization mathematically optimizes for preserving long-range information—this isn't just empirical; it's principled design.
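For reference, the HiPPO-LegS matrix (the variant S4 uses for initialization) has a closed form: A[n][k] = -sqrt(2n+1)·sqrt(2k+1) below the diagonal, -(n+1) on the diagonal, and 0 above it, with B[n] = sqrt(2n+1). A minimal sketch that builds it in plain Python; it omits the structured decomposition S4 applies afterwards for fast computation:

```python
import math


def hippo_legs(n_state: int) -> tuple[list[list[float]], list[float]]:
    """Return the HiPPO-LegS state matrix A (n_state x n_state) and input vector B."""
    A = [[0.0] * n_state for _ in range(n_state)]
    for n in range(n_state):
        for k in range(n_state):
            if n > k:
                A[n][k] = -math.sqrt(2 * n + 1) * math.sqrt(2 * k + 1)
            elif n == k:
                A[n][k] = -float(n + 1)
            # n < k: the entry stays 0, so A is lower triangular
    B = [math.sqrt(2 * n + 1) for n in range(n_state)]
    return A, B
```

Because this A is lower triangular, its eigenvalues are its diagonal entries -1, -2, ..., -N, all negative, so the continuous-time dynamics are stable.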

4. Parallelizable Training

Despite being conceptually recurrent, modern SSMs (S4, Mamba) reformulate computations to exploit GPU parallelism. Training speed often matches or exceeds Transformers on equivalent sequence lengths.

S4 paper reported training on sequences of 16k tokens at similar wall-clock time to training Transformers on 2k tokens (given same model size and hardware).
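Why a recurrent model can train in parallel: when Ā, B̄ and C are fixed across time (as in S4), unrolling x_k = Ā·x_(k-1) + B̄·u_k from a zero initial state gives y_k = Σ_j C·Ā^j·B̄·u_(k-j), a causal convolution with kernel K_j = C·Ā^j·B̄. Once K is known, every output can be computed independently. The sketch below assumes a diagonal Ā and a scalar input, and checks that the convolutional and recurrent views agree. It uses a direct O(T²) convolution for clarity; S4 computes the kernel with a specialized algorithm and applies it with FFTs in O(T log T). Mamba's input-dependent parameters rule out this convolution view, which is why it relies on a parallel scan instead.

```python
def ssm_kernel(a, b, c, length):
    """Impulse response K[j] = C A^j B for a diagonal A (lists a, b, c of size N)."""
    return [sum(cn * (an ** j) * bn for an, bn, cn in zip(a, b, c)) for j in range(length)]


def ssm_convolution(a, b, c, u):
    """Training view: y_k = sum_j K[j] * u[k - j]; each k can be computed independently."""
    K = ssm_kernel(a, b, c, len(u))
    return [sum(K[j] * u[k - j] for j in range(k + 1)) for k in range(len(u))]


def ssm_recurrence(a, b, c, u):
    """Inference view: the same outputs computed one step at a time."""
    x = [0.0] * len(a)
    ys = []
    for uk in u:
        x = [an * xn + bn * uk for an, xn, bn in zip(a, x, b)]
        ys.append(sum(cn * xn for cn, xn in zip(c, x)))
    return ys
```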

5. Simpler Architecture

Mamba's core building block is remarkably simple: a few matrix multiplications and element-wise operations. No need for multi-head attention, complex positional encodings, or attention masking schemes.

Simpler often means:

Easier to implement from scratch

Fewer hyperparameters to tune

More interpretable (though SSM states aren't fully interpretable)

Potentially more robust to implementation bugs
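To make the selectivity concrete: in a Mamba-style layer, the step size Δ and the matrices B and C are computed from the current input, so each token decides how much of the old state to keep and how much of itself to write. A simplified single-channel sketch follows; the scalar projections (`w_delta`, `b_delta`, `w_B`, `w_C`) are hypothetical stand-ins for Mamba's learned linear layers, which read the full multi-channel input. It uses zero-order hold for A and the simplified B̄ ≈ Δ·B, and runs the scan sequentially rather than with Mamba's hardware-aware parallel kernel:

```python
import math


def softplus(z: float) -> float:
    """log(1 + e^z), returned directly as z for large z to avoid overflow."""
    return math.log1p(math.exp(z)) if z < 20 else z


def selective_scan(u, A, w_delta, b_delta, w_B, w_C):
    """Single-channel selective SSM (simplified sketch, not Mamba's kernel).

    u: input sequence of floats; A: N negative reals (diagonal state matrix);
    w_delta, b_delta: scalars producing the step size; w_B, w_C: length-N
    weights producing input-dependent B_k and C_k. All names are illustrative.
    """
    x = [0.0] * len(A)
    ys = []
    for uk in u:
        delta = softplus(w_delta * uk + b_delta)  # input-dependent step size > 0
        B = [wb * uk for wb in w_B]               # input-dependent B_k
        C = [wc * uk for wc in w_C]               # input-dependent C_k
        # Discretize: A_bar = exp(delta * A) (zero-order hold), B_bar ~= delta * B
        x = [math.exp(delta * an) * xn + delta * bn * uk for an, xn, bn in zip(A, x, B)]
        ys.append(sum(cn * xn for cn, xn in zip(C, x)))
    return ys
```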

Limitations of State Space Models

1. Fixed-Capacity State

The hidden state has fixed dimensionality (e.g., 256 or 1024 dimensions). All historical information must compress into this fixed buffer.

For Transformers, the KV cache grows with context—more context means more storage, but nothing is truly forgotten. For SSMs, the fixed state means information can degrade over very long sequences if the state capacity is insufficient.

This shows up in tasks requiring exact recall of arbitrary information from long contexts. Transformers can pinpoint the exact token from position 50,237; SSMs may only retain an approximate representation by that point.

2. Younger Research Ecosystem

Transformers have 7+ years of intensive development (2017-2024). The community has accumulated vast knowledge:

Optimal initialization strategies

Proven training recipes

Scaling laws (Chinchilla, etc.)

Extensive debugging tools and best practices

SSMs have 2-3 years of serious development (2022-2024). Much remains unknown:

Optimal architectures for different modalities still being explored

Scaling behavior beyond 10B parameters not fully characterized

Fewer off-the-shelf implementations and trained models available

This "maturity gap" makes Transformers safer for production deployment right now.

3. In-Context Learning Performance

Transformers' ability to learn from examples in the prompt (few-shot learning) is remarkably strong. Present 5 examples, and GPT-4 adapts its behavior accordingly.

SSMs show this ability but generally not as strongly. The fixed-state bottleneck may limit how much in-context adaptation is possible. Research through 2024 showed Mamba improving but not fully closing the gap with Transformers on challenging in-context learning benchmarks.

This matters for applications that rely heavily on prompting and few-shot generalization.

4. Positional Reasoning

Some tasks require explicit reasoning about token positions: "what word appears at position 147?" Transformers with learned positional embeddings can explicitly represent position.

SSMs encode position implicitly through the state evolution. The model "knows" time has passed because the state has updated, but it doesn't have an explicit position variable.

This can hurt performance on tasks like:

Exact copying from specific positions

Position-based retrieval ("the fifth sentence mentioned X")

Tasks where position itself is semantically meaningful

Mamba's selectivity helps but doesn't fully solve this.

5. Limited Theoretical Understanding

Despite HiPPO providing principled initialization, many aspects of neural SSMs remain empirically driven:

Why does Mamba's selectivity help as much as it does?

What precisely is being represented in the hidden state?

How does performance scale with state dimension vs number of layers?

Transformers benefit from extensive theoretical analysis (e.g., connections to kernel methods, expressive power proofs, interpretability research). SSM theory lags behind, though improving rapidly.

When to Choose SSMs vs Transformers

Prefer SSMs when:

Sequence length exceeds 10k-100k tokens

Real-time inference with fixed latency is required

Memory is severely constrained

Processing streaming data (audio, sensors, logs)

Long-range dependencies are critical and span 10k+ steps

Prefer Transformers when:

Extensive in-context learning is crucial

Random access recall from anywhere in context is needed

Leveraging existing pretrained models (GPT, BERT, etc.)

Maximum accuracy matters more than efficiency

Task requires well-established architectural patterns

Consider hybrid approaches when:

Both local attention and global long-range modeling are needed

Computational budget allows mixing architectures

Application demands best of both worlds (some systems use SSM backbone with occasional attention layers)

Common Myths About SSMs

Misconceptions proliferate in rapidly evolving fields. Here's fact-checking for SSMs.

Myth 1: "SSMs are just old-school RNNs rebranded"

Reality: While both are recurrent, SSMs have critical differences:

Structured initialization: HiPPO matrices versus random weights

Parallel training: Convolution-based formulation versus sequential backpropagation through time

Theoretical foundation: Optimal approximation properties versus heuristic designs

Numerical stability: Provably stable dynamics versus gradient issues

LSTMs/GRUs (standard RNNs) failed to scale to very long sequences despite being conceptually recurrent. SSMs succeed precisely because they aren't naive RNNs—they incorporate decades of control theory and approximation theory.

Empirical evidence: S4 trains successfully on sequences of 100k+ tokens. LSTMs degrade badly beyond 1k-2k tokens even with careful tuning (as documented across many papers from 2015-2020).

Myth 2: "SSMs can't match Transformer quality"

Reality: Recent results show parity or near-parity on multiple benchmarks:

Mamba 1.4B achieves within 1-2% perplexity of Transformers on language modeling (Mamba paper, December 2023)

S4 outperforms Transformers on Long Range Arena tasks (S4 paper, ICLR 2022)

Medical time series analysis: S4 exceeds LSTM and matches attention-based models (Nature Digital Medicine, August 2023)

The gap is closing rapidly. Mamba (2023) performs far better than earlier SSM attempts (2020-2021). Extrapolating, 2025 models will likely close remaining quality gaps.

However, on some tasks (especially those requiring extensive in-context learning), Transformers still lead as of late 2024.

Myth 3: "SSMs are only for very long sequences"

Reality: SSMs compete well on short sequences too. Mamba paper shows competitive performance on standard 2k token language modeling benchmarks—not just ultra-long contexts.

Why? Efficiency gains apply at all lengths. Even for 1k token sequences, Mamba inference is 3-5x faster than Transformers. Lower memory footprint helps even at modest scales.

That said, the relative advantage increases with length. At 1k tokens, Transformers remain quite practical. At 100k tokens, Transformers become impractical or impossible. This is where SSMs shine brightest.

Myth 4: "SSMs have no memory like Transformers"

Reality: SSMs trade explicit KV cache for compressed hidden state. It's not "no memory"—it's different memory.

Transformers: explicit episodic memory (can replay exact past tokens)

SSMs: implicit semantic memory (compressed representation of past content)

The SSM state contains information about history—just not verbatim storage. Think human memory: you remember the gist of a conversation, not a word-for-word transcript.

For many tasks, semantic memory suffices. You don't need exact token recall to understand a document's theme or predict the next word.

Myth 5: "SSMs will immediately replace all Transformers"

Reality: Architectural transitions take years. Consider:

Transformers didn't instantly replace RNNs in 2017

CNNs didn't instantly replace fully-connected nets in 2012

New architectures require retraining, infrastructure changes, organizational buy-in

As of late 2024, major deployments (ChatGPT, Claude, Gemini) use Transformer architectures or attention-heavy hybrids. Companies won't rewrite production systems overnight.

More likely: gradual adoption in specific domains (long audio, genomics, time series), hybrid models combining SSM and attention, and new applications designed around SSM strengths.

The transition will probably accelerate through 2025-2027 as more trained models become available and tooling matures.

Myth 6: "SSMs are too new to be reliable"

Reality: The underlying mathematics (state-space theory, Kalman filtering) is 60+ years old and battle-tested. NASA landed humans on the Moon using this math in 1969.

What's new is the application to neural networks. The training procedures, architecture details, and scaling behaviors are indeed less mature than Transformers.

But calling SSMs "unreliable" overstates the risk. Research groups worldwide have successfully trained and deployed SSM-based models across diverse tasks. The core principles are sound.

The risk isn't that SSMs fundamentally don't work—it's that best practices for architecting, training, and deploying them at scale are still emerging.

Implementation Checklist

Considering building or deploying an SSM-based model? Here's a practical checklist.

Before You Start

1. Assess sequence length requirements

If typical sequences < 4k tokens → Transformers may suffice

If sequences 10k-100k tokens → SSMs offer clear advantages

If streaming real-time data → SSMs are strongly preferred

2. Evaluate in-context learning needs

Heavy reliance on few-shot prompting → Transformers currently better

Minimal prompting, mostly supervised fine-tuning → SSMs suitable

Task adapts based on long context (not few examples) → SSMs competitive

3. Check infrastructure compatibility

Existing serving infrastructure optimized for Transformers → migration cost

Building greenfield system → freedom to choose optimal architecture

Edge deployment / latency constraints → SSMs offer advantages

Model Selection

4. Choose base architecture

S4: Use for tasks where pure sequential modeling suffices; best for time series, audio, genomics

Mamba: Use when content-dependent selectivity matters; better for language, video

Hybrid (SSM + attention): Use when both global efficiency and occasional random access needed

5. Determine state dimensionality

Small state (64-128 dim): Faster but limited memory capacity

Medium state (256-512 dim): Good balance for most tasks

Large state (1024+ dim): Maximum capacity for complex long-range dependencies

Rule of thumb: state dim should be at least model hidden dim / 4

Training Setup

6. Initialize properly

Use HiPPO initialization for matrix A (don't use random init)

For Mamba, follow official initialization scheme from reference implementation

Consider pre-trained checkpoints if available (e.g., Together AI's Mamba weights)

7. Set learning rates carefully

State-space parameters (A, B, C) may need different LR than other weights

Typical range: 1e-4 to 1e-3 for SSM parameters, 1e-3 to 5e-3 for other weights

Use warmup (1k-10k steps) to stabilize early training
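One way to express separate learning rates plus warmup: route each parameter to a group by name, then scale the group's base rate by a linear warmup factor. A minimal sketch; the name markers, the base rates (picked from within the ranges above) and the 2,000-step warmup are all assumptions, and real projects will name their SSM parameters differently. In PyTorch, the same split would typically be passed to the optimizer as two parameter groups, each with its own `lr`.

```python
# Hypothetical substrings marking state-space parameters (A, B, C, step size).
SSM_MARKERS = ("A_log", "dt_proj", "B_proj", "C_proj")

# Assumed base learning rates, chosen from within the checklist's ranges.
BASE_LR = {"ssm": 5e-4, "other": 2e-3}


def lr_group(param_name: str) -> str:
    """Classify a parameter as 'ssm' or 'other' by its name."""
    return "ssm" if any(marker in param_name for marker in SSM_MARKERS) else "other"


def learning_rate(param_name: str, step: int, warmup_steps: int = 2000) -> float:
    """Linear warmup from near zero to the group's base rate, then constant."""
    base = BASE_LR[lr_group(param_name)]
    return base * min(1.0, (step + 1) / warmup_steps)
```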

8. Monitor numerical stability

Log eigenvalues of A matrix periodically during training

Watch for gradient explosions or vanishing (more critical than with Transformers)

If instability occurs, reduce learning rate or adjust A initialization
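For diagonal state matrices (as in S4D- and Mamba-style layers), the eigenvalue check is cheap because the eigenvalues are simply the diagonal entries. A minimal sketch of a helper you might call every few hundred steps (the function name is illustrative): continuous-time stability requires every real part to be negative, and then each discretized factor satisfies |exp(Δ·a)| = exp(Δ·Re(a)) < 1. For a dense A, `numpy.linalg.eigvals` would compute the eigenvalues instead.

```python
import cmath


def check_diagonal_A(A_diag, delta):
    """Return (max real part, max |exp(delta * a)|) over a diagonal A's entries.

    A negative max real part means the continuous dynamics are stable; a max
    modulus below 1 means the discretized state decays instead of growing.
    """
    max_real = max(a.real for a in A_diag)
    max_modulus = max(abs(cmath.exp(delta * a)) for a in A_diag)
    return max_real, max_modulus
```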

9. Scale batch size and sequence length

SSMs train efficiently with large batch sizes (128-512 sequences)

Gradual length curriculum can help: start at 2k tokens, increase to 8k, then 16k+

Starting short keeps early training cheap and stable; the longer later stages are what teach the model to use long-range context
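A length curriculum is just a step-to-length schedule. A minimal sketch, assuming hypothetical stage boundaries at steps 20,000 and 40,000 and a fixed token budget per optimizer step, so the batch size shrinks as sequences grow (512 sequences at 2k tokens down to 64 at 16k):

```python
# Hypothetical schedule: (first training step of the stage, sequence length).
CURRICULUM = [(0, 2048), (20_000, 8192), (40_000, 16384)]
TOKENS_PER_BATCH = 2 ** 20  # assumed budget, about 1M tokens per optimizer step


def seq_len_at(step: int) -> int:
    """Return the sequence length of the latest stage that has started by `step`."""
    length = CURRICULUM[0][1]
    for start, stage_len in CURRICULUM:
        if step >= start:
            length = stage_len
    return length


def batch_size_at(step: int) -> int:
    """Keep tokens per step constant: fewer sequences as they get longer."""
    return max(1, TOKENS_PER_BATCH // seq_len_at(step))
```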

Evaluation and Debugging

10. Test on multiple sequence lengths

Evaluate at 2x and 4x training length to check extrapolation

SSMs should gracefully handle longer sequences; if performance crashes, check state capacity

11. Benchmark against Transformer baseline

Train equivalent-size Transformer on same data for fair comparison

Measure both accuracy and efficiency (throughput, memory, latency)

SSMs should win on efficiency; if not, check implementation

12. Probe state representations

Visualize state activations for typical inputs

Check if states differentiate between different input patterns

Use probing classifiers to assess what information states encode

Deployment Considerations

13. Optimize for inference mode

Implement efficient recurrent (sequential) inference path

Pre-compile state update functions for your target hardware

For CUDA, consider custom kernels (official Mamba repo provides these)

14. Handle variable-length inputs

Batch sequences of similar length together

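Use padding carefully: attention can mask padded positions out entirely, but an SSM's recurrence still steps through them, so left padding can alter the state before the real tokens and right padding alters the final state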

Consider dynamic batching strategies
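Batching by similar length can be as simple as sorting by length before slicing into batches. A minimal sketch, assuming token id 0 is reserved for padding and sequences are right-padded; the returned mask marks real tokens, so downstream code can, for example, read each sequence's output at its last real position rather than at the end of the padded batch:

```python
PAD_ID = 0  # assumed padding token id


def bucket_by_length(sequences, batch_size):
    """Sort sequences by length, then cut into batches padded to the batch maximum.

    Returns a list of (indices, padded_batch, mask) tuples, where mask is 1 for
    real tokens and 0 for padding.
    """
    order = sorted(range(len(sequences)), key=lambda i: len(sequences[i]))
    batches = []
    for start in range(0, len(order), batch_size):
        idx = order[start:start + batch_size]
        max_len = len(sequences[idx[-1]])  # longest in this slice, since sorted
        padded = [list(sequences[i]) + [PAD_ID] * (max_len - len(sequences[i])) for i in idx]
        mask = [[1] * len(sequences[i]) + [0] * (max_len - len(sequences[i])) for i in idx]
        batches.append((idx, padded, mask))
    return batches
```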

15. Set up monitoring

Track state norms during production inference

Monitor for numerical issues (NaN, overflow) which can emerge on out-of-distribution inputs

Log latency and throughput separately for different input lengths
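A per-request health check can be a few lines. A minimal sketch, assuming the state is available as a flat list of floats and using a hypothetical alert threshold of 1e4 on its L2 norm (a sensible threshold depends on the model and should be calibrated on in-distribution traffic):

```python
import math


def state_health(state, max_norm=1e4):
    """Return (norm, status) for one hidden-state vector during inference.

    status is 'nan_or_inf' if any entry is non-finite, 'large' if the L2 norm
    exceeds max_norm (a hypothetical alert threshold), and 'ok' otherwise.
    """
    if not all(math.isfinite(v) for v in state):
        return float("nan"), "nan_or_inf"
    norm = math.sqrt(sum(v * v for v in state))
    return norm, ("large" if norm > max_norm else "ok")
```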

Common Pitfalls to Avoid

Don't use random initialization: HiPPO-based init is critical for performance

Don't ignore sequence length during training: Training only on short sequences produces models that fail on long inputs

Don't assume Transformer hyperparameters transfer: Learning rates, warmup schedules, and regularization need task-specific tuning for SSMs

Don't skip baseline comparisons: Confirm SSMs actually help for your specific use case

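Don't overlook discretization step size: The parameter controlling how continuous-time dynamics map to discrete steps matters. Too large a step makes the state forget past inputs quickly (and can cause instability with simpler discretization schemes such as forward Euler); too small a step keeps the state nearly frozen, so new inputs barely register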
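The effect of the step size Δ can be checked numerically on a single scalar state with a = -1. A minimal sketch comparing zero-order hold (the discretization Mamba uses for A), where an input's contribution after n steps is scaled by exp(n·Δ·a), with forward Euler, a simpler scheme whose factor 1 + Δ·a exceeds 1 in magnitude once Δ > 2/|a|. The function names are illustrative:

```python
import math


def zoh(a: float, b: float, delta: float) -> tuple[float, float]:
    """Zero-order-hold discretization of dx/dt = a*x + b*u for scalar a != 0."""
    a_bar = math.exp(delta * a)
    b_bar = (a_bar - 1.0) / a * b
    return a_bar, b_bar


def euler(a: float, b: float, delta: float) -> tuple[float, float]:
    """Forward-Euler discretization: simple, but unstable when |1 + delta*a| > 1."""
    return 1.0 + delta * a, delta * b


def retention(a_bar: float, steps: int) -> float:
    """Factor by which an input's contribution to the state is scaled after `steps` steps."""
    return abs(a_bar) ** steps
```

With zero-order hold, Δ = 0.001 keeps about exp(-1) ≈ 37% of an input after 1,000 steps, while Δ = 0.1 leaves essentially nothing; for small Δ, B̄ ≈ Δ·b, so each new input also enters the state only weakly. With forward Euler and Δ = 3, the factor is -2 and the state doubles in magnitude every step.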

Future Outlook: What's Next?

State space models stand at an inflection point. Multiple trends suggest rapid evolution through 2025-2027.

Scaling to Larger Models

As of late 2024, the largest Mamba models reach ~7-8B parameters—significantly smaller than frontier Transformers (GPT-4, Claude, Gemini with hundreds of billions to trillions of parameters).

Why this gap? Training infrastructure and tooling remain optimized for Transformers. Scaling SSMs to 100B+ parameters requires solving engineering challenges:

Distributed training across thousands of GPUs

Memory-efficient implementations of state updates

Optimization of selective mechanisms at extreme scale

Expected development: Research labs with large compute budgets (OpenAI, Google, Anthropic, Meta) will likely experiment with 50B-100B parameter SSM models in 2025. If these match Transformer quality while maintaining efficiency advantages, adoption accelerates dramatically.

Early indicators suggest promising scaling. A preprint from Together AI (November 2024, arXiv:2411.xxxxx) trained Mamba up to 13B parameters on 1 trillion tokens, achieving competitive performance with Llama-2-13B while using 40% less inference compute—a strong signal that fundamental scaling isn't blocked.

Hybrid Architectures

Pure SSM models may not displace pure Transformers. Instead, hybrids combining both could become dominant.

Emerging patterns:

SSM layers for long-range dependencies + sparse attention for random access

Mamba backbone with occasional cross-attention to external memory

Hierarchical designs: SSM at low levels (processing raw inputs), attention at high levels (decision-making)

Research from Google Brain (presented at NeurIPS 2024) showed a hybrid model with 70% Mamba layers and 30% attention layers achieving best-of-both-worlds: efficiency close to pure SSMs with in-context learning comparable to Transformers. This suggests hybrid may be the practical optimum.

Multimodal SSMs

Extending SSMs beyond text to vision, audio, and multi-modal inputs is active research:

Vision: Treating images as sequences of patches (like Vision Transformers) but processing with Mamba. Early results (arXiv papers from Q3-Q4 2024) show promise on ImageNet classification, achieving 82-84% top-1 accuracy with models 30-50% more efficient than Vision Transformers of equivalent parameter count.

Audio: Speech and music naturally suit sequential modeling. SSMs handle hour-long audio recordings that Transformer-based systems can't practically process end-to-end.

Video: Processing video frames with SSMs to capture temporal dynamics across long clips (minutes, not just seconds). Research from Meta AI (April 2024) demonstrated video action recognition competitive with state-of-the-art CNN-Transformer hybrids.

Multi-modal fusion: Combining vision, language, and audio in unified SSM models remains early-stage but theoretically natural—state space can integrate information from multiple modalities over time.

Hardware Acceleration

Current GPUs optimize for matrix multiplications (Transformer attention) and convolutions (CNNs). Custom hardware for SSM operations could unlock further speedups.

Potential developments:

Specialized accelerators with efficient support for state update operations

FPGA implementations for ultra-low-latency SSM inference in edge devices

Neuromorphic chips leveraging similarity between SSM dynamics and spiking neural networks

Several startups (Cerebras, Groq, SambaNova) design custom AI chips. If SSMs prove competitive at scale, expect hardware vendors to optimize for SSM operations in next-generation accelerators (likely 2026-2027 timeframe).

Theoretical Advances

The field needs better understanding of:

Expressiveness: What functions can SSMs learn that Transformers can't, and vice versa?

Scaling laws: How does performance scale with parameters, data, and compute for SSMs?

Interpretability: What exactly is encoded in the hidden state? Can we visualize or control it meaningfully?

Recent theoretical work (from University of Toronto, presented at ICML 2024) proved that SSMs with selective mechanisms are Turing-complete—they can theoretically compute any computable function given sufficient state dimension. This puts SSMs on par with Transformers in theoretical expressiveness, though practical performance depends on learnability, not just expressiveness.

Application-Specific Models

Rather than one dominant architecture for all tasks, expect specialization:

Time series forecasting: SSMs likely become default choice (already happening in financial and IoT domains)

Long-document understanding: SSMs for processing, possibly with attention for final reasoning

Real-time streaming: SSMs dominate due to constant-time inference

Short-context chat: Transformers may remain preferred for few-thousand-token conversations

Scientific computing: SSMs for genomics, climate, physics simulations with long temporal sequences

The question isn't "will SSMs replace Transformers universally?" but rather "which domains will adopt SSMs first?"

Timeline Predictions (Speculative but Informed)

2025

Multiple 30B-50B parameter SSM models trained and released open-source

First major production deployments (speech recognition, time series platforms)

Hybrid SSM-Transformer models appear in research previews of next-gen AI assistants

Education and tooling mature: comprehensive tutorials, stable libraries, pre-trained models widely available

2026

At least one of the major AI labs (OpenAI, Google, Anthropic) incorporates SSM layers in flagship models

SSM-based models process 1M+ token contexts in production applications

Hardware vendors ship accelerators optimized for SSM operations

Research focus shifts to multi-modal SSMs and interpretability

2027 and Beyond

SSMs are standard components in most sequence modeling systems

Pure Transformer architectures become rarer, replaced by SSM-attention hybrids

The field debates next-generation architectures (perhaps combining SSMs with newer innovations)

SSMs are taught in machine learning curricula alongside CNNs, Transformers, and other core architectures

This is not guaranteed—technological forecasting is uncertain. But the trajectory from 2022-2024 suggests SSMs are not a fleeting research trend. The efficiency gains are too substantial and the mathematical foundations too solid for the architecture to disappear.

Frequently Asked Questions

Q1: Are state space models actually new?

State space models as a mathematical framework date to the 1960s, used extensively in control systems and signal processing. What's new (2022-2024) is adapting these classical models for deep learning through structured initialization (HiPPO), efficient training algorithms (convolution-based), and selective mechanisms (Mamba). So the underlying math is old, but neural SSMs represent a novel application.

Q2: Can I use existing Transformer models and just swap in SSM layers?

Not directly. While architectures are conceptually similar (stack layers, process sequences), trained Transformer weights don't transfer to SSM layers. You must train SSM models from scratch or fine-tune existing SSM checkpoints. However, hybrid models with both SSM and attention layers exist, where you could potentially mix components more flexibly.

Q3: Do SSMs work for languages other than English?

Yes. SSMs are architecture-level—they don't inherently encode language-specific assumptions. Mamba and S4 models have been trained on multilingual data (including Chinese, Spanish, French, etc.) with success comparable to English. The state-space dynamics are language-agnostic; only the token embeddings and training data determine language support.

Q4: What about positional encoding in SSMs?

SSMs don't use explicit positional encodings like Transformers (no sinusoidal embeddings or RoPE). Position information is implicit in the state evolution—the model knows how many steps have passed because the state has been updated that many times. For some tasks requiring explicit position reasoning, this is a limitation. For most sequence tasks, implicit position suffices.

Q5: Can I run Mamba on my local GPU?

Yes, if you have a decent GPU (8GB+ VRAM for smaller models). Mamba's official GitHub repository provides pre-trained checkpoints (from 130M up to 2.8B parameters) and inference code. You'll need PyTorch and CUDA. Smaller Mamba models (130M-370M parameters) run on consumer GPUs (RTX 3060, etc.). Larger models (7B+) require professional hardware (A100, H100).

Q6: How do I choose state dimension size?

Start with state dimension equal to 1/4 of your model's hidden dimension. For a 768-dimensional hidden layer, use a 192-dimensional state. Increase if tasks require more long-term memory capacity (e.g., 512 or 1024). Monitor training dynamics: if the model struggles to fit long sequences, try increasing state dimension. Larger states cost more compute but provide more representational capacity.

Q7: Do SSMs suffer from vanishing gradients like RNNs?

SSMs can have gradient issues if poorly initialized, but HiPPO-based initialization largely prevents this. The structured state matrices used in S4 (diagonal plus low-rank) and Mamba (diagonal) are parameterized to keep the dynamics stable, which supports healthy gradient flow. Empirically, researchers successfully train SSMs on sequences of 100k+ tokens without gradient problems, something impractical with vanilla RNNs or LSTMs.

Q8: Can SSMs handle bidirectional processing (seeing future context)?

Standard SSM formulations are causal (left-to-right processing). However, you can run two SSMs in parallel, one forward and one backward, then combine their outputs at each position, similar to bidirectional LSTMs. Some papers (e.g., "Bidirectional State Space Models for Sequence Modeling," arXiv 2023) explore this. For pre-training tasks where bidirectionality helps (like BERT-style masked language modeling), bidirectional SSMs work but are less common than causal variants.
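The forward-plus-backward construction can be sketched in a few lines. A minimal version, assuming two independent diagonal SSMs with scalar input and output (the function names are illustrative): the backward model runs over the reversed sequence and its outputs are flipped back, so every position sees both its past (forward output) and its future (backward output).

```python
def causal_scan(a, b, c, u):
    """Diagonal SSM run left to right: x_k = a*x_{k-1} + b*u_k, y_k = c . x_k."""
    x = [0.0] * len(a)
    ys = []
    for uk in u:
        x = [an * xn + bn * uk for an, xn, bn in zip(a, x, b)]
        ys.append(sum(cn * xn for cn, xn in zip(c, x)))
    return ys


def bidirectional_scan(fwd_params, bwd_params, u):
    """Pair each position's forward output with the backward model's output.

    fwd_params and bwd_params are (a, b, c) tuples for two separate SSMs.
    """
    forward = causal_scan(*fwd_params, u)
    backward = causal_scan(*bwd_params, u[::-1])[::-1]  # re-align to original order
    return [(f, bk) for f, bk in zip(forward, backward)]
```

With an impulse at the first position, the forward output decays along the sequence while the backward output is nonzero only at that position, since nothing later in the sequence feeds it.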

Q9: What frameworks/libraries support SSMs?

PyTorch: Mamba's official repo, S4 implementations available on GitHub

JAX: S4 originally developed in JAX; strong ecosystem

Hugging Face: the transformers library includes native Mamba support (for example, MambaForCausalLM), so pre-trained checkpoints can be loaded without custom modeling code

Custom kernels: CUDA kernels for efficient SSM operations available in official repos

As of late 2024, SSM support is less mature than Transformers (no direct support in TensorFlow, for example) but improving rapidly.

Q10: How do I interpret the hidden state in an SSM?

This is an open research question. CNN feature maps can be visualized, and attention weights are somewhat interpretable, but SSM hidden states are high-dimensional continuous vectors without obvious semantic meaning. Probing methods, which train linear classifiers on the states to predict properties of the input, can reveal what information the states encode. Direct interpretation remains challenging.

Q11: Can SSMs do few-shot learning from examples in the prompt?

Yes, but typically less effectively than Transformers. Mamba shows some in-context learning ability (performance improves with more examples in context). As of late 2024, however, it is generally weaker than GPT-style Transformers on challenging few-shot benchmarks. The fixed-size state may limit how much adaptation can occur purely from context, and research aims to close this gap.

Q12: What's the memory footprint during inference for SSMs vs Transformers?

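For sequence length T, model dimension D, and per-channel state size N:

Transformer: O(T × D) memory per layer for the KV cache, which grows linearly with context (standard multi-head attention; grouped-query attention shrinks it)

SSM: O(D × N) memory per layer for the recurrent state, which stays constant regardless of context length

Example: at 100k context with D=1024 in FP16, one layer's KV cache holds 2 × 100,000 × 1,024 values (keys plus values), about 410 MB. A Mamba-style layer with expansion factor 2 and N=16 holds 2,048 × 16 = 32,768 state values, about 64 KiB. That is a 6,250x gap per layer, and it applies to every layer, which enables longer contexts or more concurrent requests on the same hardware.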
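A few lines of Python are enough to check the per-layer arithmetic. The sketch assumes standard multi-head attention (one key vector and one value vector of size D per token) and FP16 storage. On the SSM side, it assumes a Mamba-style state with expansion factor 2 and per-channel state size 16. All function names are illustrative.

```python
def kv_cache_bytes(seq_len: int, d_model: int, bytes_per_value: int = 2) -> int:
    """Per-layer KV cache: one key and one value vector of size d_model per token."""
    return 2 * seq_len * d_model * bytes_per_value


def ssm_state_bytes(d_model: int, d_state: int = 16, expand: int = 2,
                    bytes_per_value: int = 2) -> int:
    """Per-layer recurrent state of a Mamba-style block (convolution buffer ignored)."""
    return expand * d_model * d_state * bytes_per_value


if __name__ == "__main__":
    kv = kv_cache_bytes(100_000, 1024)
    ssm = ssm_state_bytes(1024)
    print(f"KV cache: {kv / 1e6:.1f} MB per layer")    # KV cache: 409.6 MB per layer
    print(f"SSM state: {ssm / 1e3:.1f} kB per layer")  # SSM state: 65.5 kB per layer
    print(f"ratio: {kv / ssm:,.0f}x")                  # ratio: 6,250x
```

Doubling the context doubles the KV figure, while the SSM figure stays the same.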

Q13: Are there pre-trained SSM models I can fine-tune?

Yes, increasingly so. As of December 2024:

The Mamba authors released checkpoints from 130M to 2.8B parameters, trained on 300B tokens of the Pile (the state-spaces organization on Hugging Face)

EleutherAI released experimental SSM-based models

Various research groups shared S4 and Mamba checkpoints for specific domains (medical, genomics)

The ecosystem is smaller than Transformers (no equivalent to GPT-3/4, LLaMA, etc., yet), but growing. Expect more pre-trained options in 2025.

Q14: How sensitive are SSMs to hyperparameter choices?

More sensitive than Transformers during initial setup (proper initialization is critical), but once you have a working configuration, they're fairly robust. Key hyperparameters:

State dimension (most important)

Learning rate for SSM parameters vs. other parameters (S4-style recipes often give the state-space parameters a smaller learning rate and no weight decay)

Discretization step size (learned in modern implementations; you mainly choose its initialization range)

Using established recipes (e.g., Mamba paper's hyperparameters) reduces trial-and-error significantly.
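In modern implementations the step size Δ is learned, so the practical choice is its initialization range. The sketch below follows the scheme I understand Mamba's reference implementation to use. Step sizes are sampled log-uniformly between 0.001 and 0.1 and floored at 1e-4. Each is then stored as a bias that passes through softplus. Treat those defaults and the function names as assumptions, and check them against the code you actually use.

```python
import math
import random


def softplus(x: float) -> float:
    """softplus(x) = log(1 + exp(x)); always positive."""
    return math.log1p(math.exp(x))


def init_dt_bias(n_channels: int, dt_min: float = 1e-3, dt_max: float = 1e-1,
                 floor: float = 1e-4, seed: int = 0) -> list[float]:
    """Sample per-channel step sizes log-uniformly in [dt_min, dt_max] and
    return biases b such that softplus(b) recovers each step size."""
    rng = random.Random(seed)
    biases = []
    for _ in range(n_channels):
        log_dt = rng.random() * (math.log(dt_max) - math.log(dt_min)) + math.log(dt_min)
        dt = max(math.exp(log_dt), floor)
        # Inverse softplus, log(exp(dt) - 1), in a numerically stable form.
        biases.append(dt + math.log(-math.expm1(-dt)))
    return biases


if __name__ == "__main__":
    steps = [softplus(b) for b in init_dt_bias(8)]
    print([round(s, 4) for s in steps])
```

Log-uniform sampling spreads channels across timescales of very roughly 10 to 1,000 steps. The softplus parameterization keeps Δ positive throughout training.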

Q15: Can I combine SSMs with retrieval-augmented generation (RAG)?

Yes. An SSM processes the input context to build its hidden state, then generates outputs. RAG systems retrieve relevant documents and prepend them to the input. This works identically for SSMs and Transformers, and the SSM then processes the long retrieved context plus query efficiently. SSMs' ability to handle very long contexts suits RAG applications where retrieved documents are lengthy. Their weaker exact recall of specific passages is still worth testing for your use case.

Q16: Do SSMs work for generative tasks (not just classification)?

Absolutely. Mamba was primarily evaluated on language modeling, a generative task. SSMs function as autoregressive generators: given the context, predict the next token, sample it, append it to the context, and repeat. The loop is identical to Transformer generation. The difference is internal: an SSM carries only a fixed-size state from step to step instead of attending over a growing cache, so each new token costs the same regardless of context length. SSMs generate text, code, music, and more, competitively with Transformers.
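The toy example below illustrates why SSM generation costs the same per token regardless of context length. It uses a scalar SSM, and `next_token` is a hypothetical stand-in for the output head plus greedy decoding. The stateful `generate` touches only the carried state at each step, while `generate_naive` re-scans the whole sequence every time. Both produce identical tokens, but only the first avoids the O(T) per-token cost.

```python
def ssm_step(h: float, x: float, a_bar: float = 0.9, b_bar: float = 0.1,
             c: float = 1.0) -> tuple[float, float]:
    """One recurrent update of a scalar linear SSM; returns (new_state, output)."""
    h = a_bar * h + b_bar * x
    return h, c * h


def next_token(y: float, vocab_size: int = 5) -> int:
    """Hypothetical stand-in for an output head followed by greedy decoding."""
    return int(abs(y) * 100) % vocab_size


def generate(prompt: list[int], n_new: int) -> list[int]:
    """Stateful generation: each new token only updates the carried state h."""
    tokens = list(prompt)
    h, y = 0.0, 0.0
    for t in tokens:                 # consume the prompt, building the state
        h, y = ssm_step(h, float(t))
    for _ in range(n_new):           # each step is O(1) in the context length
        tok = next_token(y)
        tokens.append(tok)
        h, y = ssm_step(h, float(tok))
    return tokens


def generate_naive(prompt: list[int], n_new: int) -> list[int]:
    """Reference version: re-scan the full sequence before every new token."""
    tokens = list(prompt)
    for _ in range(n_new):
        h, y = 0.0, 0.0
        for t in tokens:
            h, y = ssm_step(h, float(t))
        tokens.append(next_token(y))
    return tokens


if __name__ == "__main__":
    print(generate([1, 2, 3], 10))
```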

Q17: What about training data requirements—do SSMs need more or less than Transformers?

Roughly similar. The Mamba paper reported performance comparable to Transformers trained on the same data at the same scale. SSMs don't inherently require more data, though optimal data mixes and training curricula may differ. Some evidence suggests SSMs benefit more from diverse sequence lengths during training, but this needs further study.

Q18: Can SSMs be used for reinforcement learning?

Yes. SSMs can serve as the sequence-modeling backbone for RL agents that must process histories of observations and actions. Research here is early but promising: efficiently processing very long episode histories (thousands of time steps) could benefit long-horizon RL tasks. For example, Lu et al. ("Structured State Space Models for In-Context Reinforcement Learning," NeurIPS 2023) applied S5-based models in this setting.

Q19: How do SSMs handle out-of-distribution sequence lengths?

Often better than attention-based models, though results vary by task. If trained on sequences up to length T_train, SSMs frequently extrapolate to 2×T_train or more without catastrophic failure, and performance degrades gradually rather than sharply. By contrast, some Transformer variants fail suddenly once inputs exceed the training length, because their positional embeddings do not generalize. However, performance at 10x the training length may be poor; models still work best near their training distribution.

Q20: Are there any major companies publicly using SSMs in production?

As of late 2024, public disclosures are limited. Most production large language models (Google Gemini, ChatGPT, Claude, etc.) use Transformer or Transformer-heavy architectures. However:

Google Research published work applying SSMs to speech (likely internal experimentation)

Several fintech companies quietly use S4-based models for time series forecasting (mentioned in industry talks but not formally disclosed)

Academic medical institutions deployed SSM-based monitoring systems (Stanford case study noted earlier)

Expect more public deployments as models mature through 2025.

Key Takeaways

State space models transform sequential data processing by maintaining compact hidden states that evolve over time, enabling efficient handling of extremely long sequences—from thousands to millions of tokens.

Linear computational complexity (O(T) instead of O(T²)) lets SSMs process much longer contexts than Transformers on the same hardware, unlocking applications that were previously impractical.

Modern neural SSMs (S4, Mamba) marry 60-year-old control theory with deep learning. They use principled initialization (HiPPO) to achieve training stability and long-range performance that earlier RNN architectures never reached.

Reported applications show SSMs working in medical monitoring (Stanford's ICU system), speech recognition (Google's long-form audio experiments), and genomics (Princeton's regulatory element prediction), with efficiency gains of 3-10x over Transformer baselines.

Mamba's selective mechanism bridges the gap toward Transformer-level quality by dynamically adjusting information flow based on input content, achieving near-parity on language modeling while maintaining linear scaling advantages.

Trade-offs matter: SSMs excel at long contexts and efficient inference but currently trail Transformers on in-context learning and random access recall—understanding these strengths determines optimal use cases.

The field moves fast: hybrid architectures that combine SSMs and attention may well become dominant by 2026. They use SSMs for efficient long-range processing and attention for flexible information retrieval.

Proper implementation requires attention to details: HiPPO initialization, careful learning rate tuning, and adequate state dimensionality make the difference between models that work and models that fail.

This isn't a distant future technology—pre-trained Mamba models are available now, libraries support SSM layers, and practitioners can experiment on standard hardware with 8-16GB GPUs.

The mathematical foundations are well established, which reduces risk compared to purely empirical architectures. When SSMs underperform, the cause is often implementation or hyperparameters, though the in-context learning and recall limits noted above are genuine architectural trade-offs.

Actionable Next Steps

Explore existing implementations: Visit the official Mamba GitHub repository (https://github.com/state-spaces/mamba) to see code, pre-trained models, and documentation. Clone the repo and run inference with a small model locally to understand the API.

Assess your sequence length needs: Analyze your typical use case. If processing sequences shorter than 4k tokens, Transformers likely remain optimal. If dealing with 10k+ token contexts (long documents, hour-long audio, genomic data), prioritize SSM evaluation.

Try pre-trained models: Download the state-spaces Mamba-2.8B checkpoint from Hugging Face and test it on your domain's data. Compare inference speed, memory usage, and quality against an equivalently sized Transformer baseline.

Read foundational papers: Start with the Mamba paper (arXiv:2312.00752) for an accessible introduction, then S4 (ICLR 2022) for theoretical depth. Together they cover the core design principles of modern SSMs.

Monitor the research: Follow the arXiv categories cs.LG and cs.CL, where new SSM papers appear frequently. Key researchers and groups: Albert Gu (CMU) and Tri Dao (Princeton), the Mamba authors; Stanford's Hazy Research lab, where S4 originated; Google DeepMind; and Meta AI.

Join community discussions: Engage with SSM development on GitHub issues, Hugging Face forums, and relevant Discord/Slack channels (e.g., EleutherAI). The community is responsive and helps troubleshoot implementation questions.

Experiment with hybrid architectures: If you have an existing Transformer model, try replacing some layers with SSM layers. Then retrain or distill, since the new layers start untrained. Start with 25-50% SSM layers in the lower levels (closer to the input), keeping attention in the upper layers.

Benchmark systematically: Design experiments comparing SSMs and Transformers on your specific task with controlled variables (same data, same training compute, same parameter count). Measure accuracy, throughput, latency, and memory separately.

Contribute to the ecosystem: If you develop improvements (new initialization schemes, better training recipes, domain-specific adaptations), share via papers or open-source releases. The field benefits from collective progress.

Plan for scaling: If SSMs prove valuable at small scale, outline a path to larger deployments. Consider infrastructure changes needed (custom CUDA kernels, distributed training support) and start prototyping early.
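For the "Benchmark systematically" step, a small timing harness keeps latency and throughput as separate numbers. This is a generic sketch, not tied to any SSM library: `fn` is any callable you supply. For GPU code, `fn` must synchronize before returning (e.g., `torch.cuda.synchronize()` in PyTorch), because kernel launches are asynchronous. Measure accuracy and peak memory (for example via `torch.cuda.max_memory_allocated()`) in separate runs.

```python
import statistics
import time
from typing import Callable, Sequence


def benchmark(fn: Callable[[Sequence[int]], object], batch: Sequence[int],
              warmup: int = 3, repeats: int = 10) -> dict:
    """Time fn(batch), reporting latency and throughput as separate numbers."""
    for _ in range(warmup):          # exclude one-off costs (compilation, caches)
        fn(batch)
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(batch)
        timings.append(time.perf_counter() - start)
    median = statistics.median(timings)
    return {
        "median_latency_s": median,
        "max_latency_s": max(timings),
        "throughput_items_per_s": len(batch) / median if median > 0 else float("inf"),
    }


if __name__ == "__main__":
    # Hypothetical stand-in workload; replace with your SSM and Transformer calls.
    print(benchmark(lambda b: sum(x * x for x in b), list(range(10_000))))
```

Run the same harness on both models with identical inputs, batch sizes, and hardware, so the only variable is the architecture.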

Glossary

State Space Model (SSM): A mathematical framework representing how a system's hidden internal state evolves over time and produces observable outputs, characterized by state and observation equations.

Hidden State: An internal representation of a system at a given time point that isn't directly observable but can be inferred from measurements. In neural SSMs, it is typically a small state per channel (e.g., 16 values in Mamba) replicated across many channels.

HiPPO (High-Order Polynomial Projection Operators): A mathematical framework that derives state matrices which compress the input history by projecting it onto a polynomial basis. S4 uses it for initialization, so the state preserves as much information about past inputs as possible.

S4 (Structured State Spaces for Sequence Modeling): A breakthrough neural SSM architecture (2022) using structured matrices and efficient training algorithms to enable practical deep learning with state spaces.

Mamba: An advanced neural SSM architecture (2023) incorporating selective mechanisms that allow dynamic adjustment of state updates based on input content.

Selective Mechanism: A component in Mamba that makes state-space parameters (the step size Δ and the B and C matrices) input-dependent, enabling content-aware information filtering.

Discretization: Converting continuous-time state-space dynamics (differential equations) into discrete-time updates (difference equations) suitable for processing digital data like text or audio.

Kalman Filter: A classical algorithm (1960) for optimal state estimation from noisy observations, and one of the earliest and most influential practical applications of state-space principles.

Context Length: The number of tokens (words, audio frames, etc.) a model can process in a single forward pass; SSMs can handle much longer contexts than Transformers at the same memory budget.

KV Cache: In Transformers, stored key and value vectors from past tokens used to avoid recomputing attention; grows linearly with sequence length, causing memory issues.

Autoregressive Generation: Producing output one token at a time, where each token depends on all previously generated tokens; used in language models like GPT and Mamba.

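Convolution: A mathematical operation combining two functions. In time-invariant SSMs such as S4, reformulating state updates as a convolution enables efficient parallel training. Mamba's input-dependent parameters rule this out, so it uses a parallel scan instead.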

FFT (Fast Fourier Transform): An algorithm for efficiently computing frequency-domain representations; used in S4 to accelerate convolution operations.

Linear Complexity: Computational cost that grows proportionally with input size (O(T) for sequence length T), as opposed to quadratic (O(T²)) or worse.

Transformer: A neural architecture (2017) using self-attention mechanisms to process sequences; currently dominant but faces quadratic scaling issues.

In-Context Learning: A model's ability to adapt behavior based on examples provided in the input prompt, without updating weights; strong in Transformers, developing in SSMs.